Choosing the right Kubernetes distribution is crucial for any organization’s infrastructure strategy. Whether you’re deploying containerized applications at the edge, managing resource-constrained environments or scaling enterprise workloads, understanding the differences between K3s and standard Kubernetes (K8s) will help you make informed decisions that align with your technical requirements and business objectives.

Choosing between K3s and K8s is one of the most important decisions in modern container orchestration. While both provide strong Kubernetes capabilities, they serve different use cases and operational needs. This comparison examines the key differences to help you determine which distribution best fits your deployment scenarios.

What is Kubernetes (K8s)?


Kubernetes is an open source container orchestration platform originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF). It automates the deployment, scaling and management of containerized applications across clusters of machines. The abbreviation “K8s” substitutes the eight letters between ‘K’ and ‘s’ with the number 8, creating a shorthand commonly used in technical documentation and industry discussions.
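The abbreviation rule described above can be sketched in a few lines of Python. The function name `numeronym` is chosen here for illustration only and does not come from any Kubernetes tooling.

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as first letter + number of inner letters + last letter."""
    if len(word) <= 3:
        # Nothing is gained by abbreviating very short words, so return them unchanged.
        return word
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("Kubernetes"))  # K8s
```

“Kubernetes” has ten letters, so the eight letters between the first and last collapse into the digit 8.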

Standard Kubernetes provides comprehensive container orchestration capabilities, including automated scheduling, service discovery, load balancing, storage orchestration and self-healing mechanisms. These features make it the go-to choice for enterprise-scale deployments where you need full control over complex, distributed applications. Understanding how Kubernetes works requires looking into its distributed architecture and component interactions in detail.

Kubernetes excels in environments that need extensive customization, compliance with enterprise security policies and integration with diverse cloud and on-premises infrastructure. Its rich ecosystem includes thousands of extensions, operators and third-party integrations that support virtually any enterprise use case. The Kubernetes benefits for cloud computing extend beyond basic orchestration to include cost optimization, better resource utilization and faster deployments.

What is K3s?

K3s is a lightweight, certified Kubernetes distribution designed specifically for production workloads in unattended, resource-constrained, remote locations or IoT appliances. Originally developed by Rancher Labs, K3s maintains full Kubernetes API compliance while significantly reducing resource requirements and operational complexity.

K3s packages everything needed for a production Kubernetes cluster into a single binary of less than 70MB. This includes the Kubernetes components, containerd runtime, Flannel CNI, CoreDNS and Traefik ingress controller. The streamlined architecture eliminates many dependencies and configuration steps required by standard Kubernetes installations. This efficiency has made K3s the most downloaded Kubernetes distribution, with millions of installations across diverse environments from IoT devices to enterprise edge deployments.

K3s removes outdated features and consolidates components to achieve its lightweight footprint without sacrificing core functionality. K3s supports both ARM64 and ARMv7 architectures, making it ideal for edge computing scenarios where standard Kubernetes would be too resource-intensive.

K3s and K8s: Detailed comparison

Understanding the specific differences between K3s and K8s across key technical dimensions can help you evaluate which distribution aligns with your needs.

Architecture and components

Standard Kubernetes follows a traditional master-worker architecture with separate components for the API server, scheduler, controller manager and etcd. This modular design provides flexibility but increases complexity and resource overhead.

K3s simplifies this architecture into two node roles: server and agent. The K3s server runs all control plane components in a single process while exposing the same Kubernetes APIs. This consolidation reduces the attack surface and simplifies troubleshooting without compromising functionality.

The component differences extend to networking and storage. Standard Kubernetes requires you to choose and configure CNI plugins, while K3s includes Flannel by default. Similarly, K3s bundles Traefik as the default ingress controller, whereas standard Kubernetes leaves ingress controller selection to operators.
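The bundled defaults can be switched off if you prefer your own CNI plugin or ingress controller. As a rough sketch, the Python helper below renders a K3s configuration file that opts out of Traefik and Flannel. It assumes the `disable` and `flannel-backend` keys of the K3s config file (commonly placed at `/etc/rancher/k3s/config.yaml`); the helper name `render_k3s_config` is hypothetical, and the exact keys should be checked against the K3s documentation for your version.

```python
def render_k3s_config(disable_traefik: bool = False, disable_flannel: bool = False) -> str:
    """Render a minimal K3s config file as YAML text (assumed keys, see lead-in)."""
    lines = []
    if disable_traefik:
        # Assumed key: a list of packaged components that K3s should not deploy.
        lines += ["disable:", "  - traefik"]
    if disable_flannel:
        # Assumed key: "none" turns off the bundled Flannel so another CNI can be installed.
        lines.append('flannel-backend: "none"')
    if not lines:
        # Keeping every default needs no configuration at all.
        return ""
    return "\n".join(lines) + "\n"


print(render_k3s_config(disable_traefik=True, disable_flannel=True), end="")
```

With both defaults left on, the function returns an empty string, which mirrors the point that a default K3s cluster needs no networking or ingress configuration.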

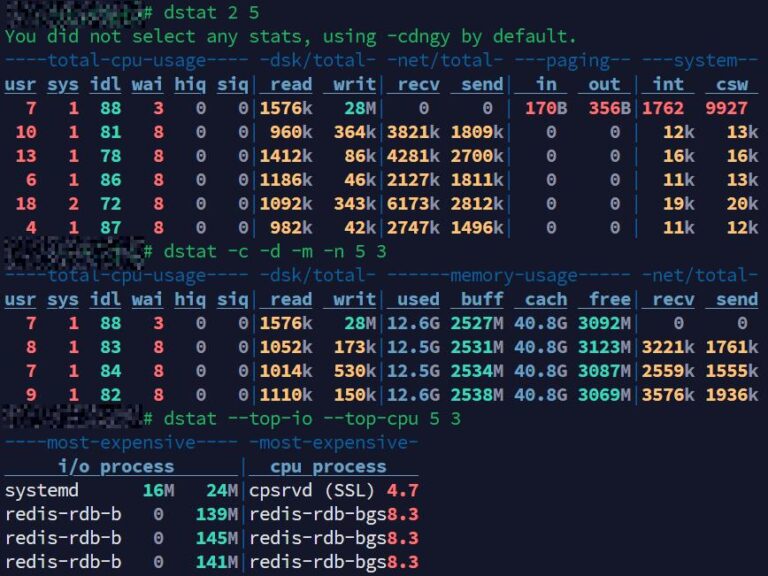

Resource footprint

Resource consumption is one of the most significant differences between these two distributions. Standard Kubernetes typically requires at least 4GB RAM and 2 CPU cores for a basic cluster, with additional overhead for each component.

K3s significantly reduces these requirements, running effectively on devices with as little as 512MB RAM and a single CPU core. This efficiency makes K3s a good choice for edge deployments, IoT devices and development environments where resources are limited.
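To make the sizing difference concrete, here is a small Python sketch that checks which distribution fits a given machine. The thresholds are simply the figures quoted in this section, not official sizing guidance, and the names `MINIMUMS` and `distributions_that_fit` are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Minimum:
    ram_mb: int
    cpu_cores: int


# Figures quoted in this article; real requirements vary with workload and version.
MINIMUMS = {
    "k8s": Minimum(ram_mb=4096, cpu_cores=2),
    "k3s": Minimum(ram_mb=512, cpu_cores=1),
}


def distributions_that_fit(ram_mb: int, cpu_cores: int) -> list[str]:
    """Return the distributions whose quoted minimums this machine meets."""
    return sorted(
        name
        for name, minimum in MINIMUMS.items()
        if ram_mb >= minimum.ram_mb and cpu_cores >= minimum.cpu_cores
    )


print(distributions_that_fit(ram_mb=1024, cpu_cores=4))  # ['k3s']
print(distributions_that_fit(ram_mb=8192, cpu_cores=4))  # ['k3s', 'k8s']
```

A board with 1GB of RAM clears the K3s threshold but not the standard Kubernetes one, which is the situation many edge devices are in.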

The memory footprint difference stems from K3s’s streamlined components and removal of deprecated features. While standard Kubernetes maintains backward compatibility with legacy APIs, K3s focuses on current, stable functionality to minimize resource usage.

Installation and management

Installing standard Kubernetes involves multiple steps, including configuring container runtimes, networking plugins, storage drivers and security policies. Tools like kubeadm simplify this process but still require significant expertise and planning.

K3s installation reduces complexity to a single command that downloads and configures everything needed for a working cluster. The automated setup includes TLS certificates, networking configuration and basic security policies, allowing you to have a production-ready cluster in minutes.
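As an illustration of the single-command install, the Python sketch below assembles the shell command rather than running it. It assumes the install script published at `https://get.k3s.io` and the `K3S_URL` and `K3S_TOKEN` environment variables used to join an agent to an existing server; the helper name, hostname and token are placeholders, and you should review the script before piping it into a shell.

```python
import shlex
from typing import Optional

INSTALL_SCRIPT_URL = "https://get.k3s.io"


def install_command(server_url: Optional[str] = None, token: Optional[str] = None) -> str:
    """Build the shell command that installs a K3s server, or an agent if server_url is given."""
    env = {}
    if server_url is not None:
        if token is None:
            raise ValueError("joining an existing server requires a token")
        # With these variables set, the script installs an agent instead of a server.
        env["K3S_URL"] = server_url
        env["K3S_TOKEN"] = token
    prefix = " ".join(f"{key}={shlex.quote(value)}" for key, value in env.items())
    shell = f"{prefix} sh -" if prefix else "sh -"
    return f"curl -sfL {INSTALL_SCRIPT_URL} | {shell}"


print(install_command())
print(install_command("https://server.example:6443", "example-token"))
```

The first command sets up a server node with the bundled defaults; the second joins an agent to it.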

Management overhead also differs significantly. Standard Kubernetes requires ongoing maintenance of multiple components, security updates across different packages and coordination between various subsystems. K3s consolidates these concerns into a single binary that updates atomically.

Storage and database

Standard Kubernetes uses etcd as its backing store for cluster state. While etcd provides excellent consistency and performance, it requires careful configuration and maintenance, especially in multi-master scenarios.

K3s offers flexibility in backend storage. It supports SQLite for single-node deployments, as well as etcd and external databases like PostgreSQL and MySQL for high-availability configurations. This flexibility allows you to choose a storage backend that matches your operational needs.

The SQLite option particularly benefits edge deployments where you need persistence without the complexity of distributed storage systems. For scenarios requiring high availability, K3s supports external database clustering while maintaining simple operations.
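The backend choice typically comes down to which flags the K3s server is started with. The sketch below maps each option to server arguments, assuming the `--cluster-init` flag for embedded etcd and the `--datastore-endpoint` flag for external databases; the function name, backend labels and connection string are illustrative, so verify the flags against the K3s datastore documentation for your version.

```python
from typing import Optional


def datastore_args(backend: str, endpoint: Optional[str] = None) -> list[str]:
    """Return the extra `k3s server` arguments for a given datastore backend."""
    if backend == "sqlite":
        # SQLite is the single-node default, so no extra flag is needed.
        return []
    if backend == "embedded-etcd":
        # Assumed flag: initializes a new cluster backed by embedded etcd.
        return ["--cluster-init"]
    if backend == "external":
        if not endpoint:
            raise ValueError("an external datastore needs a connection endpoint")
        # Assumed flag: points K3s at an external PostgreSQL, MySQL or etcd datastore.
        return [f"--datastore-endpoint={endpoint}"]
    raise ValueError(f"unknown backend: {backend}")


print(datastore_args("sqlite"))
print(datastore_args("external", "postgres://user:password@db.example:5432/k3s"))
```

Keeping SQLite as the no-flag default reflects why it suits edge nodes: persistence works without any datastore setup.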

Security

Both distributions implement Kubernetes security features, including role-based access control (RBAC), network policies and pod security standards. However, their default security postures differ based on their intended use cases.

Standard Kubernetes provides maximum flexibility in security configuration, allowing you to implement enterprise-grade security policies with extensive customization options. This flexibility requires deep security expertise to configure properly.

K3s implements secure defaults designed to work out of the box while maintaining security best practices. It automatically generates TLS certificates, enables RBAC and implements reasonable default network policies. This approach reduces security misconfigurations common in complex Kubernetes deployments.

Extensibility and ecosystem

The Kubernetes ecosystem includes thousands of operators, Helm charts and third-party tools designed for standard Kubernetes deployments. This extensive ecosystem supports virtually any enterprise requirement but can overwhelm teams that have to choose appropriate solutions.

K3s maintains full Kubernetes API compatibility, meaning most ecosystem tools work without modification. However, the lightweight nature of K3s makes it particularly well-suited for essential tools rather than comprehensive enterprise suites.
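One practical consequence of API compatibility is that the same manifest can be applied to either distribution. The Python sketch below builds a standard `apps/v1` Deployment as JSON, which `kubectl apply -f -` accepts; the application name and image tag are placeholders picked for the example.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 1) -> dict:
    """Build a minimal apps/v1 Deployment that is not specific to any distribution."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


print(json.dumps(deployment_manifest("web", "nginx:1.27", replicas=2), indent=2))
```

Nothing in the manifest refers to K3s or to a particular Kubernetes installation, so piping the output to `kubectl apply -f -` should work against either kind of cluster.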

The extensibility difference primarily impacts teams that need extensive customization or specialized operators. Standard Kubernetes provides maximum flexibility for complex enterprise requirements, while K3s works well when you need core functionality with minimal operational overhead.

Here is a summary of the differences discussed above:

| Comparison Factor | K3s | K8s |
| --- | --- | --- |
| Architecture & components | Single binary with consolidated control plane components; includes Flannel CNI and Traefik ingress by default; server and agent node architecture | Modular architecture with separate API server, scheduler, controller manager, and etcd; requires separate configuration of CNI plugins and ingress controllers |
| Resource footprint | Runs on minimal hardware suitable for edge devices and IoT appliances; <70MB binary size; optimized for resource-constrained environments | Higher minimum resource requirements for production clusters; larger memory footprint due to multiple components and backward compatibility |
| Installation & management | Single command installation with automated TLS, networking, and security setup; atomic updates via a single binary | Multi-step installation requiring container runtime, networking, and storage configuration; requires ongoing maintenance of multiple components |
| Storage & database | Flexible backend options: SQLite (single-node), etcd, or external databases (PostgreSQL, MySQL); simplified storage configuration | Uses etcd as a backing store; requires careful etcd configuration and maintenance, especially for multi-master setups |
| Security | Secure defaults with automatic TLS certificate generation, enabled RBAC, and reasonable network policies out of the box | Maximum security flexibility requiring deep expertise to configure properly; extensive customization options for enterprise security policies |
| Extensibility & ecosystem | Full Kubernetes API compatibility; works with most ecosystem tools; optimized for essential functionality rather than comprehensive enterprise suites | Extensive ecosystem with thousands of operators, Helm charts, and third-party tools; maximum flexibility for complex enterprise requirements |

When to use K3s

K3s works well in scenarios where resource efficiency, operational simplicity and quick deployment are more important than maximum flexibility and extensive customization options.

Edge and IoT deployments

Edge computing environments often involve resource-constrained hardware, intermittent connectivity and limited operational support. K3s addresses these challenges by running efficiently on minimal hardware while maintaining full Kubernetes functionality.

IoT deployments benefit from K3s’s small footprint and automated operations. You can deploy K3s on devices like Raspberry Pi or industrial edge computers, where standard Kubernetes would consume too many resources. The single-binary architecture also simplifies updates in distributed edge environments.

Manufacturing facilities, retail locations and remote monitoring stations are typical edge use cases where K3s provides enterprise-grade container orchestration without the complexity and resource overhead of standard Kubernetes.

Cost-effective deployments

Development and testing environments often don’t require the full capabilities of standard Kubernetes but benefit from API compatibility. K3s provides identical APIs with significantly reduced resource consumption, lowering infrastructure costs for non-production workloads.

Small to medium-sized organizations can use K3s for production workloads when they need Kubernetes capabilities without enterprise-scale complexity. The reduced operational overhead translates to lower total cost of ownership while maintaining production reliability.

K3s also enables cost-effective multi-environment strategies where you run K3s for development and testing while using standard Kubernetes for production. This approach provides consistency across environments while optimizing costs. Organizations evaluating K3s for production workloads can explore comprehensive deployment options and support through SUSE’s K3s enterprise solutions.

CI/CD and developer environments

Continuous integration pipelines benefit from K3s’s fast startup and minimal resource requirements. You can spin up K3s clusters quickly for testing, run workloads and tear down environments without the overhead associated with standard Kubernetes clusters.

Local developer environments represent another strong K3s use case. Developers can run K3s locally for testing containerized applications without consuming excessive laptop resources. The identical APIs ensure applications behave consistently between development and production environments.

Container-native development workflows particularly benefit from K3s when developers need to test Kubernetes-specific features like ingress, service discovery or storage without setting up complex local Kubernetes installations.

When to use K8s

Standard Kubernetes works well for scenarios that require extensive customization, complex enterprise integrations and maximum operational flexibility.

Large-scale enterprise deployments

Organizations running complex, multi-tenant applications could benefit from the comprehensive feature set and extensive configuration options of standard Kubernetes. Enterprise environments often require specific compliance frameworks, custom security policies and integration with legacy systems that demand the full flexibility of standard Kubernetes.

Large deployments spanning multiple data centers or cloud regions can leverage standard Kubernetes’ mature ecosystem of operators and management tools. The modular architecture allows teams to customize each component according to specific organizational requirements and operational practices.

Complex application architectures

Applications that require sophisticated networking policies, custom storage configurations or specialized workload types often need the comprehensive capabilities that standard Kubernetes provides. Microservices architectures with complex inter-service communication patterns benefit from the extensive service mesh integrations and advanced networking features available in the standard Kubernetes ecosystem.

Standard Kubernetes supports specialized workloads like batch processing, machine learning pipelines and stateful applications that may require custom resource definitions and operators not optimized for lightweight distributions.

Regulatory and compliance requirements

Industries with strict compliance requirements, such as financial services or healthcare, often need the extensive audit capabilities, granular access controls and comprehensive security frameworks that standard Kubernetes provides. The ability to implement custom admission controllers, security policies and compliance monitoring tools makes standard Kubernetes suitable for highly regulated environments.

Organizations that require specific certifications or compliance with frameworks like SOC 2, HIPAA or PCI DSS can leverage the mature security ecosystem and extensive configuration options available with standard Kubernetes deployments.

Lightweight Kubernetes trends

The containerized application industry increasingly emphasizes efficiency, security and operational simplicity. This shift is driving adoption of lightweight Kubernetes distributions in fields that prioritize edge computing and cost optimization.

Organizations are recognizing that maximum flexibility often comes with unnecessary complexity for many use cases. Lightweight distributions like K3s provide production-grade capabilities while eliminating features that add operational burden without delivering proportional value.

Edge computing growth particularly drives lightweight Kubernetes adoption. As more workloads move closer to data sources and users, the ability to run Kubernetes efficiently on diverse hardware becomes increasingly valuable. K3s enables consistent orchestration from cloud data centers to edge devices.

The rise of GitOps and declarative infrastructure management also favors lightweight distributions. K3s integrates seamlessly with GitOps workflows while reducing the configuration complexity that often complicates standard Kubernetes deployments.

Security-focused organizations appreciate K3s’s reduced attack surface compared to standard Kubernetes. Fewer components mean fewer potential vulnerabilities and simpler security auditing processes.

Scale Kubernetes at the edge with SUSE

SUSE Rancher Prime offers enterprise-grade support and management capabilities for K3s deployments, allowing you to scale lightweight Kubernetes across thousands of edge locations with confidence.

Rancher Prime addresses the operational challenges of managing distributed K3s clusters through centralized visibility, GitOps-driven continuous delivery and comprehensive lifecycle management. You can deploy, update and monitor K3s clusters from a single management plane while maintaining local autonomy at edge locations.

The platform provides up to five years of enterprise support for K3s, ensuring long-term stability for production deployments. This extended support timeline enables strategic planning for edge infrastructure without concerns about distribution lifecycle management.

SUSE’s enterprise support includes security updates, bug fixes and compatibility testing that ensure your K3s deployments remain secure and stable. The support extends beyond K3s itself to include guidance on architectural decisions, performance optimization and integration with enterprise systems.

Organizations using K3s with SUSE Rancher Prime can manage thousands of edge-based clusters using GitOps-driven continuous delivery, ensuring consistent deployments and efficient operations across distributed infrastructure.

Learn more about enterprise support for K3s with SUSE Rancher Prime.

FAQs about K3s and K8s

What is K3s?

K3s is a lightweight, certified Kubernetes distribution designed for production workloads in resource-constrained environments. It packages a complete Kubernetes cluster into a single binary under 70MB while maintaining full API compatibility with standard Kubernetes. K3s removes outdated features and consolidates components to achieve maximum efficiency without sacrificing core functionality.

What is the difference between K3s and K8s?

The primary differences between K3s and K8s lie in resource requirements, installation complexity and architectural design. K3s requires significantly fewer resources, installs with a single command and consolidates components for simplified operations, while standard Kubernetes provides maximum flexibility and extensive customization options at the cost of increased complexity. K3s uses SQLite as the default datastore instead of etcd, includes Flannel CNI and Traefik ingress controller by default, and combines all control plane components into a single process.

When should I use K3s?

Choose K3s for edge computing deployments, IoT applications, development environments, CI/CD pipelines and scenarios where resource efficiency and operational simplicity outweigh the need for extensive customization. K3s excels in environments with limited resources or operational support. Specific use cases include manufacturing facilities, retail locations, remote monitoring stations, local developer environments and cost-effective testing scenarios where you need Kubernetes functionality without enterprise-scale complexity.

When should I use K8s?

Choose standard Kubernetes for large-scale enterprise deployments, complex application architectures and strict regulatory or compliance requirements, where extensive customization matters more than a small footprint. K3s trades some flexibility for simplicity and efficiency. It may not support all specialized enterprise features available in standard Kubernetes distributions, and some third-party tools designed for specific Kubernetes configurations might require adaptation. The streamlined architecture means fewer customization options for networking and storage backends. However, the core Kubernetes functionality remains identical, and most ecosystem tools work without modification due to full API compatibility.

Is K3s production-ready?

Yes, K3s is designed for production workloads and is used by organizations worldwide for critical applications. It maintains the same security and reliability standards as standard Kubernetes while providing a more streamlined operational experience. With SUSE Rancher Prime, you can get up to five years of enterprise support for production K3s deployments.

Can K3s replace full Kubernetes?

K3s can replace standard Kubernetes for many use cases, particularly those prioritizing efficiency over maximum flexibility. The decision depends on your specific needs for customization, ecosystem integration and operational complexity. Both distributions provide the same core Kubernetes APIs and functionality. K3s works best when you need reliable container orchestration without complex enterprise requirements, while standard Kubernetes remains optimal for scenarios that need extensive customization, compliance with complex enterprise policies or integration with specialized enterprise tools.
