vMotion (the magical ability to manually or automatically teleport a running, Production VM from one ESXi Host (or DataCenter) to another, ideally without interrupting operations and without the Guest OS, its Applications and Processes, or even the end-user being aware that this is happening) is arguably one of the Top-5 innovative features in Virtualization and Cloud Computing since, well… the invention of sliced bread.

vMotion was first introduced in ESX Server 2.0 to great reviews and excitement. In its debut, vMotion was more experimental than anything else. It wasn't targeted at (nor recommended for) large, busy and critical Workloads. As Virtualization became mainstream, though, and Application Owners' resistance dissipated, more and more important Workloads previously considered "Too Big to Virtualize" began to make their way onto the virtual arena. It is fair to say that (among other things) the appeal of flexibility, scalability, elasticity and ease of operation and administration was largely responsible for this massive embrace and adoption.

As more workloads migrate to the virtual platform, and as virtualization eventually replaces physical hardware as the preferred platform for modern Mission Critical Applications, business and application owners (and the applications themselves) place new demands and constraints on the features the virtualization platforms of the day can offer. What was once a "Nice to Have" fling rapidly becomes a Must-Have core component. Continually improving the platform and its features to accommodate more modern and demanding workloads became an imperative.

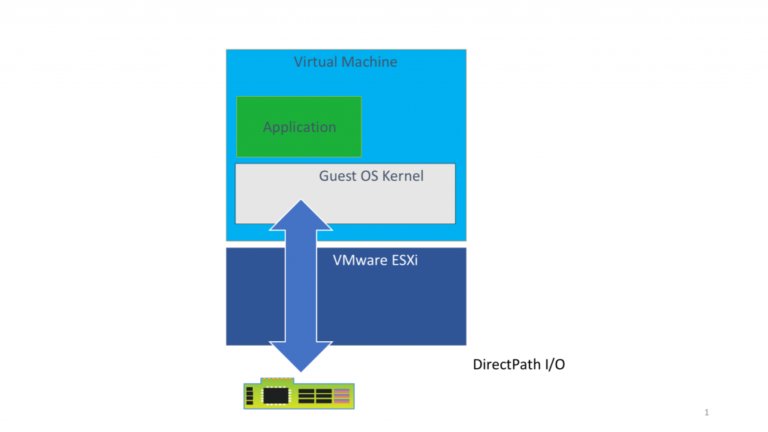

At a very high level, vMotion is the feature that lets you move a VM (while it's up and running, servicing client requests or simply doing its job) from one ESXi Host to another, for any reason. It does this by copying the memory and state of the VM incrementally from its existing Host to the Target Host. The copy operation is iterative, to account for changes happening inside the Guest OS while the copy is in progress (dirtied memory pages and such). When what's left is small enough for ESXi to copy in one go, ESXi briefly pauses ("stuns") the VM, copies the remainder and switches the VM over from its original Host to the Target Host.
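The iterative copy described above can be illustrated with a simplified pre-copy model. This is a sketch under stated assumptions, not VMware's implementation: it assumes a constant page-dirtying rate, a fixed link speed, no compression or other optimizations, and a hypothetical 0.5 GB threshold (`switchover_gb`) below which the remaining pages are sent in one final pass while the VM is paused. The function name `simulate_precopy` is made up for illustration.

```python
from typing import Optional, Tuple


def simulate_precopy(mem_gb: float,
                     dirty_gb_per_s: float,
                     link_gbit_per_s: float,
                     switchover_gb: float = 0.5,
                     max_passes: int = 20) -> Optional[Tuple[int, float, float]]:
    """Simplified, hypothetical model of iterative (pre-copy) live migration.

    Returns (pre-copy passes, total seconds, final pause seconds), or None if
    the remaining dirty memory never shrinks below switchover_gb.
    """
    link_gb_per_s = link_gbit_per_s / 8.0  # link speed is in bits, memory in bytes
    remaining = mem_gb                     # the first pass copies all guest memory
    elapsed = 0.0
    for passes in range(1, max_passes + 1):
        duration = remaining / link_gb_per_s
        elapsed += duration
        # Pages the guest dirtied while this pass was in flight must be re-sent.
        remaining = min(mem_gb, dirty_gb_per_s * duration)
        if remaining <= switchover_gb:
            # Small enough: pause the VM and send the rest in one final pass.
            pause = remaining / link_gb_per_s
            return passes, elapsed + pause, pause
    return None  # the guest dirties memory faster than the link can drain it


if __name__ == "__main__":
    for link in (1, 10, 25):
        print(f"{link:>3} Gb/s:", simulate_precopy(192, 0.05, link))
```

With an arbitrary illustrative dirty rate of 0.05 GB/s, this model needs 7 passes over a 1 Gb/s link but only 2 over 25 Gb/s, and the final pause drops from about 2.5 s to about 16 ms. If the dirty rate exceeds the link's throughput, the copy never converges.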

In a perfect world, where all the Stars are happily aligned, this process completes without a hiccup and everyone goes home happy. In the real world, several things can interfere with it and either slow the process down (triggering unintended domino falls) or prevent it from completing, resulting in a roll-back. Over the years, VMware has invested considerable engineering effort in addressing these failure scenarios and improving the efficiency of vMotion, significantly reducing failure rates.

I will focus on one such improvement, recently introduced in vSphere 7.0 Update 2, and explain how it helps remove one of the remaining bottlenecks for Production Microsoft SQL Server workloads, especially Clustered MS SQL Server instances and what we like to call "Jumbo VMs".

Recall that vMotion does its thing by copying the VM's state iteratively from one Host to another. Imagine that you have a large VM (as defined by your own infrastructure and administrative realities). Suppose we want to vMotion a 24-vCPU, 192GB RAM MS SQL Server VM from Host A to Host B. Suppose that Host B has barely enough compute resources to accommodate this "large" VM, and that it is also already running a few VMs. Now, add to this scenario the fact that the Network connection between the two Hosts is a 1 Gb (1GbE) link.

In this scenario, the vMotion operation will be constrained both by the need to shift compute resources around to make room for the new entrant and by the network. The 1 Gb link limits how much data we can copy and transfer in each pass, so we will have to make multiple passes between the two Hosts before we can complete the operation. If this is a truly "Mission Critical" MS SQL Server, we can also assume that it is very busy, generating lots of data and (therefore) dirtying pages far more frequently than if it were idle. vMotion will take a long time to complete.
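To put rough numbers on this scenario, here is the time for a single full pass over the VM's memory, where M is the memory in GB and B is the link speed in Gb/s. This assumes 1 GB = 8 Gb, that the link runs at full line rate, and it ignores protocol overhead, compression and the pages dirtied along the way:

$$
t_{\text{pass}} = \frac{M \times 8}{B}, \qquad
t_{1\,\text{GbE}} = \frac{192 \times 8\ \text{Gb}}{1\ \text{Gb/s}} = 1536\ \text{s} \approx 25.6\ \text{min}, \qquad
t_{25\,\text{GbE}} = \frac{192 \times 8\ \text{Gb}}{25\ \text{Gb/s}} \approx 61.4\ \text{s}
$$

Every page the busy guest dirties during that first pass has to be sent again in the next one, so a slow first pass also makes every later pass longer.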

And, if the VM is a node in a Windows Server Failover Clustering (WSFC) configuration, there is a real risk that it stays paused ("frozen") during the final switchover for longer than the cluster tolerates, unless we are able to finish the copy quickly and release it. WSFC has a thing against Cluster nodes which go AWOL and don't talk to their Partner Nodes.

WSFC only tolerates a node missing its heartbeats for a short time. On older versions of Windows Server, that window is 5 seconds by default; from Windows Server 2016 onward, it is 10 seconds. If the VM stays frozen for longer than this, its Partners will deem it unavailable and automatically fail over all the clustered resources owned by the absent/frozen VM. This, more than anything else, is usually why a lot of Customers still hesitate to vMotion their IMPORTANT MS SQL Server instances.
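The 5- and 10-second windows come from WSFC's same-subnet heartbeat settings: a node is considered down after missing `SameSubnetThreshold` consecutive heartbeats sent every `SameSubnetDelay` milliseconds. The defaults assumed below (1000 ms × 5 for older Windows Server releases, 1000 ms × 10 from Windows Server 2016 onward) should be checked on your own cluster, for example with `Get-Cluster | Format-List *Subnet*`. The helper names are hypothetical; this is only a back-of-the-envelope check.

```python
def heartbeat_tolerance_s(delay_ms: int = 1000, threshold: int = 10) -> float:
    """Seconds of silence WSFC tolerates before declaring a node down."""
    return delay_ms * threshold / 1000.0


def freeze_risks_failover(freeze_s: float, delay_ms: int = 1000,
                          threshold: int = 10) -> bool:
    """True if a node frozen for freeze_s seconds outlasts the heartbeat window."""
    return freeze_s > heartbeat_tolerance_s(delay_ms, threshold)


if __name__ == "__main__":
    for label, threshold in (("older Windows Server", 5), ("Windows Server 2016+", 10)):
        tolerance = heartbeat_tolerance_s(threshold=threshold)
        risky = freeze_risks_failover(7, threshold=threshold)
        print(f"{label}: {tolerance:.0f} s window, 7 s freeze triggers failover: {risky}")
```

The point of the comparison is that anything shortening the final pause (faster links, more parallel streams) moves a clustered node further away from that window.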

To address this challenge, VMware introduced the Multi-NIC vMotion Portgroups feature (among other enhancements I won't mention here), enabling a vMotion operation to be performed over multiple Network Card interfaces. In theory, copying over more than one network interface is faster than copying over one. Ergo, vMotion completes quicker. As faster Network Cards became mainstream, we even introduced multi-VMKernel vMotion capabilities, where you can use multiple VMKernel Portgroups (this time over just one NIC) to perform a vMotion operation. Same logic: faster vMotion operations.

These two features provided considerable improvements and (combined with the others) addressed the challenges and constraints Customers experienced when using vMotion to relocate large (Monster) VMs. But, of course, this is VMware. Not only are our Customers very savvy and demanding, we just don't know when to stop when there is any possible avenue for continuous improvement in any part of the vSphere stack.

And, that, Ladies and Gentlemen, is why I’m happy to mention that, with the release of vSphere 7.0 Update 2, we snuck in another gem of optimization and improvement that will be quite indispensable for all vSphere Administrators in charge of providing hosting services for (say it with me), Monster VMs running any of the Mission Critical Business Applications – especially clustered Microsoft SQL Server.

One of the things I skipped in my high-level description of how vMotion works is that the "copy operation" is carried out by multiple components working in tandem. My Colleague (Duncan Epping) took an in-depth look at this a couple of years ago, so I won't re-hash it here. Of interest to us is this:

VMware recognizes that, even when you use multi-NIC or multi-VMKernel vMotion, continued improvements and innovation in technology present us with even more opportunities to make things faster, more predictable and more consistently reliable. Our position is that a vMotion operation should be as seamless and uninteresting as, for example, deploying a VM from a Template. As Network card speed and bandwidth continue to increase, we discovered that they give vMotion even more room to scale.

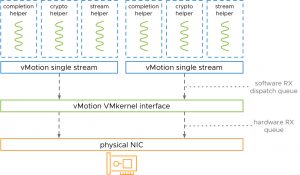

Historically, when you create a vMotion VMKernel Portgroup, a "Stream Helper" is created along with it. This Stream Helper is responsible for getting the data we are copying during vMotion onto the Network stack. In the past, this One-Helper-per-VMKernel design made sense because we needed to avoid saturating the Network with vMotion traffic. As 10GbE (and then 25GbE) NICs became more and more prevalent in the Enterprise, saturating them with vMotion traffic became nearly impossible. So, why not add more Helpers, since we have such a wide lane? And that's what we did.
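The reasoning above can be sketched as a simple bottleneck model: vMotion throughput is capped either by the NIC's line rate or by how fast the Stream Helpers can push data, whichever is lower, and the number of helpers grows with the number of vMotion VMkernel interfaces. The per-helper rate below is a made-up placeholder, not a measured VMware figure, and the function names are hypothetical.

```python
def helper_count(vmk_interfaces: int, helpers_per_vmk: int = 1) -> int:
    """Each vMotion VMkernel interface brings its own Stream Helper(s)."""
    return vmk_interfaces * helpers_per_vmk


def effective_gbit_per_s(nic_gbit_per_s: float, helpers: int,
                         per_helper_gbit_per_s: float) -> float:
    """Throughput is limited by the slower of the NIC and all helpers combined."""
    return min(nic_gbit_per_s, helpers * per_helper_gbit_per_s)


if __name__ == "__main__":
    PER_HELPER = 8.0  # hypothetical placeholder, NOT a measured figure
    for nic in (10, 25, 40, 100):
        one = effective_gbit_per_s(nic, helper_count(1), PER_HELPER)
        two = effective_gbit_per_s(nic, helper_count(1, 2), PER_HELPER)
        print(f"{nic:>3} GbE: 1 helper -> {one:g} Gb/s, 2 helpers -> {two:g} Gb/s")
```

With this placeholder rate, one helper leaves part of a 10 GbE link idle and two helpers still leave headroom on 25 GbE. Plug in rates measured in your own environment before drawing any real conclusions.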

We added configuration options to manually double the number of Stream Helpers for each vMotion VMKernel interface. Implementing this option is relatively trivial (if you ignore the need to reboot the ESXi Host for the configuration to take effect):

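```powershell
# Double the receive dispatch queues and the vMotion Stream Helpers (takes effect after a host reboot)
Get-VMHost -Name '<ESXi Hostname>' | Get-AdvancedSetting -Name Net.TcpipRxDispatchQueues | Set-AdvancedSetting -Value 2 -Confirm:$false
Get-VMHost -Name '<ESXi Hostname>' | Get-AdvancedSetting -Name Migrate.VMotionStreamHelpers | Set-AdvancedSetting -Value 2 -Confirm:$false
```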

Yaaaayyyyy!!!! Right? Not quite.

For one thing, this configuration is manual. You have to bounce your ESXi Hosts to activate the capability, and we know how eagerly Administrators look forward to rebooting "Production Servers". Perhaps this is why only a very small subset of our Customers use this capability.

I speak with Customers running Microsoft Business Critical Applications (mostly MS SQL Server and Exchange Server) on vSphere daily. I am always astounded to learn that the vast majority of these Enterprise customers (especially those with the largest farm of the largest instances of MS SQL Server in Production) are unaware of this feature. They, therefore, don’t take advantage of it.

Worse, the historical fear of slow/interrupted vMotion operations still keeps them from using one of the most powerful features available in vSphere to enhance their applications’ High Availability.

Meanwhile, NIC Vendors have been quite busy lately. Just as people were celebrating their 10GbE/25GbE NICs, the 40GbE models made an appearance on the stage, only to be quickly nudged off-stage by 100GbE models. You can guess what VMware did in response, no? Well, here is what.

- We noticed that two Stream Helpers weren't fully using the bandwidth of 25GbE NICs. Now, imagine 40GbE/100GbE.

- We also took cognizance of the low adoption/utilization of the multi-Streams capabilities in newer versions of vSphere and concluded that it could be due to the manual intervention required.

- Our take-away? Why not just auto-enable this most beneficial feature and help our Customers help themselves?

Starting with vSphere 7.0 Update 2, we made multiple "Stream Helpers" an automatic, standard feature.

No need for any Console or Programmatic configuration. In addition, we now auto-detect the NIC speed, bandwidth and capabilities, and we auto-scale the Stream Helpers accordingly. Here is what we now do automatically for Stream Helpers, based on NIC speed:

- 25 GbE = 2 vMotion streams

- 40 GbE = 3 vMotion streams

- 100 GbE = 7 vMotion streams

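If you are doing the math, this works out to roughly one Stream Helper per 12.5 Gb of NIC bandwidth, starting with 25GbE NICs: 25/12.5 = 2, and 40/12.5 = 3.2 (rounded down to 3). At 100GbE the scaling is a little more conservative, with 7 streams rather than the 8 that strict division would give.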
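The speed-to-streams mapping above, and how it compares with the 12.5 Gb rule of thumb, can be written down as a small lookup. Only the 25/40/100 GbE entries come from this post; treating unlisted speeds as the highest listed tier below them, and falling back to a single stream below 25 GbE (the historical one-helper default), are assumptions. The function names are hypothetical.

```python
# NIC speed (GbE) -> automatic vMotion streams, as listed for vSphere 7.0 U2.
AUTO_STREAMS = {25: 2, 40: 3, 100: 7}


def auto_streams(nic_gbe: int) -> int:
    """Streams for the highest listed tier at or below nic_gbe.

    Unlisted speeds and the 1-stream fallback below 25 GbE are assumptions.
    """
    tiers = [speed for speed in AUTO_STREAMS if speed <= nic_gbe]
    return AUTO_STREAMS[max(tiers)] if tiers else 1


def rule_of_thumb(nic_gbe: int) -> int:
    """The 'one helper per 12.5 Gb' approximation, rounded down."""
    return int(nic_gbe // 12.5)


if __name__ == "__main__":
    for speed in (25, 40, 100):
        print(speed, auto_streams(speed), rule_of_thumb(speed))
```

The rule of thumb matches the listed values at 25 and 40 GbE and overshoots by one at 100 GbE.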

What does this all mean to you and your Clustered Microsoft SQL Server workloads?

- Well, for one, if you are using modern NICs, your vMotion operations would likely start and complete before you finish clicking the "OK" button. Too much? OK, let's say before you can go fetch a coffee and come back.

- Your WSFC-backed Clustered workloads are now less likely than ever to spend long enough in the quiesced (“Frozen”) state for unintended clustered resource failover events to occur.

- You don't have to make any manual configuration changes in your ESXi cluster to begin benefiting from this feature.

- You no longer need to create lots of VMKernel Portgroups (each requiring its own dedicated IP Address) to speed up your vMotion operations.

- If you enjoy creating multiple VMKernel Portgroups, you also get the added benefit of more Stream Helpers for your vMotion operations. I can't guarantee it, but, if you do this, don't call Support if you find your Monster MS SQL Server VMs teleporting themselves from Host to Host as soon as you think about vMotion.

I know that you don't need me to nudge you that it is time to upgrade to vSphere 7.0 Update 2; I am just letting you know about some of the nuggets and goodies hidden in this major release, and encouraging you to be less afraid (or shy) of using vMotion on even your most important Jumbo-sized Microsoft SQL Server VMs, even if they are clustered nodes.