Dead space reclamation has always been a challenge, especially with thin-provisioned volumes. Thin provisioning dynamically allocates storage on demand to workloads in a flexible and efficient manner.

Typical day-to-day (Day 2) database operations include dropping tablespaces, dropping or resizing datafiles, truncating tables, deleting archive logs from the ASM FRA disk group, etc.

One would expect the freed space to return to the storage free pool for further allocation, but whether it does depends on:

- How the application reports the discarded space to the GOS (Guest Operating System)

- How the GOS reports the discarded space to the underlying VMware layer

- How VMware vSphere, in turn, reports the discarded space to the storage array

This blog focuses on the Oracle ASM Filter Driver (ASMFD) capability to report the discarded or free space on thin-provisioned vmdks to the GOS, from where it is eventually reported to the VMware datastores and the underlying storage array.

VMware Thin Provisioning


Use the thin provisioned format to save storage space. For a thin disk, you provision as much datastore space as the disk would require based on the value that you enter for the virtual disk size. However, the thin disk starts small and, at first, uses only as much datastore space as the disk needs for its initial operations. If the thin disk needs more space later, it can grow to its maximum capacity and occupy the entire datastore space provisioned to it.

Thin provisioning is the fastest method to create a virtual disk because it creates a disk with just the header information. It does not allocate or zero out storage blocks. Storage blocks are allocated and zeroed out when they are first accessed.
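To make the allocate-on-first-write behavior concrete, here is a minimal Python toy model of a thin disk. It is only an illustration under stated assumptions (a fixed 1 MiB allocation granularity and a hypothetical `ThinDisk` class), not how ESXi implements thin vmdks. Note that in this model data deleted inside the guest stays allocated until an explicit unmap arrives, which is exactly the dead space problem discussed in this blog.

```python
# Toy model of a thin-provisioned disk (hypothetical; not VMware's implementation).
# Assumption: backing storage is handed out in fixed 1 MiB blocks.
BLOCK = 1024 * 1024


class ThinDisk:
    def __init__(self, provisioned_bytes: int) -> None:
        self.provisioned = provisioned_bytes  # size the guest sees
        self.allocated: set[int] = set()      # indexes of blocks actually backed

    def write(self, offset: int, length: int) -> None:
        """Allocate every block touched by a write the first time it is written."""
        if length <= 0:
            return
        first, last = offset // BLOCK, (offset + length - 1) // BLOCK
        self.allocated.update(range(first, last + 1))

    def unmap(self, offset: int, length: int) -> None:
        """Release only the blocks that the unmapped range covers completely."""
        first = -(-offset // BLOCK)          # round the start up to a block boundary
        last = (offset + length) // BLOCK    # round the end down
        self.allocated.difference_update(range(first, last))

    def allocated_bytes(self) -> int:
        return len(self.allocated) * BLOCK


disk = ThinDisk(600 * 1024**3)   # a 600 GiB thin disk starts with nothing allocated
disk.write(0, 10 * BLOCK)        # the guest writes 10 MiB
print(disk.allocated_bytes() // BLOCK)  # 10
# A file delete inside the guest that is never unmapped leaves these blocks allocated;
# only an explicit UNMAP returns them to the pool.
disk.unmap(0, 10 * BLOCK)
print(disk.allocated_bytes())    # 0
```

The unmap rounds inward to whole blocks, so a range that only partially covers a block frees nothing, which mirrors why reclamation works at a fixed granularity.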

More information about VMware Thin Provisioning can be found here.

Oracle ASM Filter Driver (ASMFD)

Oracle ASM Filter Driver (Oracle ASMFD) is a kernel module that resides in the I/O path of the Oracle ASM disks. Oracle ASM uses the filter driver to validate write I/O requests to Oracle ASM disks.

Oracle ASM Filter Driver rejects any I/O requests that are invalid. This action eliminates accidental overwrites of Oracle ASM disks that would cause corruption in the disks and files within the disk group.

Oracle ASM Filter Driver (ASMFD) and Thin Provisioning

The Oracle ASM diskgroup attribute “THIN_PROVISIONED” enables or disables the functionality to discard unused storage space after a disk group rebalance is completed.

Storage vendor products that support thin provisioning have the capability to reuse the discarded storage space for a more efficient overall physical storage utilization.

Given such support from a storage vendor, when the COMPACT phase of a rebalance operation has completed, Oracle ASM informs the storage which space is no longer used and can be repurposed.

During ASM storage reclamation, ASM first performs a manual rebalance, which defragments (compacts) the ASM disk group by moving ASM extents into the gaps created by the deleted data. When the ASM disk group is compacted, its High Water Mark (HWM) is updated based on its new allocated capacity. Then, ASM sends SCSI UNMAP commands to the storage system to reclaim the space above the new HWM.
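Why the compaction step matters can be shown with a small toy model in Python. Assumptions: a disk is a list of equal-sized allocation units, compaction simply packs extents toward the start, and only the space above the HWM is unmapped. The helper names are hypothetical, and a real ASM rebalance is far more involved (multiple disks, extent relocation I/O, mirroring), so treat this as an illustration, not as Oracle's algorithm.

```python
from typing import Optional

Slot = Optional[str]  # an allocation unit holding an extent name, or None if free


def high_water_mark(slots: list[Slot]) -> int:
    """Index just above the highest allocated slot (0 for an empty disk)."""
    used = [i for i, extent in enumerate(slots) if extent is not None]
    return used[-1] + 1 if used else 0


def compact(slots: list[Slot]) -> list[Slot]:
    """Move extents down into the gaps, keeping their relative order."""
    extents = [extent for extent in slots if extent is not None]
    return extents + [None] * (len(slots) - len(extents))


def reclaimable_above_hwm(slots: list[Slot]) -> range:
    """Slots above the HWM, i.e. the range that could be unmapped."""
    return range(high_water_mark(slots), len(slots))


# Free slots at 1, 3, 4, 6 and 7 (holes left by deleted data).
layout = ["e1", None, "e2", None, None, "e3", None, None]
print(len(reclaimable_above_hwm(layout)))           # 2: only slots 6-7 lie above the HWM
print(len(reclaimable_above_hwm(compact(layout))))  # 5: after compaction, slots 3-7 do
```

Without compaction the holes below the last extent stay allocated on the array; packing the extents first lowers the HWM so that the unmap above it covers all of the free space.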

Note: The THIN_PROVISIONED attribute is supported only with Oracle ASM Filter Driver (Oracle ASMFD) in Oracle Grid Infrastructure 12.2 and later releases on Linux.

More information on Oracle ASMFD and THIN_PROVISIONED can be found here.

Oracle ASMLib and Space Reclamation

As stated above, the THIN_PROVISIONED attribute is supported only with Oracle ASM Filter Driver (Oracle ASMFD) in Oracle Grid Infrastructure 12.2 and later releases on Linux.

Unlike Oracle ASMLib, the ASMFD module contains support for SCSI UNMAP commands.

Oracle workloads on Filesystems

If ext4 or XFS file systems host the Oracle workloads, mount them on the thin-provisioned volume with the “discard” mount option.

The file system will then recognize when space becomes unused after a file is deleted and issue TRIM commands to UNMAP the unused space.
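A quick way to audit a guest is to look for ext4/XFS mounts that lack the option. The Python sketch below parses text in the `/proc/mounts` format (device, mount point, type, options, dump, pass); the function name, devices and mount points are hypothetical examples.

```python
FILESYSTEMS = {"ext4", "xfs"}


def mounts_missing_discard(mounts_text: str) -> list[str]:
    """Return ext4/XFS mount points whose mount options lack 'discard'.

    Expects /proc/mounts format: device mountpoint fstype options dump pass.
    """
    missing = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue
        _device, mountpoint, fstype, options = fields[:4]
        # Compare whole option names so that e.g. 'nodiscard' does not count.
        if fstype in FILESYSTEMS and "discard" not in options.split(","):
            missing.append(mountpoint)
    return missing


# Hypothetical devices and mount points, for illustration only.
sample = """\
/dev/sdb1 /u01 xfs rw,relatime,attr2,discard 0 0
/dev/sdd1 /u02 ext4 rw,relatime 0 0
proc /proc proc rw,nosuid,nodev,noexec,relatime 0 0
"""
print(mounts_missing_discard(sample))  # ['/u02']
```

On a Linux guest the same function can be fed the live table, e.g. `mounts_missing_discard(open("/proc/mounts").read())`.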

VMware VMFS / vSAN / vVOL UNMAP Support

With VMware VMFS 6, deleting or removing files from a VMFS6 datastore frees space within the file system. This free space is mapped to a storage device until the file system releases or unmaps it. ESXi supports reclamation of free space, which is also called the unmap operation.

VMFS5 and earlier file systems do not unmap free space automatically, but you can use the esxcli storage vmfs unmap command to reclaim space manually.

More information on VMFS UNMAP can be found here and in KB “Using the esxcli storage vmfs unmap command to reclaim VMFS deleted blocks on thin-provisioned LUNs (2057513)”.

VMware vSAN supports thin provisioning, which lets you use, in the beginning, only as much storage capacity as is currently needed and then add the required amount of storage space at a later time. vSAN 6.7 U1 has full awareness of TRIM/UNMAP commands sent from the guest OS and can reclaim the previously allocated storage as free space.

More information on vSAN UNMAP can be found here, in the blog article “VMware vSAN 6.7U1 Storage reclamation – TRIM/UNMAP”, the Cloud Platform Tech Zone document “vSAN Space Efficiency Technologies” and “What’s New in vSphere 6.7 Core Storage”.

With VMware VVols-backed VMs, if a guest OS issues the UNMAP command, the command can be sent directly to the VVols array for handling. This, however, depends on the type of the guest OS and on the hardware version of a particular VM.

To support UNMAP automatically, the VMs that run in a VVols environment must be of hardware version 11 or above.

More information on this can be found at KB How Virtual Volumes and UNMAP primitive interact? (2112333)

An excellent session by Jason Massae and John Nicolson delves deep into the history of UNMAP:

VMworld 2018 Session – Better Storage Utilization with Space Reclamation UNMAP HCI3331BU with John Nicolson and Jason Massae.

https://storagehub.vmware.com/t/vsphere-storage/vmworld-2/better-storage-utilization-with-space-reclamation-unmap-hci3331bu/

GOS Support for UNMAP operations

Some guest OSes that support unmapping of blocks, such as Linux-based systems, do not generate UNMAP commands on virtual disks in vSphere 6.0.

The level of SCSI support for ESXi 6.0 virtual disks is SCSI-2, while Linux expects 5 or higher for SPC-4 standard. This limitation prevents generation of UNMAP commands until the virtual disks are able to claim support for at least SPC-4 SCSI commands.

In vSphere 6.5, SPC-4 support was added, enabling in-guest UNMAP with Linux-based virtual machines.

An excellent blog by Cody Hosterman of Pure Storage, “What’s new in ESXi 6.5 Storage Part I: UNMAP”, explains how to check whether a Linux guest sees the virtual disk as an SPC-4 device and whether the device is indeed thin-provisioned.

More information on this can be found at KB How Virtual Volumes and UNMAP primitive interact? (2112333)

Test Setup

This blog focuses on the Oracle ASM Filter Driver (ASMFD) capability to report discarded or free space to the GOS which eventually gets reported to the VMware datastores and the underlying Storage array.

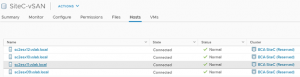

The test setup included a 4-node VMware vSphere cluster ‘BCA-SiteC’ with 4 ESXi servers, all at version 7.0.1, build 16850804:

- sc2esx09.vslab.local

- sc2esx10.vslab.local

- sc2esx11.vslab.local

- sc2esx12.vslab.local

All ESXi servers in cluster ‘BCA-SiteC’ had access to the following datastores:

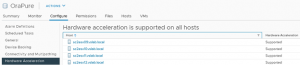

- VMware VMFS6 datastore ‘OraPure’ backed by Pure Storage FlashArray X-50

- VMware vSAN storage ‘SiteC-vSAN’

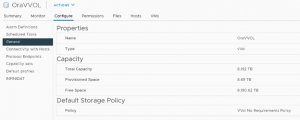

- VMware vVOL datastore ‘OraVVOL’ backed by Pure Storage FlashArray X-50

Details of the ‘OraPure’ FC datastore on Pure FlashArray X-50 are as below:

[root@sc2esx12:~] esxcli storage core device vaai status get

..

naa.624a9370a841b405a3a348ca00012592

VAAI Plugin Name:

ATS Status: supported

Clone Status: supported

Zero Status: supported

Delete Status: supported
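In this output, “Delete Status” reports the VAAI block delete (UNMAP) primitive. When a host sees many devices, a small parser helps confirm which ones support it. The Python sketch below assumes the layout shown above (a device identifier line followed by `Key: value` lines); `parse_vaai_status` is a hypothetical helper, and lines before the first device identifier are ignored.

```python
def parse_vaai_status(text: str) -> dict[str, dict[str, str]]:
    """Group 'Key: value' lines under the device identifier line preceding them."""
    devices: dict[str, dict[str, str]] = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if ":" not in line:
            current = line  # a device identifier such as naa.624a...
            devices[current] = {}
        elif current is not None:
            key, _, value = line.partition(":")
            devices[current][key.strip()] = value.strip()
    return devices


sample = """\
naa.624a9370a841b405a3a348ca00012592
   VAAI Plugin Name:
   ATS Status: supported
   Clone Status: supported
   Zero Status: supported
   Delete Status: supported
"""
devices = parse_vaai_status(sample)
print([d for d, f in devices.items() if f.get("Delete Status") == "supported"])
# ['naa.624a9370a841b405a3a348ca00012592']
```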

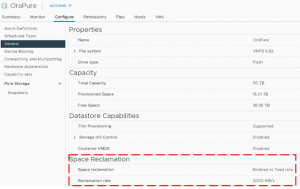

Details of the ‘SiteC-vSAN’ datastore are as below:

[root@sc2esx09:~] esxcfg-advcfg -g /VSAN/GuestUnmap

Value of GuestUnmap is 1

[root@sc2esx09:~]

[root@sc2esx10:~] esxcfg-advcfg -g /VSAN/GuestUnmap

Value of GuestUnmap is 1

[root@sc2esx10:~]

[root@sc2esx11:~] esxcfg-advcfg -g /VSAN/GuestUnmap

Value of GuestUnmap is 1

[root@sc2esx11:~]

[root@sc2esx12:~] esxcfg-advcfg -g /VSAN/GuestUnmap

Value of GuestUnmap is 1

[root@sc2esx12:~]
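With more hosts, checking the same setting by eye gets tedious. The Python sketch below takes the text each host printed for `esxcfg-advcfg -g /VSAN/GuestUnmap` and lists hosts where GuestUnmap is not 1. The helper name is hypothetical, and how the outputs are collected (for example over SSH) is left out.

```python
import re

PATTERN = re.compile(r"Value of GuestUnmap is (\d+)")


def hosts_without_guest_unmap(outputs: dict[str, str]) -> list[str]:
    """Hosts whose GuestUnmap value is not 1, or whose output could not be parsed."""
    missing = []
    for host, text in sorted(outputs.items()):
        match = PATTERN.search(text)
        if match is None or match.group(1) != "1":
            missing.append(host)
    return missing


outputs = {f"sc2esx{n:02d}.vslab.local": "Value of GuestUnmap is 1" for n in (9, 10, 11, 12)}
print(hosts_without_guest_unmap(outputs))  # []
```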

Details of the vVOL datastore ‘OraVVOL’ on Pure FlashArray X-50 are as below.

Observe that there is no Space Reclamation option available for a vVols datastore. Why?

The explanation is given in the blog “#StorageMinute: Virtual Volumes (vVols) and UNMAP” by Jason Massae:

“With traditional storage, VMFS or NFS, when a supported VM and GOS issue Trim or UNMAP, the command is passed through the vSphere storage stack and then passed to the array. With vSphere 6.7, space reclamation further matured enabling configurable UNMAP and support for SESparse disks.

The new features in vSphere 6.7 only apply to VMFS and NFS and not vVols. This is because the vVols disks are directly mapped to the VM. This allows the GOS to pass the Trim/UNMAP commands directly to the array bypassing the vSphere storage stack. As a result, the space reclamation operation is more efficient with vVols”

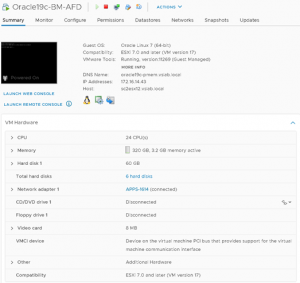

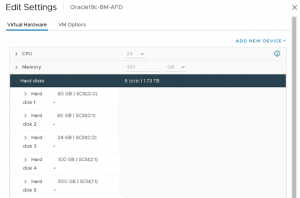

A virtual machine ‘Oracle19c-BM-AFD’ was created with an OEL 7.9 UEK6 GOS. The VM has 24 vCPUs and 320 GB RAM.

Details of the VM hard disks are as below:

- Hard Disk 1 – SCSI 0:0 – 60 GB – OS

- Hard Disk 2 – SCSI 0:1 – 60 GB – Oracle binaries

- Hard Disk 3 – SCSI 0:2 – 24 GB – OS Swap

- Hard Disk 4 – SCSI 2:1 – 100 GB – Oracle Redo Log (ASM REDO_DG)

- Hard Disk 5 – SCSI 1:1 – 500 GB – Oracle Database (ASM DATA_DG)

Test Case

The test cases are as follows:

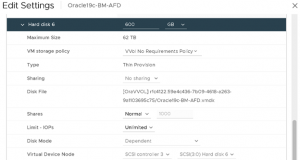

- Add a 600 GB thin-provisioned vmdk from the ‘OraPure’ FC datastore on Pure Storage to the VM at SCSI 3:0 and create a new ASM Disk group called SLOB_DG with ‘THIN_PROVISIONED’ attribute using ASMFD driver

- Install the Oracle workload generator SLOB

- Drop the SLOB schema and Tablespace and record observation

- Perform the SLOB ASM disk group rebalance with compact option and record observation

Repeat the above test cases for a 600 GB vmdk from the vSAN datastore ‘SiteC-vSAN’ and the vVOL datastore ‘OraVVOL’.

UNMAP Operation on ‘OraPure’ FC datastore on Pure Storage

- Add a 600 GB thin-provisioned vmdk from the ‘OraPure’ FC datastore on Pure Storage to the VM at SCSI 3:0. Create a new ASM disk group called SLOB_DG with the ‘THIN_PROVISIONED’ attribute using the ASMFD driver.

Check that the device is Linux SPC-4 compatible and thin-provisioned.

[root@oracle19c-pmem ~]# sg_inq -d /dev/sdc1

standard INQUIRY:

PQual=0 Device_type=0 RMB=0 version=0x06 [SPC-4]

[AERC=0] [TrmTsk=0] NormACA=0 HiSUP=0 Resp_data_format=2

SCCS=0 ACC=0 TPGS=0 3PC=0 Protect=0 [BQue=0]

EncServ=0 MultiP=0 [MChngr=0] [ACKREQQ=0] Addr16=0

[RelAdr=0] WBus16=1 Sync=1 Linked=0 [TranDis=0] CmdQue=1

length=36 (0x24) Peripheral device type: disk

Vendor identification: VMware

Product identification: Virtual disk

Product revision level: 2.0

No version descriptors available

[root@oracle19c-pmem ~]#
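The `version=0x06 [SPC-4]` field is the VERSION byte of the standard INQUIRY data. A minimal Python sketch that extracts it from `sg_inq` output and maps it to the standard it claims; the function name is hypothetical, and the mapping table lists the VERSION values defined in the SPC standards.

```python
import re

# VERSION field of the standard INQUIRY data, per the SPC standards.
VERSION_NAMES = {
    0x00: "no standard claimed",
    0x02: "SCSI-2",
    0x03: "SPC",
    0x04: "SPC-2",
    0x05: "SPC-3",
    0x06: "SPC-4",
    0x07: "SPC-5",
}


def inquiry_version(sg_inq_output: str) -> int:
    """Extract the version byte from 'sg_inq' output such as 'version=0x06 [SPC-4]'."""
    match = re.search(r"\bversion=0x([0-9a-fA-F]{2})", sg_inq_output)
    if match is None:
        raise ValueError("no version= field in sg_inq output")
    return int(match.group(1), 16)


line = "PQual=0 Device_type=0 RMB=0 version=0x06 [SPC-4]"
version = inquiry_version(line)
print(VERSION_NAMES.get(version, "unknown"), version >= 0x06)  # SPC-4 True
```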

[root@oracle19c-pmem ~]# sg_vpd --page=lbpv /dev/sdc1

Logical block provisioning VPD page (SBC):

Unmap command supported (LBPU): 1

Write same (16) with unmap bit supported (LBWS): 1

Write same (10) with unmap bit supported (LBWS10): 1

Logical block provisioning read zeros (LBPRZ): 1

Anchored LBAs supported (ANC_SUP): 0

Threshold exponent: 1

Descriptor present (DP): 0

Provisioning type: 2

[root@oracle19c-pmem ~]#
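The two fields that matter here are LBPU (the device accepts UNMAP) and the provisioning type (2 means thin provisioned in the Logical Block Provisioning VPD page). A Python sketch that parses the `Name: value` lines shown above and checks both; the helper names are hypothetical, and other fields such as LBPRZ are deliberately not evaluated.

```python
# PROVISIONING TYPE field of the Logical Block Provisioning VPD page (SBC-3).
PROVISIONING_TYPES = {0: "fully provisioned", 1: "resource provisioned", 2: "thin provisioned"}


def parse_lbp_vpd(text: str) -> dict[str, str]:
    """Turn 'Name (ABBR): value' lines from 'sg_vpd --page=lbpv' into a dict."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.rpartition(":")
        if sep and value.strip():
            fields[key.strip()] = value.strip()
    return fields


def is_thin_with_unmap(fields: dict[str, str]) -> bool:
    lbpu = fields.get("Unmap command supported (LBPU)") == "1"
    ptype = int(fields.get("Provisioning type", "0").split()[0])
    return lbpu and PROVISIONING_TYPES.get(ptype) == "thin provisioned"


sample = """\
Logical block provisioning VPD page (SBC):
  Unmap command supported (LBPU): 1
  Logical block provisioning read zeros (LBPRZ): 1
  Provisioning type: 2
"""
fields = parse_lbp_vpd(sample)
print(is_thin_with_unmap(fields))  # True
```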

- Install the Oracle workload generator SLOB

Before dropping the SLOB user and tablespace, the ASM disk group total and free space statistics are as below:

Total_MB=614399

Free_MB=3224

ASMCMD> lsdg

State Type Rebal Sector Logical_Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED EXTERN N 512 512 4096 4194304 511996 213720 0 213720 0 N DATA_DG/

MOUNTED EXTERN N 512 512 4096 1048576 102399 7888 0 7888 0 N REDO_DG/

MOUNTED EXTERN N 512 512 4096 1048576 614399 3224 0 3224 0 N SLOB_DG/

ASMCMD>
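The `lsdg` numbers can also be checked programmatically. A minimal Python sketch, assuming the whitespace-separated column layout shown above with one header line starting with `State`; `parse_lsdg` is a hypothetical helper. Subtracting Free_MB from Total_MB gives the space ASM considers in use, shown here for the SLOB_DG rows before and after the drop.

```python
def parse_lsdg(text: str) -> dict[str, dict[str, int]]:
    """Map disk group name -> Total_MB/Free_MB from ASMCMD 'lsdg' output."""
    rows = [line.split() for line in text.splitlines() if line.strip()]
    header = next((r for r in rows if r[0] == "State"), None)
    if header is None:
        raise ValueError("no lsdg header line found")
    groups = {}
    for fields in rows:
        # Skip the header itself and lines such as the 'ASMCMD>' prompt.
        if fields[0] == "State" or len(fields) != len(header):
            continue
        row = dict(zip(header, fields))
        groups[row["Name"].rstrip("/")] = {
            "Total_MB": int(row["Total_MB"]),
            "Free_MB": int(row["Free_MB"]),
        }
    return groups


HEADER = ("State Type Rebal Sector Logical_Sector Block AU Total_MB Free_MB "
          "Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name")
before = parse_lsdg(HEADER + "\nMOUNTED EXTERN N 512 512 4096 1048576 614399 3224 0 3224 0 N SLOB_DG/")
after = parse_lsdg(HEADER + "\nMOUNTED EXTERN N 512 512 4096 1048576 614399 614329 0 614329 0 N SLOB_DG/")
for label, groups in (("before", before), ("after", after)):
    dg = groups["SLOB_DG"]
    print(label, dg["Total_MB"] - dg["Free_MB"], "MB used")
# before 611175 MB used
# after 70 MB used
```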

Before dropping the SLOB schema and tablespace, the datastore ‘OraPure’ UNMAP statistics are as shown below:

Volume: Unmap IOs :33597

Volume: Unmapped blocks :2149405

[root@sc2esx12:~] vsish -e get /vmkModules/vmfs3/auto_unmap/volumes/OraPure/properties

Volume specific unmap information {

Volume Name :OraPure

FS Major Version :24

Metadata Alignment :4096

Allocation Unit/Blocksize :1048576

Unmap granularity in File :1048576

Volume: Unmap IOs :33597

Volume: Unmapped blocks :2149405

Volume: Num wait cycles :0

Volume: Num from scanning :1055152

Volume: Num from heap pool :1518

Volume: Total num cycles :1369506

Unmaps processed in last minute:24576

}

[root@sc2esx12:~]

- Drop the SLOB schema and tablespace and record the observation

After dropping the SLOB user and tablespace, the ASM disk group total and free space statistics are as below:

Total_MB=614399

Free_MB=614329

ASMCMD> lsdg

State Type Rebal Sector Logical_Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED EXTERN N 512 512 4096 4194304 511996 213720 0 213720 0 N DATA_DG/

MOUNTED EXTERN N 512 512 4096 1048576 102399 7888 0 7888 0 N REDO_DG/

MOUNTED EXTERN N 512 512 4096 1048576 614399 614329 0 614329 0 N SLOB_DG/

ASMCMD>

After dropping the SLOB schema and tablespace, the datastore ‘OraPure’ UNMAP statistics are as shown below:

Volume: Unmap IOs :33597

Volume: Unmapped blocks :2149405

[root@sc2esx12:~] vsish -e get /vmkModules/vmfs3/auto_unmap/volumes/OraPure/properties

Volume specific unmap information {

Volume Name :OraPure

FS Major Version :24

Metadata Alignment :4096

Allocation Unit/Blocksize :1048576

Unmap granularity in File :1048576

Volume: Unmap IOs :33597

Volume: Unmapped blocks :2149405

Volume: Num wait cycles :0

Volume: Num from scanning :1055344

Volume: Num from heap pool :1518

Volume: Total num cycles :1369727

Unmaps processed in last minute:20480

}

[root@sc2esx12:~]

We can observe that the ‘OraPure’ Unmap IOs and Unmapped blocks counters are exactly the same before and after dropping the SLOB schema and tablespace. Why was the dead space not released?

Remember, with Oracle ASM, when the COMPACT phase of a rebalance operation is completed, Oracle ASM informs the storage which space is no longer used and can be repurposed.

- Perform the Oracle ASM SLOB_DG disk group rebalance with the COMPACT option

grid@oracle19c-pmem:+ASM:/home/grid> sqlplus / as sysasm

SQL> alter diskgroup SLOB_DG rebalance with balance compact wait;

SQL> exit

After performing the Oracle ASM disk group rebalance with the compact option, the datastore ‘OraPure’ UNMAP statistics are as shown below:

Volume: Unmap IOs :43196

Volume: Unmapped blocks :2763539

[root@sc2esx12:~] vsish -e get /vmkModules/vmfs3/auto_unmap/volumes/OraPure/properties

Volume specific unmap information {

Volume Name :OraPure

FS Major Version :24

Metadata Alignment :4096

Allocation Unit/Blocksize :1048576

Unmap granularity in File :1048576

Volume: Unmap IOs :43196

Volume: Unmapped blocks :2763539

Volume: Num wait cycles :0

Volume: Num from scanning :1056762

Volume: Num from heap pool :2246

Volume: Total num cycles :1371951

Unmaps processed in last minute:0

}

[root@sc2esx12:~]
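Because these vsish counters are cumulative, the amount reclaimed is the difference between two snapshots. A Python sketch with hypothetical helper names, assuming that “Unmapped blocks” counts VMFS blocks of the size reported by “Allocation Unit/Blocksize” (1 MiB here). With the numbers above, the rebalance issued 9,599 UNMAP IOs covering 614,134 blocks, roughly 600 GiB, which is close to the 614,329 MB that lsdg reported as free in SLOB_DG. Comparing the two snapshots taken before the rebalance gives zero, matching the observation that dropping the schema alone released nothing.

```python
def parse_vsish_props(text: str) -> dict[str, str]:
    """Parse 'Name :value' lines from the vsish auto_unmap properties output."""
    props = {}
    for line in text.splitlines():
        if line.lstrip().startswith("["):  # skip shell prompt lines
            continue
        key, sep, value = line.rpartition(":")
        if sep and key.strip() and value.strip():
            props[key.strip()] = value.strip()
    return props


def reclaimed(before: str, after: str) -> tuple[int, int]:
    """Return (new UNMAP IOs, bytes unmapped) between two snapshots."""
    b, a = parse_vsish_props(before), parse_vsish_props(after)
    ios = int(a["Volume: Unmap IOs"]) - int(b["Volume: Unmap IOs"])
    blocks = int(a["Volume: Unmapped blocks"]) - int(b["Volume: Unmapped blocks"])
    # Assumption: each counted block is one VMFS allocation unit.
    return ios, blocks * int(a["Allocation Unit/Blocksize"])


before = """Allocation Unit/Blocksize :1048576
Volume: Unmap IOs :33597
Volume: Unmapped blocks :2149405"""
after = """Allocation Unit/Blocksize :1048576
Volume: Unmap IOs :43196
Volume: Unmapped blocks :2763539"""
ios, nbytes = reclaimed(before, after)
print(ios, f"{nbytes / 2**30:.1f} GiB")  # 9599 599.7 GiB
```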

UNMAP Operation on ‘SiteC-vSAN’ datastore

Repeat the same steps as above.

Check that the device is Linux SPC-4 compatible and thin-provisioned.

[root@oracle19c-pmem ~]# sg_inq -d /dev/sdc1

standard INQUIRY:

PQual=0 Device_type=0 RMB=0 version=0x06 [SPC-4]

[AERC=0] [TrmTsk=0] NormACA=0 HiSUP=0 Resp_data_format=2

SCCS=0 ACC=0 TPGS=0 3PC=0 Protect=0 [BQue=0]

EncServ=0 MultiP=0 [MChngr=0] [ACKREQQ=0] Addr16=0

[RelAdr=0] WBus16=1 Sync=1 Linked=0 [TranDis=0] CmdQue=1

length=36 (0x24) Peripheral device type: disk

Vendor identification: VMware

Product identification: Virtual disk

Product revision level: 2.0

No version descriptors available

[root@oracle19c-pmem ~]#

[root@oracle19c-pmem ~]# sg_vpd --page=lbpv /dev/sdc1

Logical block provisioning VPD page (SBC):

Unmap command supported (LBPU): 1

Write same (16) with unmap bit supported (LBWS): 1

Write same (10) with unmap bit supported (LBWS10): 1

Logical block provisioning read zeros (LBPRZ): 0

Anchored LBAs supported (ANC_SUP): 0

Threshold exponent: 1

Descriptor present (DP): 0

Provisioning type: 2

[root@oracle19c-pmem ~]#

- Install the Oracle workload generator SLOB

Before dropping the SLOB user and tablespace, the ASM disk group total and free space statistics are as below:

Total_MB=614399

Free_MB=3224

ASMCMD> lsdg

State Type Rebal Sector Logical_Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED EXTERN N 512 512 4096 4194304 511996 213720 0 213720 0 N DATA_DG/

MOUNTED EXTERN N 512 512 4096 1048576 102399 7888 0 7888 0 N REDO_DG/

MOUNTED EXTERN N 512 512 4096 1048576 614399 3224 0 3224 0 N SLOB_DG/

ASMCMD>

Before dropping the SLOB schema and tablespace, the vSAN datastore ‘SiteC-vSAN’ UNMAP statistics are as shown below:

[root@sc2esx12:~] vsish -e get /vmkModules/vsan/dom/clientStats

VsanSparse Layer Consolidation Stats {

number of reads:0

number of writes:0

number of lookup requests:0

microseconds spent reading:0

microseconds spent writing:0

microseconds spent checking:0

bytes read:0

bytes written:0

reads to individual layers:0

lookups to individual layers:0

microseconds spent checking individual layers:0

microseconds spent updating cache:0

microseconds spent searching cache:0

fastest lookup request:9223372036854775807

slowest lookup request:0

fastest lookup request for individual layer:9223372036854775807

slowest lookup request for individual layer:0

fastest read request:9223372036854775807

slowest read request:0

fastest write request:9223372036854775807

slowest write request:0

number of extents discovered:0

size of region covered by extents:0

number of TRIM/UNMAP requests:0

number of bytes trimmed:0

number of read errors:0

number of write errors:0

number of lookup errors:0

number of failed TRIM/UNMAP requests:0

}

[root@sc2esx12:~]

[root@sc2esx12:~] vsish -e get /vmkModules/vsan/dom/clientStats | grep -i unmap

Total number of unmapped writes:0

Total bytes unmapped for write:0

Sum of all unmapped write latencies:0

Sum of all unmapped write latency squares:0

Max of all unmapped write latencies:0

Sum of unmapped write congestion values:0

Total number of unmaps:1822

Total number of unmaps on leaf owner:0

Total unmap bytes:1288413106176

Sum of all unmap latencies:6785801

Sum of all unmap latencies on leaf owner:0

Sum of all unmap latency squares:114824319475

Max of all unmap latencies:0

Sum of unmap congestion values:0

Total number of recovery unmaps:0

Total number of recovery unmaps on leaf owner:0

Total recovery unmap bytes:0

Sum of all recovery unmap latencies:0

Sum of all recovery unmap latencies on leaf owner:0

Sum of all recovery unmap latency squares:0

Max of all recovery unmap latencies:0

Sum of recovery unmap congestion values:0

Sum of all numOIOUnmap values:10903

Sum of all numOIORecoveryUnmap values:0

Histogram of resync unmap congestion values:Histogram {

Histogram of vmdisk unmap congestion values:Histogram {

Histogram of resync unmap latency values:Histogram {

Histogram of vmdisk unmap latency values:Histogram {

Histogram of resync unmap bytes values:Histogram {

Histogram of vmdisk unmap bytes values:Histogram {

Histogram of resync unmap dispatched cost values:Histogram {

Histogram of vmdisk unmap dispatched cost values:Histogram {

Histogram of resync unmap scheduler queue depth:Histogram {

Histogram of vmdisk unmap scheduler queue depth:Histogram {

[root@sc2esx12:~]

- Drop the SLOB schema and tablespace and record the observation

After dropping the SLOB user and tablespace, the ASM disk group total and free space statistics are as below:

Total_MB=614399

Free_MB=614329

ASMCMD> lsdg

State Type Rebal Sector Logical_Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED EXTERN N 512 512 4096 4194304 511996 213720 0 213720 0 N DATA_DG/

MOUNTED EXTERN N 512 512 4096 1048576 102399 7888 0 7888 0 N REDO_DG/

MOUNTED EXTERN N 512 512 4096 1048576 614399 614329 0 614329 0 N SLOB_DG/

ASMCMD>

After dropping the SLOB schema and tablespace, the vSAN datastore ‘SiteC-vSAN’ UNMAP statistics are as shown below:

[root@sc2esx12:~] vsish -e get /vmkModules/vsan/dom/clientStats | grep -i unmap

Total number of unmapped writes:0

Total bytes unmapped for write:0

Sum of all unmapped write latencies:0

Sum of all unmapped write latency squares:0

Max of all unmapped write latencies:0

Sum of unmapped write congestion values:0

Total number of unmaps:1822

Total number of unmaps on leaf owner:0

Total unmap bytes:1288413106176

Sum of all unmap latencies:6785801

Sum of all unmap latencies on leaf owner:0

Sum of all unmap latency squares:114824319475

Max of all unmap latencies:0

Sum of unmap congestion values:0

Total number of recovery unmaps:0

Total number of recovery unmaps on leaf owner:0

Total recovery unmap bytes:0

Sum of all recovery unmap latencies:0

Sum of all recovery unmap latencies on leaf owner:0

Sum of all recovery unmap latency squares:0

Max of all recovery unmap latencies:0

Sum of recovery unmap congestion values:0

Sum of all numOIOUnmap values:10903

Sum of all numOIORecoveryUnmap values:0

Histogram of resync unmap congestion values:Histogram {

Histogram of vmdisk unmap congestion values:Histogram {

Histogram of resync unmap latency values:Histogram {

Histogram of vmdisk unmap latency values:Histogram {

Histogram of resync unmap bytes values:Histogram {

Histogram of vmdisk unmap bytes values:Histogram {

Histogram of resync unmap dispatched cost values:Histogram {

Histogram of vmdisk unmap dispatched cost values:Histogram {

Histogram of resync unmap scheduler queue depth:Histogram {

Histogram of vmdisk unmap scheduler queue depth:Histogram {

[root@sc2esx12:~]

We can observe that the datastore ‘SiteC-vSAN’ UNMAP statistics are exactly the same before and after dropping the SLOB schema and tablespace, and we now know why.

- Perform the ASM disk group SLOB_DG rebalance with the compact option and observe again.

After performing the Oracle ASM disk group rebalance with the compact option, the datastore ‘SiteC-vSAN’ UNMAP statistics are as shown below:

[root@sc2esx12:~] vsish -e get /vmkModules/vsan/dom/clientStats | grep -i unmap

Total number of unmapped writes:0

Total bytes unmapped for write:0

Sum of all unmapped write latencies:0

Sum of all unmapped write latency squares:0

Max of all unmapped write latencies:0

Sum of unmapped write congestion values:0

Total number of unmaps:3194

Total number of unmaps on leaf owner:0

Total unmap bytes:1932583751680

Sum of all unmap latencies:7711453

Sum of all unmap latencies on leaf owner:0

Sum of all unmap latency squares:115795565209

Max of all unmap latencies:8736

Sum of unmap congestion values:0

Total number of recovery unmaps:0

Total number of recovery unmaps on leaf owner:0

Total recovery unmap bytes:0

Sum of all recovery unmap latencies:0

Sum of all recovery unmap latencies on leaf owner:0

Sum of all recovery unmap latency squares:0

Max of all recovery unmap latencies:0

Sum of recovery unmap congestion values:0

Sum of all numOIOUnmap values:13647

Sum of all numOIORecoveryUnmap values:0

Histogram of resync unmap congestion values:Histogram {

Histogram of vmdisk unmap congestion values:Histogram {

Histogram of resync unmap latency values:Histogram {

Histogram of vmdisk unmap latency values:Histogram {

Histogram of resync unmap bytes values:Histogram {

Histogram of vmdisk unmap bytes values:Histogram {

Histogram of resync unmap dispatched cost values:Histogram {

Histogram of vmdisk unmap dispatched cost values:Histogram {

Histogram of resync unmap scheduler queue depth:Histogram {

Histogram of vmdisk unmap scheduler queue depth:Histogram {

[root@sc2esx12:~]
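The vSAN DOM client counters are cumulative as well, so a before/after difference isolates the rebalance. A Python sketch with a hypothetical `parse_counters` helper, fed with the two “Total” lines from the outputs above; it assumes the counters on sc2esx12 captured this VM's unmaps and that nothing else unmapped during the window. The difference works out to 1,372 unmaps and 644,170,645,504 bytes, which is exactly 614,329 MiB, the same figure lsdg reported as Free_MB for SLOB_DG after the drop.

```python
def parse_counters(text: str) -> dict[str, int]:
    """Keep only 'name:integer' lines from 'vsish -e get .../clientStats' output."""
    counters = {}
    for line in text.splitlines():
        key, sep, value = line.rpartition(":")
        value = value.strip()
        if sep and value.isdigit():  # skips 'Histogram {' lines and shell prompts
            counters[key.strip()] = int(value)
    return counters


before = parse_counters("Total number of unmaps:1822\nTotal unmap bytes:1288413106176")
after = parse_counters("Total number of unmaps:3194\nTotal unmap bytes:1932583751680")
delta_unmaps = after["Total number of unmaps"] - before["Total number of unmaps"]
delta_bytes = after["Total unmap bytes"] - before["Total unmap bytes"]
print(delta_unmaps, delta_bytes, delta_bytes // 2**20)  # 1372 644170645504 614329
```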

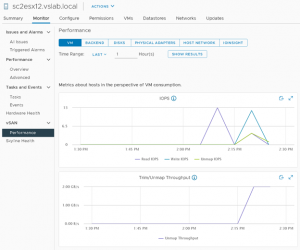

From the web client, we can also observe the UNMAP throughput for the dead space reclamation.

UNMAP Operation on ‘OraVVOL’ vVOL datastore

Repeat the same steps as above.

Check that the device is Linux SPC-4 compatible and thin-provisioned.

[root@oracle19c-pmem ~]# sg_inq -d /dev/sdc1

standard INQUIRY:

PQual=0 Device_type=0 RMB=0 version=0x06 [SPC-4]

[AERC=0] [TrmTsk=0] NormACA=0 HiSUP=0 Resp_data_format=2

SCCS=0 ACC=0 TPGS=0 3PC=0 Protect=0 [BQue=0]

EncServ=0 MultiP=0 [MChngr=0] [ACKREQQ=0] Addr16=0

[RelAdr=0] WBus16=1 Sync=1 Linked=0 [TranDis=0] CmdQue=1

length=36 (0x24) Peripheral device type: disk

Vendor identification: VMware

Product identification: Virtual disk

Product revision level: 2.0

No version descriptors available

[root@oracle19c-pmem ~]#

[root@oracle19c-pmem ~]# sg_vpd --page=lbpv /dev/sdc1

Logical block provisioning VPD page (SBC):

Unmap command supported (LBPU): 1

Write same (16) with unmap bit supported (LBWS): 1

Write same (10) with unmap bit supported (LBWS10): 1

Logical block provisioning read zeros (LBPRZ): 0

Anchored LBAs supported (ANC_SUP): 0

Threshold exponent: 1

Descriptor present (DP): 0

Provisioning type: 2

[root@oracle19c-pmem ~]#

- Install the Oracle workload generator SLOB

Before dropping the SLOB user and tablespace, the ASM disk group total and free space statistics are as below:

Total_MB=614399

Free_MB=152

ASMCMD> lsdg

State Type Rebal Sector Logical_Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED EXTERN N 512 512 4096 4194304 511996 213720 0 213720 0 N DATA_DG/

MOUNTED EXTERN N 512 512 4096 1048576 102399 7888 0 7888 0 N REDO_DG/

MOUNTED EXTERN N 512 512 4096 1048576 614399 152 0 152 0 N SLOB_DG/

ASMCMD>

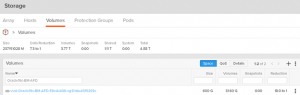

Before dropping the SLOB schema and tablespace, the vVOL vmdk statistics from the Pure GUI are as shown below:

The size of the vVOL is 600 G, but the actual allocated space is 31.6 G.

- Drop the SLOB schema and tablespace and record the observation

After dropping the SLOB user and tablespace, the ASM disk group total and free space statistics are as below:

Total_MB=614399

Free_MB=614329

ASMCMD> lsdg

State Type Rebal Sector Logical_Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED EXTERN N 512 512 4096 4194304 511996 213720 0 213720 0 N DATA_DG/

MOUNTED EXTERN N 512 512 4096 1048576 102399 7888 0 7888 0 N REDO_DG/

MOUNTED EXTERN N 512 512 4096 1048576 614399 614329 0 614329 0 N SLOB_DG/

ASMCMD>

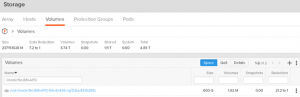

After dropping the SLOB schema and tablespace, the vVOL vmdk statistics from the Pure GUI are as shown below:

The size of the vVOL is 600 G, and the actual allocated space is still 31.6 G.

- Perform the ASM disk group SLOB_DG rebalance with the compact option and observe again.

After performing the Oracle ASM disk group rebalance with the compact option, the vVOL vmdk statistics from the Pure GUI are as shown below:

The size of the vVOL is 600 G, but the actual allocated space is now 1.92 M: the dead space was successfully reclaimed.

Summary

The ability to release the dead space of Oracle workloads depends on:

- How Oracle ASM reports the discarded space to the GOS, how the GOS reports the discarded space to underlying VMware layer and eventually how VMware vSphere reports the discarded space to the Storage array

- This blog focuses on the Oracle ASM Filter Driver (ASMFD) capability to report the discarded or free space on thin-provisioned vmdks to the GOS, from where it is eventually reported to the VMware datastores and the underlying storage array

- The test steps for space reclamation on a VMware VMFS6 FC block datastore backed by Pure Storage FlashArray, a vSAN datastore, and a vVOL datastore backed by Pure Storage FlashArray are:

  - Add a 600 GB thin-provisioned vmdk from the FC block datastore on Pure Storage to the VM at SCSI 3:0

  - Create a new ASM disk group called SLOB_DG with the ‘THIN_PROVISIONED’ attribute using the ASMFD driver and install a 600 GB SLOB schema

  - Drop the SLOB schema and tablespace and record the observation

  - Perform the SLOB ASM disk group rebalance with the compact option and record the observation

  - Repeat the above steps for the vSAN datastore and vVOL datastore use cases

All Oracle on vSphere white papers, including Oracle on VMware vSphere / VMware vSAN / VMware Cloud on AWS, best practices, deployment guides, and the workload characterization guide, can be found at the “Oracle on VMware Collateral – One Stop Shop”.