In part 1 of this blog series, we introduced the motivation for the “Data for Good” solution and its core components. In part 2, we looked at the components of the solution. In this part 3, we bring all of the components together to build the “Data for Good” solution and see it in action.

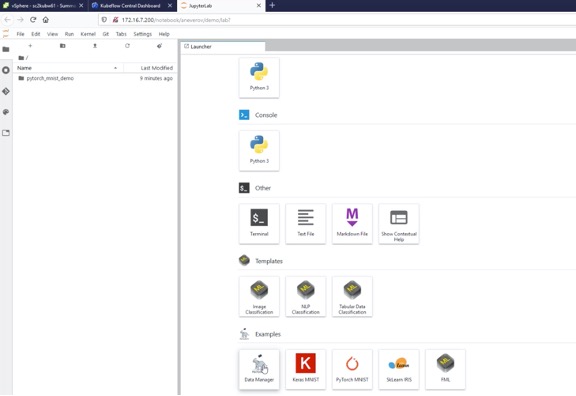

The solution was successfully deployed in the VMware Cloud Solutions Lab (VCSLAB), leveraging its state-of-the-art hardware and software. The deployed solution provides a JupyterHub interface where data scientists can bring their own data and perform data modeling and analysis.

The primary components of the Kubeflow-based platform are shown below.

Figure 11: Components of the vMLP solution for data scientists

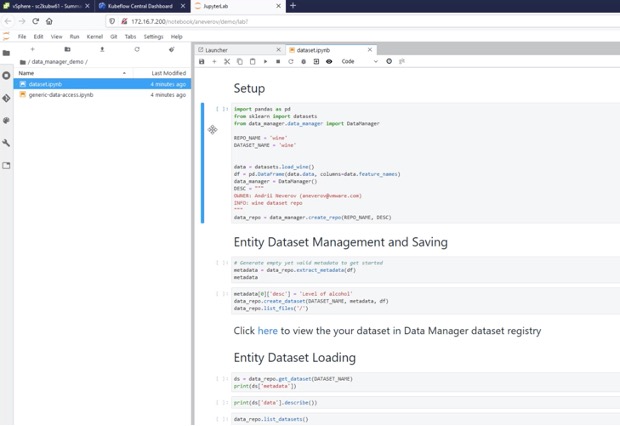

Data scientists can import their own data into the environment for analysis.

Figure 12: Importing data into the platform

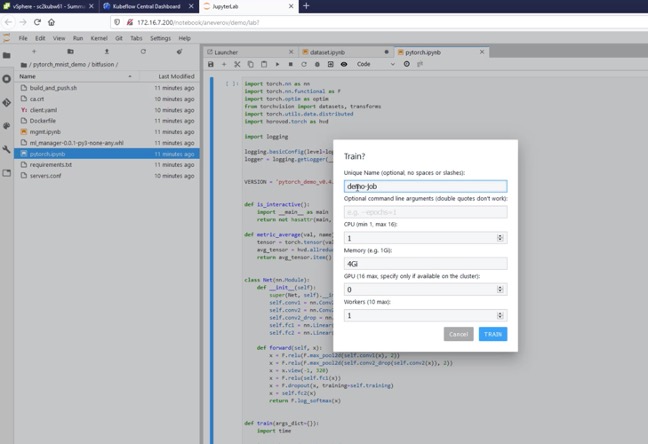
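The post shows the import step through the notebook interface but not what happens to the data afterwards. As a minimal sketch, assuming the uploaded data is a CSV file with a header row and numeric columns, the snippet below parses it with Python's standard `csv` module into rows of floats and skips malformed rows instead of failing the whole import. The function name `load_numeric_csv` and the file name `uploaded.csv` are hypothetical and not part of the Data for Good solution.

```python
import csv
import io
from typing import Dict, List, TextIO


def load_numeric_csv(fileobj: TextIO) -> List[Dict[str, float]]:
    """Parse a CSV with a header row into a list of {column: float} rows.

    Hypothetical helper: rows with a blank, non-numeric, missing, or extra
    value are skipped rather than aborting the whole import.
    """
    rows = []
    for record in csv.DictReader(fileobj):
        try:
            rows.append({col: float(val) for col, val in record.items()})
        except (TypeError, ValueError):
            # ValueError: float("") or float("bad").
            # TypeError: a short row yields None, an overlong row yields a list.
            continue
    return rows


# In a notebook you would open the uploaded file (hypothetical name), e.g.:
#   with open("uploaded.csv", newline="") as f:
#       rows = load_numeric_csv(f)
sample = io.StringIO("x,y\n1,3\n2,5\nbad,7\n3,7\n")
rows = load_numeric_csv(sample)
print(len(rows))  # 3: the "bad" row is skipped
```

Skipping bad rows keeps an exploratory import moving; for production pipelines you would likely want to log or count the rejected rows instead of dropping them silently.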

After the data is imported, it can be modeled with machine learning frameworks such as PyTorch, as shown below.

Figure 13: Modeling the data with PyTorch

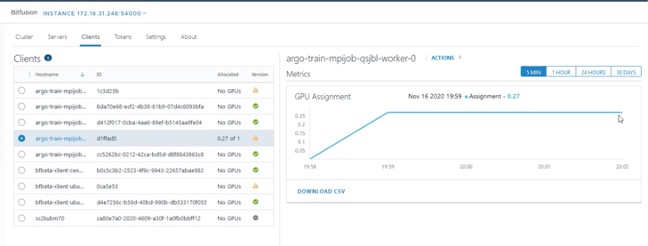

Model training uses GPUs in the backend, accessed over the network through VMware vSphere Bitfusion.

Figure 14: GPU utilization during model training

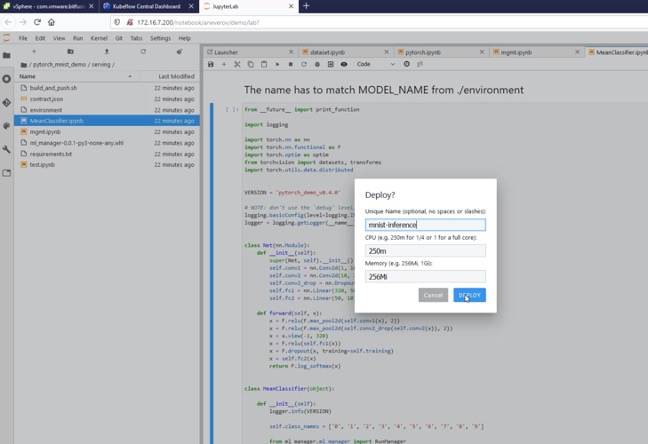

By iterating through multiple models, data scientists eventually arrive at the best fit. That model can then be packaged and deployed for inference in production.

Figure 15: Deploying trained models for inference
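The post does not say how candidate models were compared before deployment. As a minimal sketch, assuming a simple hold-out validation split with mean squared error as the metric, the snippet below fits each candidate on training data, scores it on validation data, and keeps the lowest-error model. The two toy candidates (a constant-mean baseline and a least-squares line) and all function names are hypothetical stand-ins for the PyTorch models a data scientist would actually train.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence, Tuple

Model = Callable[[float], float]
Data = Tuple[Sequence[float], Sequence[float]]


def fit_mean(xs: Sequence[float], ys: Sequence[float]) -> Model:
    """Baseline: always predict the training mean of y."""
    c = mean(ys)
    return lambda x: c


def fit_linear(xs: Sequence[float], ys: Sequence[float]) -> Model:
    """Ordinary least-squares line y = intercept + slope * x."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    return lambda x: intercept + slope * x


def mse(model: Model, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Mean squared error of a model on a dataset."""
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))


def select_best(
    candidates: Dict[str, Callable[..., Model]], train: Data, val: Data
) -> Tuple[str, Dict[str, float]]:
    """Fit every candidate on train, score on val, return the best name and all scores."""
    scores = {name: mse(fit(*train), *val) for name, fit in candidates.items()}
    best = min(scores, key=scores.get)
    return best, scores


train = ([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])  # toy data following y = 2x + 1
val = ([5, 6], [11, 13])
best, scores = select_best({"mean": fit_mean, "linear": fit_linear}, train, val)
print(best, scores)
```

Scoring on data the model was not fitted on is what makes the comparison meaningful; in practice k-fold cross-validation or a separate test set would give a more reliable estimate than a single small hold-out split.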

By building out this real world solution, we have effectively shown the following:

- VMware Cloud Foundation (VCF) can be used as a force for good

- Machine Learning platforms like vMLP can effectively run on VCF

- GPUs can be leveraged to speed up data science processing manyfold

- VMware Tanzu-based Kubernetes can be used to run ML/AI workloads

- The Data for Good solution is a good proof point for running AI/ML on VCF

This solution has shown that VMware customers and VMware Cloud Provider Program (VCPP) partners can effectively leverage VCF to deploy a productive data science platform for their end users and customers.