In part 1 of this blog series, we introduced the motivation for the “Data for Good” solution and its core components. In part 2, we look at those components in more detail.

The Data for Good program needs a platform on which data scientists can import and analyze data for non-profits. The data scientists will use this platform to draw insights from the non-profits' critical data and help them better serve their base.

vMLP is an end-to-end ML platform that seamlessly deploys and runs AI/ML workloads on top of VMware infrastructure.

Benefits

The vMLP platform provides the following benefits:

- Enables cost efficient use of shared GPUs for AI/ML workloads

- Reduces the risks of broken Data Science workflows by leveraging well-tested and ready-to-use project templates

- Speeds up development of AI/ML models through end-to-end tooling, including fast and easy model deployment served via a standardized REST API
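To make the last benefit concrete, here is a minimal sketch of how a client might prepare a call to a model served over REST. The endpoint URL and the `instances` payload shape are assumptions for illustration only; the actual route and schema depend on how the model is deployed in your environment.

```python
import json
import urllib.request

# Hypothetical endpoint: the real URL and payload schema depend on how the
# model is deployed in your environment.
ENDPOINT = "http://models.example.internal/predict"


def build_prediction_request(features, url=ENDPOINT):
    """Build (but do not send) a JSON POST request for a deployed model."""
    body = json.dumps({"instances": [features]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_prediction_request([5.1, 3.5, 1.4, 0.2])
print(request.get_method(), request.full_url)

# Sending it would look like this (commented out because the endpoint above
# is a placeholder):
# with urllib.request.urlopen(request, timeout=10) as response:
#     prediction = json.load(response)
```

Because every model sits behind the same kind of HTTP interface, the calling code stays the same when the model behind the endpoint changes.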

Features

The vMLP platform has the following features:

- Deployment of familiar Jupyter Notebook workbenches

- JupyterLab extensions to achieve smooth workflows

- Support for distributed model training leveraging popular OSS frameworks and GPUs

- Storing and reliably tracking experiments for reproducibility

- Efficient turn-around on model testing based on the built-in serving framework

- Multi-cluster Federated ML support

- Sharing metadata about Data Sources to enable team collaboration

Figure 4: The vMLP platform components

How vMLP works

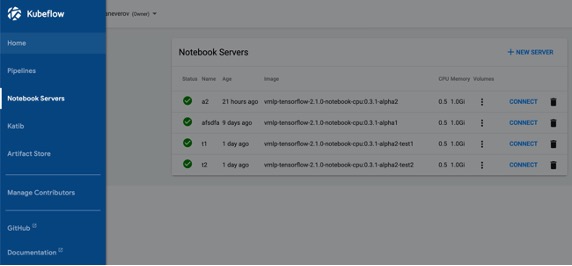

vMLP is based on Kubeflow, which can be compared to the Linux kernel. The kernel is usually not consumed directly but through a distribution such as Ubuntu, which adds more thorough testing, integration with diverse drivers, usability fixes, and so on. In the same way, vMLP is a Kubeflow distribution with an enterprise and usability focus, available as a VMware Fling.

Figure 5: Jupyter Notebook Servers offered with vMLP

vMLP can leverage enterprise-grade VMware Cloud Foundation (VCF) components to give Kubeflow an accelerated infrastructure. vMLP also includes additional services that are not part of Kubeflow, such as the MLflow Model Repository, BentoML, Greenplum, and an R kernel.

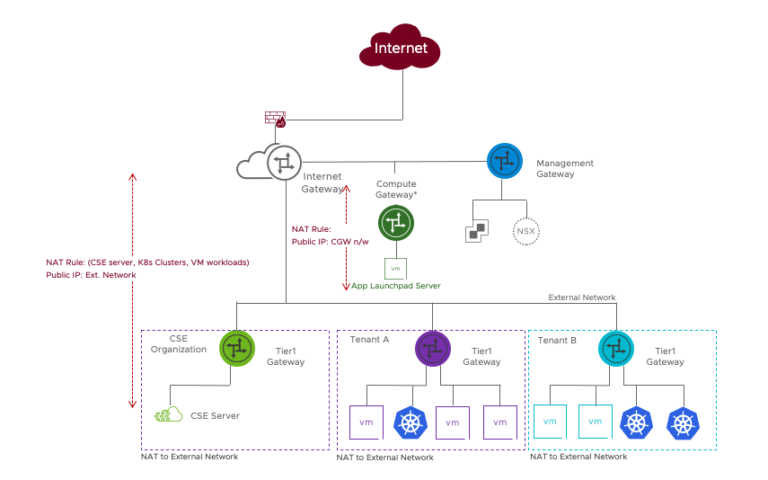

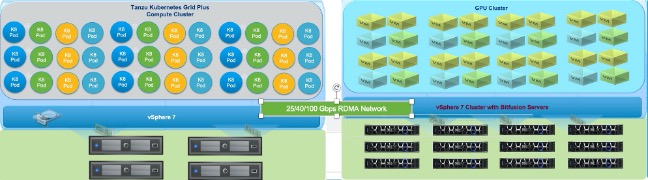

The logical schematic of the infrastructure used in the solution is shown below. A Tanzu Kubernetes based compute cluster provides scalable compute infrastructure, and a vSphere Bitfusion based GPU cluster provides the required GPU resources. Kubernetes workloads use a high-speed, low-latency network to access those GPU resources remotely.

Figure 6: Logical architecture of the solution

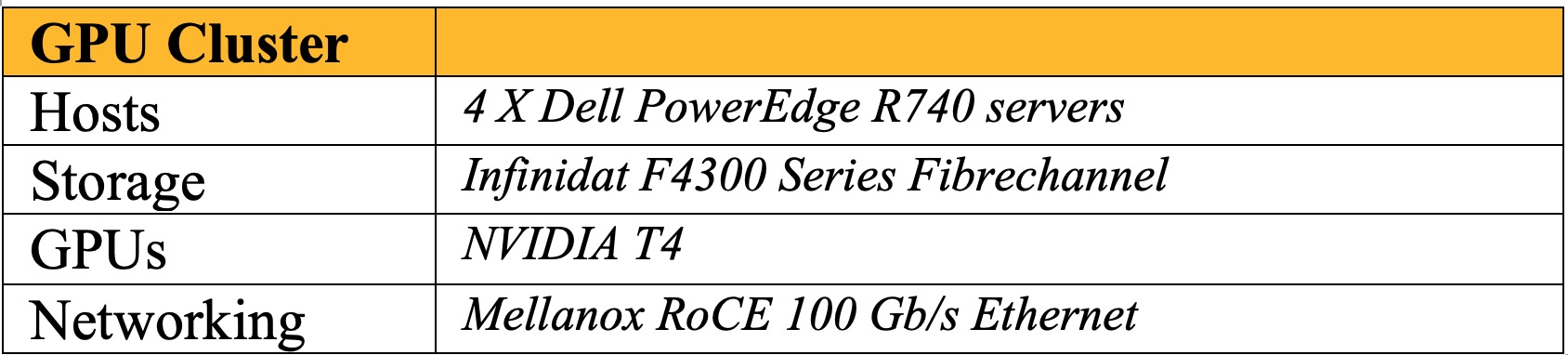

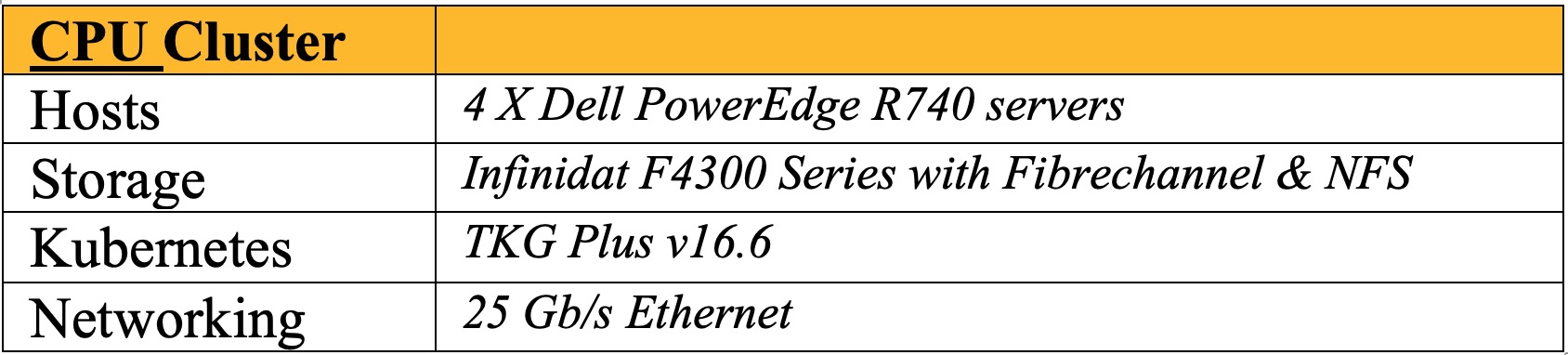

The infrastructure components used in the solution are listed in Table 1 and Table 2.

Table 1: GPU Cluster components used in the solution

Table 2: CPU Cluster components used in the solution

VMware Tanzu Kubernetes Infrastructure:

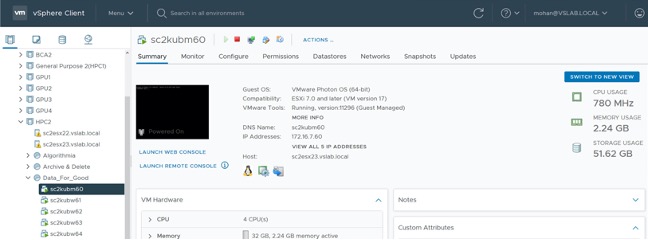

Cloud native applications leverage VMware Tanzu to run Kubernetes natively on VMware. The initial setup of the scalable solution uses one master node and four worker nodes. The profiles of the nodes are shown in Figure 7.

Figure 7: Virtual machines representing the Tanzu Kubernetes

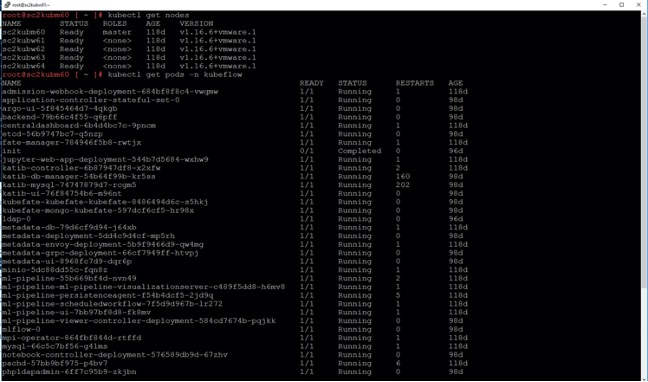

The Kubernetes nodes and the pods used with Kubeflow are shown below. The Kubeflow namespace and its pods, which are deployed by vMLP, provide the capabilities of the machine learning platform used by the data scientists.
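A quick way to check the health of such a deployment is to summarize pod phases from the JSON that `kubectl get pods -n <namespace> -o json` emits. The sketch below assumes the namespace is called `kubeflow` and uses a made-up sample in place of real cluster output; the pod names are illustrative, not taken from this environment.

```python
import json
from collections import Counter


def summarize_pods(pod_list):
    """Count pods per phase from a Kubernetes PodList document."""
    return Counter(
        item.get("status", {}).get("phase", "Unknown")
        for item in pod_list.get("items", [])
    )


# Illustrative sample only. In practice, load the output of
#   kubectl get pods -n kubeflow -o json
# (the namespace name is an assumption).
sample = json.loads("""
{"items": [
  {"metadata": {"name": "example-pod-a"}, "status": {"phase": "Running"}},
  {"metadata": {"name": "example-pod-b"}, "status": {"phase": "Running"}},
  {"metadata": {"name": "example-pod-c"}, "status": {"phase": "Pending"}}
]}
""")

for phase, count in sorted(summarize_pods(sample).items()):
    print(f"{phase}: {count}")
```

Any pod that stays outside the `Running` phase for long is a good first place to look when a platform component is unavailable.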

Figure 8: Kubernetes nodes and Kubeflow pods

GPU Cluster:

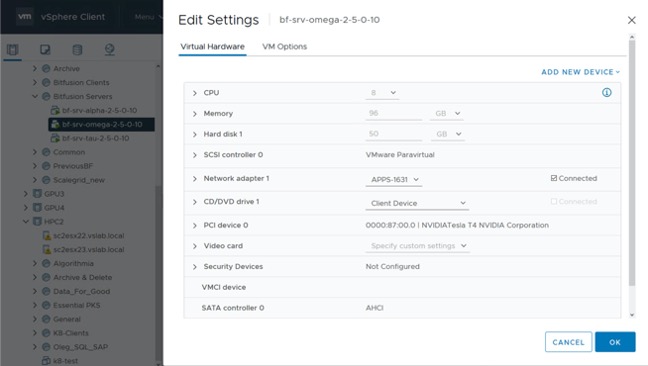

GPU resources are consolidated in a dedicated GPU cluster, leveraging NVIDIA vGPU for local access and Bitfusion for remote access over the network. The GPU hosts have been set up with VMware Bitfusion software for GPU virtualization.
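On the client side, a workload typically requests remote GPUs by wrapping its command with the Bitfusion client. The helper below is a hedged sketch: it assumes the `bitfusion run` subcommand with `-n` (number of GPUs) and `-p` (fraction of each GPU), which you should verify against `bitfusion run --help` for your client version. The `train.py` script name is hypothetical.

```python
import shlex


def bitfusion_run_command(app_args, num_gpus=1, partial=None):
    """Assemble a Bitfusion client command line (flags are assumptions)."""
    cmd = ["bitfusion", "run", "-n", str(num_gpus)]
    if partial is not None:
        # A partial GPU is expressed as a fraction of one device.
        if not 0 < partial <= 1:
            raise ValueError("partial must be in the range (0, 1]")
        cmd += ["-p", str(partial)]
    return cmd + list(app_args)


# Hypothetical training script that asks for half of one remote GPU.
cmd = bitfusion_run_command(["python3", "train.py"], num_gpus=1, partial=0.5)
print(shlex.join(cmd))
```

Requesting a fraction of a GPU rather than a whole device is what allows several notebooks or training jobs to share the same physical card.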

Figure 9: Virtual machine components of a Bitfusion GPU server

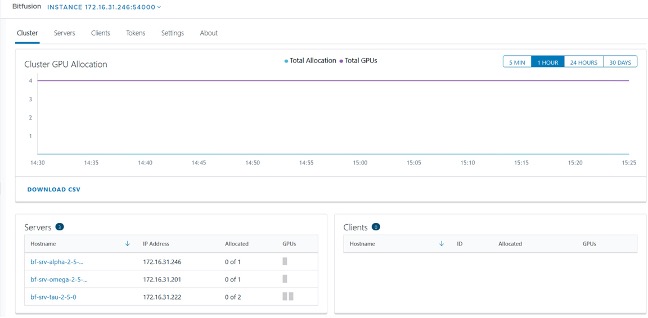

Bitfusion GPU resources can be monitored and managed via the vCenter plugin. Client and server components are seen in the dashboard.

Figure 10: vCenter Bitfusion plugin for monitoring GPU resources

In part 3, we will bring all the components together to build the “Data for Good” solution and then look at the solution in action.