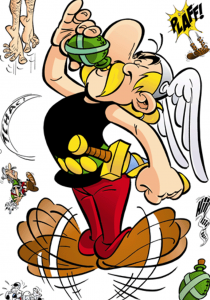

For readers who are familiar with the Asterix and Obelix series, the magic potion refers to the potion brewed by Getafix, which gives the Gaulish warriors superhuman strength.

One of VMware’s magic potions for Oracle workloads is the Paravirtualized SCSI controller, a.k.a. the PVSCSI controller, which accelerates workload performance on the VMware vSphere platform.

This blog is meant to raise awareness of the importance of using PVSCSI adapters with adequate Queue Depth settings for an Oracle workload.

This blog

- is not meant to be a deep dive into PVSCSI and Queue Depth constructs

- contains results that I got in my lab running the SLOB load generator against my workload, which will be very different from any real-world customer workload; your mileage may vary

Remember, any performance data is a result of the combination of hardware configuration, software configuration, test methodology, test tool, and workload profile used in the testing.

VMware Paravirtual SCSI adapter


VMware Paravirtual SCSI adapter is a high-performance storage controller which provides greater throughput and lower CPU use. VMware Paravirtual SCSI controllers are best suited for environments running I/O-intensive applications.

More information on VMware Paravirtual SCSI adapter can be found here.

More information on ‘SCSI, SATA, and NVMe Storage Controller Conditions, Limitations, and Compatibility’ can be found here.

PVSCSI and VMDK Queue Depth

Much has been written and spoken about queue depths, both on the PVSCSI side and the VMDK side.

One of my earliest blogs, ‘Queues, Queues and more Queues’ (2015), attempts to explain the importance of queue depth, especially in a virtualized stack.

So, simply using PVSCSI controllers will not help on its own; the queue depths for the PVSCSI controller and for the vmdks attached to that controller should be set to the maximum. Use all 4 PVSCSI controllers whenever and wherever possible.

More information on how to set the queue depths to the maximum for workloads with intensive I/O patterns can be found in VMware KB 2053145.
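For a Linux guest such as the OEL VM used later in this blog, the approach in KB 2053145, as we understand it, is to pass larger values to the `vmw_pvscsi` driver parameters `cmd_per_lun` (per-device queue depth) and `ring_pages` (request ring size, which bounds the adapter queue depth). The sketch below only renders those settings as text; the helper names are hypothetical, and the values 254 and 32 are our reading of the KB, so confirm them against the KB for your OS release before applying them.

```python
# Hypothetical helper: renders vmw_pvscsi queue depth settings as text.
# cmd_per_lun (per-device queue depth) and ring_pages (request ring size)
# are vmw_pvscsi driver parameters; 254 and 32 are our reading of
# KB 2053145. Verify against the KB before applying.
PVSCSI_OPTIONS = {"cmd_per_lun": 254, "ring_pages": 32}


def modprobe_line(options=None):
    """Line for a file under /etc/modprobe.d/."""
    options = PVSCSI_OPTIONS if options is None else options
    # Sort the keys so the output is deterministic.
    args = " ".join(f"{k}={v}" for k, v in sorted(options.items()))
    return f"options vmw_pvscsi {args}"


def kernel_boot_args(options=None):
    """Same settings expressed as kernel boot parameters."""
    options = PVSCSI_OPTIONS if options is None else options
    return " ".join(f"vmw_pvscsi.{k}={v}" for k, v in sorted(options.items()))


if __name__ == "__main__":
    print(modprobe_line())     # options vmw_pvscsi cmd_per_lun=254 ring_pages=32
    print(kernel_boot_args())  # vmw_pvscsi.cmd_per_lun=254 vmw_pvscsi.ring_pages=32
```

Either form only takes effect once the driver is reloaded, which in practice usually means a VM reboot when the boot disk itself sits on a PVSCSI controller.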

Oracle ASM Diskgroups and ASM Disks

Oracle ASM is a volume manager and a file system for Oracle Database files that supports single-instance Oracle Database and Oracle Real Application Clusters (Oracle RAC) configurations. Oracle ASM uses disk groups to store data files; an Oracle ASM disk group is a collection of disks that Oracle ASM manages as a unit.

Keep in mind that Oracle ASM provides both mirroring (except with External redundancy) and striping (coarse/fine) right out of the gate.
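To illustrate why striping spreads load, here is a deliberately simplified model in which a file's extents are assigned to the disks of a diskgroup round-robin. This is an assumption for illustration only; real ASM allocation also takes free space and failure groups into account. The disk names are taken from the test setup later in this blog.

```python
from collections import Counter


def place_extents(num_extents, disks):
    """Simplified model: assign a file's extents to disks round-robin.
    Real ASM allocation also weighs free space and failure groups."""
    return [disks[i % len(disks)] for i in range(num_extents)]


def extents_per_disk(num_extents, disks):
    """Count how many extents (and so, roughly, how much I/O) each disk gets."""
    return dict(Counter(place_extents(num_extents, disks)))


if __name__ == "__main__":
    print(extents_per_disk(1000, ["DATA_2TB_DISK01"]))
    # {'DATA_2TB_DISK01': 1000}
    print(extents_per_disk(1000, ["DATA_1TB_DISK01", "DATA_1TB_DISK02"]))
    # {'DATA_1TB_DISK01': 500, 'DATA_1TB_DISK02': 500}
```

With one disk every extent, and therefore every I/O against the file, lands on the same vmdk; with two disks the same work is split between them.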

More information on Oracle ASM can be found here.

Single v/s Multiple ASM Disks in an ASM diskgroup

As the Global Oracle Practice Lead for VMware, I have a unique opportunity to speak to customers across all 4 GEOs. Typical questions I get from customers and the field:

- What should be the ideal size of an ASM Disk?

- How many ASM Disks should I have in an ASM diskgroup?

- Should we go with 1 large ASM disk or multiple smaller ASM Disks in an ASM diskgroup?

Before we attempt to answer any/all of the above questions, keep in mind, every workload is born different!!

Different customers have

- different database workload profiles

- different workload requirements

- different business SLAs / RTO / RPO etc

- different database standards

- different infrastructure standards

and so, what works for Customer 1 may not work for Customer 2.

Let us attempt to answer some of the above questions:

1) What should be the ideal size of an ASM Disk?

It depends!! Oracle does not have a recommended size for an ASM Disk.

Even before Oracle ASSM was introduced in version 9i, a question that remained among Oracle DBAs was: what is the ideal size of a table extent? It depends. The standard I adopted at the time was one that suited my workload: extent sizes of 128K, 1M and 128M for small, medium and large tables.

For example, some customers have adopted ASM disk standards of 500GB, 1TB and 2TB for small, medium and large ASM disks in their database deployments. Does that work? Sure it does, at least for that customer, because they know their workload and requirements much better than anyone else.
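As a hypothetical illustration of such a standard, the sketch below maps the small, medium and large tiers from the example above to disk sizes (treating 1TB as 1024 GB, an assumption) and works out how many ASM disks of a given tier a diskgroup would need to reach a target capacity. It ignores ASM redundancy and free-space headroom, and the function and constant names are made up for this example.

```python
import math

# Hypothetical tier standard based on the example above (1TB taken as 1024 GB).
ASM_DISK_TIERS_GB = {"small": 500, "medium": 1024, "large": 2048}


def disks_needed(required_gb, tier):
    """Number of ASM disks of the given tier needed to reach required_gb.
    Ignores ASM redundancy and free-space headroom."""
    if required_gb <= 0:
        raise ValueError("required_gb must be positive")
    return math.ceil(required_gb / ASM_DISK_TIERS_GB[tier])


if __name__ == "__main__":
    for tier in ASM_DISK_TIERS_GB:
        print(tier, disks_needed(3000, tier))
    # prints: small 6 / medium 3 / large 2 (one per line)
```

The same 3000 GB requirement yields anywhere from 2 to 6 vmdks depending on the tier chosen, which is exactly the trade-off discussed in question 3 below.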

2) How many ASM Disks should I have in an ASM diskgroup?

It depends on the ASM disk group redundancy, i.e. how many disk failures the customer is prepared to tolerate.
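A rough sketch of how redundancy drives the disk count, assuming the commonly documented ASM behaviour: External redundancy keeps one copy and relies on the storage array, Normal keeps two copies across at least two failure groups, and High keeps three copies across at least three failure groups. It further assumes one disk per failure group at minimum and ignores the free space ASM needs to re-mirror data after a failure, so treat the result as a floor, not a recommendation.

```python
import math

# Assumed Oracle ASM redundancy characteristics (check the docs for your version).
REDUNDANCY = {
    "EXTERNAL": {"copies": 1, "min_failure_groups": 1, "failure_groups_tolerated": 0},
    "NORMAL": {"copies": 2, "min_failure_groups": 2, "failure_groups_tolerated": 1},
    "HIGH": {"copies": 3, "min_failure_groups": 3, "failure_groups_tolerated": 2},
}


def min_disks(usable_gb, disk_gb, redundancy):
    """Floor on the number of equal-size ASM disks for a diskgroup.
    Assumes one disk per failure group at minimum and ignores the free
    space ASM needs to re-mirror data after a failure."""
    r = REDUNDANCY[redundancy]
    by_capacity = math.ceil(usable_gb * r["copies"] / disk_gb)
    return max(by_capacity, r["min_failure_groups"])


if __name__ == "__main__":
    for level in REDUNDANCY:
        print(level, min_disks(2048, 1024, level))
    # prints: EXTERNAL 2 / NORMAL 4 / HIGH 6 (one per line)
```

Note that even a small diskgroup with High redundancy needs at least 3 disks under these assumptions, because the failure group minimum dominates the capacity calculation.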

3) Should we go with 1 large ASM disk or multiple smaller ASM Disks in an ASM diskgroup?

Using multiple ASM disks helps by

- improving workload performance, as Oracle ASM striping has two primary purposes: to balance loads across all of the disks in a disk group and to reduce I/O latency

- improving performance owing to the additional queue depth that is available with multiple vmdks on the VM v/s fewer vmdks on the VM
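To make the queue depth argument concrete, here is a back-of-the-envelope model, not a description of how the PVSCSI driver actually schedules I/O: each vmdk contributes its per-device queue depth, capped per controller by the adapter queue depth. The default values used here (64 per device, 254 per adapter) are assumed PVSCSI defaults; check KB 2053145 and your guest OS for the actual values.

```python
from collections import Counter


def max_outstanding_ios(vmdk_controllers, dev_qd=64, adapter_qd=254):
    """Upper bound on I/Os in flight for a set of vmdks.

    vmdk_controllers: one controller number per vmdk, e.g. [3, 3].
    dev_qd / adapter_qd: assumed per-device and per-adapter queue depths.
    """
    vmdks_per_controller = Counter(vmdk_controllers)
    # Each controller can carry at most adapter_qd outstanding I/Os.
    return sum(min(adapter_qd, n * dev_qd) for n in vmdks_per_controller.values())


if __name__ == "__main__":
    print(max_outstanding_ios([1]))              # 64: one vmdk on SCSI 1
    print(max_outstanding_ios([3, 3]))           # 128: two vmdks on SCSI 3
    print(max_outstanding_ios([3, 3, 3, 3, 3]))  # 254: capped by the adapter
    print(max_outstanding_ios([3, 3, 3, 1, 1]))  # 320: same vmdks over 2 controllers
```

In this model two vmdks double the available queue slots compared to one, and once enough vmdks share a single controller the adapter queue depth becomes the cap, which is one reason to spread vmdks across all 4 controllers. The model only counts queue slots; it does not capture any other per-controller effects.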

On the flip side, keep in mind that the maximum number of PVSCSI adapters per VM is 4 and the maximum number of virtual SCSI targets per PVSCSI adapter is 64, hence the maximum number of virtual SCSI targets per VM = 4 x 64 = 256.

So, we need to strike a fine balance between adding multiple ASM disks/vmdks and running out of virtual SCSI targets for the VM.
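The limit arithmetic above can be turned into a small planning check. This sketch uses only the limits stated above (4 adapters, 64 targets each); the function name is hypothetical, and it is worth confirming the limits in the vSphere configuration maximums for your release. The example input is the disk layout from the test setup in this blog.

```python
MAX_PVSCSI_ADAPTERS = 4
MAX_TARGETS_PER_ADAPTER = 64
MAX_TARGETS_PER_VM = MAX_PVSCSI_ADAPTERS * MAX_TARGETS_PER_ADAPTER  # 256


def remaining_targets(vmdks_per_adapter):
    """Virtual SCSI targets still free on the VM.

    vmdks_per_adapter: dict of adapter number -> number of vmdks attached.
    Raises ValueError if a planned layout breaks a per-adapter limit.
    """
    for adapter, count in vmdks_per_adapter.items():
        if not 0 <= adapter < MAX_PVSCSI_ADAPTERS:
            raise ValueError(f"SCSI {adapter}: only adapters 0-{MAX_PVSCSI_ADAPTERS - 1} exist")
        if count > MAX_TARGETS_PER_ADAPTER:
            raise ValueError(f"SCSI {adapter}: {count} vmdks exceeds {MAX_TARGETS_PER_ADAPTER}")
    return MAX_TARGETS_PER_VM - sum(vmdks_per_adapter.values())


if __name__ == "__main__":
    lab = {0: 2, 1: 2, 2: 1, 3: 2}  # SCSI 0:0-0:1, 1:0-1:1, 2:0, 3:0-3:1
    print(remaining_targets(lab))  # 249
```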

Back to the test results now.

Key points to take away from this blog

This blog focuses on the following specific use cases for testing Oracle workloads with the SLOB load generator:

- Compare performance of using 1 Large ASM disk (vmdk) per ASM diskgroup on SCSI 1 v/s 2 Small ASM disks (vmdk) per ASM diskgroup on SCSI 3

- Compare performance of using 2 Small ASM disks (vmdk) per ASM diskgroup on SCSI 3 v/s 2 Small ASM disks (vmdk) per ASM diskgroup split across 2 SCSI controllers, SCSI 3 and SCSI 1

Test Setup

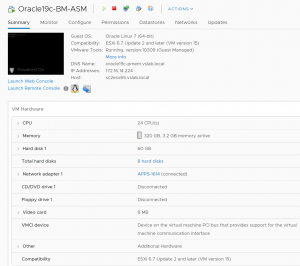

VM ‘Oracle19c-BM-ASM’ was created with 24 vCPUs and 320 GB memory, with storage on an All-Flash Pure array, and Oracle SGA & PGA set to 64G and 6G respectively.

The OS was OEL 7.6 UEK with Oracle 19c Grid Infrastructure & RDBMS installed. Oracle ASM was the storage platform with Oracle ASMLIB. Oracle ASMFD can also be used instead of Oracle ASMLIB.

SLOB 2.5.2.4 was chosen as the load generator for this exercise, and the SLOB SCALE parameter was set to 1530G.

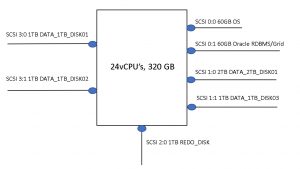

The following VM disks were allocated:

- SCSI 0:0 60G for O/S

- SCSI 0:1 60G for Oracle RDBMS/Grid Infrastructure binaries

- SCSI 1:0 2TB for ASM disk DATA_2TB_DISK01

- SCSI 1:1 1TB for ASM disk DATA_1TB_DISK03

- SCSI 2:0 1TB for ASM disk REDO_DISK02

- SCSI 3:0 1TB for ASM disk DATA_1TB_DISK01

- SCSI 3:1 1TB for ASM disk DATA_1TB_DISK02

Test Cases

1) Single v/s multiple vmdks on the same PVSCSI controller

- SCSI 1:0 2TB for ASM disk DATA_2TB_DISK01 v/s SCSI 3:0 1TB for ASM disk DATA_1TB_DISK01 & SCSI 3:1 1TB for ASM disk DATA_1TB_DISK02

2) Multiple vmdks on the same PVSCSI controller v/s multiple vmdks on different PVSCSI controllers

- SCSI 3:0 1TB for ASM disk DATA_1TB_DISK01 & SCSI 3:1 1TB for ASM disk DATA_1TB_DISK02 v/s SCSI 3:0 1TB for ASM disk DATA_1TB_DISK01 & SCSI 1:1 1TB for ASM disk DATA_1TB_DISK03
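The two test cases can also be written down as data, which makes it easy to see what changes between the configurations being compared. This is an illustrative sketch; the structure and function names are our own, while the disk names and SCSI slots come from the disk allocation list above.

```python
# ASM disk name -> (controller, unit), from the disk allocation list above.
LAYOUT = {
    "DATA_2TB_DISK01": (1, 0),
    "DATA_1TB_DISK03": (1, 1),
    "REDO_DISK02": (2, 0),
    "DATA_1TB_DISK01": (3, 0),
    "DATA_1TB_DISK02": (3, 1),
}

# Test case -> (1st configuration, 2nd configuration)
TEST_CASES = {
    "Test Case 1": (["DATA_2TB_DISK01"], ["DATA_1TB_DISK01", "DATA_1TB_DISK02"]),
    "Test Case 2": (["DATA_1TB_DISK01", "DATA_1TB_DISK02"],
                    ["DATA_1TB_DISK01", "DATA_1TB_DISK03"]),
}


def describe(disks):
    """Summarize how many vmdks and controllers a configuration uses."""
    controllers = {LAYOUT[d][0] for d in disks}
    slots = ", ".join(f"SCSI {LAYOUT[d][0]}:{LAYOUT[d][1]}" for d in disks)
    return f"{len(disks)} vmdk(s) on {len(controllers)} controller(s): {slots}"


if __name__ == "__main__":
    for name, (first, second) in TEST_CASES.items():
        print(name)
        print("  1st:", describe(first))
        print("  2nd:", describe(second))
```

Test Case 1 changes only the number of vmdks (1 v/s 2, each configuration on one controller), while Test Case 2 keeps 2 vmdks and changes only the number of controllers (1 v/s 2).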

The illustration below summarizes the VM vmdk setup above.

Test Results

Test Case 1

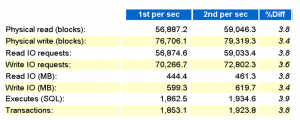

Compare performance of using 1 Large ASM disk (vmdk) per ASM diskgroup on SCSI 1 v/s 2 Small ASM disks (vmdk) per ASM diskgroup on SCSI 3

Compare performance of

- SCSI 1:0 2TB for ASM disk DATA_2TB_DISK01 v/s SCSI 3:0 1TB for ASM disk DATA_1TB_DISK01 & SCSI 3:1 1TB for ASM disk DATA_1TB_DISK02

The database AWR reports from running the same workload under 2 different configurations can be found below.

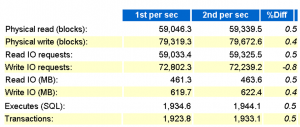

We can see that the 2nd configuration (2 vmdks on a single PVSCSI controller) shows better overall performance than the 1st configuration (1 vmdk on a single PVSCSI controller).

The VM vmdk statistics for the 2 configurations also show that the 2nd configuration (2 vmdks on a single PVSCSI controller) performs better overall than the 1st configuration (1 vmdk on a single PVSCSI controller).

Test Case 2

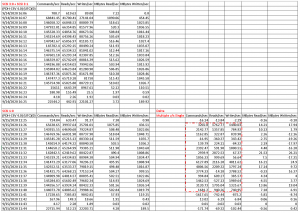

Compare performance of using 2 Small ASM disks (vmdk) per ASM diskgroup on SCSI 3 v/s using 2 Small ASM disks (vmdk) per ASM diskgroup split across 2 SCSI controllers, SCSI 3 and SCSI 1

Compare performance of

- SCSI 3:0 1TB for ASM disk DATA_1TB_DISK01 & SCSI 3:1 1TB for ASM disk DATA_1TB_DISK02 v/s SCSI 3:0 1TB for ASM disk DATA_1TB_DISK01 & SCSI 1:1 1TB for ASM disk DATA_1TB_DISK03

The database AWR reports from running the same workload under 2 different configurations can be found below.

We can see that the 2nd configuration (2 vmdks on 2 separate PVSCSI controllers) shows better overall performance than the 1st configuration (2 vmdks on the same PVSCSI controller).

Test Results Analysis

1) Test Case 1 – Comparing performance of using 1 Large ASM disk (vmdk) per ASM diskgroup on SCSI 1 v/s 2 Small ASM disks (vmdk) per ASM diskgroup on SCSI 3

- we found that the 2nd configuration (2 vmdks on a single PVSCSI controller) shows better overall performance than the 1st configuration (1 vmdk on a single PVSCSI controller).

2) Test Case 2 – Comparing performance of using 2 Small ASM disks (vmdk) per ASM diskgroup on SCSI 3 v/s using 2 Small ASM disks (vmdk) per ASM diskgroup split across 2 SCSI controllers, SCSI 3 and SCSI 1

- we found that the 2nd configuration (2 vmdks on 2 separate PVSCSI controllers) shows better overall performance than the 1st configuration (2 vmdks on the same PVSCSI controller).

On top of that, Oracle ASM provides both mirroring (except with External redundancy) and striping (coarse/fine) right out of the gate.

Remember, any performance data is a result of the combination of hardware configuration, software configuration, test methodology, test tool, and workload profile used in the testing. The performance improvement I got with my workload in my lab is therefore in no way representative of any real production workload; the improvement for real-world workloads will differ and could well be larger.

Summing up the findings, we recommend using PVSCSI adapters with increased queue depth for database workloads, and testing your workloads with a single ASM disk per disk group v/s multiple ASM disks per disk group to find the sweet spot, while ensuring that you do not reach the maximum limit of virtual SCSI disks per VM.

Summary

- This blog is meant to raise awareness of the importance of using PVSCSI adapters with adequate Queue Depth settings for an Oracle workload.

- This blog is not meant to be a deep dive into PVSCSI and Queue Depth constructs

- This blog contains results that I got in my lab running the SLOB load generator against my workload, which will be very different from any real-world customer workload; your mileage may vary

- Remember, any performance data is a result of the combination of hardware configuration, software configuration, test methodology, test tool, and workload profile used in the testing

All Oracle on vSphere white papers, including Oracle licensing on vSphere/vSAN, Oracle best practices, RAC deployment guides and the workload characterization guide, can be found at the URL below

Oracle on VMware Collateral – One Stop Shop

https://blogs.vmware.com/apps/2017/01/oracle-vmware-collateral-one-stop-shop.html