In part 1 of this series, we introduced the challenges of GPU usage and the features and components that can serve as the building blocks for GPU as a service. In part 2, we looked at how VMware Cloud Foundation components can be assembled to provide GPUs as a service to your end users. In this third and final part, we look at how a virtualized GPU cluster is designed for a set of sample requirements.

Sample Enterprise GPU Cluster Design:

The scope of this sizing example is limited to GPU requirements. All other infrastructure components, such as CPU, memory, storage and networking, can be sized using standard capacity analysis and design.

GPU-Specific Requirements:

Capacity analysis identified the following requirements for GPU compute capacity:

- The customer requires a total capacity of 28 GPUs

- The maximum number of GPUs required by a single user is 4

Customer Use Cases:

- High-performance researchers who each need up to 4 GPUs

- Data scientists needing full or partial GPUs to perform analysis

- HPC users needing full or partial GPUs for their applications

- Developers requiring full or partial GPUs for their development activities

- Distributed machine learning users who need multiple GPU resources spread across many worker nodes

Workload Design:

High-Performance GPU Users:

Some data scientists and researchers need more than one GPU at a time, and for best performance all of these GPUs should reside in a single physical node. Certain HPC applications need low-latency GPU access over prolonged periods of time. The virtual machines used by these researchers and by specialized HPC applications run on the GPU cluster itself and use NVIDIA vComputeServer for GPU allocation.

Data Science, HPC & Developer Users:

These users need a single full GPU or a partial GPU. To give them flexible access to these resources from anywhere in the data center, they should use Bitfusion to access their GPUs over the high-speed RDMA network.

Distributed Machine Learning:

These users need multiple partial or full GPUs distributed across many worker nodes. Because each worker node gets its own full or partial GPU, Bitfusion-based access over the high-speed RDMA network is well suited to this use case.

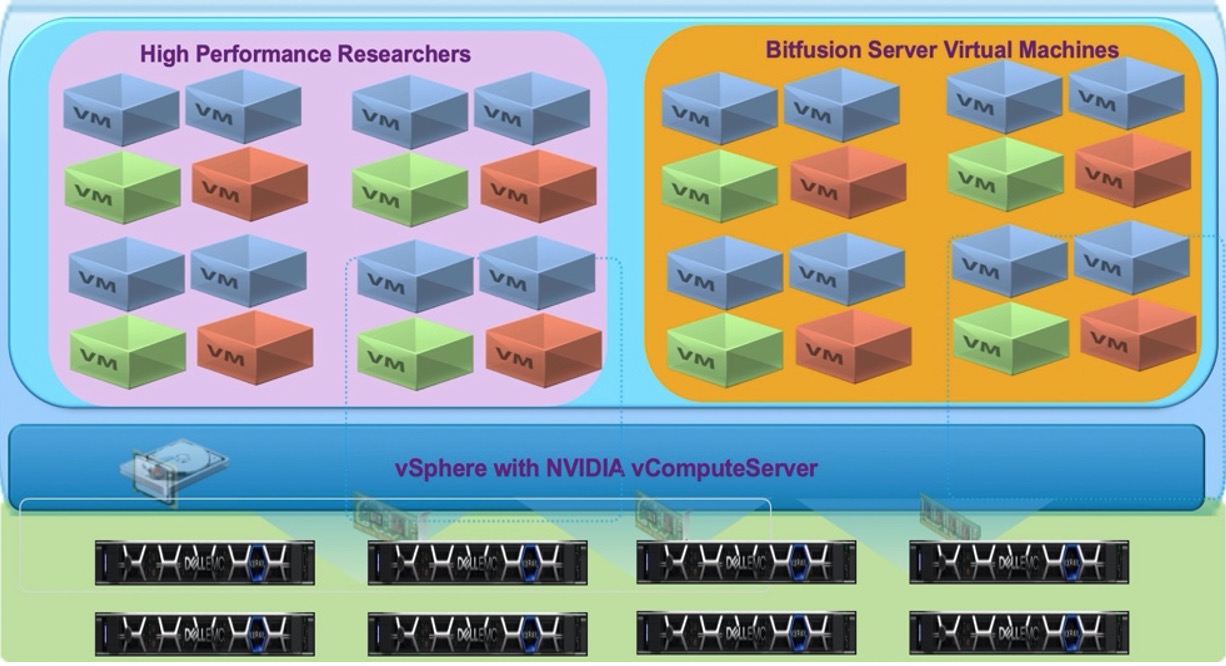
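The workload placement rules above can be summarized as a simple decision function. This is a hypothetical sketch, not a VMware or NVIDIA API: `GpuRequest`, `AccessPath` and `choose_access_path` are illustrative names, and the `latency_sensitive` flag is an assumption standing in for HPC applications that need sustained low-latency GPU access.

```python
from dataclasses import dataclass
from enum import Enum


class AccessPath(Enum):
    # Hypothetical labels for the two access paths described in the workload design.
    LOCAL_VGPU = "vComputeServer vGPU on the GPU cluster"
    BITFUSION_REMOTE = "Bitfusion over the RDMA network"


@dataclass
class GpuRequest:
    gpus: float                      # full (1, 2, ...) or partial (e.g. 0.5) GPUs per worker
    worker_nodes: int = 1            # > 1 for distributed machine learning jobs
    latency_sensitive: bool = False  # assumed flag for latency-sensitive HPC applications


def choose_access_path(req: GpuRequest, gpus_per_host: int = 4) -> AccessPath:
    # All GPUs for one VM or worker must fit within a single physical host.
    if req.gpus > gpus_per_host:
        raise ValueError("request exceeds the GPUs available in a single host")
    # Distributed jobs: each worker gets its own full or partial GPU over the network.
    if req.worker_nodes > 1:
        return AccessPath.BITFUSION_REMOTE
    # Multi-GPU or latency-sensitive users run VMs on the GPU cluster itself.
    if req.gpus > 1 or req.latency_sensitive:
        return AccessPath.LOCAL_VGPU
    # Everyone else (single or partial GPU) uses Bitfusion from anywhere in the data center.
    return AccessPath.BITFUSION_REMOTE


print(choose_access_path(GpuRequest(gpus=4)).name)    # prints LOCAL_VGPU
print(choose_access_path(GpuRequest(gpus=0.5)).name)  # prints BITFUSION_REMOTE
```

Checking the distributed case first mirrors the design above: even a full-GPU worker is served over the network when it is one of many worker nodes.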

Cluster Design:

The GPU cluster is deployed in a VCF workload domain. The design incorporates the requirements of the above use cases, with some allowance for high availability and maintenance.

Design Decisions:

- Each physical node has 4 GPUs, so that a high-performance researcher's 4 GPUs can all reside on a single node.

- To provide a capacity of 28 GPUs, a minimum of 7 hosts with 4 GPUs each (28 / 4 = 7) is required.

- One additional host is required for high availability and maintenance. To avoid the overhead of adding more hosts, the design accepts the risk of a node failure occurring during a maintenance window.

- The cluster uses eight nodes with 4 GPUs each, providing a total capacity of 32 GPUs. If a node fails, 28 GPUs remain available.

- All GPU allocations in the cluster are made through NVIDIA vComputeServer. Virtual machines with vGPU allocations can be migrated from one node to another with vMotion, which is supported. This makes maintenance of the GPU cluster feasible.

- Bitfusion servers are consumers of GPUs like any other, with NVIDIA vComputeServer controlling all allocations. vComputeServer reserves and allocates a subset of the GPUs to Bitfusion based on user requirements.

- High-speed networking with PVRDMA minimizes latency for Bitfusion access to GPUs over the network.
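The sizing arithmetic in the design decisions above can be sketched as a small Python function. This is a minimal illustration, assuming one spare host (N+1) and that every host carries the same number of GPUs; the names `size_gpu_cluster` and `ClusterSizing` are hypothetical and not part of any VMware or NVIDIA tool.

```python
import math
from dataclasses import dataclass


@dataclass
class ClusterSizing:
    hosts_for_capacity: int
    total_hosts: int
    total_gpus: int
    gpus_after_one_host_failure: int


def size_gpu_cluster(required_gpus: int, max_gpus_per_user: int,
                     gpus_per_host: int, spare_hosts: int = 1) -> ClusterSizing:
    # A multi-GPU user must fit on one physical node, so a host must hold the largest request.
    if gpus_per_host < max_gpus_per_user:
        raise ValueError("gpus_per_host must be >= max_gpus_per_user")
    # Minimum hosts needed to meet the raw GPU capacity requirement.
    hosts_for_capacity = math.ceil(required_gpus / gpus_per_host)
    # Extra host(s) for high availability and maintenance.
    total_hosts = hosts_for_capacity + spare_hosts
    return ClusterSizing(
        hosts_for_capacity=hosts_for_capacity,
        total_hosts=total_hosts,
        total_gpus=total_hosts * gpus_per_host,
        gpus_after_one_host_failure=(total_hosts - 1) * gpus_per_host,
    )


sizing = size_gpu_cluster(required_gpus=28, max_gpus_per_user=4, gpus_per_host=4)
print(sizing)
# ClusterSizing(hosts_for_capacity=7, total_hosts=8, total_gpus=32, gpus_after_one_host_failure=28)
```

Like the design itself, this sketch does not account for a second failure during maintenance; covering that case would mean raising `spare_hosts`.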

Figure 16: Sample Enterprise GPU Cluster Logical Architecture

The Future of Machine Learning Is Distributed:

High-quality predictions for complex applications require a substantial amount of training data. Although smaller machine learning models can be trained with modest amounts of data, the data and memory required to train larger models such as neural networks grow rapidly with the number of parameters. Vendors respond by packing more and more GPUs into physical servers to provide processing capacity for these larger models, but this approach is very expensive and not optimal. The machine learning paradigm is shifting toward scaling out, distributing the processing workload across multiple machines. Distributed machine learning frameworks such as Horovod and distributed TensorFlow will dominate the future landscape as data volumes and model complexity continue to grow.
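To illustrate what scaling out means in practice, the following sketch simulates data-parallel training in a single Python process: each worker computes a gradient on its own shard of the data, and the gradients are averaged (the role an allreduce plays in frameworks such as Horovod) so that every worker applies the same update. It is a toy model (a one-parameter linear fit) and does not use Horovod's or TensorFlow's actual APIs; `local_gradient`, `allreduce_mean` and `train_data_parallel` are hypothetical names.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # one (x, y) training example


def local_gradient(w: float, shard: List[Sample]) -> float:
    """Gradient of the mean squared error of y_hat = w * x over one worker's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def allreduce_mean(values: List[float]) -> float:
    """Stand-in for an allreduce: every worker receives the mean of all workers' values."""
    return sum(values) / len(values)


def train_data_parallel(data: List[Sample], num_workers: int,
                        lr: float = 0.01, steps: int = 200) -> float:
    if not 0 < num_workers <= len(data):
        raise ValueError("need at least one sample per worker")
    # Round-robin split of the dataset: one shard per worker.
    shards = [data[i::num_workers] for i in range(num_workers)]
    w = 0.0  # the model weight is replicated on every worker
    for _ in range(steps):
        # On a real cluster each worker computes its gradient in parallel on its own GPU.
        grads = [local_gradient(w, shard) for shard in shards]
        # Averaging the gradients keeps all replicas identical after the update.
        w -= lr * allreduce_mean(grads)
    return w


data = [(float(x), 3.0 * x) for x in range(1, 9)]
w = train_data_parallel(data, num_workers=4)
print(round(w, 4))  # prints 3.0
```

Only the gradients cross the network in this scheme, which is why low-latency interconnects such as the RDMA network in the sample design matter for distributed training. With equal-sized shards, as here, the averaged gradient equals the full-dataset gradient.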

Summary

With the rapid increase in the need for GPU computing, enterprises are seeking flexible solutions to meet the needs of data scientists, developers and other HPC users. The virtualized GPU workload domain solution combines the best of VMware virtualization software, Tanzu Kubernetes Grid, vSphere Bitfusion and NVIDIA vComputeServer to provide a robust yet flexible solution for GPU users. This end-to-end solution provides a reference framework to deploy a GPU workload domain to meet the common use cases for machine learning and HPC applications.

VMware Cloud Foundation provides a solid framework with software-defined compute, storage and networking. VMware's support for accelerators such as NVIDIA GPUs, combined with the vComputeServer software, provides vMotion and DRS capabilities for virtual machines that have vGPUs in use. vSphere Bitfusion provides access to GPU resources over the network, allowing remote users to take advantage of centralized GPU resources. The sample GPU cluster design discussed above shows how flexibly the solution accommodates all common GPU use cases.

Call to Action:

- Audit how GPUs are used in your organization's infrastructure!

- Calculate the costs and utilization of the existing GPUs in the environment

- Identify the use cases for GPUs across different groups

- Propose an internal virtualized GPUaaS infrastructure that pools all GPU resources for better utilization and cost optimization.