This series of blog articles presents a set of use cases for deploying machine learning workloads on VMware Cloud on AWS and other VMware Cloud infrastructure. The first article described the use of table-based data, such as that held in relational databases, for classical machine learning on the VMware Cloud platform, with examples of vendor tools executing this kind of ML on VMs in VMware Cloud on AWS. This second article focuses on machine learning model interpretability, or explanation, as a key area that can be supported by tools running on VMware Cloud on AWS. Part 3 of the series is here.

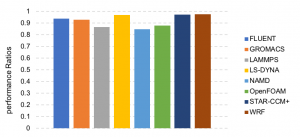

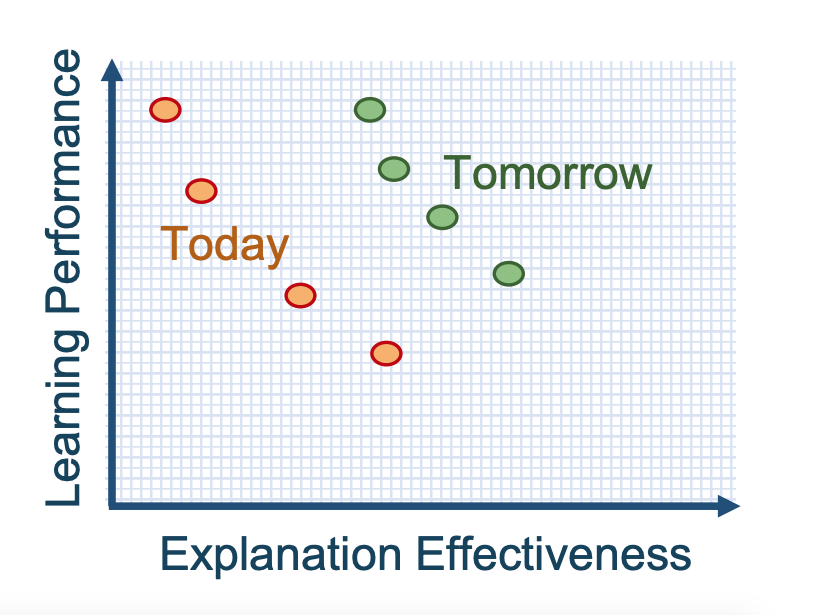

The world of machine learning essentially falls into two general branches – and there is a blending of these going on, naturally. On the one hand, there are the classical statistical algorithms such as generalized linear models (GLM), logistic regression, support vector machines (SVM) and boosted decision trees. On the other hand, there are the models based on deep neural networks (DNNs), which have very high learning performance and can require specialized hardware for acceleration. This article talks about the tradeoff between being able to explain the model clearly to a business person (the X-axis of the graph) and the model’s learning/prediction power and performance, in the context of these two branches of ML.

Source: DARPA Naval Research Laboratory – Explainable Artificial Intelligence – D. Gunning 2017

Many enterprises are doing advanced work with deep neural networks that are powered by hardware accelerators. The value of that approach is clear for solving many problems, especially in image recognition, NLP and other forms of data. However, the models that many commercial companies have already placed into production for business use today are linear models like GLM, boosted decision trees like XGBoost and others from the classic statistical field. This is, to a significant degree, because of the model explanation requirements of the particular industry they operate in.

Why is Model Explanation a Problem?

To quote from M. Tulio Ribeiro’s important paper on this subject, “explaining predictions is an important aspect in getting humans to trust and use machine learning effectively, if the explanations are faithful and intelligible”.

To appreciate the importance of model explanation, let’s say an ML model, embedded in a business application, causes someone to be denied their application for a bank loan. What factors or behavior in the trained ML model caused this recommendation to be made and to be trusted by the banker involved? This is a question that is valid to ask of the business. In regulated industries, where ML is being rapidly adopted, such as finance, healthcare and telecom, there are regulations that demand that these decisions be explained. It is not quite good enough to say that the computer system made that recommendation. The real question is – why did it do that? You can see that this gets even more important if an ML algorithm were part of a health diagnosis or another vital process.

The issue is that it can be difficult to answer these questions in the case of some ML models. It can be particularly difficult to explain the internal workings of ML models that use deep neural networks, because they are so complex and have so many components. Research work is ongoing in that area and there is some progress, but it is still a concern today.

On the other hand, the linear models, and others in the classic statistics area, can be formulated using math and explained to a person who is familiar with that field. We will look at other forms of explanation below. Models based on neural networks are not conducive to that type of explanation – so this presents a problem for the business person who wants to use them. This is the tradeoff of learning power (model training time with large complex data) over model explainability that is shown in the graph above. As you can see in the shift from orange to green data points, there is a move to increase explanation power, but the high powered DNN models are still expected to be hard to explain.

Now we see that, in the ML lifecycle, training a model and deploying it are not the only important parts of a data scientist’s work. Being able to explain why the model is making certain recommendations or predictions is equally important to the project’s success. Business executives have said that unless the model can be explained to a regulator, it will not be placed into production use.

The category of ML that uses the classical statistical methods, like generalized linear models (GLMs), decision trees and others, has a very distinct advantage here for business users and data scientists – its inherent model interpretability. Those statistical methods operate very well on traditional compute systems without needing the power of hardware acceleration. Hence they are a good fit for deployment on VMware Cloud on AWS.

This model explanation requirement alone turns out to be a key success factor today in most enterprises’ adoption of machine learning models.

Explaining by Use of the Classical Models

Frequently, the way out of this explanation dilemma for more complex DNN models is to run a simpler linear model on the same test data and see whether its result comes close to the one generated by the DNN. That simpler model can run on CPUs, on infrastructure separate from the main model’s platform. Comparing the two results then gives us some ground for explanation: where the simple model tracks the complex one closely, the simple model’s weights offer a readable account of what is driving the prediction.
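The comparison described above is often called a surrogate model. The following is a minimal sketch of the idea in plain Python, not any vendor's implementation. The `black_box` function is a made-up stand-in for a complex model, and the feature names `income` and `debt_ratio` are hypothetical. A linear model is fitted to the black box's own outputs, and R² is used as a rough measure of how faithfully the simple model tracks the complex one.

```python
import random

def black_box(income, debt_ratio):
    """Hypothetical stand-in for a complex model's risk score (not a real DNN)."""
    return 0.6 * debt_ratio - 0.3 * income + 0.2 * debt_ratio * debt_ratio

def solve(a, b):
    """Solve the small linear system a x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def fit_linear_surrogate(rows, targets):
    """Ordinary least squares via the normal equations.

    Returns [intercept, weight_1, weight_2, ...].
    """
    design = [[1.0] + list(r) for r in rows]
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * t for d, t in zip(design, targets)) for i in range(k)]
    return solve(xtx, xty)

def r_squared(actual, predicted):
    """Share of the variance in `actual` that `predicted` accounts for."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot

random.seed(0)
rows = [(random.random(), random.random()) for _ in range(200)]  # synthetic inputs
scores = [black_box(*r) for r in rows]          # what the complex model says
coef = fit_linear_surrogate(rows, scores)       # the simple model that mimics it
approx = [coef[0] + coef[1] * r[0] + coef[2] * r[1] for r in rows]
fidelity = r_squared(scores, approx)

print("surrogate weights:", [round(c, 3) for c in coef])
print("fidelity (R^2):", round(fidelity, 3))
```

A high fidelity value suggests the surrogate's weights are a reasonable summary of the complex model's behavior on this data. A low value is a warning that the simple explanation should not be trusted.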

The linear models and other classical statistical ones in general do not benefit significantly from acceleration technology. There is work going on in this acceleration area for statistical models, and it looks promising. However, the most common deployments today are on CPU-based hardware and virtual machines. These workloads and models fit very well with VMware Cloud on AWS.

Tools for Model Explanation

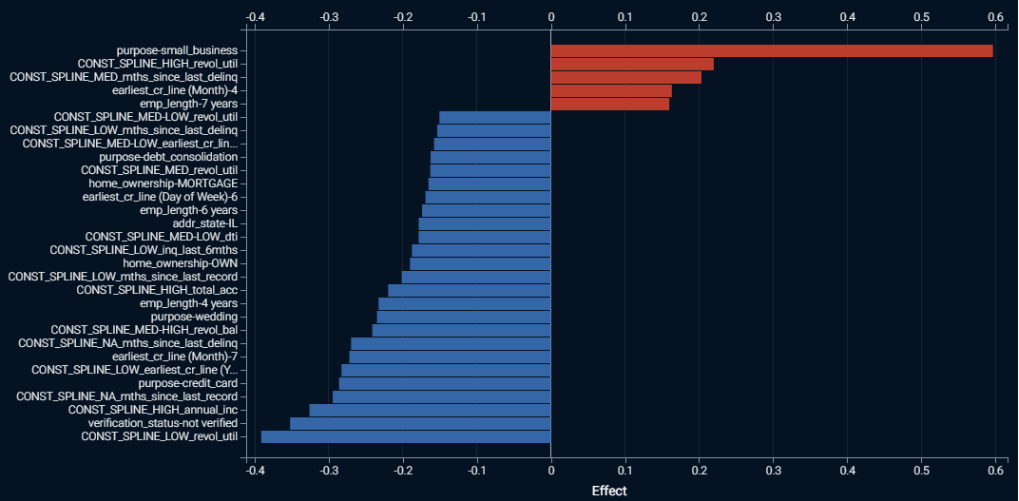

The good news here is that the ML tool vendors clearly recognize the model explanation problem and they have a variety of approaches to solving it. An example screen from the DataRobot AI tool shows a graph of the strongest feature effects found when using a regularized Generalized Linear Model that is trained on Lending Club’s loan data, used for predicting which personal loans will not perform well. The red bars indicate positive effects (higher probability of loan failure), and the longer the red bar the higher the prediction. Similarly, the blue bars indicate negative effects, and the longer the blue bar the lower the prediction. The labels on the Y axis are the feature names (you can think of these as column names in tables) and the value that each feature takes. The X axis is the linear effect: the coefficient, or weight, given for the presence of the feature value. Weights determine how much effect a feature has on the model’s predictions. The prefix CONST_SPLINE in a feature’s name refers to different ranges within a numeric value.

Source: DataRobot

Finding the right features to use in an ML model is an art in itself. Andrew Ng, an expert in the area, has said that “machine learning is just feature engineering” – to stress its importance. Here, we see the comparison of features used as a move toward model explanation. A more detailed description of how model explanation is done using DataRobot’s platform is given here. We see from this kind of display that an important step towards model explanation is recognizing the features that contribute the most weight to the prediction outcome.
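To make the idea of signed feature weights concrete, here is a small sketch in plain Python. It is not DataRobot's method and does not use the Lending Club data. It fits an L2-regularized logistic regression to synthetic data by gradient descent, then lists the weights ordered by magnitude. The feature names, the "true" weights used to generate the data and the hyperparameters are all made up for illustration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(rows, labels, l2=0.01, lr=0.5, epochs=500):
    """Batch gradient descent for L2-regularized logistic regression."""
    n, k = len(rows), len(rows[0])
    w, b = [0.0] * k, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * k, 0.0
        for x, y in zip(rows, labels):
            err = sigmoid(b + sum(wi * xi for wi, xi in zip(w, x))) - y
            grad_b += err
            for j in range(k):
                grad_w[j] += err * x[j]
        # The l2 * wi term shrinks weights toward zero (the regularization).
        w = [wi - lr * (g / n + l2 * wi) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

# Synthetic, standardized loan features (hypothetical names and effects).
random.seed(1)
names = ["debt_to_income", "late_payments", "years_employed"]
true_w = [2.0, 1.5, -1.0]
rows, labels = [], []
for _ in range(400):
    x = [random.gauss(0, 1) for _ in names]
    p = sigmoid(sum(t * xi for t, xi in zip(true_w, x)))
    rows.append(x)
    labels.append(1 if random.random() < p else 0)  # 1 = loan defaulted

weights, bias = fit_logistic(rows, labels)

# Rank features by the size of their weight, keeping the sign.
effects = sorted(zip(names, weights), key=lambda e: abs(e[1]), reverse=True)
for name, wt in effects:
    direction = "raises" if wt > 0 else "lowers"
    print(f"{name:16s} {wt:+.2f}  ({direction} predicted default risk)")
```

A positive weight plays the role of a red bar in the chart described above, and a negative weight plays the role of a blue bar. Because the features are on the same scale here, the magnitudes can be compared directly.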

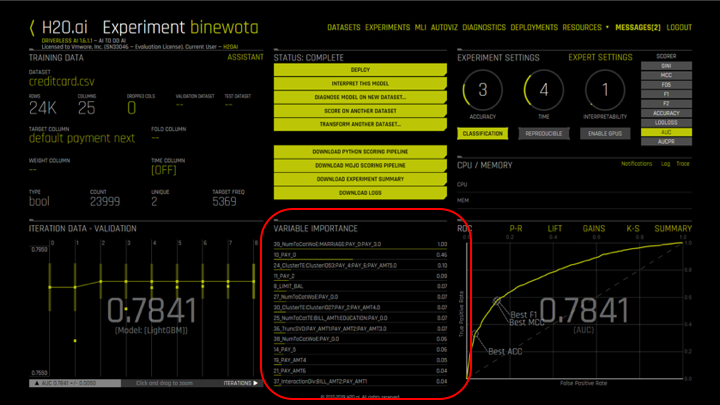

We see this same approach in the ranking of those columns/features that have the most importance to the predictions of an automatically chosen model. In H2O’s tool, for example, the “Variable Importance” section highlighted in the tool below is the part we are interested in for explanation purposes. It shows those features within the input data that have the most effect on the prediction of a default on a credit payment (our “target column” for prediction here), based on the model chosen. The tool itself, based on its own understanding of the training data, chose a lightweight Gradient Boosted Machine (GBM) model for the user-supplied credit card dataset in this case. The various dials in the user interface allow us to tune the model choice. As you see on the top right side, under “Expert Settings”, one dial we can adjust is the “interpretability” of the model chosen. The higher the value we set for “Interpretability” the more likely it is that the tool will choose an inherently well-explained, simpler model.
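One model-agnostic way to produce a ranking of this kind is permutation importance: shuffle one column at a time and measure how much the model's accuracy drops. The sketch below illustrates that technique in plain Python. It is an assumption-laden stand-in and not a description of how H2O computes its "Variable Importance" figures. The `model` function, the feature names and the data are all hypothetical, and the labels are generated from the model itself to keep the example short.

```python
import random

def model(row):
    """Hypothetical stand-in for a trained model: predicts default (1) or not (0)."""
    missed, utilization, _age = row
    return 1 if (missed >= 2 or utilization > 0.8) else 0

def accuracy(rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(rows, labels, column, rng):
    """Drop in accuracy after shuffling one column, which breaks its link to the labels."""
    shuffled = [r[column] for r in rows]
    rng.shuffle(shuffled)
    permuted = [r[:column] + (v,) + r[column + 1:] for r, v in zip(rows, shuffled)]
    return accuracy(rows, labels) - accuracy(permuted, labels)

rng = random.Random(42)
names = ["missed_payments", "credit_utilization", "age"]
rows = [(rng.randint(0, 4), rng.random(), rng.randint(21, 70)) for _ in range(500)]
# For simplicity the labels come from the model itself, so baseline accuracy is 1.0.
labels = [model(r) for r in rows]

importance = {n: permutation_importance(rows, labels, i, rng)
              for i, n in enumerate(names)}
for n, score in sorted(importance.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{n:20s} {score:.3f}")
```

The `age` column scores zero because the stand-in model never reads it, while the two columns the model depends on show a clear drop in accuracy when shuffled.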

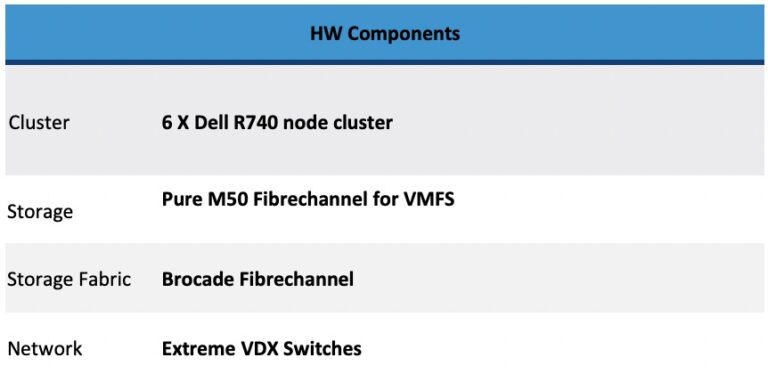

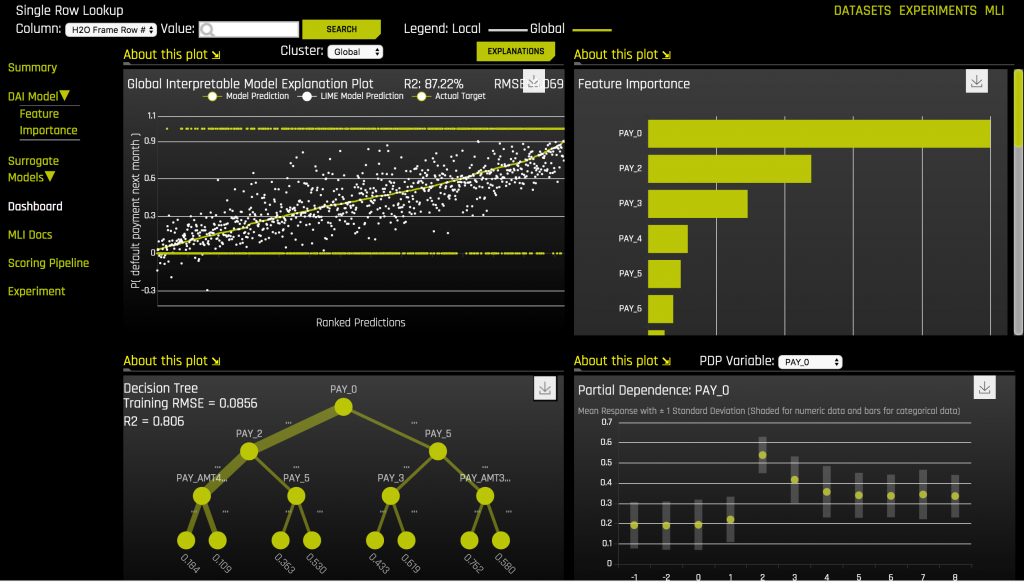

There are also more advanced methods for explaining and interpreting models that are in common use today. These include Local Interpretable Model-agnostic Explanations (LIME) and the Shapley Values method. We won’t go into those methods in detail here. But for interest, here is a UI for the model interpretability function of the H2O Driverless AI tool, just as an example of the type of functionality that an ML vendor offers in this area. This ML Interpretability (MLI) work was executed within the vendor’s tool running in a set of VMs on vSphere and on VMware Cloud on AWS, in parallel, to show their functionality. The exact same tooling and dataset were tested in on-premises VMs on vSphere and then moved to VMware Cloud on AWS for testing there. The tool operated very well in both environments.
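For readers curious about what the Shapley Values method computes, here is a minimal sketch in plain Python. It is not the H2O implementation. The `score` function is a made-up model and the feature names are hypothetical. The code treats a feature as "absent" by substituting a baseline value, which is one common convention and an assumption here. It then averages each feature's marginal contribution over every possible ordering of the features, which is only practical for a handful of features.

```python
from itertools import permutations

def score(features):
    """Hypothetical credit-risk score with an interaction term (illustrative only)."""
    return (0.5 * features["utilization"]
            + 0.3 * features["missed_payments"]
            + 0.2 * features["utilization"] * features["missed_payments"]
            - 0.1 * features["years_employed"])

def shapley_values(predict, instance, baseline):
    """Exact Shapley values: average marginal contribution over all feature orderings."""
    names = list(instance)
    totals = dict.fromkeys(names, 0.0)
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)          # start with every feature "absent"
        previous = predict(current)
        for name in order:
            current[name] = instance[name]   # switch this feature to its real value
            value = predict(current)
            totals[name] += value - previous
            previous = value
    return {n: t / len(orderings) for n, t in totals.items()}

# Baseline = a reference applicant; applicant = the prediction being explained.
baseline = {"utilization": 0.3, "missed_payments": 0.0, "years_employed": 5.0}
applicant = {"utilization": 0.9, "missed_payments": 2.0, "years_employed": 1.0}

phi = shapley_values(score, applicant, baseline)
for name, contribution in phi.items():
    print(f"{name:16s} {contribution:+.2f}")
print("sum of contributions:", round(sum(phi.values()), 2))
print("score difference:   ", round(score(applicant) - score(baseline), 2))
```

The contributions add up to the difference between the applicant's score and the baseline score, which is the property that makes Shapley values attractive for explaining an individual decision such as a loan denial.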

Summary

In the first article in this series, we talked about the use of data from traditional sources, such as relational databases and files, for machine learning training and inference. We take the argument for these models further in part two here, by describing the power of the classical approaches for model explanation – and their compatibility across on-premises vSphere and VMware Cloud on AWS. Model explanation is essential in the majority of business deployments for production today. This description shows another important use case for machine learning applications on VMware Cloud on AWS.

Call to Action

Check with the data scientists or machine learning practitioners at your organization that they have taken model explanation into account when deploying ML models to production. Introduce them to VMware Cloud on AWS as a great platform for their machine learning tooling in general, and for its support of tools that focus on explanation and interpretability of models. You can evaluate VMware Cloud on AWS by taking a hands-on lab.

There are also in-depth technical articles on distributed machine learning, on the use of neural networks and hardware accelerators, and on high performance computing available at this site.