VMware vSphere is continuously improving to provide enhanced and new features. In every release, significant improvements have been made in the areas of customer experience, security, availability, and performance.

This blog highlights the new functionality and performance improvements that are closely related to High-Performance Computing (HPC) and Machine Learning/Deep Learning (ML/DL) workloads from vSphere 6.5 to 6.7 Update 1 (u1).

Functionality

- VMware vSphere vMotion with NVIDIA vGPU powered VMs

(introduced in vSphere 6.7 u1)

Following the introduction of the virtual machine Suspend and Resume capabilities in vSphere 6.7, vSphere 6.7 Update 1 adds vMotion support for NVIDIA vGPU-accelerated VMs. This enables better data-center utilization, improving productivity and reducing costs. For example, when planned maintenance is due on a particular host server, a long-running ML training job in VMs on that host need not be interrupted; the VMs can instead be live-migrated to another suitable host.

As another example, when virtual desktop infrastructure (VDI) VMs become idle at night, they can either be suspended or consolidated onto fewer hosts by live migration, freeing the original hosts for HPC/ML workloads.

- Support for Intel FPGA

(introduced in vSphere 6.7 u1)

With vSphere 6.7 Update 1, Intel Field Programmable Gate Arrays (FPGAs) are also supported as hardware accelerators through VMware DirectPath I/O (Passthrough) technology. While many neural networks in DL are trained on CPU or GPU clusters, FPGAs can be well suited to latency-sensitive, real-time inference jobs.

- Instant Clone

(introduced in vSphere 6.7)

Instant Clone in vSphere is designed to provide a mechanism to create VMs very quickly. It can be four times faster than Linked Clone, enabling 64 clones to be created within 128 seconds. With Instant Clone, HPC clusters can be stood up or brought down with extreme speed.

- Support for VM Direct Path I/O with High-End Compute Accelerators

(introduced in vSphere 6.5)

While the majority of users run accelerated applications that only take advantage of a single GPU, there are increasing numbers of researchers and data scientists who require more than one high-end accelerator to be made available within a single VM.

Some high-end compute GPUs, such as the NVIDIA V100 and P100, and devices such as Intel FPGAs use large, multi-gigabyte passthrough memory-mapped I/O (MMIO) regions to transfer data between the host and the device. For example, the NVIDIA Tesla V100’s PCI MMIO space is slightly larger than 16GB or 32GB, depending on the model. As described in GPGPU Computing with the nVidia K80 on VMware vSphere 6, prior to vSphere 6.5 the aggregate per-VM PCI BAR memory size could not exceed 32GB, limiting the number of such devices that could be passed through.

Since the release of vSphere 6.5, that PCI BAR memory size limitation has been removed. Multiple accelerators with large PCI BARs may now be passed into a single VM.

For detailed configuration notes, please see:

How to Enable Nvidia V100 GPU in Passthrough mode on vSphere for Machine Learning and Other HPC Workloads

Using GPUs with Virtual Machines on vSphere – Part 2: VMDirectPath I/O
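As a rough illustration of the sizing involved, the sketch below estimates the 64-bit MMIO space a VM needs for several passthrough devices and prints the matching advanced VM settings. The option names `pciPassthru.use64bitMMIO` and `pciPassthru.64bitMMIOSizeGB`, and the rule of thumb (sum the device memory, then round up to the next power of two), reflect our reading of the configuration notes linked above; treat both as assumptions and verify them against those notes. The function names are ours and purely illustrative.

```python
def mmio_size_gb(num_devices: int, mem_per_device_gb: int) -> int:
    """Estimate the 64-bit MMIO size (in GB) for passthrough devices.

    Assumed rule of thumb: total device memory, rounded up to the
    smallest power of two that is strictly greater than that total.
    """
    if num_devices < 1 or mem_per_device_gb < 1:
        raise ValueError("need at least one device with non-zero memory")
    total = num_devices * mem_per_device_gb
    # For total >= 1, 1 << bit_length() is the smallest power of two > total.
    return 1 << total.bit_length()


def vmx_settings(num_devices: int, mem_per_device_gb: int) -> dict:
    """Build the advanced VM settings (assumed option names) as a dict."""
    size = mmio_size_gb(num_devices, mem_per_device_gb)
    return {
        "pciPassthru.use64bitMMIO": "TRUE",
        "pciPassthru.64bitMMIOSizeGB": str(size),
    }


if __name__ == "__main__":
    # Example: two 16GB V100 GPUs passed through to a single VM.
    for key, value in vmx_settings(2, 16).items():
        print(f'{key} = "{value}"')
```

Under these assumptions, two 16GB devices yield a 64GB MMIO setting and four yield 128GB, which shows why the pre-6.5 32GB aggregate limit was restrictive.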

- Paravirtualized RDMA (PVRDMA)

(introduced in vSphere 6.5)

In HPC environments, RDMA devices improve performance by providing low latency and high bandwidth for networking. While this performance improvement is attractive, RDMA devices accessed from within the guest OS using passthrough or SR-IOV mode cannot take advantage of all VMware platform capabilities.

Released in vSphere 6.5, paravirtualized RDMA (PVRDMA) enables RDMA in the VMware virtual environment while maintaining the use of features like vMotion, the distributed resource scheduler (DRS), and VM snapshots. Those features are unavailable in DirectPath I/O or SR-IOV mode.

To use PVRDMA, a supported RoCE card and compatible ESXi release are required. RoCE, i.e., RDMA over Converged Ethernet, supports low-latency, lightweight, and high-throughput RDMA communication over an Ethernet network.

- vSphere Integrated Containers (VIC)

(introduced in vSphere 6.5)

VIC is an important feature introduced in vSphere 6.5. It’s a robust way to manage containers in a production environment. End users and developers use Docker commands as they normally do. From the administrator’s perspective, containers are deployed as minimal vSphere VMs that have been customized for container use, providing security and ease of management.

With VIC, HPC container clusters can be stood up or brought down quickly, and managed in a secure way in vSphere environments. VIC is well-suited to run HPC throughput workloads with all of the robust cloud-native features and good performance.

- New Maximums for vCPUs and Memory

(introduced in vSphere 6.5)

The CPU/memory limits introduced in vSphere 6.5 are shown below. They remain unchanged in vSphere 6.7.

128 vCPUs per VM

6TB memory per VM

576 logical CPUs per host

16 NUMA nodes per host

12 TB RAM per host

These increased limits are beneficial for cases in which researchers need to request a monster VM, which commonly happens in the life sciences realm. For example, DNA sequence searching can consume large amounts of RAM. Note that configuring VMs with more than 64 vCPUs requires the use of the Extensible Firmware Interface (EFI) in the VM’s guest operating system.
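The per-VM limits above can be turned into a simple pre-flight check before a "monster VM" request is submitted. The following is a minimal sketch that uses only the numbers quoted in this post; the function and its parameters are hypothetical and are not part of any vSphere API.

```python
from typing import List

# Per-VM limits quoted in this post for vSphere 6.5 and 6.7.
MAX_VCPUS_PER_VM = 128
MAX_MEMORY_TB_PER_VM = 6
EFI_REQUIRED_ABOVE_VCPUS = 64


def check_request(vcpus: int, memory_tb: float, firmware: str) -> List[str]:
    """Return a list of problems; an empty list means the request fits."""
    problems = []
    if vcpus > MAX_VCPUS_PER_VM:
        problems.append(
            f"{vcpus} vCPUs exceeds the per-VM limit of {MAX_VCPUS_PER_VM}"
        )
    if memory_tb > MAX_MEMORY_TB_PER_VM:
        problems.append(
            f"{memory_tb}TB exceeds the per-VM limit of {MAX_MEMORY_TB_PER_VM}TB"
        )
    if vcpus > EFI_REQUIRED_ABOVE_VCPUS and firmware.lower() != "efi":
        problems.append(
            f"more than {EFI_REQUIRED_ABOVE_VCPUS} vCPUs requires EFI firmware"
        )
    return problems


if __name__ == "__main__":
    # A 96-vCPU, 4TB request with BIOS firmware trips only the EFI rule.
    for problem in check_request(96, 4, "bios"):
        print(problem)
```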

Performance

- Enhanced RDMA

(introduced in vSphere 6.7)

vSphere 6.7 introduces new protocol support for RDMA over Converged Ethernet (RoCE v2), a new software Fibre Channel over Ethernet (FCoE) adapter, and iSCSI Extension for RDMA (iSER). These features enable enterprises to integrate with even more high-performance storage systems, providing greater flexibility to use the hardware that best complements their workloads.

In addition to traditional HPC workloads that leverage RDMA interconnects, this will also enhance the performance of large-scale distributed machine learning workloads that use RDMA interconnects in virtual environments. For example, running Horovod with the TensorFlow, Keras, or PyTorch deep learning frameworks in a distributed way can use multiple GPUs across hosts, and RDMA interconnects can enable higher scaling efficiency than Ethernet.
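Scaling efficiency, as used here, is commonly computed as the measured multi-GPU throughput divided by the ideal linear-scaling throughput. The sketch below shows that calculation; the throughput figures in the example are made up for illustration and are not measurements from this post.

```python
def scaling_efficiency(throughput_1: float, throughput_n: float, n_gpus: int) -> float:
    """Measured throughput on n GPUs relative to ideal linear scaling.

    throughput_1: samples/second on a single GPU
    throughput_n: samples/second on n_gpus GPUs
    """
    if throughput_1 <= 0 or n_gpus < 1:
        raise ValueError("throughput_1 must be positive and n_gpus >= 1")
    return throughput_n / (n_gpus * throughput_1)


if __name__ == "__main__":
    # Hypothetical numbers: 200 images/s on 1 GPU, 1440 images/s on 8 GPUs.
    eff = scaling_efficiency(200.0, 1440.0, 8)
    print(f"scaling efficiency: {eff:.0%}")
```

An efficiency of 1.0 means perfect linear scaling; interconnect latency and bandwidth are among the factors that pull the value below 1.0 as the number of hosts grows.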

- VMXNET3 v4

(introduced in vSphere 6.7)

The vSphere 6.7 release includes vmxnet3 version 4, which supports some new features, including Receive Side Scaling (RSS) for UDP, RSS for ESP, and offload for Geneve/VXLAN. Performance tests reveal significant improvement in throughput.

These improvements are beneficial for HPC financial service workloads that are sensitive to networking latency and bandwidth.

- Large Memory Page Support

(introduced in vSphere 6.5)

Large memory pages relieve translation lookaside buffer (TLB) pressure and reduce the cost of page table walks. This improves workload performance, especially where application memory access patterns are random, because such patterns have historically produced higher TLB miss rates.
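To make the TLB argument concrete, the sketch below compares how many pages are needed to back a given amount of memory, and how much memory a TLB can cover ("TLB reach"), at different page sizes. The 1536-entry TLB and the 64GiB VM size are hypothetical values chosen for illustration; real TLB sizes vary by processor.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB

PAGE_SIZES = {"4KB": 4 * KIB, "2MB": 2 * MIB, "1GB": GIB}


def pages_needed(memory_bytes: int, page_bytes: int) -> int:
    """Number of pages required to back memory_bytes (ceiling division)."""
    return -(-memory_bytes // page_bytes)


def tlb_reach_bytes(entries: int, page_bytes: int) -> int:
    """Memory covered by a TLB holding `entries` translations."""
    return entries * page_bytes


if __name__ == "__main__":
    vm_memory = 64 * GIB   # hypothetical VM size
    tlb_entries = 1536     # hypothetical TLB capacity
    for name, size in PAGE_SIZES.items():
        pages = pages_needed(vm_memory, size)
        reach_mib = tlb_reach_bytes(tlb_entries, size) // MIB
        print(f"{name}: {pages} pages, TLB reach {reach_mib} MiB")
```

With larger pages, far fewer translations are needed for the same memory, so a randomly accessing workload is much more likely to find its translation already in the TLB.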

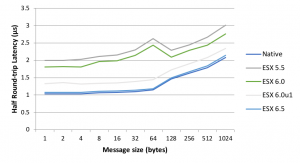

HPC Message Passing Interface (MPI) workloads have seen a major latency improvement since vSphere 6.5 due to ESXi support for Large Intel VT-d Pages. InfiniBand and RDMA are the two most widely used hardware and software approaches for HPC message passing, and they can be used in a virtual environment as well. Figure 1 shows how InfiniBand latencies under vSphere using VM Direct Path I/O have improved over the last several releases of the ESXi hypervisor.

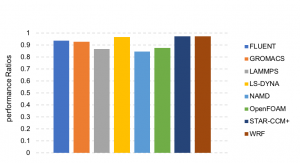

Figure 2 shows performance results for a variety of popular open-source and commercial MPI applications. These measurements show that latencies are now closely approaching those achievable without virtualization.

In vSphere 6.5, the ESXi hypervisor uses 2MB pages for backing guest vRAM by default. vSphere 6.7 ESXi also provides limited support for backing guest vRAM with 1GB pages.

Figure 1 Improvement in InfiniBand latency over several versions of the ESXi hypervisor. Lower is better.

Figure 2 Performance of a variety of MPI applications running on a 16-node cluster using 320 MPI processes. Results are shown as the ratios of virtualized and un-virtualized performance. Higher is better. vSphere 6.5.

- Auto virtual NUMA configuration

(introduced in vSphere 6.5)

Since vSphere 5.0, the hypervisor has exposed a virtual NUMA topology, improving performance by providing NUMA information to the guest OS and applications. In vSphere 6.5, improvements were made to intelligently size and configure the virtual NUMA topology for a VM regardless of its size. For more information, see these two blog entries:

Decoupling of Cores per Socket from Virtual NUMA Topology in vSphere 6.5

Virtual Machine vCPU and vNUMA Rightsizing – Rules of Thumb
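As a very rough mental model of the rightsizing rules of thumb, the sketch below estimates how many virtual NUMA nodes a VM might be given from its vCPU count and the number of physical cores per NUMA node. This is a simplification under stated assumptions, not the actual ESXi algorithm: it assumes vNUMA is exposed only to VMs with more than 8 vCPUs, and it ignores hyper-threading, memory size, and advanced settings. The function name is ours.

```python
import math

# Assumption: by default, vNUMA is exposed only to VMs with more than 8 vCPUs.
VNUMA_MIN_VCPUS = 9


def estimate_vnuma_nodes(vcpus: int, cores_per_pnuma_node: int) -> int:
    """Rough estimate of the number of virtual NUMA nodes for a VM.

    Simplified model: a VM that fits within one physical NUMA node gets a
    single node; larger VMs are split across as many nodes as needed.
    """
    if vcpus < 1 or cores_per_pnuma_node < 1:
        raise ValueError("vcpus and cores_per_pnuma_node must be positive")
    if vcpus < VNUMA_MIN_VCPUS:
        return 1
    return math.ceil(vcpus / cores_per_pnuma_node)


if __name__ == "__main__":
    # Hypothetical host with 10 physical cores per NUMA node.
    for vcpus in (8, 10, 16, 20):
        print(vcpus, "vCPUs ->", estimate_vnuma_nodes(vcpus, 10), "vNUMA node(s)")
```

The two blog entries above describe the real behavior and its exceptions; use them, not this estimate, when sizing production VMs.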

- Turbo Effect Viewable as an ESXtop Tool Indicator

(introduced in vSphere 6.5)

ESXi 6.5 introduced a new esxtop tool indicator called “Aperf/Mperf” to show the effects of Turbo boost as a ratio of clock speeds with and without Turbo. For example, with a 2.6GHz core and a turbo limit of 3.3GHz, ratios higher than 1.0, up to roughly 1.27 (3.3/2.6), may be seen.
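The arithmetic behind that indicator can be sketched as follows, using the 2.6GHz and 3.3GHz figures from the example above. The helper names are ours; esxtop reports the ratio itself, and this code only illustrates how to interpret it.

```python
def turbo_ratio(effective_ghz: float, nominal_ghz: float) -> float:
    """Ratio of the effective clock speed to the nominal (non-Turbo) speed."""
    if nominal_ghz <= 0:
        raise ValueError("nominal_ghz must be positive")
    return effective_ghz / nominal_ghz


def effective_ghz(ratio: float, nominal_ghz: float) -> float:
    """Effective clock speed implied by an observed ratio."""
    return ratio * nominal_ghz


if __name__ == "__main__":
    nominal, turbo_limit = 2.6, 3.3
    print(f"maximum ratio: {turbo_ratio(turbo_limit, nominal):.2f}")
    # An observed ratio of 1.15 would imply the core ran at about 2.99GHz.
    print(f"effective speed at ratio 1.15: {effective_ghz(1.15, nominal):.2f}GHz")
```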

In summary, with the continued performance improvements and new capabilities shown here, there are even more good reasons to virtualize your HPC and ML/DL workloads on VMware vSphere. VMware continues to actively track and support the latest developments in the hardware and software acceleration areas.


Authors: Na Zhang, Michael Cui