This is part 4 of a series of four blog articles that give technical details of the different options available to you for setting up GPUs for compute workloads on vSphere.

Part 1 of this series presents an overview of the various options for using GPUs on vSphere.

Part 2 describes the DirectPath I/O (Passthrough) mechanism for GPUs.

Part 3 gives details on setting up the NVIDIA Virtual GPU (vGPU) technology for GPUs.

In this fourth article in the series, we describe the technical setup process for the Bitfusion product on vSphere. Bitfusion is a key part of the VMware vSphere product for optimizing the use of GPUs across multiple virtual machines.

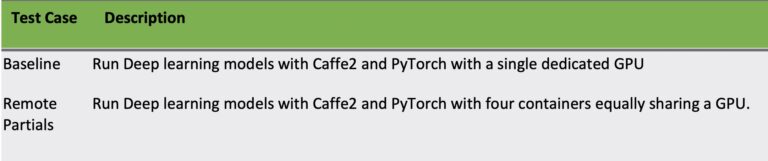

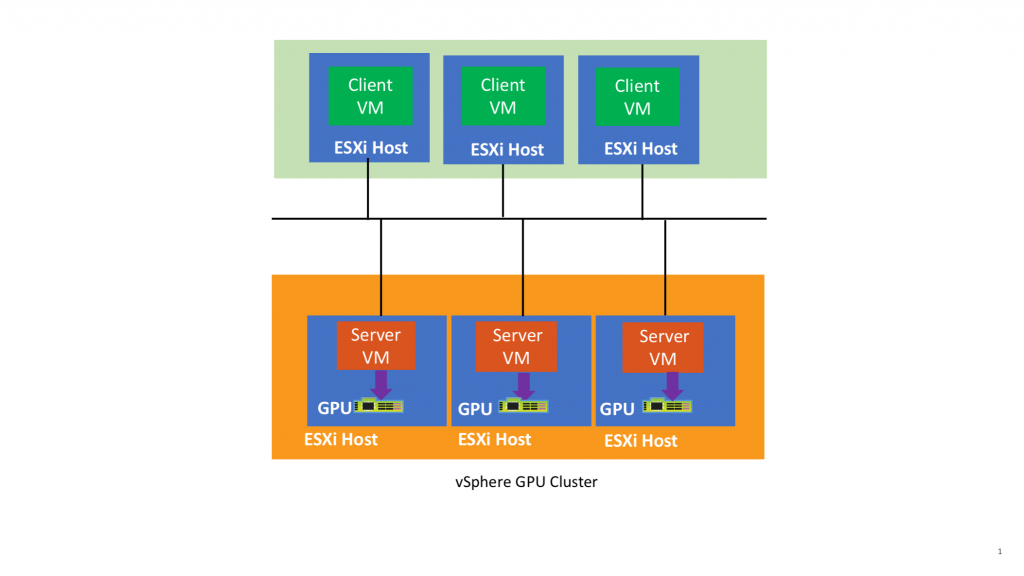

Figure 1: Architecture example for a Bitfusion Setup on Virtual Machines hosted on vSphere

The Bitfusion software increases the flexibility with which you can use your GPUs on vSphere. It does so by allowing your physical GPUs to be allocated, in part or as a whole, to applications running in virtual machines. Those consumer virtual machines may be hosted on servers that do not themselves have physical GPUs attached. Bitfusion achieves this by remoting the applications' CUDA calls to other servers that do have GPUs. A more complete description of the technical features of the Bitfusion software is given here.

Bitfusion may be used to dedicate one or more full GPUs to a virtual machine, or to share a single physical GPU across multiple virtual machines. The VMs sharing a physical GPU in this case need not take equal shares of it; their share sizes can differ. The size of each share is specified by the user invoking the application at startup time. Bitfusion also allows an application in a single consumer VM to use multiple physical GPUs at once.

The Bitfusion Architecture


Bitfusion uses a client-server architecture, as seen in figure 1, where the server-side VMs provide the GPU resources, while the client-side VMs provide the locations for end-user applications to run. The server-side GPU-enabled VMs are referred to as the “GPU Cluster”. An individual node/VM may play both roles and have client and server-side execution capability locally, if required.

The client-side and server-side VMs may also be hosted on different physical servers, as shown above. The VMs can be configured to communicate over a range of network protocols, including TCP/IP and RDMA. RDMA may be implemented using InfiniBand or RoCE (RDMA over Converged Ethernet). VMware engineers have tested these forms of networking on vSphere, and the results of those tests are available here.

The Bitfusion software can be used to remove the need for physical locality of the GPU device to the consumer – the GPU can be remotely accessed on the network. This approach allows for pooling of your GPUs on a set of servers as seen in figure 1. GPU-based applications can then run on any node/VM in the cluster, whether it has a physical GPU attached to it or not.

In the example architecture shown in Figure 1, we show one virtual machine per ESXi host server for illustration purposes. Multiple virtual machines of each type can live on the same host server. On the server side, a host with multiple GPU cards can also be accommodated.

Bitfusion Installation and Setup

For the server-side VMs shown in Figure 1, i.e. those on the GPU-bearing hosts, the local VM accesses the GPU card either through DirectPath I/O (the Passthrough method), which was fully described in the second article in this series, or through the NVIDIA vGPU method described in the third article.

The details of these methods of GPU use on vSphere will not be repeated here. We will assume that one of these setups has already been done for any GPU that is participating in the Bitfusion installation.

The software that is installed onto the guest operating system of the client-side and server-side VMs for a Bitfusion setup is called “FlexDirect”. This software operates in user mode within the guest operating system of the VMs and needs no special drivers.

For the most recent installation instructions for vSphere Bitfusion, please consult this document.

NOTE: Legacy Installation Process – here for Reference During Beta Only


Bitfusion has client-side and server-side processes and helpers, which are installed as follows. The term “flexdirect” refers to a legacy version of the software; it is no longer used for the Bitfusion product as of its general availability in July 2020.

1. Ensure you have the appropriate Bitfusion License Key

If you do not have a current license key, then contact VMware Bitfusion personnel to acquire one.

2. Install the FlexDirect Software on your Client-side (CPU-only) VMs

Firstly, download the installation script:

wget -O installfd getfd.bitfusion.io

Run the install script in client mode, which installs just the binaries:

sudo bash installfd -- -m binaries

3. Check the FlexDirect Location

To check where the FlexDirect CLI program has been installed, use the command:

which flexdirect

Output

/usr/bin/flexdirect
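In a provisioning script you may prefer to fail early if the CLI is missing. The following is a minimal POSIX shell sketch; the function name `check_flexdirect_installed` is hypothetical and not part of the Bitfusion software. It performs the same lookup as `which`, using the shell built-in `command -v`.

```shell
# Hypothetical helper (not part of Bitfusion): confirm the FlexDirect CLI
# is on PATH. Prints its location on success; prints an error to stderr
# and returns 1 otherwise.
check_flexdirect_installed() {
  local fd_path
  if fd_path=$(command -v flexdirect); then
    echo "flexdirect found at: ${fd_path}"
    return 0
  fi
  echo "flexdirect not found on PATH; re-run the install script" >&2
  return 1
}

# Usage in a script:
#   check_flexdirect_installed || exit 1
```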

4. Initialize the License on the Client Side

To initialize the license, issue the following command.

sudo flexdirect init

Accept the EULA.

When prompted, enter the software license key you acquired in step 1. If you don’t yet have a license key, you may email support@bitfusion.io to request access.

Output

License has been initialized

Attempting to refresh…

Refresh successful. License is ready for use.

Flexdirect is licensed and is ready for use.

5. Install the FlexDirect Manager on the GPU-enabled Server-side VMs

Download the installation script:

wget -O installfd getfd.bitfusion.io

Run the install script in FlexDirect Manager (fdm) mode, which installs the manager as a systemd service:

sudo bash installfd -- -m fdm

Answer “y” when asked whether to install any dependencies, and “y” again when asked whether to start the service.
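Because the client-side and server-side installs differ only in the mode passed to the installer, both can be wrapped in one small function. This is a sketch under stated assumptions: the function name `install_flexdirect` is hypothetical, and it reuses exactly the legacy download and install commands shown in steps 2 and 5. Any interactive prompts from the installer still appear as usual.

```shell
# Hypothetical wrapper (not part of Bitfusion) around the legacy install
# commands shown in the steps above.
#   install_flexdirect binaries   -> client-side VMs (CLI binaries only)
#   install_flexdirect fdm        -> GPU server-side VMs (FlexDirect Manager)
install_flexdirect() {
  local mode=${1:-}
  case $mode in
    binaries|fdm) ;;
    *)
      echo "usage: install_flexdirect binaries|fdm" >&2
      return 2
      ;;
  esac
  # Download the installation script, stopping if the download fails.
  wget -O installfd getfd.bitfusion.io || return 1
  # Arguments passed exactly as in the installation steps above.
  sudo bash installfd -- -m "$mode"
}
```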

6. Initialize the License on the GPU Server-Side VMs

To initialize the license, issue the following command.

sudo flexdirect init

Accept the EULA.

When prompted, enter the software license key you acquired in step 1. If you don’t yet have a license key, you may email support@bitfusion.io to request access.

7. Confirm that the FlexDirect Manager Service is Running

systemctl status flexdirect

The output should show that the FlexDirect Manager service is loaded and running (enabled, with status=0/Success).

8. Starting the FlexDirect Manager Service

If the service is not running, you can try starting it from the command line as follows:

sudo systemctl start flexdirect
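Steps 7 and 8 can be combined into an idempotent check that starts the service only when systemd reports it as inactive. A minimal sketch, assuming the systemd unit is named `flexdirect` as in the commands above; `ensure_flexdirect_running` is a hypothetical name. It relies on `systemctl is-active --quiet`, which reports the unit state through its exit status alone.

```shell
# Hypothetical helper: start the flexdirect service only if it is not
# already active, then re-check so the caller gets a meaningful status.
ensure_flexdirect_running() {
  if systemctl is-active --quiet flexdirect; then
    echo "flexdirect service is already active"
    return 0
  fi
  echo "flexdirect service is not active; starting it" >&2
  sudo systemctl start flexdirect || return 1
  # Returns 0 only if systemd now reports the unit as active.
  systemctl is-active --quiet flexdirect
}
```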

9. Using the Flexdirect Command

The syntax of the various parameters to the “flexdirect” command is given on the Bitfusion Usage site.

You can also run the “flexdirect” command on its own, with no arguments, to see the parameters and options available:

flexdirect

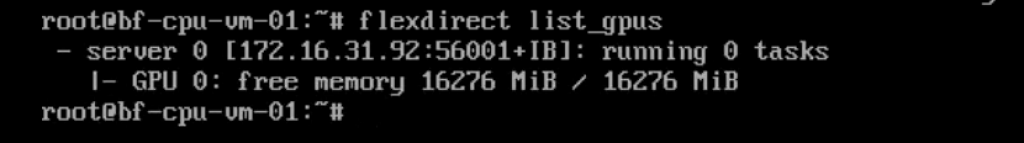

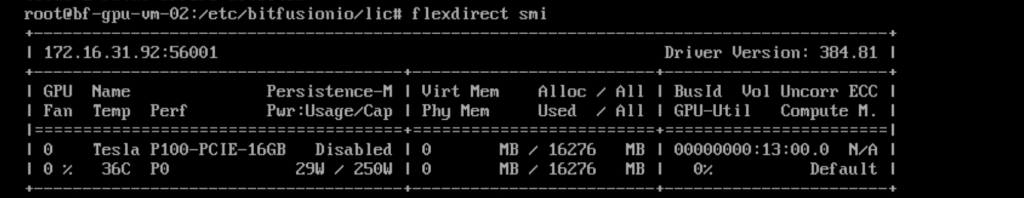

To see the available GPUs, run the following command from a suitably configured server-side VM:

flexdirect list_gpus

Example output for a single server VM with one GPU enabled is shown in Figure 2.

Figure 2: Example output from the “flexdirect” command to show the available GPUs

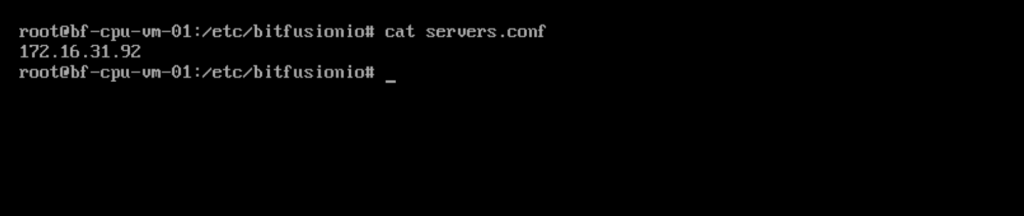

10. Configure the IP Addresses on the Client-side VM for Access to the Server-side GPU VMs

Once the FlexDirect software is installed and initialized, add an entry to the /etc/bitfusionio/servers.conf file on the client-side VM containing the IP address or hostname of each server-side VM that this client VM will talk to. The file should contain one IP address or hostname per line, and it may list several server VMs.

Figure 3: A basic example of the /etc/bitfusionio/servers.conf file on the client-side VM
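The servers.conf file can also be generated from a script. The sketch below assumes only the format described above (one IP address or hostname per line); the function name `servers_conf_content` and the second hostname in the usage comment are hypothetical. Piping the output through `sudo tee` avoids running the whole function as root.

```shell
# Hypothetical helper: print servers.conf content, one server per line.
# Rejects empty entries and entries containing whitespace.
servers_conf_content() {
  local host
  if [ "$#" -eq 0 ]; then
    echo "usage: servers_conf_content HOST [HOST...]" >&2
    return 2
  fi
  for host in "$@"; do
    case $host in
      ''|*[[:space:]]*)
        echo "invalid server entry: '$host'" >&2
        return 1
        ;;
    esac
  done
  printf '%s\n' "$@"
}

# Usage (the second host name is a placeholder):
#   servers_conf_content 192.168.1.32 gpu-server-2.example.com \
#     | sudo tee /etc/bitfusionio/servers.conf > /dev/null
```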

11. Use the Browser to Access the FlexDirect Manager

Bring up a web browser on any system that can connect to one of the GPU server-side VMs. Each server runs a web server that hosts the usage and management GUI for the whole cluster. Browse to one of these servers at port 54000.

An example of this would be http://192.168.1.32:54000
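If several server-side VMs are listed in /etc/bitfusionio/servers.conf, the manager URLs can be derived from that file. This sketch assumes the one-host-per-line format described in step 10 and the port 54000 given above; `manager_urls` is a hypothetical name, and the `curl` loop in the usage comment only checks that each URL answers over HTTP.

```shell
# Hypothetical helper: print the FlexDirect Manager GUI URL (port 54000)
# for each server listed in a servers.conf-style file. Blank lines are
# skipped; read trims leading and trailing whitespace from each entry.
manager_urls() {
  local conf=${1:-/etc/bitfusionio/servers.conf}
  local host
  while read -r host || [ -n "$host" ]; do
    if [ -n "$host" ]; then
      printf 'http://%s:54000\n' "$host"
    fi
  done < "$conf"
}

# Usage: report the HTTP status code returned by each manager URL.
#   for url in $(manager_urls); do
#     curl -s -o /dev/null -w "%{http_code} $url\n" "$url"
#   done
```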

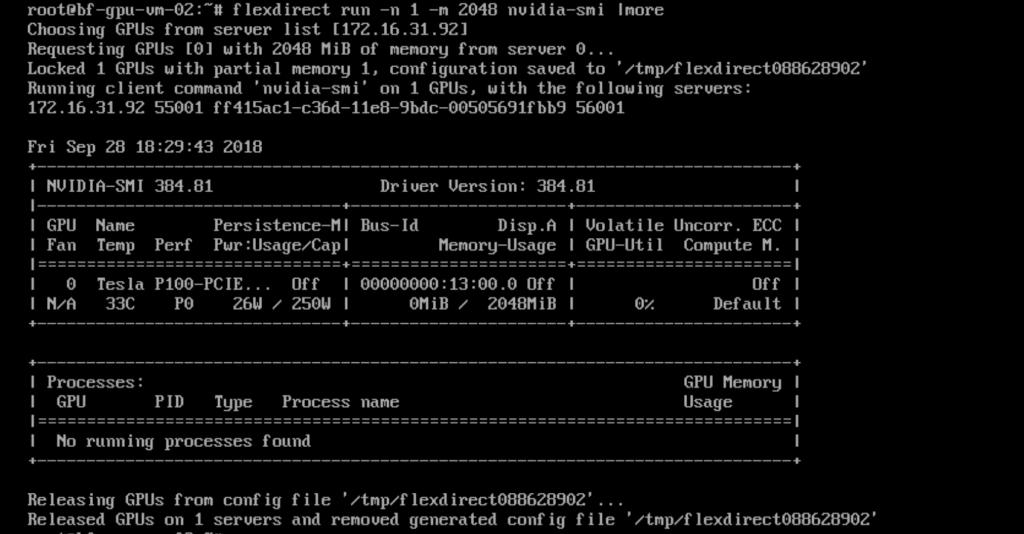

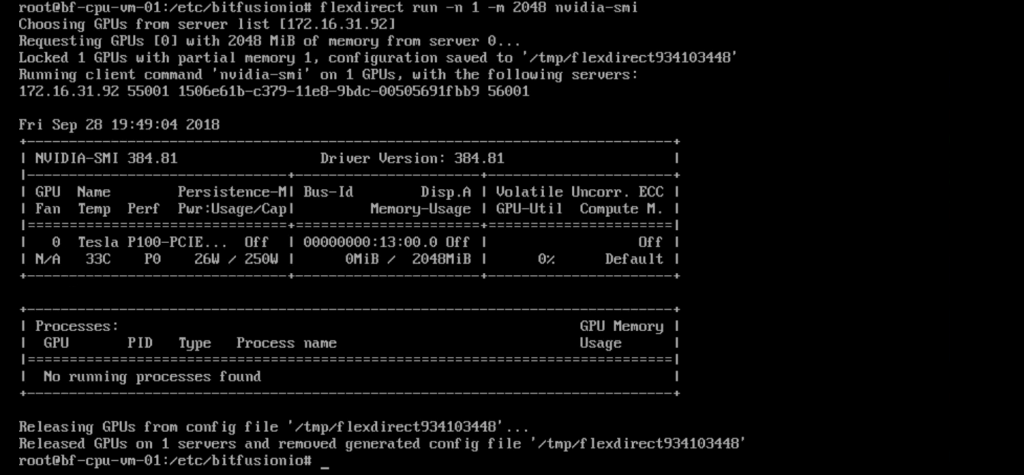

12. Test an Application on the Bitfusion FlexDirect Server-side

You may now test the setup by executing the FlexDirect program on the GPU-enabled VM with a named application, just as a client would. An example of such a test command, using the nvidia-smi utility, is:

flexdirect run -n 1 -m 2048 nvidia-smi |more

Figure 4: The “flexdirect run” command showing output from a health check run

You may replace the “nvidia-smi” string in the above command with your own application’s executable name in order to run it on the requested number of GPUs (-n parameter) with the specified GPU memory allocation (-m parameter). You may also choose to use a fraction of a GPU by issuing a command such as:

flexdirect local -p 0.5 nvidia-smi |more

where the parameter “-p 0.5” indicates a partial share of one half of the GPU memory for this application.
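When launching jobs from scripts, it can help to validate the GPU count, memory value and GPU fraction before calling flexdirect. A minimal sketch, assuming only the `run -n -m` and `local -p` forms shown above; all function names are hypothetical, and the memory value is passed through unchanged in whatever unit flexdirect expects (2048 in the example above).

```shell
# Hypothetical validation helpers (not part of Bitfusion).
is_positive_int() {
  case ${1:-} in
    ''|*[!0-9]*) return 1 ;;
  esac
  [ "$1" -gt 0 ]
}

# Accepts decimal values such as 0.25, .5 or 1; rejects 0 and values above 1.
is_fraction() {
  printf '%s\n' "${1:-}" | grep -Eq '^([0-9]+\.?[0-9]*|\.[0-9]+)$' || return 1
  awk -v p="$1" 'BEGIN { exit !(p > 0 && p <= 1) }'
}

# gpu_run NUM_GPUS GPU_MEMORY APP [ARGS...]
#   -> flexdirect run -n NUM_GPUS -m GPU_MEMORY APP [ARGS...]
gpu_run() {
  if [ "$#" -lt 3 ] || ! is_positive_int "$1" || ! is_positive_int "$2"; then
    echo "usage: gpu_run NUM_GPUS GPU_MEMORY APP [ARGS...]" >&2
    return 2
  fi
  local n=$1 m=$2
  shift 2
  flexdirect run -n "$n" -m "$m" "$@"
}

# gpu_local_partial FRACTION APP [ARGS...]
#   -> flexdirect local -p FRACTION APP [ARGS...]
gpu_local_partial() {
  if [ "$#" -lt 2 ] || ! is_fraction "$1"; then
    echo "usage: gpu_local_partial FRACTION APP [ARGS...]" >&2
    return 2
  fi
  local p=$1
  shift
  flexdirect local -p "$p" "$@"
}
```

For example, `gpu_run 1 2048 nvidia-smi` issues the same command as the test above, while a typo such as `gpu_local_partial 5 nvidia-smi` is rejected before flexdirect is invoked.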

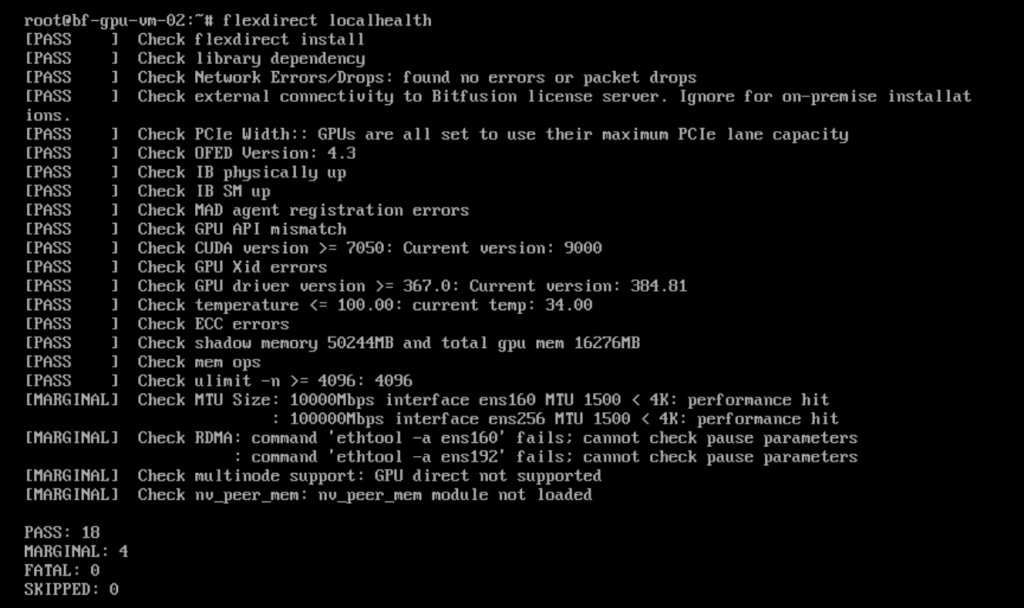

13. Execute FlexDirect Health Checks

You can also check how healthy the server-side process is by using the command:

flexdirect localhealth

Figure 5: Output from the “flexdirect localhealth” command

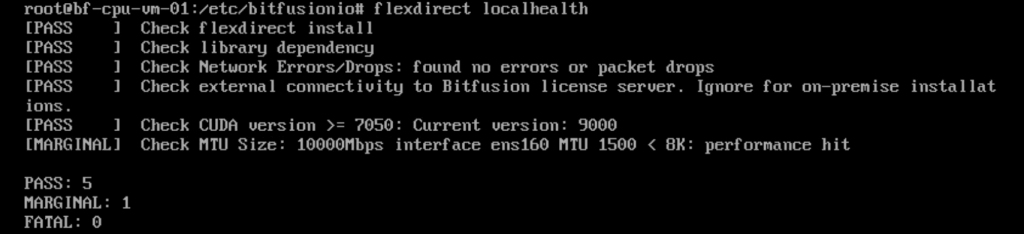

The “flexdirect health” command may also be executed in the same way on a client-side VM that does not have a GPU attached, producing more concise output. Note that the hostname of the VM here includes “cpu”, indicating that it does not have a GPU attached, unlike the server-side VM whose name includes “gpu”.

Figure 6: Executing the “flexdirect health” command on the Client-side VM
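For routine monitoring, you might keep a timestamped record of each health check. This sketch maps a server-side VM to `flexdirect localhealth` and a client-side VM to `flexdirect health`, as in the examples above. The function name and log file naming are hypothetical, and the sketch assumes (not confirmed by this article) that flexdirect returns a non-zero exit status when a check fails.

```shell
# Hypothetical helper: run the health check for this VM's role, save the
# output to a timestamped log file, print the log path, and return the
# exit status of the flexdirect command.
bitfusion_health_log() {
  local role=${1:-} logdir=${2:-.} sub log status
  case $role in
    server) sub=localhealth ;;
    client) sub=health ;;
    *)
      echo "usage: bitfusion_health_log server|client [LOGDIR]" >&2
      return 2
      ;;
  esac
  log="$logdir/flexdirect-$sub-$(date +%Y%m%d-%H%M%S).log"
  if flexdirect "$sub" > "$log" 2>&1; then
    status=0
  else
    status=$?
  fi
  echo "$log"
  return "$status"
}

# Usage on a server-side VM, e.g. from cron:
#   bitfusion_health_log server /var/tmp
```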

14. Get Current Data on the GPU and Driver

To get a concise view of the health and state of the GPU and its driver, use the command:

flexdirect smi

Figure 7: The “flexdirect smi” command output

15. Test the Connection between the Client and Server-side VMs

You can now run your own application on your client-side VM, using the flexdirect program to execute the GPU-specific parts remotely on the server side. For example, you can use the command we tried earlier on the server-side VM to execute the nvidia-smi tool:

flexdirect run -n 1 -m 2048 nvidia-smi

to produce the same result.

Figure 8: Client-side VM health check output using the “flexdirect” and “nvidia-smi” commands

You can now use any portion of a GPU, or a set of GPUs, on the server VMs to execute your job, and invoke that run from your client-side VM. As seen earlier, you can execute the application from either your client-side or server-side VMs. The client-side VMs can be moved from one vSphere host server to another using vSphere vMotion and DRS.

If your GPU server-side VMs are hosted on servers with VMware vSphere version 6.7 update 1 or later, and are configured using the NVIDIA vGPU software, then they may also be moved across their vSphere hosts using vMotion.

Conclusion

Using Bitfusion, a set of non-GPU enabled virtual machines (client VMs) may make use of sets of GPU-enabled virtual machines that may be remote from the clients across the network. The GPU devices no longer need to be local to their consumers.

The Bitfusion software allows applications to make use of different shares of a single GPU or multiple GPUs at once. These combinations of GPU usage have been tested in the engineering labs at VMware and their performance on that infrastructure has been thoroughly documented in the references given below. The Bitfusion method is an excellent choice among the various options for GPU use on vSphere, as documented in this series of articles.

References

Bitfusion Documentation

Machine Learning leveraging NVIDIA GPUs with Bitfusion on VMware vSphere – Part 1

Machine Learning leveraging NVIDIA GPUs with Bitfusion on VMware vSphere – Part 2

Using GPUs with Virtual Machines on vSphere – Part 1: Overview

Using GPUs with Virtual Machines on vSphere – Part 2: DirectPath I/O

Using GPUs with Virtual Machines on vSphere – Part 3: NVIDIA vGPU