This is part 2 of a series of blog articles on the subject of using GPUs with VMware vSphere.

Part 1 of this series presents an overview of the various options for using GPUs on vSphere

Part 2 describes the DirectPath I/O (Passthrough) mechanism for GPUs

Part 3 gives details on setting up the NVIDIA Virtual GPU (vGPU) technology for GPUs on vSphere

Part 4 explores the setup for the BitFusion Flexdirect method of using GPUs

In this article we describe the VMDirectPath I/O mechanism (also called “passthrough”) for using a GPU on vSphere. Further articles in the series will describe other methods for GPU use.

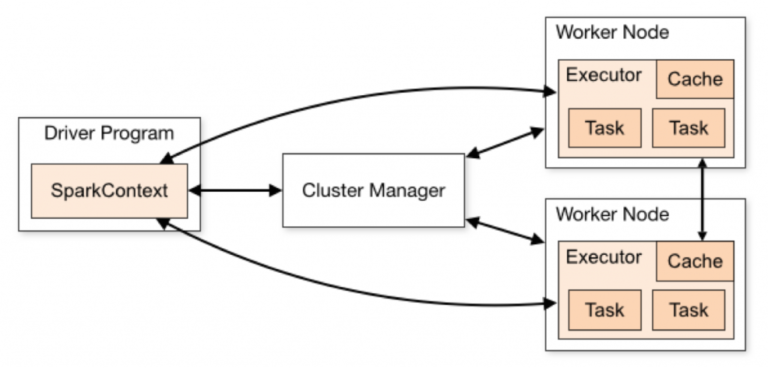

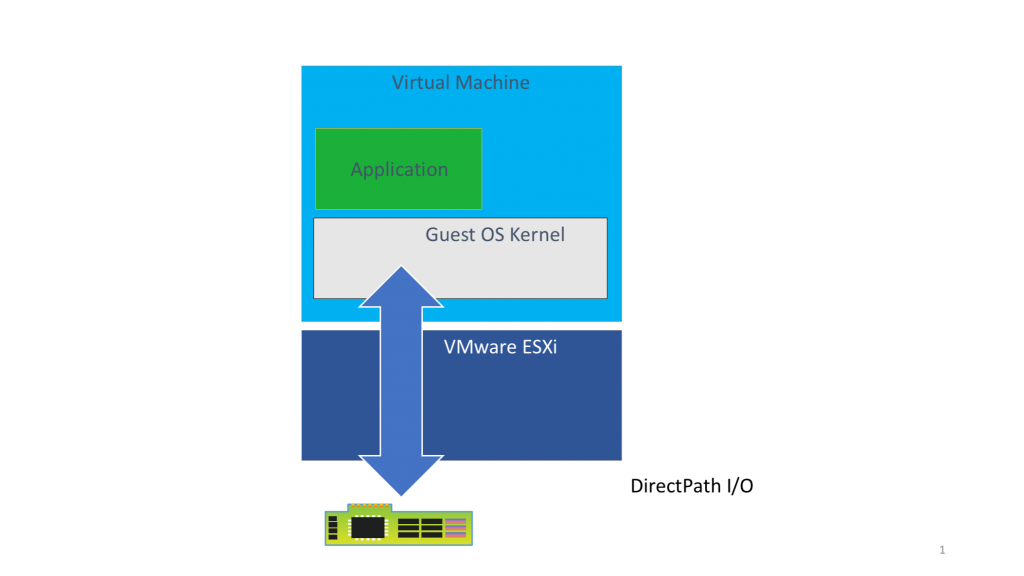

Figure 1: An outline architecture of VMDirectPath I/O mode for GPU access in vSphere

The VMDirectPath I/O mode of operation allows the GPU device to be accessed directly by the guest operating system, bypassing the ESXi hypervisor. This gives GPU performance on vSphere that is very close to that of the same GPU on a native system (within 4-5%). For more information on VMware’s extensive performance testing of GPUs on vSphere, check here

The main reasons for using the passthrough approach to exposing GPUs on vSphere are:

- you are taking your first steps in exposing GPUs to virtual machines, so as to move end users away from storing their data and executing workloads on physical workstations;

- there is no need to share the GPU among different VMs, because a single application will consume one or more full GPUs (methods for sharing GPUs are covered in other articles in this series);

- you need to replicate a public cloud instance of an application, but using a private cloud setup.

An important point to note is that the passthrough option for GPUs works without any third-party software driver being loaded into the ESXi hypervisor.

When using passthrough mode, each GPU device is dedicated to a single VM and there is no sharing of GPUs among the VMs on a server. The vSphere features vMotion, Distributed Resource Scheduler (DRS) and Snapshots are not supported for a virtual machine that uses a GPU in this way.

NOTE: A single virtual machine can make use of multiple physical GPUs in passthrough mode. You can find a description of this mode of operation here

1. vSphere Host Server Setup for Direct Use of a GPU


In this section, we give the detailed instructions for enabling a GPU device in passthrough mode on a vSphere host server. Section 2 deals with the separate setup steps for a VM that will use the GPU.

Firstly, you should check that your GPU device is supported by your host server vendor and that it can be used in “passthrough” mode. In general, most GPU devices can be used in this way.

Secondly, you need to establish whether your PCI GPU device maps memory regions whose size in total is more than 16GB. The Tesla P100 is an example of such a GPU card. The higher-end GPU cards typically need this or higher amounts of memory mapping. These memory mappings are specified in the PCI BARs (Base Address Registers) for the device. Details on this may be found in the GPU vendor documentation for the device. More technical details on this are given in section 2.2 below.

One procedure for checking this mapping is given in this article

NOTE: If your GPU card does NOT need PCI MMIO regions that are larger than 16GB, then you may skip over section 1.1 (“Host BIOS Setting”), section 2.1 (“Configuring EFI or UEFI Boot Mode”) and section 2.2 (“Adjusting the Memory Mapped I/O Settings for the VM”) below.

1.1 Host BIOS Setting

If you have a GPU device that requires 16GB or above of memory mapping, find the vSphere host server’s BIOS setting for “above 4G decoding”, “memory mapped I/O above 4GB” or “PCI 64-bit resource handling above 4G” and enable it. The exact wording of this option varies by system vendor, and the option is often found in the PCI section of the server’s BIOS menu. Consult your server vendor or GPU vendor on this.

1.2 Editing the PCI Device Availability on the Host Server

An installed PCI-compatible GPU hardware device is initially recognized by the vSphere hypervisor at server boot-up time without having any specific drivers installed into the hypervisor.

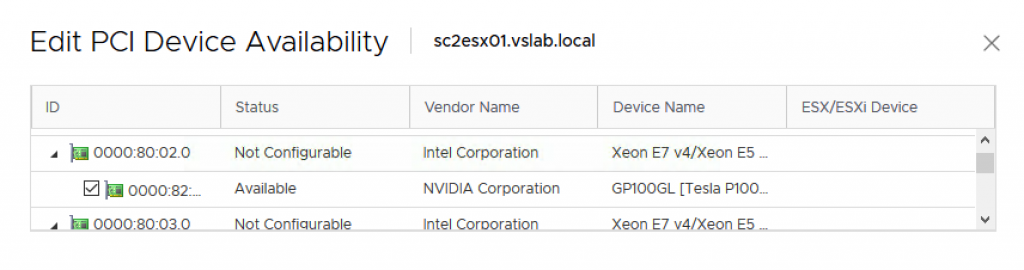

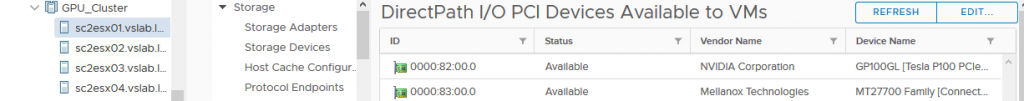

vSphere recognizes all PCI devices in this way. You can see the list of PCI devices found in the vSphere Client tool by choosing the particular host server you are working on, and then following the menu choices

“Configure” -> “Hardware” -> “PCI Devices” -> “Edit” to see the list, as seen in an example in Figure 2 below.

If the particular GPU device has not been previously enabled for DirectPath I/O, you can place it in DirectPath I/O (passthrough) mode by clicking the check-box on the device entry, as seen in the NVIDIA device example shown in Figure 2.

Figure 2: Editing the PCI Device for DirectPath I/O availability

Once you have saved this edit using the “OK” button in the vSphere Client, reboot your host server. After the server is rebooted, use the menu sequence

“Configure -> Hardware -> PCI Devices”

in the vSphere Client to get to the window entitled “DirectPath I/O PCI Devices Available to VMs”. You should now see the devices that are enabled for DirectPath I/O, as shown in the example in Figure 3. The page shows all devices that are available for DirectPath I/O access, including the NVIDIA GPU and, as another example, a Mellanox device.

Figure 3: The DirectPath I/O enabled devices screen in the vSphere Client

2. Virtual Machine Setup

Once the GPU card is visible as a DirectPath I/O device on the host server, we then turn to the configuration steps for the virtual machine that will use the GPU.

First, create the new virtual machine in the vSphere Client in the normal way. One part of this process needs special attention: the boot options for the VM.

2.1 Configuring EFI or UEFI Boot Mode

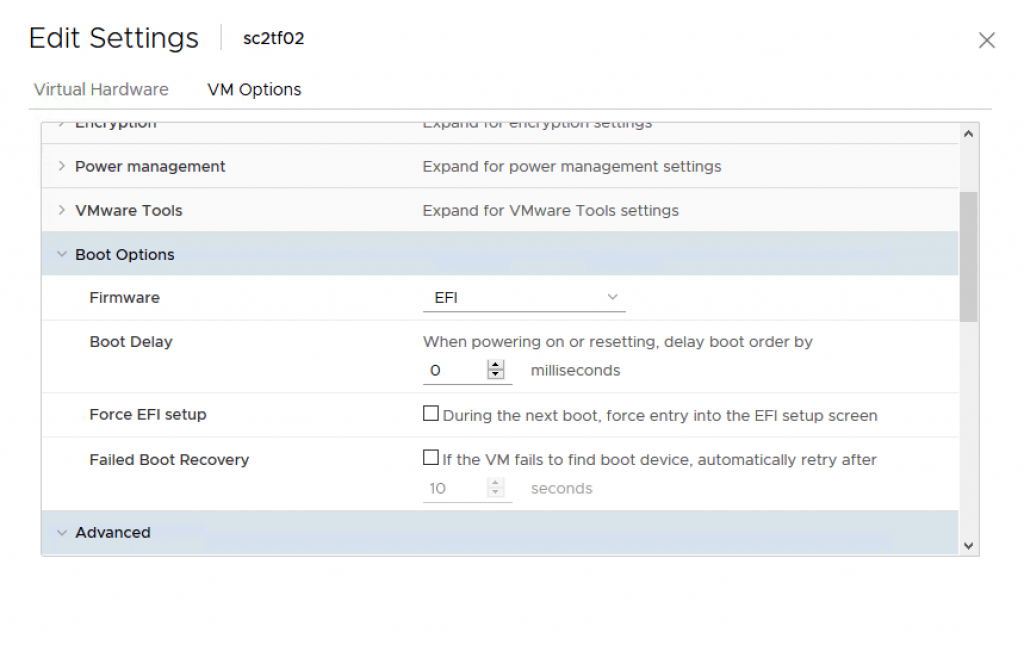

Before installing the guest OS into the VM, verify the Boot Options configuration for the “Firmware” entry shown below.

Your guest operating system within the virtual machine must boot in EFI or UEFI mode for correct GPU use. To access the setting for this, highlight your virtual machine in the vSphere Client and use the menu items:

“Edit Settings -> VM Options -> Boot Options” in order to get to the “Firmware” parameter. Ensure that “UEFI” or “EFI” is selected in the Firmware area, as shown in Figure 4.

Figure 4: Enabling EFI in the Boot Options for a virtual machine in the vSphere Client

2.2 Adjusting the Memory Mapped I/O Settings for the VM

In this section, we delve into the memory mapping requirements of your specific GPU device. If your PCI GPU device maps memory regions of more than 16GB in size, then we will need two specific configuration parameters to be set for the VM in the vSphere Client, as described below.

These memory requirements can be different for the various GPU models. You should first consult your GPU vendor’s documentation to determine the PCI Base Address Registers’ (BARs) memory requirements.

More information on this area for an NVIDIA P100 GPU, for example, can be found here (see Table 3 on page 7).

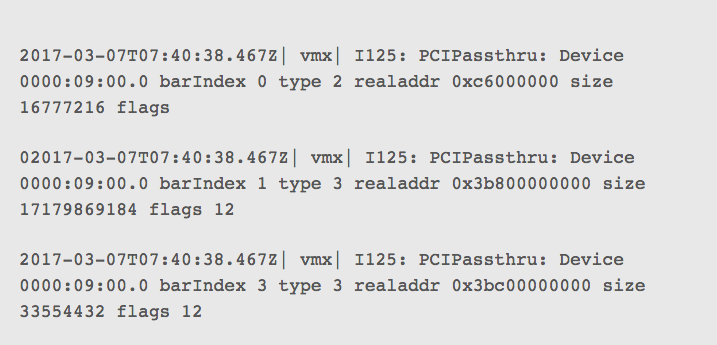

If you cannot determine from the vendor documentation how much memory your GPU device maps, then the procedure to follow is:

- Enable the GPU device in passthrough mode in your virtual machine (without the settings in this section);

- Boot up your virtual machine (this will fail because the GPU hardware is present);

- Examine the virtual machine’s vmware.log file to find those entries that resemble the data shown in Figure 5.

Figure 5: The vmware.log file entries for a virtual machine that is using a GPU

All three entries from the virtual machine’s log file above refer to the same PCI GPU device, the one located at device address 0000:09:00.0. Here, the GPU device has requested to map a total of just over 16GB – the sum of the three “size” entries shown before the word “flags” and measured in bytes.

GPU cards that map more than 16GB of memory (referred to as “high-end” cards) require the instructions in this section to be followed. Those GPU cards that map less than 16GB of memory do not require those instructions.
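The check described above amounts to adding up the “size” values and comparing the total against 16GB. The following is a minimal sketch of that arithmetic in Python. The function names and the three example sizes are illustrative assumptions, not values taken from Figure 5; substitute the byte counts from your own vmware.log file. The comparison follows the “more than 16GB” wording used in this section.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def total_mapped_bytes(region_sizes):
    """Sum the sizes (in bytes) of all memory regions one PCI device maps."""
    return sum(region_sizes)


def needs_large_mmio_settings(region_sizes, threshold_bytes=16 * GIB):
    """True if the device maps more than the threshold (16GB by default)."""
    return total_mapped_bytes(region_sizes) > threshold_bytes


# Hypothetical sizes for one device: two small regions plus one 16GB region.
# Replace these with the "size" values found in your own vmware.log.
example_regions = [16 * MIB, 16 * GIB, 32 * MIB]

total = total_mapped_bytes(example_regions)
print(f"Total mapped: {total} bytes ({total / GIB:.3f} GiB)")
print("Section 2.2 settings needed:", needs_large_mmio_settings(example_regions))
```

With these assumed sizes the total comes to slightly more than 16GB, so the device would fall into the “high-end” category described above.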

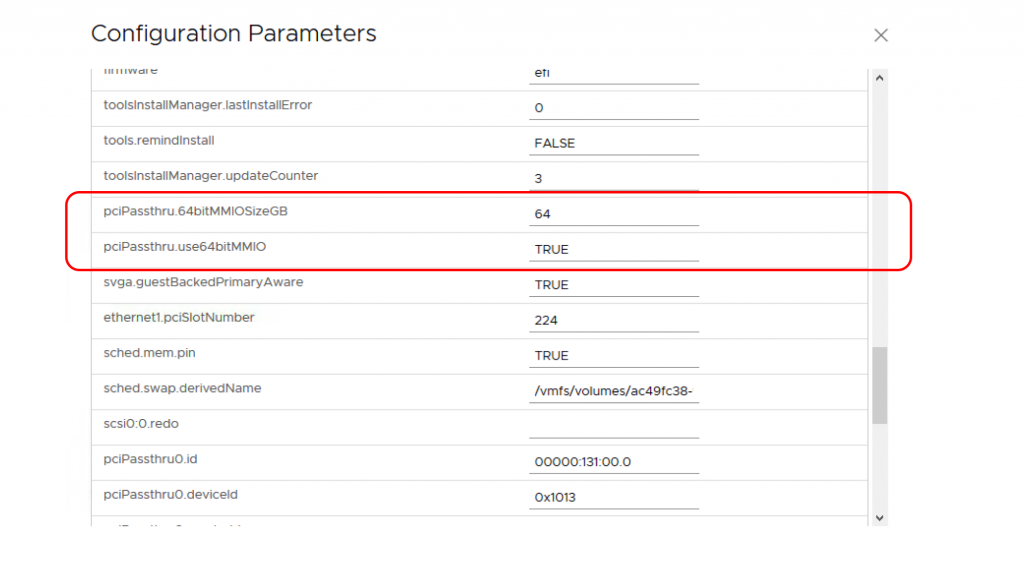

For a GPU device that maps more than 16GB of memory, proceed as follows.

In the vSphere Client again, choose the virtual machine and use the options

“Edit Settings -> VM Options ->Advanced -> Configuration Parameters -> Edit Configuration” to get to the list of PCI-related options shown in Figure 6.

Figure 6: The vSphere Client window for the “pciPassthru” parameters for a virtual machine

Here, you add the following two parameters. The first enables 64-bit MMIO for the VM and is set to TRUE:

pciPassthru.use64bitMMIO="TRUE"

The value of the other parameter, which also appears in the dialog above, is adjusted to suit your specific GPU requirements:

pciPassthru.64bitMMIOSizeGB=<n>

We calculate the value of the “64bitMMIOSizeGB” parameter using a straightforward approach. Count the number of high-end PCI GPU devices that you intend to pass into this VM. This can be one or more GPUs. Multiply that number by 16 and round the result up to the next power of two, that is, the smallest power of two that is strictly greater than the product.

For example, to use passthrough mode with two GPU devices in one VM, the value would be:

2 * 16 = 32, and the next power of two above 32 is 64.

Figure 6 above shows an example of these settings in the vSphere Client for two such passthrough-mode high-end GPU devices being used in a VM.

The largest NVIDIA V100 device’s BAR is 32GB. So, for a single V100 device being used in passthrough mode in a VM, we would assign the value of the 64bitMMIOSizeGB parameter to be 64 (rounding up to the next power of two).
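The calculation above can be sketched in a few lines of Python. This is an illustration of the rule as described in this section, not a VMware tool. The function names are hypothetical, and the `gb_per_gpu` argument is an assumption added so that the same function also covers the V100 example, where the multiplier is 32 rather than 16.

```python
def next_power_of_two_above(n):
    """Smallest power of two strictly greater than n (for n >= 0)."""
    return 1 << n.bit_length()


def mmio_size_gb(gpu_count, gb_per_gpu=16):
    """Value for pciPassthru.64bitMMIOSizeGB, following the rule in the text."""
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return next_power_of_two_above(gpu_count * gb_per_gpu)


def config_parameters(gpu_count, gb_per_gpu=16):
    """The two VM configuration parameters as a name -> value mapping."""
    return {
        "pciPassthru.use64bitMMIO": "TRUE",
        "pciPassthru.64bitMMIOSizeGB": str(mmio_size_gb(gpu_count, gb_per_gpu)),
    }


# Two GPUs at 16GB each: 2 * 16 = 32, next power of two above 32 is 64.
for name, value in config_parameters(2).items():
    print(f'{name}="{value}"')

# One device with a 32GB BAR, as in the V100 example.
print(mmio_size_gb(1, gb_per_gpu=32))
```

The strict “greater than” rounding is what makes both worked examples in this section come out to 64; a plain “round up to a power of two” would leave 32 unchanged.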

2.3 Installing the Guest Operating System in the VM

Install the Guest Operating System into the virtual machine. This should be an EFI or UEFI-capable operating system, if your GPU card has a requirement for large PCI MMIO regions.

The standard vendor GPU driver must also be installed within the guest operating system.

2.4 Assigning a GPU Device to a Virtual Machine

This section describes the assignment of the GPU device to the VM. Power off the virtual machine before assigning the GPU device to it.

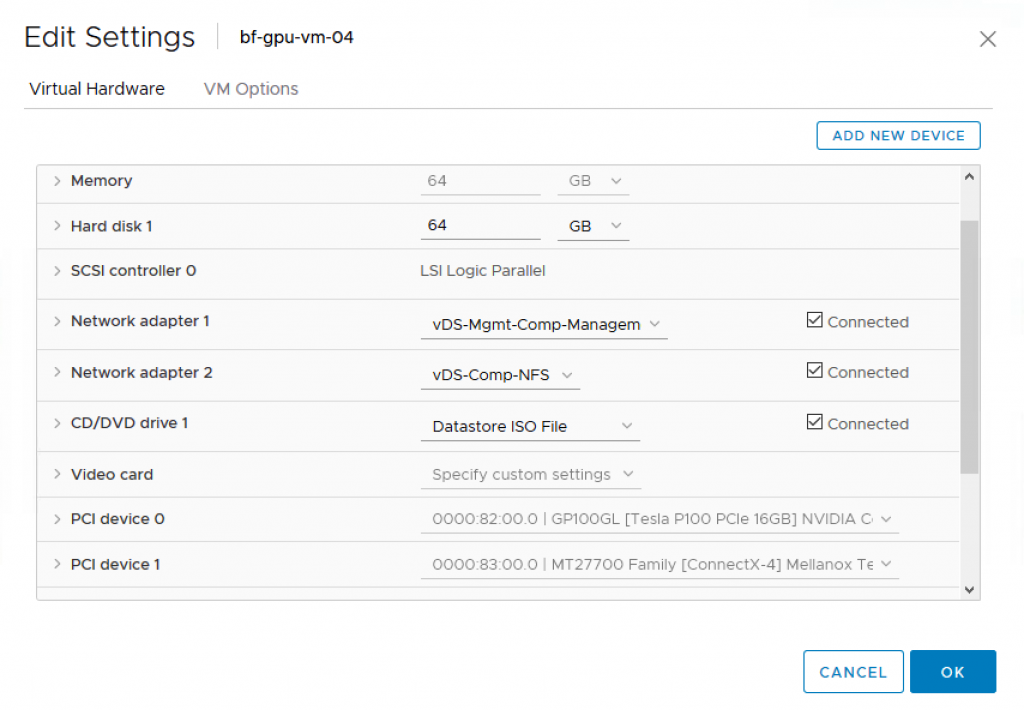

To enable a virtual machine to have access to a PCI device, in the vSphere Client, highlight the virtual machine, use the “Edit Settings” option and scroll down to the PCI Device list. If your device is not already listed there, use the “Add New Device” button to add it to the list. Once added, your virtual machine settings should look similar to those shown in Figure 7. In this example, the relevant entry is “PCI Device 0”.

Figure 7: PCI devices that are enabled in a virtual machine using the “Edit Settings” option.

You can read more about this procedure in the knowledge base article here

2.5 Memory Reservation

Note that when a PCI device is assigned to a VM, the virtual machine must have a memory reservation equal to its full configured memory size. This is done in the vSphere Client by choosing the VM and using “Edit Settings -> Virtual Hardware -> Memory” to access and change the value in the Reservation area.

Finally, power on the virtual machine. After powering it on, log in to the guest operating system and check that the GPU card is present:

- On a Linux virtual machine, use the command

“lspci | grep -i nvidia”

(the -i option makes the match case-insensitive, because lspci reports the vendor name in upper case as “NVIDIA”)

or

- On a Windows operating system, use the “Device Manager” from the Control Panel to check the available GPU devices
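For Linux guests, the same check can be scripted, for example as part of a VM validation step. The sketch below is one possible approach, assuming that the lspci utility is installed in the guest; the function names are hypothetical. It matches the vendor name without regard to case, since lspci normally prints it as “NVIDIA”.

```python
import shutil
import subprocess


def gpu_lines(lspci_output, vendor="nvidia"):
    """Return the lspci output lines that mention the vendor, ignoring case."""
    needle = vendor.lower()
    return [line for line in lspci_output.splitlines() if needle in line.lower()]


def list_gpus(vendor="nvidia"):
    """Run lspci in the guest and return the matching device lines.

    Returns an empty list if lspci is not installed (an assumption of this
    sketch; you may prefer to raise an error instead).
    """
    if shutil.which("lspci") is None:
        return []
    result = subprocess.run(["lspci"], capture_output=True, text=True)
    return gpu_lines(result.stdout, vendor)


if __name__ == "__main__":
    devices = list_gpus()
    if devices:
        for line in devices:
            print(line)
    else:
        print("No matching GPU device found")
```

An empty result suggests that the PCI device was not assigned to the VM or that the VM did not pick it up at power-on.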

The GPU is now ready for application use in passthrough mode.

3. Multiple Virtual Machines using Passthrough Mode

To create multiple identical VMs, it is recommended that you first clone a virtual machine that does not yet have the PCI assignments mentioned above, and then assign the specific device(s) to each virtual machine afterwards.

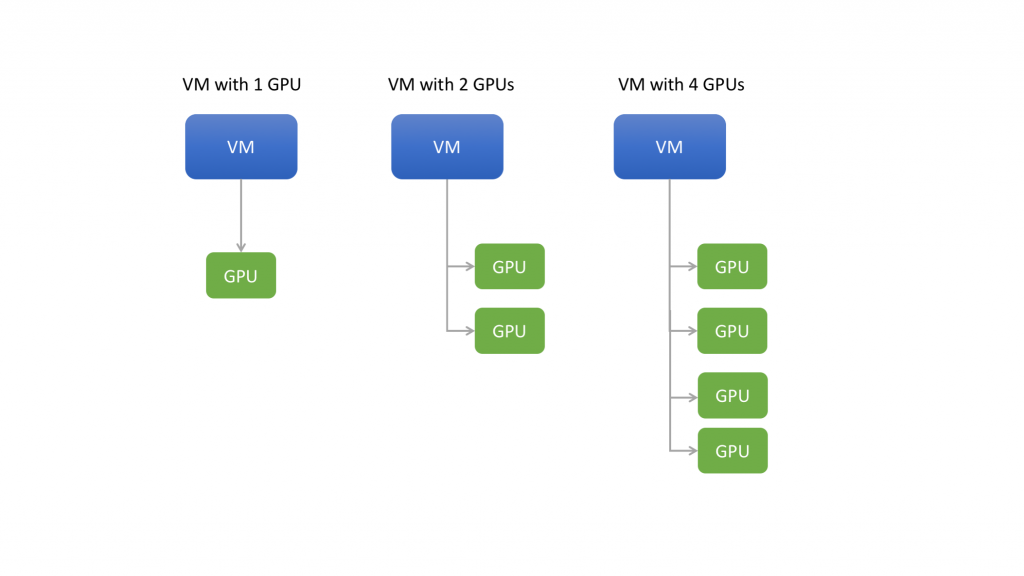

3.1 Multiple GPUs Assigned to One VM

You can repeat the process described in sections 1 and 2 above in order to dedicate more than one GPU to one virtual machine. This scenario is shown in Figure 8 below.

Figure 8: One or more GPUs allocated fully to one virtual machine in passthrough mode

Using this DirectPath I/O method, one or more full GPUs are allocated to only one virtual machine. Multiple virtual machines on the same host server would each require separate GPU devices to be fully allocated to them, if using passthrough mode.

References

For further detailed information on this subject consult these articles:

How to Enable Compute Accelerators on vSphere 6.5 for Machine Learning and Other HPC Workloads

Configuring VMDirectPath I/O pass-through devices on a VMware ESX or VMware ESXi host

VMware vSphere VMDirectPath I/O: Requirements for Platforms and Devices

Machine Learning on VMware vSphere 6 with NVIDIA GPUs – VMware VROOM! Blog Site