Introduction

Undergraduate students at the University of California, Berkeley participated in this project in collaboration with VMware to develop three real-world Machine Learning use cases.

Machine Learning is a hot topic, and companies of every size must leverage its power to remain competitive. Over the past few years, VMware has made substantial investments in improving vSphere® performance to support Machine Learning. This engagement demonstrated that a machine learning model can run on VMware in a matter of minutes, compared with the days or weeks it can take on other popular platforms.

What is Machine Learning?

“Machine learning is a subset of Artificial Intelligence (AI) in the field of computer science that often uses statistical techniques to give computers the ability to ‘learn’ with data, without being explicitly programmed.”

In an environment where everyone is concerned with personal and data security, ML has multiple uses in the areas of face detection, face recognition, image classification, and speech recognition. Software security companies use ML in the fight to stop computer viruses and spam and to detect fraud. Organizations such as Google and its competitors use it for online search. There are even more applications in financial services, healthcare, and customer service. For example, Amazon® uses ML to improve customer experiences in key areas of its business, including product recommendations, substitute product prediction, fraud detection, metadata validation, and knowledge acquisition. From predicting the weather to diagnosing diseases to driving cars, ML has many applications.

According to IDC, spending on AI and ML is expected to grow from $12B in 2017 to $57.6B by 2021. According to a MarketsAndMarkets report, the market size for machine learning is expected to grow from $1.03 Billion in 2016 to $8.81 Billion by 2022, at a Compound Annual Growth Rate (CAGR) of 44.1 percent during the forecast period. Market growth will be fueled by continuous technological advancements and the exploding growth of data.

Why VMware and Machine Learning?

ML takes a lot of computational power, so the servers needed to support it can be expensive. To get around this problem, many organizations have moved their ML to the cloud with solutions offered by Amazon, Google, and Microsoft. With a cloud platform, you can spin up 10,000 machines and run them for an hour to get your answer, which is significantly less expensive than procuring thousands of servers.

Unfortunately, these ML cloud solutions have a few downsides. First, they come with management tools that are cumbersome to use and take time to learn. Second, they limit applications to a single cloud environment. In a hybrid- or multi-cloud deployment, you will have problems with performance, cost, and usability if, for example, your database is on-premises and your ML solution is in one of these public clouds.

On the other hand, VMware offers a best-in-class hypervisor from a performance perspective and a popular, best-in-class management structure. Because VMware is a popular IT tool, your IT team is already familiar with it. They don’t need to learn anything new to leverage VMware for ML.

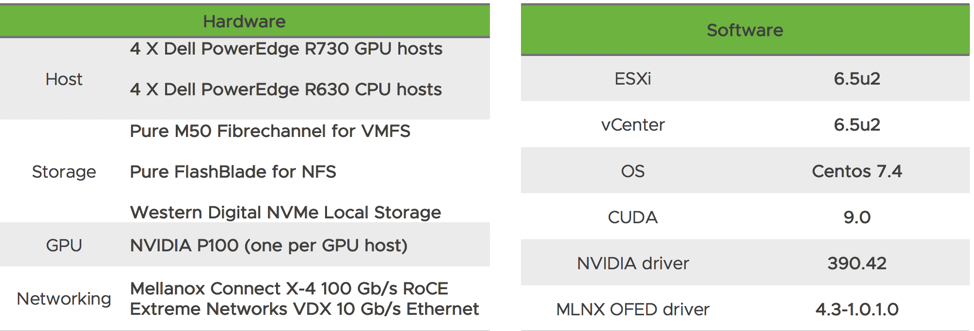

The Virtualized GPU Infrastructure Setup

Figure 1: Hardware and Software Components used for the use cases

The ML solution used a four-node vSphere cluster with Dell® R730 servers containing one NVIDIA Tesla® P100 card each. The GPUs were set up in pass-through mode for direct access from a TensorFlow™ VM. Each node had one TensorFlow Virtual Machine (VM) with dedicated access to the GPU card.

TensorFlow

Created by the Google Brain team, TensorFlow is a popular open source software library for high performance numerical computation. It comes with strong support for machine learning and deep learning and the flexible numerical computation core is used across many scientific domains. TensorFlow works across a multitude of platforms — from desktops to clusters of servers to mobile and edge devices — and can leverage hardware accelerators for its high-performance needs.

TensorFlow uses data flow graphs to perform numerical computations. It is highly flexible, portable, can handle complex computational frameworks, and supports multiple programming languages. The team used TensorFlow for developing and deploying real-world use cases for machine learning.

TensorFlow Setup

The team used CentOS® 7.x as the Linux® OS for the TensorFlow VMs. They followed the instructions at Linux TensorFlow Install to install the TensorFlow components and the GPU version of TensorFlow, and the instructions from NVIDIA to download and install CUDA and cuDNN. Lastly, the team converted the VM into a template for cloning and deployment.
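After a VM is cloned from the template, a quick check that TensorFlow can actually see the pass-through GPU is useful. The following is a minimal sketch, not the team's procedure: it assumes TensorFlow 2.1 or later (newer than the release the team is likely to have used), and `visible_gpus` is a hypothetical helper name.

```python
def visible_gpus():
    """Return the names of the GPUs TensorFlow can see, or [] if there are none.

    Also returns [] when TensorFlow is not installed, so the check never crashes.
    """
    try:
        import tensorflow as tf  # imported lazily so the script runs without it
    except ImportError:
        return []
    # tf.config.list_physical_devices is available in TensorFlow 2.1 and later.
    return [device.name for device in tf.config.list_physical_devices("GPU")]


if __name__ == "__main__":
    gpus = visible_gpus()
    print(f"TensorFlow sees {len(gpus)} GPU(s): {gpus}")
```

On a VM configured as described above, with one card passed through, one would expect a single entry in the list. An empty list usually points to a driver, CUDA, or cuDNN installation problem rather than a TensorFlow one.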

Real-World Machine Learning Use Cases

Three real-world machine learning use cases were chosen for this study, with the algorithms developed by the UC Berkeley students. The first two use cases leveraged a single instance TensorFlow for GPU-based processing, while the third use case was deployed on a distributed TensorFlow infrastructure.

Use Case #1: Image Processing

The Objective:

The objective of this use case was to demonstrate that VMware high-performance computing servers can speed up the time it takes to train and test a solution that emulates the artistic style of famous artists and “repaints” a photograph or picture with that style. As training progresses, the difference between the generated image and the two source images is gradually reduced until a recognizable image emerges.

The Data:

The algorithm was built on a pre-existing image-recognition model and needed only one content image and one style image to train iteratively.

The Algorithm:

The architecture computed the difference between the generated image and the content image, as well as the difference between the generated image and the style image. In each iteration, it calculated both differences and learned how to minimize them.

The algorithm trained the network for 50 epochs with 10,000 individual images per epoch to maximize the output of the network.
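The two differences described above are typically combined into a single weighted loss that the training loop minimizes. The sketch below illustrates the idea in plain Python. It assumes the commonly used Gram-matrix measure for the style difference, which the source does not confirm, and it uses tiny hand-written feature maps in place of features from the pre-trained image-recognition model. All function names are hypothetical, and the default weights are simply mid-range values from the weight ranges reported for this use case.

```python
def mse(a, b):
    """Mean squared difference between two equally sized flat lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def flatten(rows):
    """Turn a list of rows into one flat list."""
    return [value for row in rows for value in row]


def gram_matrix(features):
    """Channel-by-channel correlations; features is a list of flat channels."""
    return [[sum(p * q for p, q in zip(ci, cj)) for cj in features] for ci in features]


def total_loss(generated, content, style, content_weight=1.0, style_weight=5.0):
    """Weighted sum of the content difference and the style difference."""
    content_loss = mse(flatten(generated), flatten(content))
    # Assumption: style is compared through Gram matrices, not raw features.
    style_loss = mse(flatten(gram_matrix(generated)), flatten(gram_matrix(style)))
    return content_weight * content_loss + style_weight * style_loss


if __name__ == "__main__":
    generated = [[1.0, 2.0], [3.0, 4.0]]  # two channels, two positions each
    content = [[1.0, 2.0], [3.0, 6.0]]
    style = [[1.0, 2.0], [3.0, 4.0]]
    print(total_loss(generated, content, style))
```

Raising `style_weight` relative to `content_weight` pushes the optimizer toward the painting's texture, while lowering it keeps the result closer to the photograph.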

The Results:

The speed of the GPU-enabled virtual machines was exceptional and far outpaced conventional non-GPU virtual machines. Whereas a non-GPU-enabled VM took 500 hours to process 10,000 images, the GPU-enabled VM took 11 hours. The extreme computational capabilities of GPUs can be combined with the efficiency and sharing of vSphere environments to provide a robust infrastructure for machine learning workloads.
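The gap between the two runs can be expressed either as a speedup factor or as the share of run time saved. The short sketch below works through both using the 500-hour and 11-hour figures above; the function names are illustrative only.

```python
def speedup(baseline_hours, accelerated_hours):
    """How many times faster the accelerated run is than the baseline."""
    return baseline_hours / accelerated_hours


def time_saved_percent(baseline_hours, accelerated_hours):
    """Percentage of the baseline run time that the accelerated run eliminates."""
    return 100.0 * (1.0 - accelerated_hours / baseline_hours)


if __name__ == "__main__":
    print(f"speedup: {speedup(500, 11):.1f}x")                  # speedup: 45.5x
    print(f"time saved: {time_saved_percent(500, 11):.1f}%")    # time saved: 97.8%
```

In other words, the GPU-enabled VM was roughly 45 times faster, which corresponds to about 97.8 percent less run time.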

Style Transformation Platform and Examples:

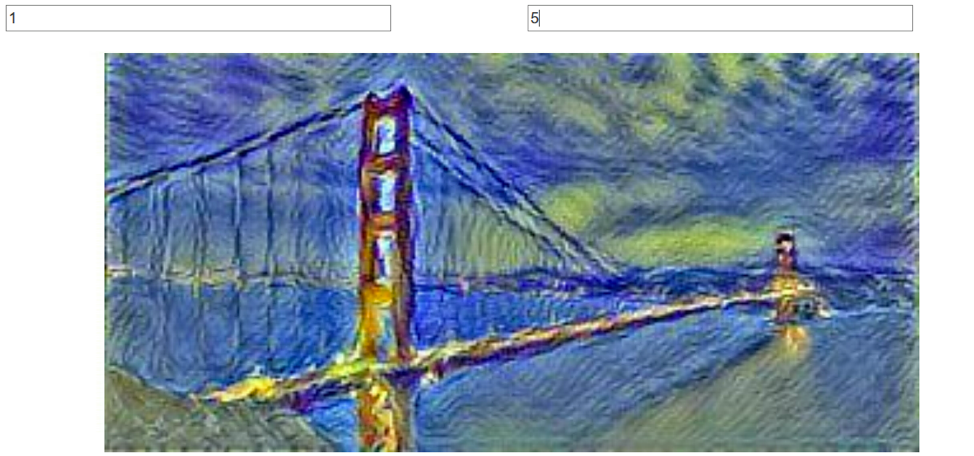

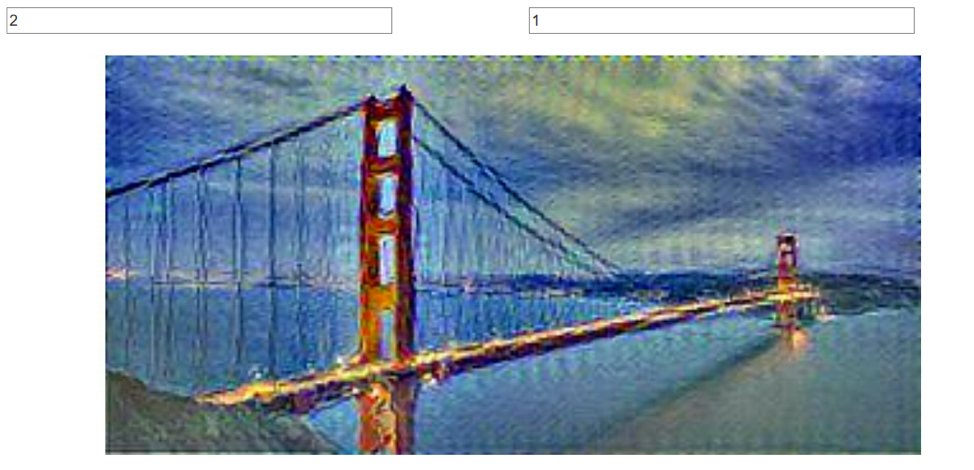

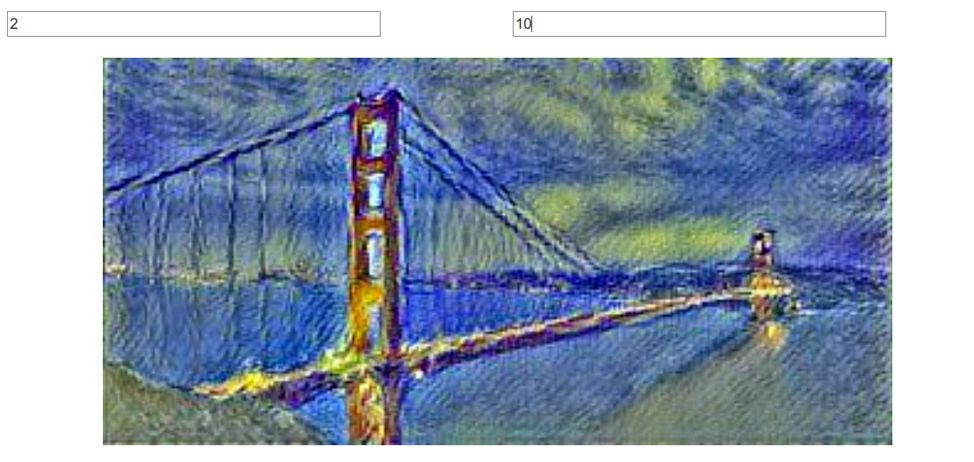

The Golden Gate Bridge image is the content image (a photograph), and the painting is the style image. The training exercise combined these two images under different content and style weights and generated a corresponding output image for each combination. The two weights have different ranges: content weights go from 0 to 2 and style weights go from 0 to 10.

Figure 2: Style Transformation platform

Figure 3: Style transformation example 1

In example 1, the style and content weights are both in the middle of their ranges, and the output image reflects that balance.

Figure 4: Style transformation example 2

In example 2, the style weight is very low, so the transformed image mostly reflects the content image, with little of the style.

Figure 5: Style transformation example 3

In example 3, the style and content weights are both at their maximum, and this is reflected in the transformation.

Use Case #2: Stock Market Prediction

The Objective:

The objective of this use case was to predict the values of the S&P 500 stock market on August 31, 2017. The algorithm compiled five months of historical data and used it in the machine learning process to tune the model and predict the stock values on August 31st.

The Data:

The data was collected via API from historical archives available in Google Finance. The dataset contains approximately 42,000 minutes of data ranging from April 2017 to August 2017 on 500 stocks as well as the total S&P 500 index price.

The Algorithm:

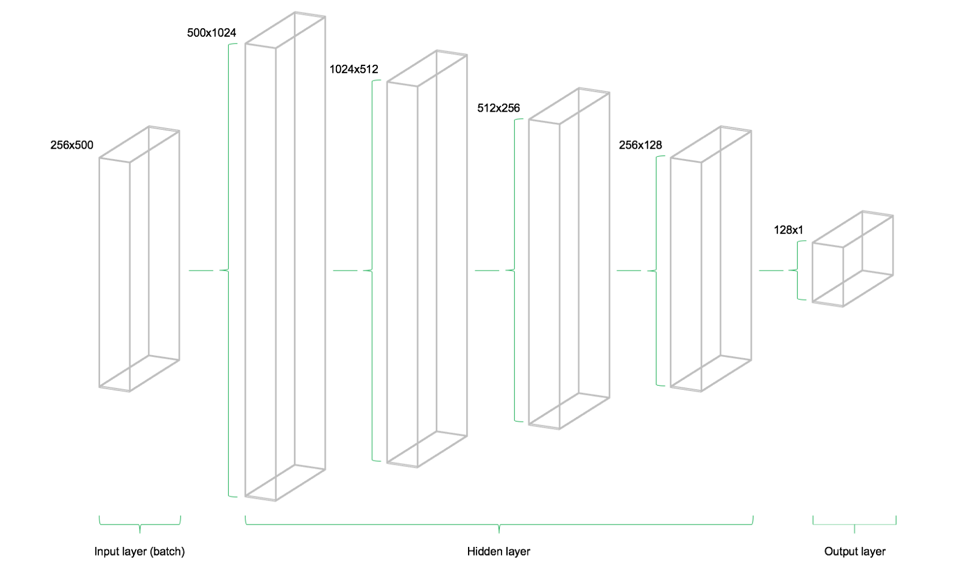

The code creates variables that represent the network’s input, the network’s output, weights, and biases, which were initialized prior to model training. A typical Neural Network model has three major building blocks: the input layer, the hidden layers, and the output layer. The model consists of four hidden layers, with the first having 1024 neurons, the second having 512 neurons, followed by 256 and 128 neurons. The type of Neural Network used is a feed forward network. The hidden layers were transformed using activation functions. The next layer was the output layer, which was used to transpose the data. Lastly, the model used a cost function to measure the deviation between the network’s predictions and the expected outputs. The optimizer handles the computations that update the weights and biases during training. After setting up the code, the algorithm used this model to continue training the network and output predicted stock prices.

Source: Deep Learning for Stock Prediction with TensorFlow

Figure 6: Feed Forward Neural Network Layers used in Stock Price Prediction
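To make the layer structure concrete, the sketch below builds a feed-forward network with the four hidden-layer widths given above and pushes one input vector through it. It is an illustration under stated assumptions rather than the students' TensorFlow code: it assumes 500 inputs (one per stock), a single output, ReLU as the activation function, and small uniform random initial weights, none of which the source specifies. It is written in dependency-free Python, and every function name is hypothetical.

```python
import random

HIDDEN_SIZES = [1024, 512, 256, 128]  # hidden-layer widths given in the text


def count_parameters(n_inputs, hidden_sizes, n_outputs=1):
    """Total weights plus biases for a fully connected feed-forward network."""
    sizes = [n_inputs, *hidden_sizes, n_outputs]
    return sum(fan_in * fan_out + fan_out for fan_in, fan_out in zip(sizes, sizes[1:]))


def init_layers(n_inputs, hidden_sizes, n_outputs=1, seed=0):
    """Create one (weights, biases) pair per layer: random weights, zero biases."""
    rng = random.Random(seed)
    sizes = [n_inputs, *hidden_sizes, n_outputs]
    layers = []
    for fan_in, fan_out in zip(sizes, sizes[1:]):
        weights = [[rng.uniform(-0.1, 0.1) for _ in range(fan_in)] for _ in range(fan_out)]
        layers.append((weights, [0.0] * fan_out))
    return layers


def forward(layers, inputs):
    """Push one input vector through the network.

    Hidden layers use ReLU (an assumption); the output layer is linear.
    """
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = [
            sum(w * a for w, a in zip(row, activations)) + b
            for row, b in zip(weights, biases)
        ]
        if index < len(layers) - 1:  # apply the activation to hidden layers only
            activations = [max(0.0, value) for value in activations]
    return activations


if __name__ == "__main__":
    # Full-size parameter count under the 500-input, 1-output assumption.
    print(count_parameters(500, HIDDEN_SIZES))  # 1202177
    # A tiny network with the same kind of computation, small enough to run instantly.
    tiny = init_layers(n_inputs=4, hidden_sizes=[8, 4], seed=0)
    print(forward(tiny, [0.1, 0.2, 0.3, 0.4]))
```

Under these assumptions the network has about 1.2 million trainable parameters, which gives a sense of why GPU acceleration helps even for a model of this modest depth.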

The Results:

At the end of all iterations, the model outputs a final loss, with loss being defined as the mean squared error between the output of our model and the expected output. The model is 99 percent accurate with less than a 1 percent error rate, as seen in Figures 7, 8, and 9 below.

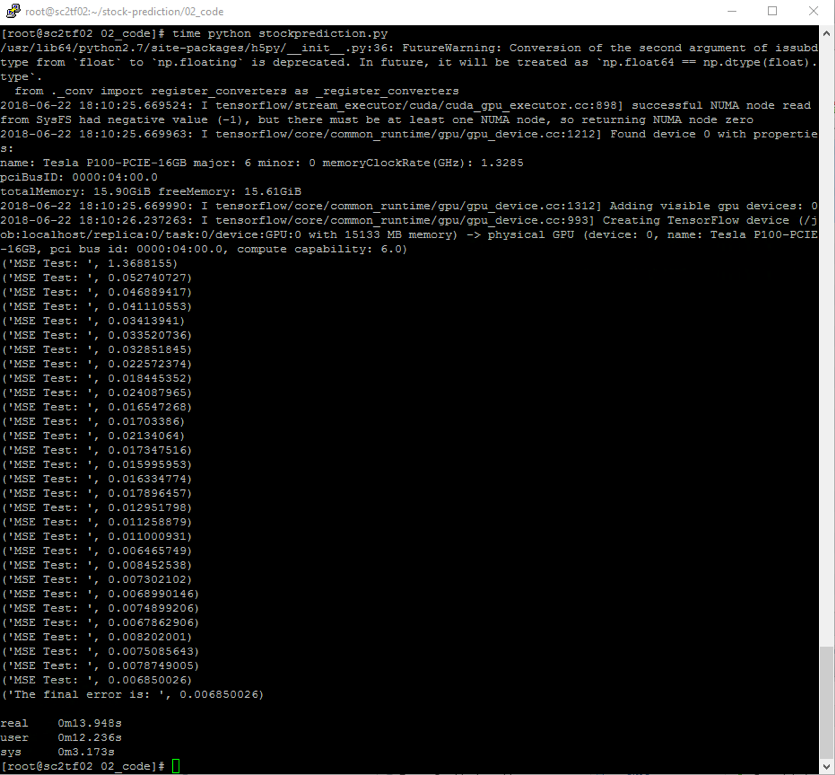

Figure 7: Timed Run with GPU

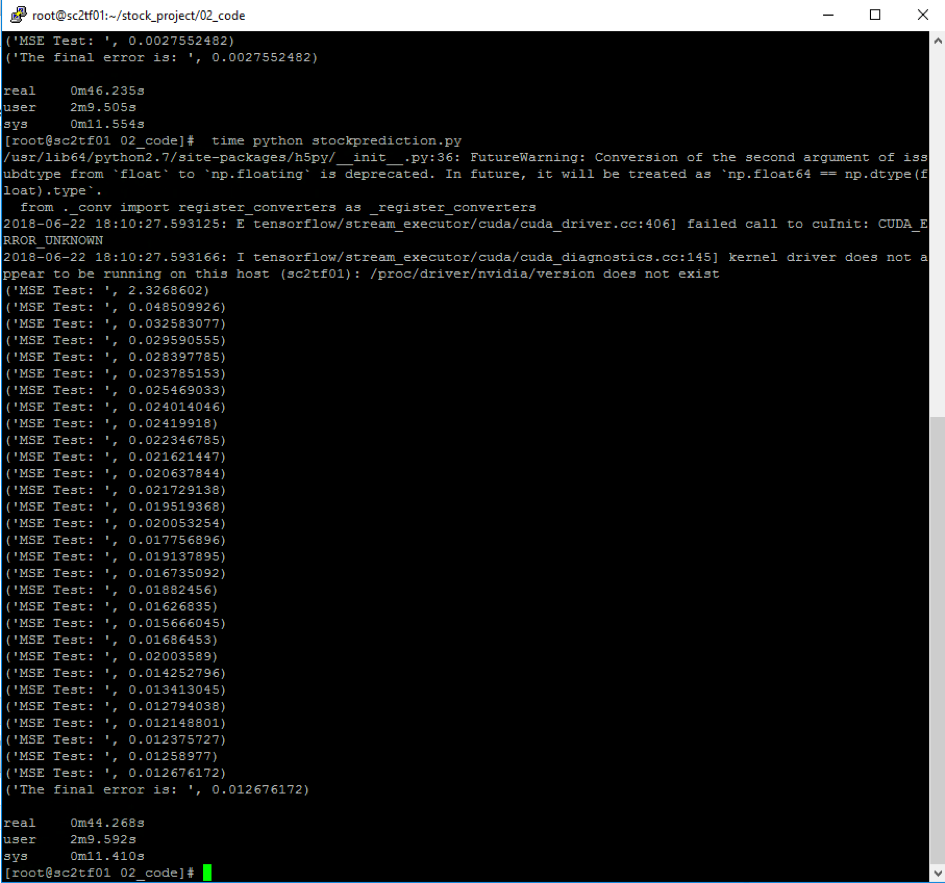

Figure 8: Timed Run without GPU

The GPU accelerated the stock prediction algorithm, which takes almost three times longer without a GPU.

Figure 9: Stock Price Prediction Model error rate reduction

Use Case #3: Closed Captioning

The Objective:

This machine learning task was chosen to demonstrate the superiority of multiple graphics cards running in the VMware high-performance server when compared to traditional CPUs. For this task, the team developed a large captioning model that gave input images a caption based on what it understood in the scene.

The algorithm trained the network for 150 epochs with 20,000 individual training steps per epoch to maximize the predictive capability of the network.

The Data:

The model used data that was pulled from publicly labelled image sets to retrain a network that was originally trained to recognize images from the ImageNet® dataset.

The Algorithm:

The model used distributed computing to train a very large network, based on work that won a Stanford competition, the ImageNet challenge. The team assigned three different physical computers to perform various individual tasks and pooled the results of those tasks on a single machine. The code for the model is shown in Appendix D.
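The pattern of farming tasks out to several workers and pooling the results on one machine can be sketched as follows. This is not the team's distributed TensorFlow code. It assumes a synchronous data-parallel scheme in which each worker computes a gradient on its own shard of data and a coordinator averages them, which the source does not confirm. Threads stand in for the three physical computers, a one-parameter linear model stands in for the captioning network, and all names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def shard_gradient(weight, shard):
    """Gradient of mean squared error for the model y = weight * x on one shard."""
    return sum(2 * (weight * x - y) * x for x, y in shard) / len(shard)


def pooled_gradient(weight, shards):
    """Run one task per shard in parallel, then average the results in one place."""
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        gradients = list(pool.map(lambda shard: shard_gradient(weight, shard), shards))
    return sum(gradients) / len(gradients)


def train(shards, steps=50, learning_rate=0.05):
    """Plain gradient descent driven by the pooled gradient."""
    weight = 0.0
    for _ in range(steps):
        weight -= learning_rate * pooled_gradient(weight, shards)
    return weight


if __name__ == "__main__":
    # Toy data following y = 3x, split evenly across three workers.
    data = [(float(x), 3.0 * x) for x in range(1, 7)]
    shards = [data[0:2], data[2:4], data[4:6]]
    print(round(train(shards), 3))  # 3.0
```

Because the shards are the same size, averaging the three per-worker gradients gives the same update as computing the gradient over the full dataset on one machine, so the work is divided without changing the result.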

The Results:

After training, the model can take in any image and produce reasonably accurate captions that could pass for a human’s captioning. The network even handles images that challenge a human’s ability. Example output is shown below.

Figure 10: Examples of captions generated by the model

Conclusion

VMware and NVIDIA have been collaborating to leverage GPUs in advanced graphics and general-purpose GPU computing. NVIDIA GPUs perform with minimal overhead on vSphere environments, while still providing the benefits of virtualization, and can significantly speed up training in vSphere Machine Learning environments. For example, the GPU-enabled VMware VM processed images in almost 98 percent less time than a non-GPU VM (11 hours versus 500 hours). Likewise, the stock prediction inference engine took about 66 percent less time on GPU-enabled virtual machines. These use cases have shown that vSphere is a great platform for running general purpose GPU-based machine learning.

Even better, VMware lets you run and scale machine learning processing or stop it when required. Best of all, you can use vSphere to run normal day-to-day operations and use the same hypervisor to extend out and answer ML queries. This allows you to get into new lines of business using one set of hardware and one IT skill set.

Acknowledgements

Authors:

- Vineeth Yeevani (UC Berkeley Undergraduate Student)

- Srividhya Shankar (UC Berkeley Undergraduate Student)

- Anusha Mohan (UC Berkeley Undergraduate Student)

Contributors:

- Mohan Potheri (VMware)

- Dean Bolton (VLSS)