One of the most exciting aspects of data science and machine learning (ML) is the pace of technical innovation that is happening in these fields. New tools, platforms, models and data science workbenches are being created at a rapid pace – so rapid, in fact, that it is difficult for the practitioner to keep up with them.

Not only are there a significant number of new emerging tools and platforms, but those already in use are changing quickly too, and so the practitioner is faced with a continuous choice of which versions of his/her platform, language or tool to use at any one time. This adds to the complexity of choosing and tuning the parameters of the ML model itself.

A third source of change is the key step in the machine learning lifecycle where an application or model has been developed, trained and tested in the lab and is now being prepared for production use.

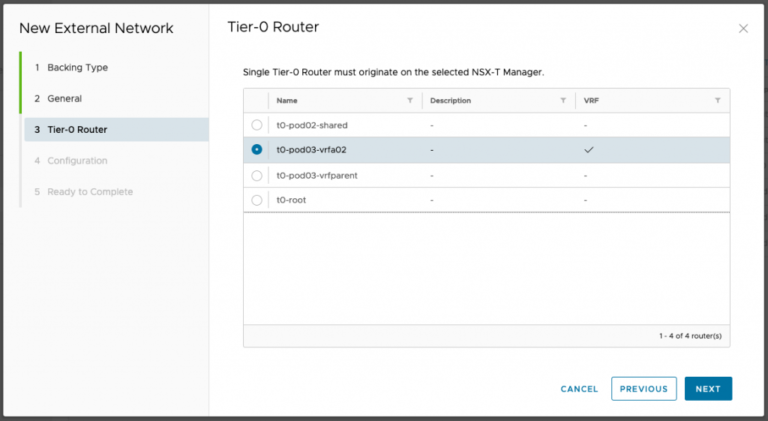

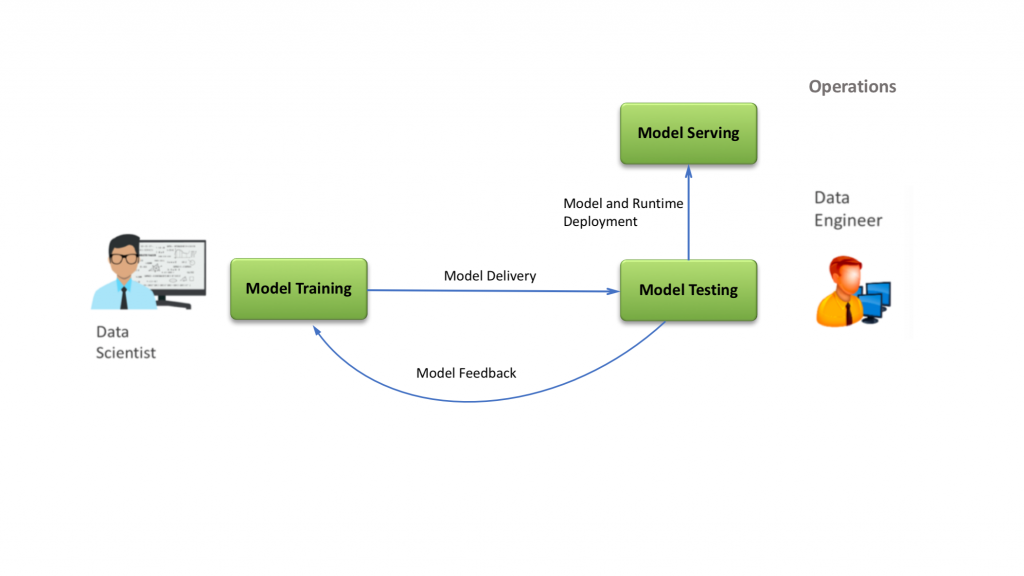

Figure 1: Machine learning model development, testing and delivery to productization in the data science lab, with different roles

Models and applications are often re-implemented in a new language or on a new platform during this step, for example to achieve production-level scalability. The aim is to confirm that a model that worked on the testing data also works with production quantities of data. During this re-implementation phase, both environments need to be available at once for cross-checking. This is clearly another area where virtualized platforms help.

To help the data scientist deal with these changes in technology, it is useful if he/she has a variety of environments or sandboxes in which to test ideas and techniques, each somewhat independent of the others and isolatable.

It helps also if there is a variety of available data sources that can be drawn on for the input and training data to the model training and testing processes. But dedicating a set of hardware to just one of these competing activities exclusively can be wasteful and expensive.

The goal of this and the following article is to show how a virtualization platform helps solve these change-related problems in the machine learning development and testing lab, and in production. To show this, we use examples from configurations that we have run in our own labs, hosted on VMware vSphere. A second point brought out here is the capability to run different types of workloads while keeping them separate from each other, even though they may be sharing the same collection of hardware.

Multiple Software Platform Versions

One example of a change control issue in tooling emerged in a recent conversation with a research physicist who works for a noted research organization, and who conducts some machine learning modeling to more accurately understand his test data results. He described to me his surprise when he tried different versions of the Python runtime to support TensorFlow models on an ML project. This was not a complaint about Python in particular – the same thing could have happened with other languages. While upgrading to a later version of Python, with the goal of improving application performance, he found that programs that had previously worked no longer did. He had expected that a newer version would at least be backward-compatible.

His ideal solution to this problem was one in which he could quickly clone his full working environment to a new test one, perform the upgrade of the tool and testing on the new one and then revert to his original version if any problems arose, or alternatively continue with the newer version and discard the old if no issues came up. He described this work to us because he wanted to use virtual machines to achieve this.
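The decision at the end of that workflow can be sketched in a few lines of Python. This is a minimal illustration only: the cloning and reverting themselves would be done with the virtualization platform's own tooling and are not shown, and the function and check names (`evaluate_upgrade`, `check_runtime_starts`, `check_model_script_runs`) are hypothetical.

```python
from typing import Callable, Iterable, List, Tuple


def evaluate_upgrade(
    checks: Iterable[Tuple[str, Callable[[], None]]]
) -> Tuple[str, List[str]]:
    """Run each named check in the upgraded clone and decide what to do next.

    Returns ("keep_upgrade", []) when every check passes, otherwise
    ("revert_to_original", [names of the failed checks]).
    """
    failures = []
    for name, check in checks:
        try:
            check()  # a check signals failure by raising any exception
        except Exception:
            failures.append(name)
    decision = "keep_upgrade" if not failures else "revert_to_original"
    return decision, failures


# Hypothetical checks. In practice these would import the ML libraries and
# run a short training or inference step inside the cloned environment.
def check_runtime_starts() -> None:
    import sys
    assert sys.version_info >= (3, 0)


def check_model_script_runs() -> None:
    # Simulated breakage standing in for a program that fails after upgrade.
    raise RuntimeError("call no longer supported by the newer runtime")


decision, failed = evaluate_upgrade([
    ("runtime_starts", check_runtime_starts),
    ("model_script_runs", check_model_script_runs),
])
print(decision, failed)
```

Because the checks run only in the clone, a failure costs nothing more than discarding that clone; the original working environment is never touched.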

Machine Learning Clusters Running in Parallel

Our second example below shows a set of distributed Spark-based machine learning and analytics query systems in concurrent use, rather than a single machine example.

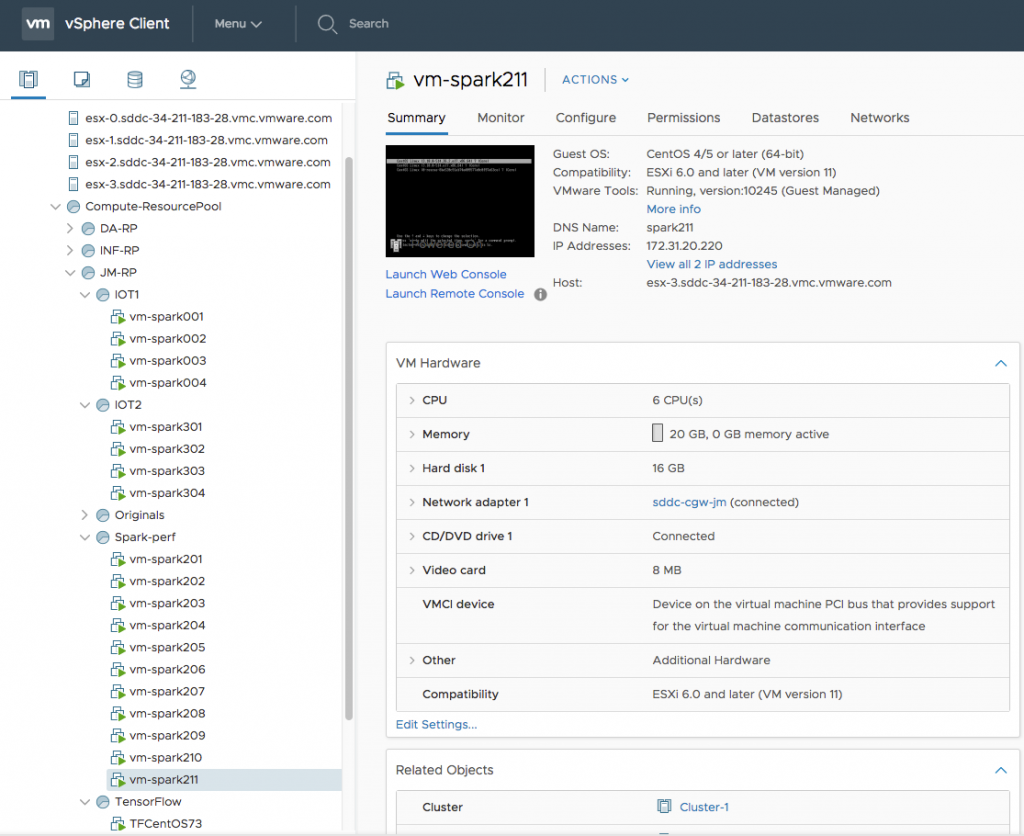

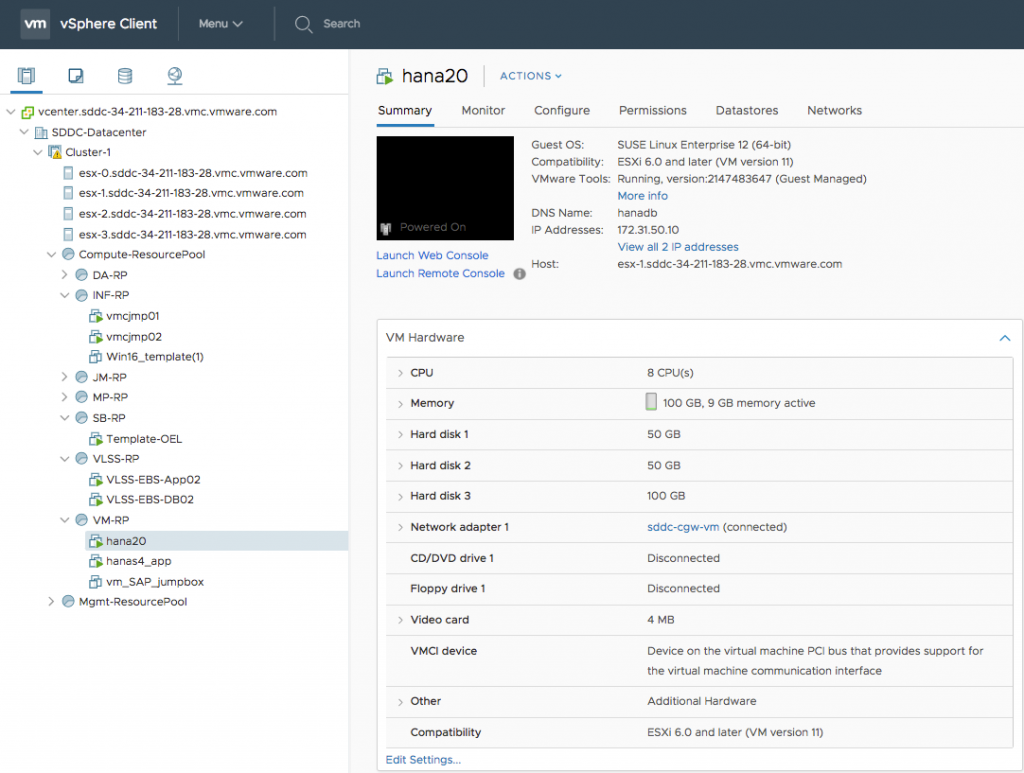

Figure 2 shows a vSphere Client view of a collection of three separate configurations of the Apache Spark environment, used for different purposes, each contained in a set of virtual machines on the vSphere platform. These run alongside other virtual machines containing TensorFlow and Cloudera’s CDH software. All of these environments share the available physical servers (seen in the top left side of the navigation pane) with other software, such as SAP’s HANA and Oracle databases.

Figure 2: The vSphere Client showing multiple Spark clusters and ML tools running in parallel in virtual machines on VMware vSphere

Each of the items on the top left of the navigation pane in Figure 2 that is named “esx-0…” to “esx-3…” is a physical server that is installed with vSphere and fully controlled by it. The physical hosts and virtual machines are managed by the VMware vCenter Server, which is the standard management tool in most VMware installations.

The blue-shaded circular entities labeled “IOT1”, “IOT2”, “Spark-perf” in the left-hand navigation pane represent vSphere Resource Pools – these allow us to separate one Spark cluster from another in terms of the memory and CPU resources they are allowed to use. A vSphere resource pool can be limited in how much of these resources it can supply to its resident virtual machines, from the total physical machine resources of the cluster.
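The effect of a per-pool limit can be pictured with a deliberately simplified model. The sketch below does not call any VMware API and ignores other resource pool settings such as shares and reservations; it only shows that each pool's allocation is capped by its own limit. The pool names come from Figure 2, while the class, function and all numeric values are made up for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PoolLimit:
    """Upper bounds for one resource pool (illustrative values only)."""
    name: str
    cpu_limit_mhz: int
    memory_limit_mb: int


def capped_allocation(
    pool: PoolLimit, cpu_demand_mhz: int, memory_demand_mb: int
) -> Tuple[int, int]:
    """Return the (CPU, memory) a pool can supply: demand, capped at its limits."""
    return (
        min(cpu_demand_mhz, pool.cpu_limit_mhz),
        min(memory_demand_mb, pool.memory_limit_mb),
    )


# Hypothetical limits: the numbers are assumptions, not our lab settings.
iot1 = PoolLimit("IOT1", cpu_limit_mhz=20_000, memory_limit_mb=131_072)
spark_perf = PoolLimit("Spark-perf", cpu_limit_mhz=10_000, memory_limit_mb=65_536)

# A CPU demand spike in IOT1 is held at IOT1's own limit...
print(capped_allocation(iot1, cpu_demand_mhz=35_000, memory_demand_mb=100_000))
# ...while a modest demand in Spark-perf is met in full.
print(capped_allocation(spark_perf, cpu_demand_mhz=4_000, memory_demand_mb=32_000))
```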

The items within those resource pools with green arrows are running virtual machines that are executing the Driver, Worker and Executor processes from Apache Spark at various versions. There are four different versions of Spark in use in our configurations. We are using:

- Apache Spark versions 2.1 and 2.2 for the IoT model training and execution work;

- A vendor’s Spark 2.3 version for separate SQL-based analytics work;

- Apache Spark version 1.6.3 (an older version) for some other unrelated cluster sizing tests based on a Spark-Perf suite that we wanted to execute.

The separate virtual machines containing Spark at version 2.3.0 were used for executing Spark SQL queries against a pre-installed dataset. These various versions of the runtime platform are all executing independent workloads of a different nature.

More details on the IoT streaming data suite and tests done can be found in a separate article here:

This is one example of the insulation from version differences that the virtualization platform provides much more easily than a physical implementation model would. For example, the particular test suite that we used on Apache Spark version 1.6.3 happened to use some older API calls that were no longer supported in later versions, so having this entire toolkit in virtualized form allowed us to continue working with the older version until the test kit programs are eventually changed. This scenario is not uncommon, and it is due in part to the pace of platform innovation mentioned earlier.

Other Workloads

We also wanted to try out some example programs written to leverage the TensorFlow platform by running them in a separate experiment. We set up yet another resource pool for that purpose and executed TensorFlow using Python there. If we had had GPUs available to this cluster, we could have made use of virtualized GPUs as well, independently of all other workloads. Setting your virtualized environment up for GPU use is documented here.

Along with that, we wanted to keep the Cloudera CDH virtual machine work separate from all of the above. More detailed information on the work done on Cloudera CDH on VMware is given here:

These were very separate projects, but they might well have easily interfered with each other, had we used shared physical machines to try to build them incrementally. One might argue that setting the SPARK_HOME variable to point to different directories on a server at different times would achieve the same effect. We did indeed use that method for serialized tests on different versions of Spark installed on the same virtual machines. However, this did not allow our separate teams to use separate versions or sandboxes at the same time on the same hardware. Using virtual machines contained within resource pools made that much easier.
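For reference, the serialized `SPARK_HOME` approach can be sketched in Python as follows. The install directories and the `spark_env` helper are hypothetical; only the `SPARK_HOME` variable itself comes from the text above. The helper builds a separate environment for one child process, so the calling shell is left unchanged.

```python
import os
from typing import Dict, Mapping, Optional

# Hypothetical install locations for the Spark versions on one virtual machine.
SPARK_INSTALLS = {
    "1.6.3": "/opt/spark-1.6.3",
    "2.1": "/opt/spark-2.1",
    "2.2": "/opt/spark-2.2",
}


def spark_env(version: str, base_env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return a copy of the environment with SPARK_HOME set for one Spark version."""
    env = dict(os.environ if base_env is None else base_env)
    home = SPARK_INSTALLS[version]  # raises KeyError for an unknown version
    env["SPARK_HOME"] = home
    # Put this version's bin directory first so its launch scripts are found.
    env["PATH"] = os.path.join(home, "bin") + os.pathsep + env.get("PATH", "")
    return env


env = spark_env("1.6.3", base_env={"PATH": "/usr/bin"})
print(env["SPARK_HOME"])

# Usage (not run here): launch one test against one version at a time, e.g.
#   import subprocess
#   launcher = os.path.join(env["SPARK_HOME"], "bin", "spark-submit")
#   subprocess.run([launcher, "--version"], env=spark_env("1.6.3"), check=True)
```

This keeps the versions apart only for as long as the tests take turns. It does nothing to isolate CPU, memory or installed dependencies between teams working at the same time, which is the gap that separate virtual machines in resource pools fill.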

Some of the data sources for the analytics work needed to be derived from an SAP HANA in-memory database or from Oracle databases. These are shown operating in separate virtual machines and separate resource pools in Figure 3 – and they are sharing the same set of hardware servers as the virtualized Spark clusters.

Figure 3: Example data sources for ML applications – an SAP HANA setup in a set of virtual machines

Vendor-supported connector technology can be used for the purpose of data extraction into the big data cluster. Other sources of data that we used included AWS S3, for sharing data across different clusters, such as those set up for the training and testing phases of the IoT ML model.

Conclusion

This first part of a two-part blog article describes the benefits of using the VMware vSphere virtualization platform for testing and deployment of analytics, machine learning and other data-centric applications, both in the machine learning lab and in getting the workload ready for production deployment. We see that the many different versions of ML platforms and tools, such as Spark, TensorFlow, Python and others, are well suited to concurrent operation in virtualized form.

In part 2 of this series, we explore the scaling of a cluster of Spark worker instances to uncover the sweet spot in performance of an example ML logistic regression application. The lesson there is that scaling up or out is more easily achieved through the use of virtual machines. We conclude that rapid cloning of workers in a cluster allows the architect or data engineer to scale their cluster out or in quickly over time, improving prototyping and testing time for new workloads and applications.