Key Trends in Big Data Infrastructure:

Some of the key trends in big data infrastructure over the past couple of years are:

• Decoupling of Compute and Storage Clusters

• Separate compute virtual machines from storage VMs

• Data is stored and scaled independently of compute

• Dynamic scaling of the compute nodes used for analysis, from dozens to hundreds

• Spark and other newer big data platforms can work with regular filesystems

• Newer platforms store and process data in memory

• New platforms can leverage Distributed Filesystems that can use local or shared storage

• Need for High Availability & Fault Tolerance for master components
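
As one illustration of the dynamic scaling trend, Spark has a dynamic allocation feature that grows and shrinks the number of executors for a job. Note that this scales executors inside an existing cluster, not the virtual machines themselves. The sketch below only renders the relevant properties as `spark-submit` arguments; the helper names and the bounds of 12 and 200 executors are illustrative assumptions, not values from this article.

```python
# Sketch: render Spark dynamic-allocation settings as spark-submit arguments.
# The helper names and executor bounds are illustrative assumptions.

def dynamic_allocation_conf(min_executors, max_executors):
    """Return Spark properties that let the executor count grow and shrink."""
    if not 0 <= min_executors <= max_executors:
        raise ValueError("expected 0 <= min_executors <= max_executors")
    return {
        "spark.dynamicAllocation.enabled": "true",
        "spark.dynamicAllocation.minExecutors": str(min_executors),
        "spark.dynamicAllocation.maxExecutors": str(max_executors),
        # Spark 3.0+: an alternative to running an external shuffle service.
        "spark.dynamicAllocation.shuffleTracking.enabled": "true",
    }


def to_submit_args(conf):
    """Flatten a property dict into ['--conf', 'key=value', ...]."""
    args = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    # Prints the arguments to append to a spark-submit command line.
    print(" ".join(to_submit_args(dynamic_allocation_conf(12, 200))))
```

Keeping the settings in a plain dictionary makes it easy to review the bounds before any job is submitted.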

The Apache Spark Platform:

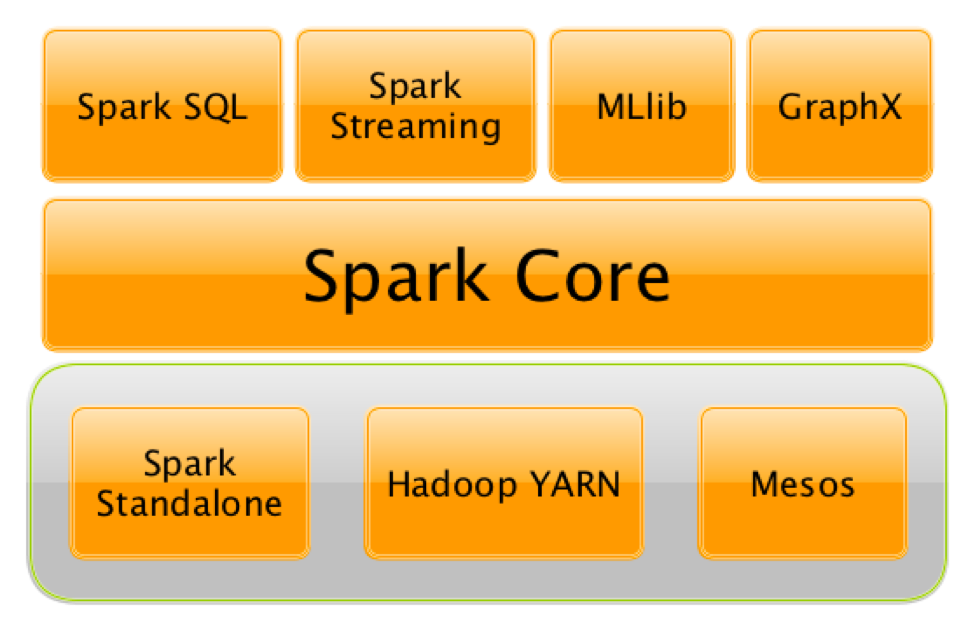

Apache Spark is an open-source cluster computing framework with an in-memory data processing engine. It is used for a wide variety of use cases such as ETL, analytics, machine learning, graph processing, batch processing, and stream processing.

Storage requirements for Apache Spark:

HDFS has been the predominant storage layer in legacy big data environments. It is the ideal platform for MapReduce, which leverages large sequential IO for reads and writes during its multiple phases. The shared storage typically used in virtualized environments is not leveraged for HDFS because of its unique requirements for synchronous IO and large block sizes. Instead, local disks in the vSphere hosts are used for HDFS. Because HDFS depends on these local resources, it limits the use of popular vSphere features such as HA, DRS, and vMotion.

Apache Spark, being an in-memory platform, does not read from and write to disk at every step of processing. Data is read into memory at the beginning of a job, resides in memory through the intermediate steps, and is written back to disk in the final step. Apache Spark can work with any good distributed filesystem and does not mandate the use of HDFS. This makes Apache Spark a great candidate for leveraging existing shared storage in vSphere environments along with HA, DRS, and vMotion.
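
The read-once, process-in-memory, write-once pattern described above can be sketched in PySpark. This is a minimal sketch under stated assumptions: the mount point, the dataset paths, and the `store_id` and `amount` column names are hypothetical, and the distributed filesystem is assumed to be mounted at the same path on every Spark node so that a plain `file://` URI resolves everywhere.

```python
from pathlib import PurePosixPath


def shared_fs_uri(mount_point, relative_path):
    """Build a file:// URI for data on a filesystem mounted identically on every node."""
    full = PurePosixPath(mount_point) / relative_path
    if not full.is_absolute():
        raise ValueError("mount_point must be an absolute path")
    return "file://" + str(full)


def summarize_sales(spark, input_uri, output_uri):
    """Read once, reuse the cached data across steps, write once at the end.

    `spark` is a pyspark.sql.SparkSession. The column names are hypothetical.
    """
    df = spark.read.parquet(input_uri).cache()  # marked for caching, nothing read yet
    row_count = df.count()                      # first action: reads the files and fills the cache
    by_store = df.groupBy("store_id").sum("amount")       # intermediate step, served from the cache
    by_store.write.mode("overwrite").parquet(output_uri)  # single write in the final step
    return row_count
```

With PySpark installed, a session obtained from `SparkSession.builder.getOrCreate()` can be passed as `spark`, with URIs such as `shared_fs_uri("/mnt/gluster", "tpcds/store_sales")`. No HDFS-specific configuration is involved. Spark treats the mounted filesystem like any other local path.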

Distributed Filesystem for Apache Spark:

Apache Spark software works with any local or distributed file system solution available for the typical Linux platform. As mentioned earlier, HDFS is an older file system and big data storage mechanism that has many limitations. These make it incompatible with the common shared storage used in virtualized environments, such as SAN and NAS storage. We opted to use a distributed storage platform that works with Spark software and is compatible with virtualized shared storage. By leveraging virtualized shared storage, the core features of the vSphere platform such as vSphere vMotion, vSphere DRS, vSphere HA, and so on, can be fully utilized for the big data infrastructure.

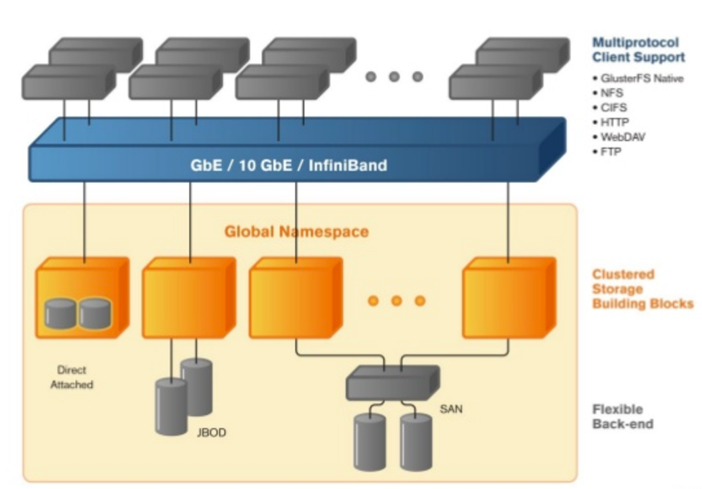

Many file system candidates present themselves. The most common ones are NFS, Ceph, and GlusterFS. The solution must work well with any shared storage backend for the vSphere platform, including block, NFS, and VMware vSAN™. It must also be easy to set up and to scale across hundreds of nodes, if needed, with minimal complexity. A comprehensive shared storage solution for big data must provide the ability to ingest data from multiple sources using the prevailing mechanisms such as NFS, CIFS, HDFS, Amazon S3 (Object), FTP, and so on.

1. NFS, if available, can be leveraged as a data repository for a Spark platform, but it must be made highly available. Its innate horizontal scalability is limited.

2. Ceph is relatively new and is primarily used in container environments as a shared storage backend.

3. GlusterFS is mature and has been commonly used for many years as a distributed file system. It is easy to set up and has its own optimized driver for Linux.
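
Before pointing Spark at a shared path, it can be useful to confirm that the path really sits on the GlusterFS native client rather than on a local disk. On Linux, native GlusterFS mounts normally appear in `/proc/mounts` with the type `fuse.glusterfs`. The sketch below parses that file's format; the host name `gluster1`, the volume `sparkvol`, and the mount point in the sample are hypothetical.

```python
def filesystem_type(path, mounts_text):
    """Return the filesystem type of the longest mount point that contains `path`.

    `mounts_text` uses the /proc/mounts layout: device, mount point, type, options, ...
    """
    best_mount, best_type = "", None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        mount_point, fs_type = fields[1], fields[2]
        prefix = mount_point.rstrip("/") + "/"
        inside = path == mount_point or path.startswith(prefix)
        if inside and len(mount_point) > len(best_mount):
            best_mount, best_type = mount_point, fs_type
    return best_type


# Hypothetical sample in /proc/mounts format; real hosts and volumes will differ.
SAMPLE_MOUNTS = """\
/dev/sda1 / ext4 rw,relatime 0 0
gluster1:/sparkvol /mnt/gluster fuse.glusterfs rw,relatime,user_id=0,group_id=0 0 0
"""

if __name__ == "__main__":
    print(filesystem_type("/mnt/gluster/tpcds", SAMPLE_MOUNTS))
```

On a real node, the second argument would be the text read from `/proc/mounts`.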

Proof of Concept for Virtualized Apache Spark with GlusterFS as Distributed Storage:

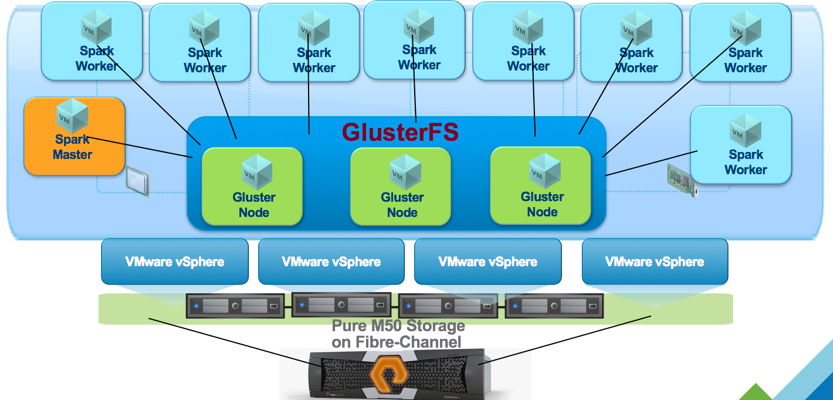

Apache Spark was deployed in a virtual environment initially backed by a Fibre Channel all-flash array from Pure Storage. Standard TPC-DS-like workloads were run to validate the Apache Spark environment.

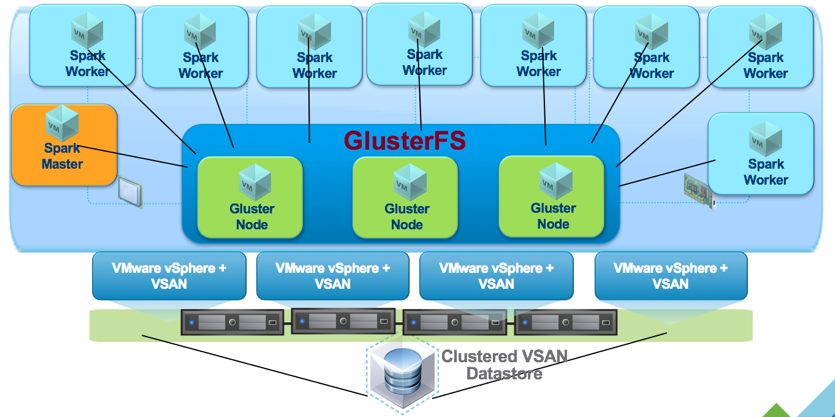

The environment, including the GlusterFS nodes, was then moved to an all-flash vSAN environment. The TPC-DS performance of the FC-attached storage and of vSAN was then compared.

Conclusion:

This proof of concept and its associated test results affirmed that big data platforms such as Apache Spark are excellent candidates for deployment on virtual machines because they are primarily memory-resident. GlusterFS provides a high-performance distributed file system for the Spark platform and newer big data workloads. In lieu of HDFS, Spark software can also use Ceph, NFS, and other distributed file systems. Because GlusterFS supports a wide range of protocols and can ingest data from multiple sources, it can potentially serve as the data lake for contemporary big data environments. All major VMware vSphere capabilities, including VMware vSphere vMotion, VMware vSphere Distributed Resource Scheduler, and VMware vSphere High Availability, can be leveraged to provide redundancy and high availability to all big data components, including those that are single points of failure. Dedicated hardware with local storage is no longer the sole technical choice for modern big data applications.

Further details of the proof of concept and the results can be found in the “New Architectures for Apache Spark and Big Data” white paper.