In the digital age, Artificial Intelligence (AI) stands as one of the most transformative technologies, reshaping industries, economies, and even the ways we interact with the world. From enhancing healthcare diagnostics to optimizing supply chain logistics, AI’s potential seems boundless. However, amid the promise of progress lurk security risks that demand our attention. In this blog, we look at the evolving landscape of AI technology, uncover the security challenges it presents, and explore ways to secure this frontier through prevention and protection strategies such as zero trust security.

Unveiling the security challenges

Alongside its advancements, AI brings security challenges that cannot be ignored. As AI systems become more sophisticated and pervasive, they also become attractive targets for malicious actors seeking to exploit their vulnerabilities. Here are some recent examples:

New Malware Worm Can Poison ChatGPT

Deepfake CFO tricks Hong Kong biz out of $25 million

OpenAI’s GPT-4 can exploit real vulnerabilities by reading security advisories

Snowflake at centre of world’s largest data breach

Essential security practices for AI: Guarding the future

AI’s meteoric rise is underpinned by a vast ecosystem of tools, platforms, and frameworks catering to diverse applications and industries. OpenAI, for example, reportedly runs most of its experiments on Kubernetes. AI is using modern cloud native infrastructure as its foundation. This means that AI applications bring new attack surfaces, and also that today’s Kubernetes-native security solutions can be leveraged to protect AI.

- Cybersecurity threats: As AI becomes integrated into cybersecurity defense mechanisms, it also becomes a target for cyberattacks. Malicious actors can exploit AI vulnerabilities to bypass security measures, launch sophisticated attacks, or orchestrate large-scale data breaches. As AI permeates critical domains such as healthcare, finance, and autonomous systems, the runtime risks of AI deployment become increasingly pronounced. Zero trust runtime security enforcement is the best answer here. Observability and visibility are useful but not enough: malicious behavior needs to be detected and then blocked in real time, so that the attack is stopped before the follow-on actions in the chain can happen.

- Software supply chain security: The sprawling AI supply chain introduces inherent vulnerabilities across the data collection, model training, deployment and maintenance phases. The integration of third-party components, open source libraries, and pre-trained models amplifies the attack surface, exposing AI systems to threats ranging from data poisoning to model inversion attacks. The whole supply chain, or pipeline, therefore needs security and compliance checks built in. These include a secured build system, digital signing, SBOM generation and validation, continuous vulnerability scanning of repositories and registries as well as at runtime, certification and compliance auditing, admission policy enforcement, and ongoing checks of security posture and configuration.

- Full stack must be secured: A full stack runtime environment includes every layer: the operating system, virtual host, hypervisor, the orchestration and management platform, and workloads and services such as AI engines, LLMs or AI agents. Every layer of the stack has to be secured so that none of them becomes a single point of failure. A sophisticated modern workload security solution should integrate with and protect the full stack without slowing down AI applications. That requires security that is automated, high performance and able to scale seamlessly.

- Data privacy and misuse: AI algorithms rely heavily on vast datasets for training and decision-making. However, the collection and use of sensitive data raise concerns about privacy breaches and potential misuse, leading to identity theft, surveillance, or discriminatory practices. We believe AI will keep getting better by evolving and innovating. At the same time, security checks are mandatory to make sure data is not leaked to the wrong places and that people cannot access data they are not authorized to see. Security functions such as data loss prevention (DLP) and role-based access control (RBAC) are practical and important here.

- Security of cloud AI providers: As the Snowflake breach mentioned above demonstrates, enterprises that use third-party cloud AI platforms to process their data can be exposed to data breaches if the cloud provider, or their accounts with it, are compromised. Companies can do everything right to protect their own AI workloads, but if any part of the process sends data to an external AI engine, both the communication channel and the AI engine itself must also be secured.

- Cybersecurity resilience: Strengthening AI cybersecurity resilience involves adopting proactive measures to detect, prevent, and respond to cyber threats effectively. This includes deploying AI-driven cybersecurity solutions for threat detection, anomaly detection, and real-time incident response. With the rise of AI agents, security automation and intelligence will themselves need to be powered by AI in order to keep up.

- Ethical guidelines and regulation: Establishing ethical guidelines and regulatory frameworks is paramount to ensure responsible AI development and deployment. This involves collaboration between policymakers, industry stakeholders and civil society to set standards for AI ethics, accountability and transparency.

- Other concerns: Additional concerns include social media exploits, deepfake phishing, malicious AI tools such as FraudGPT or WormGPT, adversarial attacks, and bias and fairness. This is a new era that requires security vendors and AI researchers to work closely together and solve these problems step by step.
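To make the supply chain checks listed above more concrete, here is a minimal sketch of an admission gate in Python. It is an illustration only, not the API of any product: the `ImageRecord` fields and the function names are hypothetical, and the signature, SBOM and scan results are assumed to have been produced by earlier pipeline stages.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ImageRecord:
    """Hypothetical metadata a pipeline might collect for a container image."""
    name: str
    signature_verified: bool  # result of a signature check done elsewhere
    sbom_components: list[str] = field(default_factory=list)
    critical_cves: list[str] = field(default_factory=list)


def admission_violations(image: ImageRecord) -> list[str]:
    """Return the reasons to reject an image; an empty list means admit."""
    violations = []
    if not image.signature_verified:
        violations.append("image is not signed by a trusted key")
    if not image.sbom_components:
        violations.append("no SBOM attached")
    if image.critical_cves:
        violations.append(
            "critical vulnerabilities: " + ", ".join(image.critical_cves)
        )
    return violations


def admit(image: ImageRecord) -> bool:
    """Admit only images that pass every check."""
    return not admission_violations(image)
```

Returning the list of violations rather than a bare yes/no keeps the decision auditable, which matters for the compliance side of the pipeline. A real admission controller would evaluate rules like these before a workload is scheduled.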

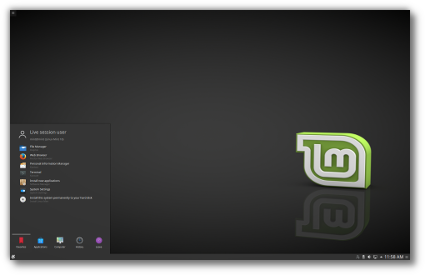

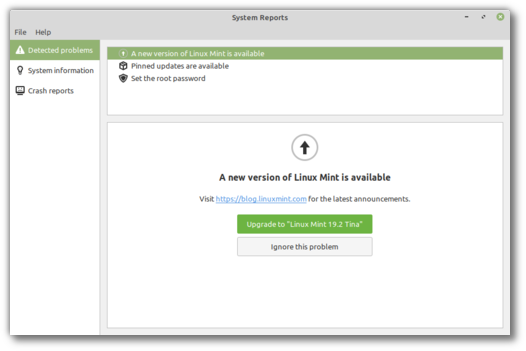

Securing an AI application in production with NeuVector Prime

The recent xz backdoor is a real-world example of how an AI application, like any other software, can inherit serious security holes from its dependencies. So what’s the good news? A proactive zero trust security solution combined with solid supply chain security controls can minimize these types of risks efficiently.
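The zero trust idea can be sketched in a few lines of Python: behavior is denied unless it has been explicitly allowed. This is a simplified illustration and not how any particular product is implemented. The `Event` shape, the workload name `inference-api` and the host `model-store.internal` are all assumptions made up for the example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """A runtime event observed for a workload (hypothetical shape)."""
    workload: str
    kind: str    # "process" or "connection"
    target: str  # process name or destination host


# Allow-list of known-good behavior. Anything not listed here is blocked.
ALLOWED = {
    ("inference-api", "process", "python3"),
    ("inference-api", "connection", "model-store.internal"),
}


def decide(event: Event) -> str:
    """Default deny: allow only events that match the allow-list exactly."""
    key = (event.workload, event.kind, event.target)
    return "allow" if key in ALLOWED else "block"
```

With this allow-list, a shell spawned inside `inference-api` by a backdoored library would be blocked even though no signature for that specific attack exists, because the decision depends on what is known to be good rather than what is known to be bad.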

SUSE NeuVector Prime protects containerized AI applications in the pipeline, in staging and in production.

In many cases, the AI application infrastructure shown above will run partially under the control (including the security policies) of the enterprise, but will also need to leverage cloud-based AI services for some processing. These external connections can be monitored through ingress/egress network policies and WAF/DLP inspection to make sure application exploits are detected and sensitive data is prevented from leaving the enterprise.
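As a rough illustration of the DLP side of that egress inspection, the Python sketch below checks an outbound payload against a few patterns before it is allowed to leave. The two patterns and the function names are assumptions chosen for the example. Production DLP engines use far more robust detection, and this is not a description of NeuVector’s implementation.

```python
from __future__ import annotations

import re

# Illustrative patterns only; real DLP rules are broader and validated.
SENSITIVE_PATTERNS = {
    "credit_card": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_sensitive(payload: str) -> list[str]:
    """Return the names of all patterns that match the payload."""
    return sorted(
        name
        for name, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(payload)
    )


def allow_egress(payload: str) -> bool:
    """Allow the outbound request only if no sensitive pattern matches."""
    return not find_sensitive(payload)
```

A prompt that contains no matching data passes, while one carrying something shaped like a card number or a US Social Security number is stopped before it reaches the external AI service.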

Conclusion: Charting a secure course

The journey towards securing AI is complex and multifaceted, requiring concerted efforts from stakeholders across many domains. By understanding the evolving landscape of AI security risks and embracing proactive measures to address them, we can pave the way towards a safer and more resilient AI-powered future.
