Overview

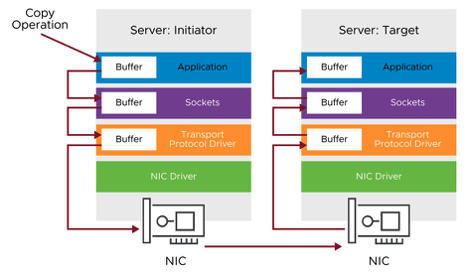

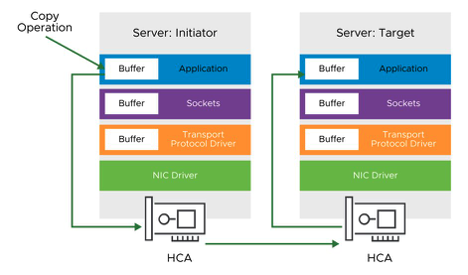

Remote Direct Memory Access (RDMA) is a technology that enables hosts connected over a network to read and write data in each other's main memory without involving the remote CPU or operating system. In a nutshell, computers connected through a particular type of network card can bypass the remote system's processor and operating system when reading from or writing to its main memory.

RDMA technology is used extensively in performance-oriented environments. Its main advantage is low latency when transferring data memory-to-memory between compute nodes. This is possible because the data transfer is offloaded to specially designed network adaptor hardware, known as a Host Channel Adaptor (HCA).

Host Channel Adaptors can interact directly with application memory. As a result, network data transfers take place without consuming CPU cycles, which gives networked computers a faster and more efficient way to move data, with lower latency and lower CPU utilization.

Data transfer without RDMA

Data transfer with RDMA

What is Paravirtual RDMA?

RDMA between VMs is known as Paravirtual RDMA (PVRDMA). Introduced in vSphere 6.5, PVRDMA is available to VMs with a PCIe virtual NIC that supports the standard RDMA API. The VMs must be connected to the same distributed virtual switch. The method of communication between PVRDMA-capable VMs is selected automatically based on the following scenarios:

- VMs running on the same ESXi host use memory copy for PVRDMA. This mode does not require ESXi hosts to have an HCA card connected.

- VMs present on different ESXi hosts with HCA cards achieve PVRDMA communication through the HCA card. For this to happen, the HCA card must be configured as an uplink in the distributed virtual switch.

- VMs present on different ESXi hosts, where at least one of the hosts does not have an HCA card connected, communicate over a TCP-based channel, and performance is reduced.
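The three scenarios above amount to a small decision rule. The following is a minimal sketch of that rule in Python, for illustration only: `Vm`, `Transport`, `select_transport`, and the `host_has_hca` field are hypothetical names and not part of any VMware API. It assumes both VMs are already on the same distributed virtual switch and that "has an HCA" means the card is configured as an uplink on that switch.

```python
from dataclasses import dataclass
from enum import Enum


class Transport(Enum):
    """Communication methods described in the list above."""
    MEMCPY = "memory copy"
    HCA = "HCA card"
    TCP = "TCP-based channel"


@dataclass(frozen=True)
class Vm:
    name: str
    host: str           # hypothetical ESXi host identifier
    host_has_hca: bool  # assumption: HCA is an uplink on the shared DVS


def select_transport(a: Vm, b: Vm) -> Transport:
    """Model the automatic selection between two PVRDMA-capable VMs."""
    if a.host == b.host:
        # Same ESXi host: memory copy, no HCA required.
        return Transport.MEMCPY
    if a.host_has_hca and b.host_has_hca:
        # Different hosts, both with an HCA: traffic goes through the HCA.
        return Transport.HCA
    # Different hosts, at least one without an HCA: slower TCP fallback.
    return Transport.TCP


if __name__ == "__main__":
    vm1 = Vm("vm1", "esx-a", host_has_hca=True)
    vm2 = Vm("vm2", "esx-b", host_has_hca=False)
    print(select_transport(vm1, vm2).value)
```

In this model the same-host check comes first, which reflects the statement that memory copy does not depend on whether the host has an HCA.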

RDMA between VMs is called Paravirtual RDMA

Paravirtual RDMA capable VMs can now talk to native endpoints!

Starting with vSphere 7 Update 1, PVRDMA-capable VMs can communicate with native endpoints. Native endpoints are RDMA-capable devices, such as storage arrays, that do not use the PVRDMA adaptor type (non-PVRDMA endpoints). Using RDMA for communication between cluster nodes and storage arrays is quite common because it offers very low latency and high performance, especially for 3-tier applications.

With this feature, customers can get better performance for applications and clusters that use RDMA to communicate with storage devices and arrays.

vMotion is not supported in this release; however, we continue to work on enhancing this feature to optimize compatibility.

Prerequisites to enable PVRDMA support for native endpoints

- The ESXi host must have PVRDMA namespace support.

  - ESXi namespaces should not be confused with vSphere with Tanzu or Tanzu Kubernetes Grid namespaces. In releases before vSphere 7.0, PVRDMA virtualized public resource identifiers in the underlying hardware to guarantee that a physical resource could be allocated with the same public identifier when a virtual machine resumed operation after vMotion moved it from one physical host to another. To do this, PVRDMA distributed virtual-to-physical resource identifier translations to peers whenever a resource was created. This added overhead that can be significant when creating large numbers of resources. PVRDMA namespaces avoid this overhead by letting multiple VMs coexist without coordinating the assignment of identifiers. Each VM is assigned an isolated identifier namespace on the RDMA hardware, so any VM can select its identifiers within the same range without conflicting with other VMs. The physical resource identifier no longer changes after vMotion, so virtual-to-physical resource identifier translations are no longer necessary.

- The guest OS must have kernel and user-level support for RDMA namespaces.

  - Namespace support is part of Linux kernel 5.5 onwards.

  - User-level support is provided by the rdma-core library.

- This feature requires VM hardware version 18.
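The prerequisites above can be expressed as a simple checklist. The sketch below is illustrative only: `missing_prerequisites` and its parameters are hypothetical, the inputs are supplied by the caller rather than queried from vSphere or the guest, and treating "version 18" and "5.5 onwards" as minimums is an assumption.

```python
import re
from typing import List, Tuple

MIN_KERNEL = (5, 5)   # Linux kernel 5.5 onwards, per the list above
MIN_HW_VERSION = 18   # assumption: hardware version 18 is a minimum


def parse_kernel_release(release: str) -> Tuple[int, int]:
    """Extract (major, minor) from a string such as '5.8.0-45-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def missing_prerequisites(
    kernel_release: str,
    has_rdma_core: bool,
    vm_hw_version: int,
    host_has_namespace_support: bool,
) -> List[str]:
    """Return a description of every prerequisite that is not met."""
    problems = []
    if not host_has_namespace_support:
        problems.append("ESXi host lacks PVRDMA namespace support")
    # Compare as integer tuples so that 5.10 is correctly newer than 5.5.
    if parse_kernel_release(kernel_release) < MIN_KERNEL:
        problems.append("guest kernel is older than 5.5")
    if not has_rdma_core:
        problems.append("rdma-core library is not available in the guest")
    if vm_hw_version < MIN_HW_VERSION:
        problems.append("VM hardware version is below 18")
    return problems


if __name__ == "__main__":
    for problem in missing_prerequisites("5.4.0-42-generic", True, 17, True):
        print(problem)
```

Inside a Linux guest, the kernel release string could be taken from Python's `platform.release()`. How the other three values are obtained depends on the environment and is left to the caller.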

To conclude

VMware is continuously innovating to solve customers' problems. Minimal latency during data transfer is critical in some environments and business use cases.

PVRDMA support for native endpoints lets applications running on vSphere exchange data with storage solutions at very low latency. This helps businesses deliver consistently high performance.

We are excited about these new releases and how vSphere is always improving to serve our customers and workloads better in the hybrid cloud. We will continue posting new technical and product information about vSphere with Tanzu & vSphere 7 Update 1 on Tuesdays, Wednesdays, and Thursdays through the end of October 2020! Join us by following the blog directly using the RSS feed, on Facebook, and on Twitter, and by visiting our YouTube channel which has new videos about vSphere 7 Update 1, too. As always, thank you, and please stay safe.

The post The Fast Lane for Data Transfer – Paravirtual RDMA(PVRDMA) Support for Native Endpoints. appeared first on VMware vSphere Blog.