To NUMA or not to NUMA – that’s the question that comes up when considering Oracle workloads and NUMA.

This blog:

- is not meant to be a deep dive on ESXi NUMA constructs

- is not meant to be a final recommendation on whether to enable NUMA at the database level

- contains results from my lab, obtained by running the SLOB load generator against my workload, which will differ considerably from any real-world customer workload

- is meant to raise awareness of the importance of testing one’s database workload with and without Oracle NUMA before making an informed decision on whether to enable Oracle NUMA

Remember, any performance data is a result of the combination of hardware configuration, software configuration, test methodology, test tool, and workload profile used in the testing.

The blog assumes that the reader is familiar with the ESXi NUMA constructs. This blog focuses on the use cases around testing Oracle workloads with and without NUMA using a load generator SLOB.

VMware ESXi and NUMA

ESXi supports memory access optimization for Intel and AMD Opteron processors in server architectures that support NUMA (non-uniform memory access).

Non-Uniform Memory Access or Non-Uniform Memory Architecture (NUMA) is a physical memory design used in SMP (multiprocessors) architecture, where the memory access time depends on the memory location relative to a processor. Under NUMA, a processor can access its own local memory faster than non-local memory, that is, memory local to another processor or memory shared between processors.

More information about VMware ESXi and NUMA can be found here.

In-depth information on VMware ESXi and NUMA can be found in the excellent series of blog articles written by Frank Denneman.

VMware Virtual NUMA

vSphere 5.0 and later includes support for exposing virtual NUMA topology to guest operating systems, which can improve performance by facilitating guest operating system and application NUMA optimizations.

Virtual NUMA topology is available to hardware version 8 virtual machines and is enabled by default when the number of virtual CPUs is greater than 8. You can also manually influence virtual NUMA topology using advanced configuration options.

More information on VMware Virtual NUMA (vNUMA) can be found here.

Virtual Machine NUMA Configuration

From the “Performance Best Practices for VMware vSphere 7.0”:

VMs can be classified into two NUMA categories:

- Non-Wide VM’s – VM’s with vCPUs equal to or less than the number of cores in each physical NUMA node. These virtual machines will be assigned to cores all within a single NUMA node and will be preferentially allocated memory local to that NUMA node. This means that, subject to memory availability, all their memory accesses will be local to that NUMA node, resulting in the lowest memory access latencies.

- Wide VM’s – VM’s with more vCPUs than the number of cores in each physical NUMA node. These virtual machines will be assigned to two (or more) NUMA nodes and will be preferentially allocated memory local to those NUMA nodes. Because vCPUs in these wide virtual machines might sometimes need to access memory outside their own NUMA node, they might experience higher average memory access latencies than virtual machines that fit entirely within a NUMA node.
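The two categories above boil down to one comparison. The following is a minimal sketch, not VMware code: the function names are hypothetical, and the node count is only the minimum implied by the core count (the source says "two (or more)"), not a statement of how ESXi actually places the VM.

```python
import math


def classify_vm(vcpus: int, cores_per_node: int) -> str:
    """Return 'non-wide' if the vCPUs fit in one physical NUMA node, else 'wide'."""
    if vcpus <= 0 or cores_per_node <= 0:
        raise ValueError("vcpus and cores_per_node must be positive")
    return "non-wide" if vcpus <= cores_per_node else "wide"


def min_nodes_spanned(vcpus: int, cores_per_node: int) -> int:
    """Smallest number of NUMA nodes whose cores can hold all vCPUs (an assumption)."""
    return math.ceil(vcpus / cores_per_node)


# 24 cores per NUMA node, as on the host used later in this post
print(classify_vm(24, 24), min_nodes_spanned(24, 24))
print(classify_vm(48, 24), min_nodes_spanned(48, 24))
```

With 24 cores per node, a 24 vCPU VM is non-wide and a 48 vCPU VM is wide and needs at least two nodes.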

VMware VM preferHT Setting

From the “Performance Best Practices for VMware vSphere 7.0”:

On hyper-threaded systems, virtual machines with a number of vCPUs greater than the number of cores in a NUMA node but lower than the number of logical processors in each physical NUMA node might benefit from using logical processors with local memory instead of full cores with remote memory. This behavior can be configured for a specific virtual machine with the numa.vcpu.preferHT flag.

More information on this topic can be found in the blog What is PreferHT and When To Use It.
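As a rough illustration of the sizing window described above, the sketch below checks whether a VM is a candidate for numa.vcpu.preferHT. The function name is hypothetical, two hardware threads per core is an assumption, and treating a vCPU count exactly equal to the logical processor count as eligible is also an assumption (the quoted text says "lower than").

```python
def prefer_ht_candidate(vcpus: int, cores_per_node: int, threads_per_core: int = 2) -> bool:
    """True if vCPUs exceed the cores of one NUMA node but still fit in its logical processors.

    threads_per_core=2 is an assumption (typical Hyper-Threading).
    The upper bound is inclusive, which is also an assumption.
    """
    logical_per_node = cores_per_node * threads_per_core
    return cores_per_node < vcpus <= logical_per_node


for vcpus in (24, 36, 48, 49):
    print(vcpus, prefer_ht_candidate(vcpus, cores_per_node=24))
```

Being a candidate only means the setting is worth testing; whether it helps depends on the workload.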

Oracle and NUMA

Oracle NUMA support is enabled by default in Oracle 10g. Oracle NUMA support is disabled by default for 11g and above.

Refer to My Oracle Support note “Enable Oracle NUMA support with Oracle Server Version 11gR2 (Doc ID 864633.1)” for more information.

The two key NUMA init.ora parameters are _enable_numa_optimization (10g) and _enable_numa_support (11g).

When an Oracle database runs with NUMA support enabled in a NUMA-capable environment, Oracle detects whether the hardware and operating system are NUMA capable and enables Oracle NUMA support accordingly.

From 11gR2 onward, Oracle NUMA support is disabled by default, so an Oracle 11.2 database will not perform this detection or enable Oracle NUMA support unless it is explicitly configured to do so.

My Oracle Support Doc ID 864633.1 makes the following recommendations about turning NUMA on:

- Disabling or enabling NUMA can change application performance.

- It is strongly recommended to evaluate the performance before and after enabling NUMA in a test environment before going into production.

- Operating system and/or hardware configuration may need to be tuned or reconfigured when disabling Oracle NUMA support. Consult your hardware vendor for more information or recommendations.

My Oracle Support note “Oracle NUMA Usage Recommendation (Doc ID 759565.1)” lists all known bugs caused by Oracle NUMA support being enabled.

Use Cases for Oracle NUMA or Non-NUMA

The decision to NUMA or not to NUMA for Oracle workloads would depend on several factors including

- whether the workload requires more vCPU’s than the number of physical cores in a NUMA node

- whether the workload is Memory Latency sensitive or Memory Bandwidth hungry

In any case, Oracle strongly recommends evaluating the performance before and after enabling NUMA in a test environment before going into production.

Test Setup

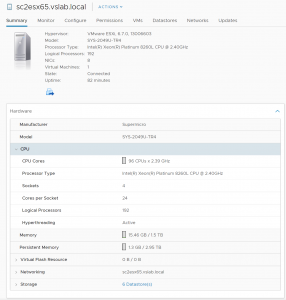

Server ‘sc2esx65.vslab.local’ runs VMware ESXi 6.7.0, build 13006603, with 4 sockets, 24 cores per socket, Hyper-Threading, Intel(R) Xeon(R) Platinum 8260L CPUs @ 2.40GHz, and 1.5 TB RAM.

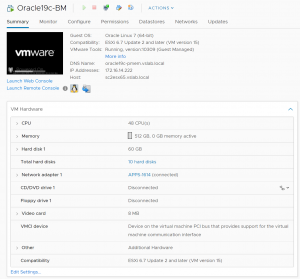

VM ‘Oracle19c-BM’ was created on ESXi 6.7 U2 with 48 vCPUs and 512 GB vRAM, with storage on an All-Flash Pure array.

The OS was OEL 7.6 UEK with Oracle 19c Grid Infrastructure & RDBMS installed. Oracle ASM was the storage platform, with Oracle ASMLIB; Oracle ASMFD can be used instead of Oracle ASMLIB. Huge pages were set at the guest OS level. Memory reservations were set at the VM level.

The Oracle SGA was set to 416 GB and the PGA to 6 GB. Traditional OS-level huge pages were set up for the SGA region. SLOB 2.5.2.4 was chosen as the load generator for this exercise, with the SLOB SCALE parameter set to 400G.
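To give a feel for the huge page sizing behind a 416 GB SGA, here is a small worked calculation. It assumes a 2 MB huge page size (the usual x86_64 default); the post does not state the page size or the value actually configured, and real setups typically add some headroom on top of the bare minimum.

```python
def hugepages_needed(sga_gib: int, hugepage_mib: int = 2) -> int:
    """Minimum number of huge pages needed to back an SGA of sga_gib GiB.

    hugepage_mib=2 is an assumption (default huge page size on x86_64 Linux).
    """
    sga_mib = sga_gib * 1024
    # Ceiling division so a partial page still counts as a full page
    return -(-sga_mib // hugepage_mib)


pages = hugepages_needed(416)
print(f"416 GiB SGA needs at least {pages} huge pages of 2 MiB")
```

Under these assumptions the result is the floor for a setting such as vm.nr_hugepages, not a recommended value.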

Test Cases

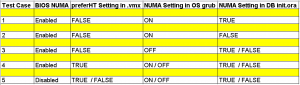

Below were the use cases tested –

For example, for Test Case 1:

- NUMA was enabled in the BIOS

- preferHT was set to FALSE for the VM, which makes it a wide VM configuration, given that there are 24 physical cores per socket and the VM has 48 vCPUs

- NUMA was set to ON at the OS and DB levels

- the SLOB workload was run, and results were captured for that run

Observations for above test case combinations

==========================================

Test Case 1 – 3 : BIOS NUMA=On , preferHT=False

==========================================

Wide VM

Verify the VM PreferHT setting is set to FALSE

[root@sc2esx65:/vmfs/volumes/5e13bc65-eea0e494-77bb-246e96d08eca/Oracle19c-BM] cat Oracle19c-BM.vmx | grep -i PreferHT

numa.vcpu.PreferHT = "FALSE"

[root@sc2esx65:/vmfs/volumes/5e13bc65-eea0e494-77bb-246e96d08eca/Oracle19c-BM]

Thanks to Frank Denneman’s post for this very useful command, which shows the VM NUMA configuration with VPDs and PPDs. Here it shows 2 NUMA nodes.

[root@sc2esx65:~] vmdumper -l | cut -d / -f 2-5 | while read path; do egrep -oi "DICT.*(displayname.*|numa.*|cores.*|vcpu.*|memsize.*|affinity.*)= .*|numa:.*|numaHost:.*" "/$path/vmware.log"; echo -e; done

DICT numvcpus = "48"

DICT memSize = "524288"

DICT displayName = "Oracle19c-BM"

DICT numa.autosize.cookie = "480001"

DICT numa.autosize.vcpu.maxPerVirtualNode = "48"

DICT numa.vcpu.PreferHT = "FALSE"

DICT numa.autosize.once = "FALSE"

numaHost: NUMA config: consolidation= 1 preferHT= 0

numa: coresPerSocket= 1 maxVcpusPerVPD= 24

numaHost: 48 VCPUs 2 VPDs 2 PPDs

numaHost: VCPU 0 VPD 0 PPD 0

numaHost: VCPU 1 VPD 0 PPD 0

numaHost: VCPU 2 VPD 0 PPD 0

numaHost: VCPU 3 VPD 0 PPD 0

numaHost: VCPU 4 VPD 0 PPD 0

numaHost: VCPU 5 VPD 0 PPD 0

numaHost: VCPU 6 VPD 0 PPD 0

numaHost: VCPU 7 VPD 0 PPD 0

numaHost: VCPU 8 VPD 0 PPD 0

numaHost: VCPU 9 VPD 0 PPD 0

numaHost: VCPU 10 VPD 0 PPD 0

numaHost: VCPU 11 VPD 0 PPD 0

numaHost: VCPU 12 VPD 0 PPD 0

numaHost: VCPU 13 VPD 0 PPD 0

numaHost: VCPU 14 VPD 0 PPD 0

numaHost: VCPU 15 VPD 0 PPD 0

numaHost: VCPU 16 VPD 0 PPD 0

numaHost: VCPU 17 VPD 0 PPD 0

numaHost: VCPU 18 VPD 0 PPD 0

numaHost: VCPU 19 VPD 0 PPD 0

numaHost: VCPU 20 VPD 0 PPD 0

numaHost: VCPU 21 VPD 0 PPD 0

numaHost: VCPU 22 VPD 0 PPD 0

numaHost: VCPU 23 VPD 0 PPD 0

numaHost: VCPU 24 VPD 1 PPD 1

numaHost: VCPU 25 VPD 1 PPD 1

numaHost: VCPU 26 VPD 1 PPD 1

numaHost: VCPU 27 VPD 1 PPD 1

numaHost: VCPU 28 VPD 1 PPD 1

numaHost: VCPU 29 VPD 1 PPD 1

numaHost: VCPU 30 VPD 1 PPD 1

numaHost: VCPU 31 VPD 1 PPD 1

numaHost: VCPU 32 VPD 1 PPD 1

numaHost: VCPU 33 VPD 1 PPD 1

numaHost: VCPU 34 VPD 1 PPD 1

numaHost: VCPU 35 VPD 1 PPD 1

numaHost: VCPU 36 VPD 1 PPD 1

numaHost: VCPU 37 VPD 1 PPD 1

numaHost: VCPU 38 VPD 1 PPD 1

numaHost: VCPU 39 VPD 1 PPD 1

numaHost: VCPU 40 VPD 1 PPD 1

numaHost: VCPU 41 VPD 1 PPD 1

numaHost: VCPU 42 VPD 1 PPD 1

numaHost: VCPU 43 VPD 1 PPD 1

numaHost: VCPU 44 VPD 1 PPD 1

numaHost: VCPU 45 VPD 1 PPD 1

numaHost: VCPU 46 VPD 1 PPD 1

numaHost: VCPU 47 VPD 1 PPD 1

[root@sc2esx65:~]
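Scanning 48 lines by eye is error-prone, so the per-vCPU lines can be grouped by VPD with a few lines of Python. This is a sketch under the assumption that the log lines look exactly like the numaHost lines above; the function name is hypothetical and the sample text is generated to mirror that output rather than read from a real vmware.log.

```python
import re
from collections import defaultdict

# Matches per-vCPU lines such as "numaHost: VCPU 24 VPD 1 PPD 1"
VCPU_LINE = re.compile(r"numaHost:\s+VCPU\s+(\d+)\s+VPD\s+(\d+)\s+PPD\s+(\d+)")


def vcpus_per_vpd(log_text: str) -> dict:
    """Group vCPU ids by their virtual proximity domain (VPD)."""
    groups = defaultdict(list)
    for match in VCPU_LINE.finditer(log_text):
        vcpu, vpd, _ppd = (int(g) for g in match.groups())
        groups[vpd].append(vcpu)
    return dict(groups)


# Sample mirroring the output above: vCPUs 0-23 on VPD 0, vCPUs 24-47 on VPD 1
sample = "numaHost: 48 VCPUs 2 VPDs 2 PPDs\n" + "\n".join(
    f"numaHost: VCPU {i} VPD {i // 24} PPD {i // 24}" for i in range(48)
)

layout = vcpus_per_vpd(sample)
for vpd, vcpus in sorted(layout.items()):
    print(f"VPD {vpd}: {len(vcpus)} vCPUs ({vcpus[0]}-{vcpus[-1]})")
```

The summary line ("48 VCPUs 2 VPDs 2 PPDs") is deliberately not matched, so only the per-vCPU assignments are counted.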

With NUMA set to ON in the OS grub file, OS-level NUMA is observed to be on:

[root@oracle19c-pmem ~]# numactl --hardware

available: 2 nodes (0-1)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23

node 0 size: 258683 MB

node 0 free: 44378 MB

node 1 cpus: 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

node 1 size: 257035 MB

node 1 free: 42646 MB

node distances:

node 0 1

0: 10 20

1: 20 10

[root@oracle19c-pmem ~]#

[root@oracle19c-pmem ~]# numactl --show

policy: default

preferred node: current

physcpubind: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

cpubind: 0 1

nodebind: 0 1

membind: 0 1

[root@oracle19c-pmem ~]#

With NUMA set to OFF in the OS grub file, OS-level NUMA is observed to be off:

[root@oracle19c-pmem ~]# numactl --hardware

available: 1 nodes (0)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

node 0 size: 515719 MB

node 0 free: 87074 MB

node distances:

node 0

0: 10

[root@oracle19c-pmem ~]#

[root@oracle19c-pmem ~]# numactl --show

policy: default

preferred node: current

physcpubind: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

cpubind: 0

nodebind: 0

membind: 0

[root@oracle19c-pmem ~]#
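The difference between the two guest views can also be checked programmatically. The sketch below parses the node lines of `numactl --hardware` output; the function name is hypothetical, and the two sample strings are abbreviated from the outputs above (only the cpus and size lines are kept).

```python
import re

CPUS = re.compile(r"node (\d+) cpus:\s*(.*)")
SIZE = re.compile(r"node (\d+) size:\s*(\d+) MB")


def parse_numactl_hardware(text: str) -> dict:
    """Return {node_id: {"cpus": [...], "size_mb": int}} from `numactl --hardware` output."""
    nodes = {}
    for raw in text.splitlines():
        line = raw.strip()
        m = CPUS.match(line)
        if m:
            nodes.setdefault(int(m.group(1)), {})["cpus"] = [int(c) for c in m.group(2).split()]
            continue
        m = SIZE.match(line)
        if m:
            nodes.setdefault(int(m.group(1)), {})["size_mb"] = int(m.group(2))
    return nodes


def cpu_list(lo: int, hi: int) -> str:
    """Space-separated CPU ids lo..hi-1, as numactl prints them."""
    return " ".join(str(c) for c in range(lo, hi))


numa_on = (
    "available: 2 nodes (0-1)\n"
    f"node 0 cpus: {cpu_list(0, 24)}\nnode 0 size: 258683 MB\n"
    f"node 1 cpus: {cpu_list(24, 48)}\nnode 1 size: 257035 MB\n"
)
numa_off = (
    "available: 1 nodes (0)\n"
    f"node 0 cpus: {cpu_list(0, 48)}\nnode 0 size: 515719 MB\n"
)

on, off = parse_numactl_hardware(numa_on), parse_numactl_hardware(numa_off)
for label, nodes in (("NUMA on", on), ("NUMA off", off)):
    print(label, "->", len(nodes), "node(s),", [len(n["cpus"]) for n in nodes.values()], "CPUs per node")
```

With OS-level NUMA on, the guest sees two nodes of 24 CPUs each; with it off, the same 48 CPUs appear under a single node.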

========================================

Test Case 4 : BIOS NUMA=On , preferHT=True

========================================

VM within the NUMA node

Verify the VM PreferHT setting is set to TRUE

[root@sc2esx65:/vmfs/volumes/5e13bc65-eea0e494-77bb-246e96d08eca/Oracle19c-BM] cat Oracle19c-BM.vmx | grep -i PreferHT

numa.vcpu.PreferHT = "TRUE"

[root@sc2esx65:/vmfs/volumes/5e13bc65-eea0e494-77bb-246e96d08eca/Oracle19c-BM]

Verify the VM NUMA configuration with VPDs and PPDs. Here it shows 1 NUMA node.

[root@sc2esx65:~] vmdumper -l | cut -d / -f 2-5 | while read path; do egrep -oi "DICT.*(displayname.*|numa.*|cores.*|vcpu.*|memsize.*|affinity.*)= .*|numa:.*|numaHost:.*" "/$path/vmware.log"; echo -e; done

DICT numvcpus = "48"

DICT memSize = "524288"

DICT displayName = "Oracle19c-BM"

DICT numa.autosize.cookie = "480001"

DICT numa.autosize.vcpu.maxPerVirtualNode = "24"

DICT numa.vcpu.PreferHT = "TRUE"

DICT numa.autosize.once = "FALSE"

numaHost: NUMA config: consolidation= 1 preferHT= 1

numa: coresPerSocket= 1 maxVcpusPerVPD= 48

numaHost: 48 VCPUs 1 VPDs 1 PPDs

numaHost: VCPU 0 VPD 0 PPD 0

numaHost: VCPU 1 VPD 0 PPD 0

numaHost: VCPU 2 VPD 0 PPD 0

numaHost: VCPU 3 VPD 0 PPD 0

numaHost: VCPU 4 VPD 0 PPD 0

numaHost: VCPU 5 VPD 0 PPD 0

numaHost: VCPU 6 VPD 0 PPD 0

numaHost: VCPU 7 VPD 0 PPD 0

numaHost: VCPU 8 VPD 0 PPD 0

numaHost: VCPU 9 VPD 0 PPD 0

numaHost: VCPU 10 VPD 0 PPD 0

numaHost: VCPU 11 VPD 0 PPD 0

numaHost: VCPU 12 VPD 0 PPD 0

numaHost: VCPU 13 VPD 0 PPD 0

numaHost: VCPU 14 VPD 0 PPD 0

numaHost: VCPU 15 VPD 0 PPD 0

numaHost: VCPU 16 VPD 0 PPD 0

numaHost: VCPU 17 VPD 0 PPD 0

numaHost: VCPU 18 VPD 0 PPD 0

numaHost: VCPU 19 VPD 0 PPD 0

numaHost: VCPU 20 VPD 0 PPD 0

numaHost: VCPU 21 VPD 0 PPD 0

numaHost: VCPU 22 VPD 0 PPD 0

numaHost: VCPU 23 VPD 0 PPD 0

numaHost: VCPU 24 VPD 0 PPD 0

numaHost: VCPU 25 VPD 0 PPD 0

numaHost: VCPU 26 VPD 0 PPD 0

numaHost: VCPU 27 VPD 0 PPD 0

numaHost: VCPU 28 VPD 0 PPD 0

numaHost: VCPU 29 VPD 0 PPD 0

numaHost: VCPU 30 VPD 0 PPD 0

numaHost: VCPU 31 VPD 0 PPD 0

numaHost: VCPU 32 VPD 0 PPD 0

numaHost: VCPU 33 VPD 0 PPD 0

numaHost: VCPU 34 VPD 0 PPD 0

numaHost: VCPU 35 VPD 0 PPD 0

numaHost: VCPU 36 VPD 0 PPD 0

numaHost: VCPU 37 VPD 0 PPD 0

numaHost: VCPU 38 VPD 0 PPD 0

numaHost: VCPU 39 VPD 0 PPD 0

numaHost: VCPU 40 VPD 0 PPD 0

numaHost: VCPU 41 VPD 0 PPD 0

numaHost: VCPU 42 VPD 0 PPD 0

numaHost: VCPU 43 VPD 0 PPD 0

numaHost: VCPU 44 VPD 0 PPD 0

numaHost: VCPU 45 VPD 0 PPD 0

numaHost: VCPU 46 VPD 0 PPD 0

numaHost: VCPU 47 VPD 0 PPD 0

[root@sc2esx65:~]

Whether NUMA is set to ON or OFF in the OS grub file, OS-level NUMA is observed to be off:

[root@oracle19c-pmem ~]# numactl --hardware

available: 1 nodes (0)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

node 0 size: 515719 MB

node 0 free: 87057 MB

node distances:

node 0

0: 10

[root@oracle19c-pmem ~]#

[root@oracle19c-pmem ~]# numactl --show

policy: default

preferred node: current

physcpubind: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

cpubind: 0

nodebind: 0

membind: 0

[root@oracle19c-pmem ~]#

==========================================

Test Case 5 : BIOS NUMA=Off , preferHT=True

==========================================

[root@sc2esx65:~] sched-stats -t numa-pnode

nodeID used idle entitled owed loadAvgPct nVcpu freeMem totalMem

[root@sc2esx65:~]

Whether NUMA is set to ON or OFF in the OS grub file, OS-level NUMA is observed to be off:

[root@oracle19c-pmem ~]# numactl --hardware

available: 1 nodes (0)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

node 0 size: 515719 MB

node 0 free: 87057 MB

node distances:

node 0

0: 10

[root@oracle19c-pmem ~]#

[root@oracle19c-pmem ~]# numactl --show

policy: default

preferred node: current

physcpubind: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

cpubind: 0

nodebind: 0

membind: 0

[root@oracle19c-pmem ~]#

Test Results & Analysis

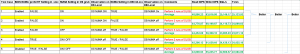

The individual runs for each test case have been averaged out to give a general idea without getting too much into the weeds.
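The averaging itself is simple arithmetic. The sketch below shows one way to average a metric over repeated runs and express one test case relative to another. The function names and the metric name are hypothetical, and the numbers are placeholders chosen for easy arithmetic; they are NOT results from this post.

```python
from statistics import mean


def average_runs(runs: list) -> dict:
    """Average every metric across runs; each run is a dict of metric name -> value."""
    if not runs:
        raise ValueError("at least one run is required")
    keys = runs[0].keys()
    if any(run.keys() != keys for run in runs):
        raise ValueError("all runs must report the same metrics")
    return {key: mean(run[key] for run in runs) for key in keys}


def pct_change(baseline: float, value: float) -> float:
    """Relative difference of value versus baseline, in percent."""
    return (value - baseline) / baseline * 100.0


# Placeholder numbers only, not measurements from this lab
case_a = [{"executes_per_sec": 1000.0}, {"executes_per_sec": 1100.0}]
case_b = [{"executes_per_sec": 900.0}, {"executes_per_sec": 990.0}]

avg_a, avg_b = average_runs(case_a), average_runs(case_b)
delta = pct_change(avg_a["executes_per_sec"], avg_b["executes_per_sec"])
print(f"case B vs case A: {delta:.1f}%")
```

Averaging hides run-to-run variance, so it is worth keeping the individual run values alongside the averages when comparing configurations.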

As mentioned before, this blog:

- is not meant to be a deep dive on vSphere NUMA constructs

- is not meant to be a final recommendation on whether to enable NUMA at the database level

- contains results from my lab, obtained by running the SLOB load generator against my workload, which will differ considerably from any real-world customer workload

- is meant to raise awareness of the importance of testing one’s database workload with and without Oracle NUMA before making an informed decision on whether to enable NUMA

Analysis of the test results for the workload we ran, comparing the database metrics across the runs:

1) Comparing the first 3 runs, with BIOS NUMA on, turning off NUMA at the OS level for a wide VM gave the lowest performance

2) Comparing the first 4 runs, with BIOS NUMA on, running the VM as a non-wide VM (that is, using hyper-threads within a NUMA node instead of going wide) gave the lowest performance

3) Comparing all 5 runs, treating a NUMA server as a UMA server gave the lowest performance

==============================================================

Additional Test Case – VM vCPUs <= number of physical cores / socket

==============================================================

For example, given that the server has 24 physical cores per socket and the VM has 24 vCPUs, the VM is a non-wide VM and its memory stays within a NUMA node, whether the VM vRAM is greater or less than the physical memory per socket (the node is over-subscribed if the VM vRAM exceeds the physical memory available per socket).

With VM vCPU’s = 24 and vRAM = 512 GB –

[root@sc2esx65:/vmfs/volumes/5e13bc65-eea0e494-77bb-246e96d08eca/Oracle19c-BM] vmdumper -l | cut -d / -f 2-5 | while read path; do egrep -oi "DICT.*(displayname.*|numa.*|cores.*|vcpu.*|memsize.*|affinity.*)= .*|numa:.*|numaHost:.*" "/$path/vmware.log"; echo -e; done

DICT numvcpus = "24"

DICT memSize = "524288"

DICT displayName = "Oracle19c-BM"

DICT numa.autosize.cookie = "480001"

DICT numa.autosize.vcpu.maxPerVirtualNode = "48"

DICT numa.autosize.once = "FALSE"

numaHost: NUMA config: consolidation= 1 preferHT= 0

numa: coresPerSocket= 1 maxVcpusPerVPD= 24

numaHost: 24 VCPUs 1 VPDs 1 PPDs

numaHost: VCPU 0 VPD 0 PPD 0

numaHost: VCPU 1 VPD 0 PPD 0

numaHost: VCPU 2 VPD 0 PPD 0

numaHost: VCPU 3 VPD 0 PPD 0

numaHost: VCPU 4 VPD 0 PPD 0

numaHost: VCPU 5 VPD 0 PPD 0

numaHost: VCPU 6 VPD 0 PPD 0

numaHost: VCPU 7 VPD 0 PPD 0

numaHost: VCPU 8 VPD 0 PPD 0

numaHost: VCPU 9 VPD 0 PPD 0

numaHost: VCPU 10 VPD 0 PPD 0

numaHost: VCPU 11 VPD 0 PPD 0

numaHost: VCPU 12 VPD 0 PPD 0

numaHost: VCPU 13 VPD 0 PPD 0

numaHost: VCPU 14 VPD 0 PPD 0

numaHost: VCPU 15 VPD 0 PPD 0

numaHost: VCPU 16 VPD 0 PPD 0

numaHost: VCPU 17 VPD 0 PPD 0

numaHost: VCPU 18 VPD 0 PPD 0

numaHost: VCPU 19 VPD 0 PPD 0

numaHost: VCPU 20 VPD 0 PPD 0

numaHost: VCPU 21 VPD 0 PPD 0

numaHost: VCPU 22 VPD 0 PPD 0

numaHost: VCPU 23 VPD 0 PPD 0

[root@sc2esx65:/vmfs/volumes/5e13bc65-eea0e494-77bb-246e96d08eca/Oracle19c-BM]

[root@oracle19c-pmem ~]# numactl --hardware

available: 1 nodes (0)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23

node 0 size: 515723 MB

node 0 free: 87152 MB

node distances:

node 0

0: 10

[root@oracle19c-pmem ~]#

With VM vCPU’s = 24 and vRAM = 256 GB –

[root@sc2esx65:/vmfs/volumes/5e13bc65-eea0e494-77bb-246e96d08eca/Oracle19c-BM] vmdumper -l | cut -d / -f 2-5 | while read path; do egrep -oi "DICT.*(displayname.*|numa.*|cores.*|vcpu.*|memsize.*|affinity.*)= .*|numa:.*|numaHost:.*" "/$path/vmware.log"; echo -e; done

DICT numvcpus = "24"

DICT memSize = "262144"

DICT displayName = "Oracle19c-BM"

DICT numa.autosize.cookie = "240001"

DICT numa.autosize.vcpu.maxPerVirtualNode = "24"

DICT numa.autosize.once = "FALSE"

numaHost: NUMA config: consolidation= 1 preferHT= 0

numa: coresPerSocket= 1 maxVcpusPerVPD= 24

numaHost: 24 VCPUs 1 VPDs 1 PPDs

numaHost: VCPU 0 VPD 0 PPD 0

numaHost: VCPU 1 VPD 0 PPD 0

numaHost: VCPU 2 VPD 0 PPD 0

numaHost: VCPU 3 VPD 0 PPD 0

numaHost: VCPU 4 VPD 0 PPD 0

numaHost: VCPU 5 VPD 0 PPD 0

numaHost: VCPU 6 VPD 0 PPD 0

numaHost: VCPU 7 VPD 0 PPD 0

numaHost: VCPU 8 VPD 0 PPD 0

numaHost: VCPU 9 VPD 0 PPD 0

numaHost: VCPU 10 VPD 0 PPD 0

numaHost: VCPU 11 VPD 0 PPD 0

numaHost: VCPU 12 VPD 0 PPD 0

numaHost: VCPU 13 VPD 0 PPD 0

numaHost: VCPU 14 VPD 0 PPD 0

numaHost: VCPU 15 VPD 0 PPD 0

numaHost: VCPU 16 VPD 0 PPD 0

numaHost: VCPU 17 VPD 0 PPD 0

numaHost: VCPU 18 VPD 0 PPD 0

numaHost: VCPU 19 VPD 0 PPD 0

numaHost: VCPU 20 VPD 0 PPD 0

numaHost: VCPU 21 VPD 0 PPD 0

numaHost: VCPU 22 VPD 0 PPD 0

numaHost: VCPU 23 VPD 0 PPD 0

[root@sc2esx65:/vmfs/volumes/5e13bc65-eea0e494-77bb-246e96d08eca/Oracle19c-BM]

[root@oracle19c-pmem ~]# numactl --hardware

available: 1 nodes (0)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23

node 0 size: 257691 MB

node 0 free: 255502 MB

node distances:

node 0

0: 10

[root@oracle19c-pmem ~]#

Looking at the above use cases, we can surmise the following, regardless of whether the VM vRAM is greater or less than the physical memory per socket:

- if VM vCPUs > number of physical cores per socket and preferHT = FALSE, it’s a wide VM and the VM memory is distributed equally among the NUMA nodes

- if VM vCPUs > number of physical cores per socket and preferHT = TRUE, it’s a non-wide VM and the VM memory is within a NUMA node

- if VM vCPUs <= number of physical cores per socket, it’s a non-wide VM and the VM memory is within a NUMA node
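These rules can be written down and checked against the three VPD layouts shown in this post. This is a sketch of the surmised behavior, not of the ESXi scheduler: the function name is hypothetical, two threads per core is an assumption, and the node count for wide VMs is simply the minimum that the core count allows.

```python
import math


def expected_vpds(vcpus: int, cores_per_socket: int, prefer_ht: bool,
                  threads_per_core: int = 2) -> int:
    """Number of virtual NUMA nodes (VPDs) the rules above would predict."""
    if vcpus <= cores_per_socket:
        return 1  # non-wide: fits in the cores of one socket
    if prefer_ht and vcpus <= cores_per_socket * threads_per_core:
        return 1  # non-wide: fits in the logical processors of one socket
    # wide: assumed to span the minimum number of sockets
    return math.ceil(vcpus / cores_per_socket)


# (vCPUs, preferHT, VPDs reported in the vmware.log excerpts in this post)
observed = [
    (48, False, 2),
    (48, True, 1),
    (24, False, 1),
]

for vcpus, prefer_ht, vpds in observed:
    predicted = expected_vpds(vcpus, cores_per_socket=24, prefer_ht=prefer_ht)
    print(f"{vcpus} vCPUs, preferHT={prefer_ht}: predicted {predicted}, observed {vpds}")
```

The sketch ignores vRAM on purpose, matching the observation that vRAM size did not change the layout in these tests.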

Well, does this mean that all wide VMs will perform better than non-wide VMs using HT within a NUMA node? Not necessarily. It depends on the workload. There could be other workload profiles where a non-wide VM performs much better than a wide VM.

There is no hard and fast rule. As mentioned before, test your workload thoroughly with and without NUMA to come up with the best possible configuration.

In any case, Oracle strongly recommends evaluating the performance before and after enabling NUMA in a test environment before going into production.

Summary

- Remember, any performance data is a result of the combination of hardware configuration, software configuration, test methodology, test tool, and workload profile used in the testing

- This blog is not meant to be a final recommendation on whether to enable NUMA at the database level

- This blog contains results from my lab, obtained by running the SLOB load generator against my workload, which will differ considerably from any real-world customer workload

- This blog is meant to raise awareness of the importance of testing one’s database workload with and without Oracle NUMA before making an informed decision on whether to enable Oracle NUMA

All Oracle on vSphere white papers, including Oracle licensing on vSphere/vSAN, Oracle best practices, RAC deployment guides, and the workload characterization guide, can be found at the URL below.

Oracle on VMware Collateral – One Stop Shop

https://blogs.vmware.com/apps/2017/01/oracle-vmware-collateral-one-stop-shop.html