In part 1 of this blog series, we introduced the solution components, including VMware Tanzu Kubernetes and vSphere Bitfusion. In this part, we look at how the solution was validated with Bitfusion access from three Kubernetes cluster variants: TKG guest clusters, TKG Supervisor Clusters, and TKGI Kubernetes clusters.

Validation of the Solution:


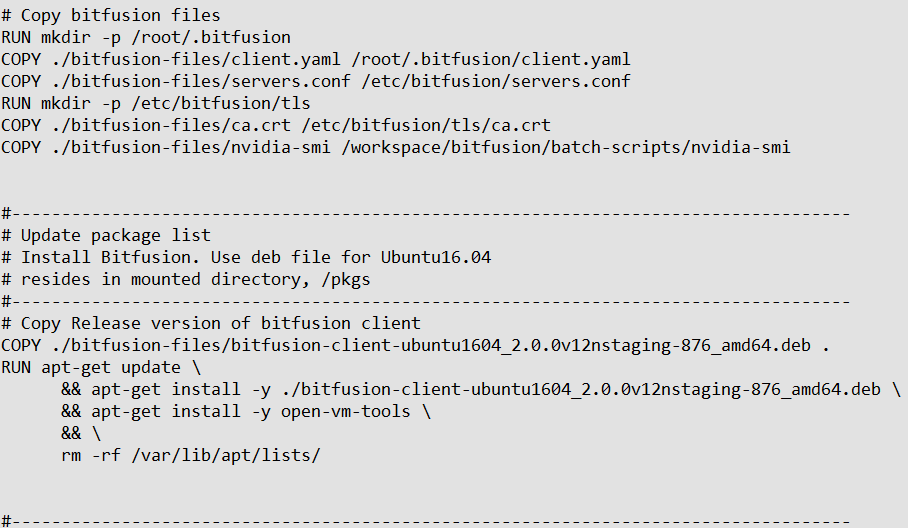

Three VMware-based Kubernetes variants were tested in this solution to confirm interoperability with Bitfusion. To validate the solution, containers running TensorFlow and PyTorch with embedded Bitfusion components were used. Pods built from these containers were deployed in each of the three Kubernetes variants, and TensorFlow and PyTorch workloads were run in them. The pods were customized for Bitfusion by adding the section shown below to the Dockerfile. The YAML files used for the TensorFlow and PyTorch Kubernetes pods are shown in Appendices B and D.

Figure 6: Embedding Bitfusion files into the Docker container

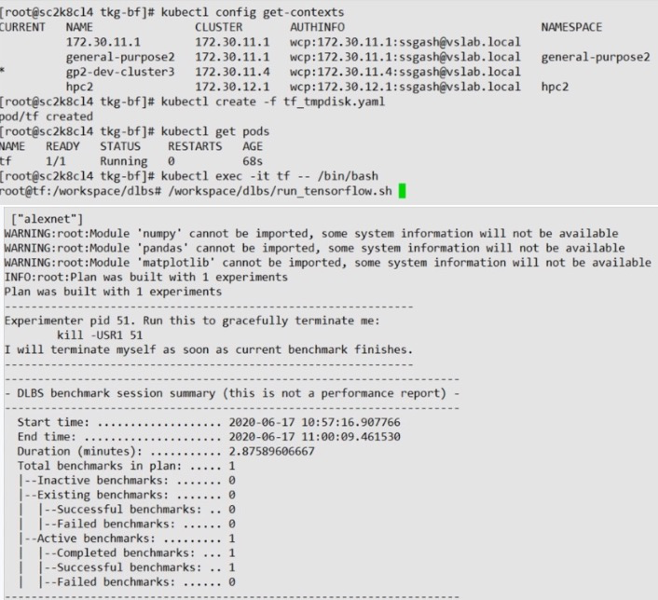
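As a rough illustration of how such a pod can be expressed, the Python sketch below builds a Pod manifest whose container launches training through the Bitfusion client with `bitfusion run -n <gpus> -- <command>`. It assumes, as Figure 6 suggests, that the Bitfusion client files are already baked into the image. The pod name, image reference, and script path are hypothetical placeholders, not the values used in the appendices.

```python
import json


def bitfusion_pod_manifest(name, image, script, num_gpus=1):
    """Return a Pod manifest (as a dict) whose container runs `script`
    through the Bitfusion client, so GPUs are reached over the network."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    # The Bitfusion client allocates remote GPUs, then runs the command after "--".
    command = ["bitfusion", "run", "-n", str(num_gpus), "--", "python3", script]
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",  # a training run is a one-shot job
            "containers": [
                {
                    "name": name,
                    # Assumption: Bitfusion client files are embedded in this image.
                    "image": image,
                    "command": command,
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = bitfusion_pod_manifest(
        name="tf-bitfusion",                               # hypothetical pod name
        image="registry.example.com/tf-bitfusion:latest",  # hypothetical image
        script="/workspace/train.py",                      # hypothetical script
    )
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest can be saved to a file and applied with `kubectl apply -f`.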

Tanzu Kubernetes Grid

Tanzu Kubernetes Grid (TKG) provides a consistent Kubernetes experience across clouds. With it, customers can rapidly provision and manage Kubernetes clusters wherever they need Kubernetes-based workloads to run, on vSphere or elsewhere. The goal of TKG is to deliver a consistent Kubernetes experience irrespective of the underlying infrastructure. When TKG runs on vSphere, however, it can leverage the innovations in vSphere 7 with Kubernetes to offer customers a better experience. Figures 7 and 8 show a successful TensorFlow run leveraging Bitfusion in a TKG cluster, performed as part of the validation.

Figure 7: TensorFlow run leveraging Bitfusion in a TKG cluster

Figure 8: Bitfusion console during the TensorFlow run

PodVM or vSphere native pod (Supervisor Clusters)

The vSphere native pod, which runs in the Supervisor Cluster in vSphere 7, combines the best of containers and virtualization by running each Kubernetes pod in its own dynamically created VM.

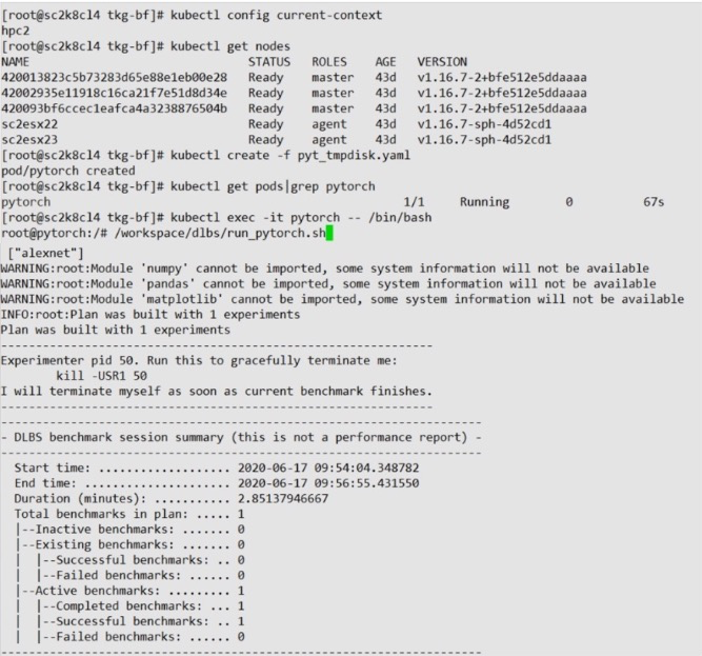

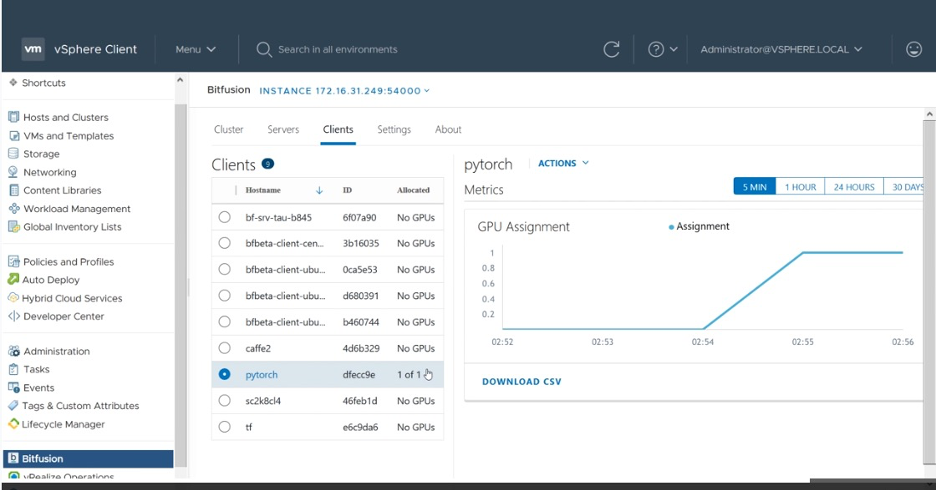

Figure 9: PyTorch run leveraging Bitfusion in a supervisor cluster

The idea is to combine the isolation and security of a VM with the simplicity and configurability of a pod. vSphere Pods are also first-class entities in vSphere, so VI admins not only get full visibility into them from the vSphere Client but can also use their existing tooling to manage vSphere Pods just like existing VMs. Figure 9 shows a successful PyTorch run leveraging Bitfusion in a Supervisor Cluster, performed as part of the validation.

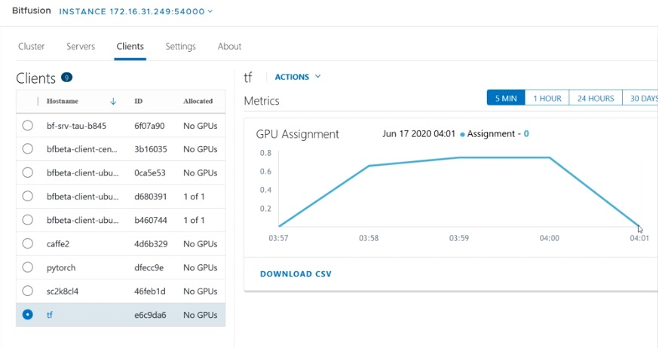

Figure 10: Bitfusion console during PyTorch run in Supervisor cluster

Tanzu Kubernetes Grid Integrated (formerly VMware Enterprise PKS)

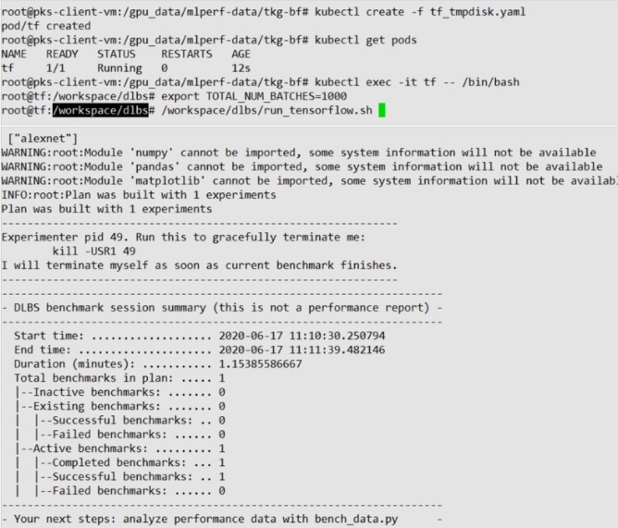

Figure 11: Successful TensorFlow run with TKGI leveraging NVIDIA GPUs over the network

The TensorFlow run shown in Figure 11 uses a remote NVIDIA GPU accessed via Bitfusion, so the job's GPU work executes over the network. Testing with the pods and containers on TKGI was seamless.
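One way to picture this from inside the container is a small launcher that wraps the workload in the Bitfusion client. The sketch assumes the `bitfusion` CLI is on the container's PATH and uses its `run` subcommand with `-n` (number of GPUs) and, optionally, `-p` (fraction of each GPU, which lets several jobs share one device); check `bitfusion run --help` for your client version. The function names and `train.py` are hypothetical.

```python
import shutil
import subprocess


def bitfusion_argv(workload, num_gpus=1, partial=None):
    """Build the argv that asks the Bitfusion client for remote GPUs
    and then runs `workload` with them attached over the network."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    argv = ["bitfusion", "run", "-n", str(num_gpus)]
    if partial is not None:
        # Fraction of each GPU to request, so several jobs can share a device.
        if not 0 < partial <= 1:
            raise ValueError("partial must be in (0, 1]")
        argv += ["-p", str(partial)]
    return argv + ["--"] + list(workload)


def run_on_remote_gpu(workload, num_gpus=1, partial=None):
    """Run `workload` through Bitfusion and return its exit code."""
    if shutil.which("bitfusion") is None:
        raise RuntimeError("bitfusion client not found on PATH in this container")
    return subprocess.run(bitfusion_argv(workload, num_gpus, partial)).returncode


if __name__ == "__main__":
    # Hypothetical training script; prints the command instead of running it.
    print(" ".join(bitfusion_argv(["python3", "train.py"], num_gpus=1, partial=0.5)))
```

Printing the argv instead of executing it keeps the example runnable on machines without a Bitfusion client; `run_on_remote_gpu` is the variant that would actually hand the job to the remote GPU server.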

Summary:

This solution has successfully validated access to Bitfusion-served GPUs from all three VMware Kubernetes platforms. ML training runs with TensorFlow and PyTorch were executed successfully with GPU access over the network through Bitfusion.

This solution has effectively shown the following:

- VMware Cloud Foundation with vSphere 7 has two key new features:

  - Native support for Kubernetes

  - Bitfusion, which enables sharing of GPUs across the network

- This solution showcased integration between Kubernetes and Bitfusion

- vSphere Bitfusion can be used over the network from Kubernetes for machine learning

Call to Action:

- Consider upgrading to vSphere 7 with Tanzu to meet developer needs

- Consolidate GPUs into a GPU server farm to optimize usage

- Provide easy access to GPUs for your data scientists and developers from Kubernetes by leveraging vSphere Bitfusion

Full details of the solution can be found in this whitepaper.