Part 3: Managed services, scalability, pricing and packaging considerations for a true serverless developer experience

In part one and part two of this blog series, we looked at the options that the VMware Cloud Provider platform, and especially VMware Cloud Director, gives VMware Cloud Provider partners for offering serverless capabilities to developers who want to build serverless web applications. We covered the motivation behind the serverless trend, a common architecture for serverless web apps and the capabilities partners can build by using VCD, OSE, CSE and Bitnami from the VMware Cloud Provider program. In this last post, we will look at the managed services a provider might want to add on top of the platform, as well as the business model and pricing considerations.

Are we serverless yet?

In the second post, we used the VCD Object Storage Extension to offer object storage to store the static web site contents. From a developer perspective, this is by default a true serverless offering where all they have to care about is configuring and consuming object storage buckets. Charges would typically apply based on the consumed object storage in different storage classes and tiers, with potential add-on charges for replication or snapshots.

Another key offering we covered was Functions-as-a-Service (FaaS), built with the VCD Extensibility Framework. Building a FaaS offering does require some work from a cloud provider because it’s a customised solution that does not come out of the box today. Partners can build a FaaS offering themselves, without additional software charges, using vRealize Orchestrator and open-source tools. With OpenFaaS, for example, a variety of events can trigger the execution of a function. Beyond the HTTP trigger described in the serverless web app example, this also works with different message brokers and even with the VMware Event Broker Appliance. Further, partners can engage with VMware Professional Services to have the FaaS solution designed, deployed, documented and supported. Simply reach out to your account manager or to ps-coe@vmware.com. Either way, FaaS is a true serverless offering from the perspective of the customer’s developers: they deploy code and trigger execution via HTTP without the need to configure or manage any servers or IaaS instances. Everything that’s required happens in the provider’s backend, not in a hyperscale provider.
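To make the "deploy code and trigger execution via HTTP" experience more concrete, here is a minimal sketch of what a developer might upload to such a FaaS offering. It assumes the `handle(req)` entry-point convention of the classic OpenFaaS Python template, where the function receives the raw HTTP request body as a string. The JSON payload shape and the `name` field are purely illustrative assumptions, not part of the solution described in this series.

```python
import json


def handle(req: str) -> str:
    """Function entry point, in the style of the classic OpenFaaS Python template.

    `req` is the raw HTTP request body; the return value becomes the response body.
    The payload format used here ({"name": ...}) is a made-up example.
    """
    try:
        payload = json.loads(req) if req else {}
    except json.JSONDecodeError:
        return json.dumps({"error": "request body must be valid JSON"})

    # Only JSON objects are accepted in this sketch.
    if not isinstance(payload, dict):
        return json.dumps({"error": "request body must be a JSON object"})

    name = payload.get("name", "world")
    return json.dumps({"message": f"Hello, {name}!"})
```

The point is what is absent: no server, container or OS configuration appears anywhere in the developer's code. Where and how the handler runs is entirely up to the provider's backend.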

The other required and optional components, like MongoDB or any other database, as well as Kong as a secure API gateway, were deployed using the Bitnami Community Catalog as vApp templates or Kubernetes Helm charts. By strict definition, these do not qualify as a true serverless offering. Even though the pre-packaged Bitnami applications are deployed automatically and hence make the solutions (databases, API gateways etc.) easy to consume, they will by default get deployed into VMs or containers that are unmanaged and the responsibility of the tenant. But in a true serverless world, a developer does not want to manage VMs and containers, or even the database software layer. This is where the provider’s managed services capabilities come into the picture.

Managed Services

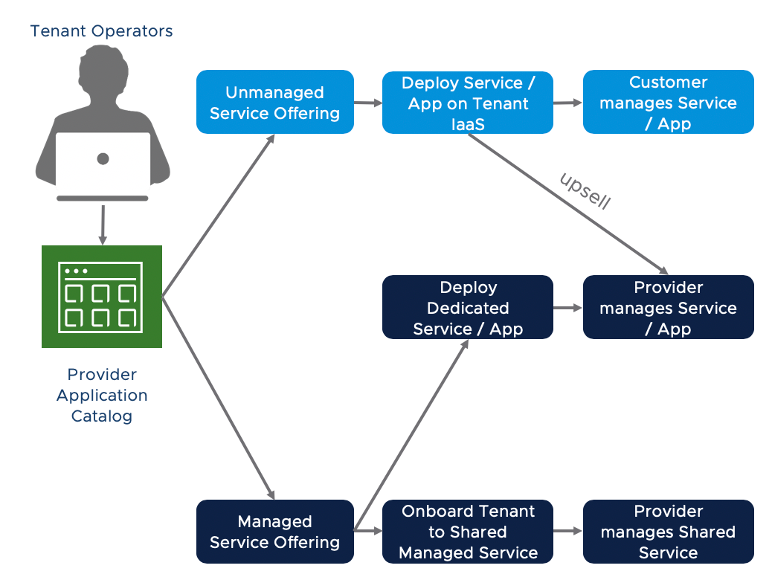

Cloud Providers basically have three ways of offering platform services and application components to their customers and customer developers: Unmanaged, managed dedicated and managed shared.

Figure 1: Unmanaged and managed platform services options

In an unmanaged offering, the customer gets the capability to deploy a service, like a database or an API gateway, ready to consume, but has to manage, update and maintain the service afterwards. This is the easiest way to start broadening the portfolio as a provider without having to invest heavily in managed services. However, it is not serverless from a customer perspective since the customer still manages the underlying IaaS servers and installations. Also, a provider will probably only be able to charge for the underlying IaaS instances and nothing on top.

The second approach is to offer databases, API-gateways and many more as a managed service. In a dedicated fashion, each tenant can get one or several dedicated instances of the service, but these are fully lifecycle managed by the provider. Such managed services could also be upsell-options to unmanaged services and something a provider can charge a managed services fee for. From a developer perspective, this would qualify as a serverless offering since they simply consume a managed Database-as-a-Service or a managed API-Gateway-as-a-Service. The provider takes care of the underlying IaaS Infrastructure as well as updating and maintaining the service. However, the customer would probably get charged based on the entire IaaS VM Instance plus a managed service fee per instance. The financial aspect is therefore not 100% what a customer might expect from a true serverless offering. Which brings us to the last approach: Managed shared services.

Scaling the Platform

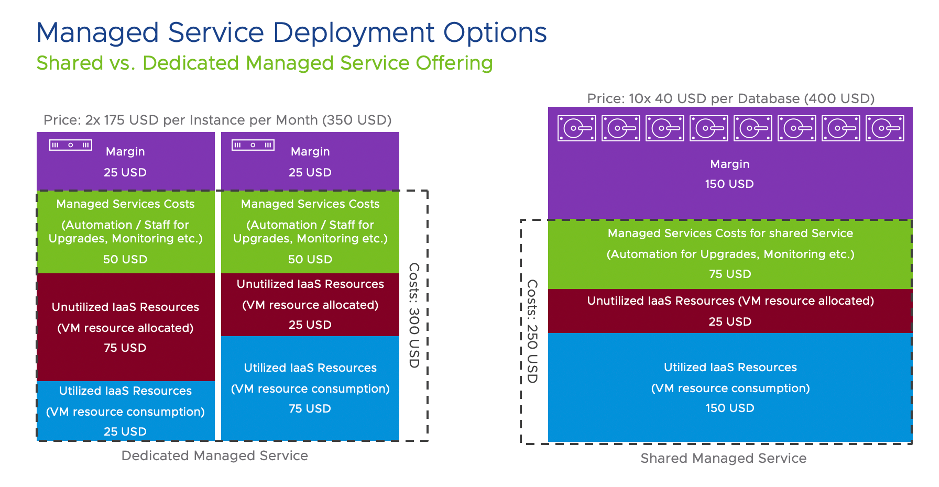

Most admins and developers understand even though a service is called serverless, there are in fact actual servers running somewhere in the providers backend. The appealing aspect here, besides not having to care about these underlying servers, is typically the granular and truly consumption-based charging metric. For example, AWS Lambda (Serverless Functions-as-a-Service) gets charged based on execution requests and execution GB-seconds for memory. AWS Aurora (Database-as-a-Service) gets charged based on Database Storage and read/write requests. And Microsoft Azure Container Instances (Serverless Container-as-a-Service) gets charged based on GB-seconds and vCPU-seconds of container execution. Building such a granular charging logic of course requires more customisation and implementation efforts from the provider. But it also allows the provider to have multiple tenants using the same backend systems, for example a single database cluster in the backend that hosts different databases for several tenants that get charged on a per Database or per GB basis. Compared to dedicated instances, these offerings would be lower in price and offer less flexibility or customisation options for customers. Yet by consolidating managed workloads across tenants on a shared platform, the provider will be able to generate more revenue and improve margin at scale:

Figure 2: Dedicated vs. shared manage services
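The difference between the two charging models can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the names `FaasRates`, `faas_charge` and `dedicated_charge`, and every price used with them, are hypothetical and not taken from any real price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FaasRates:
    """Hypothetical consumption rates for a shared FaaS offering."""
    per_million_requests: float  # price per one million executions
    per_gb_second: float         # price per GB-second of memory during execution


def faas_charge(requests: int, avg_duration_s: float, memory_gb: float,
                rates: FaasRates) -> float:
    """Consumption-based charge: request count plus memory GB-seconds."""
    gb_seconds = requests * avg_duration_s * memory_gb
    return (requests / 1_000_000) * rates.per_million_requests \
        + gb_seconds * rates.per_gb_second


def dedicated_charge(instances: int, vm_price: float, managed_fee: float) -> float:
    """Dedicated managed service: full IaaS VM price plus a managed fee per instance."""
    return instances * (vm_price + managed_fee)


if __name__ == "__main__":
    # All figures below are made-up monthly values for illustration only.
    rates = FaasRates(per_million_requests=0.25, per_gb_second=0.00002)
    print("shared, consumption-based:", faas_charge(2_000_000, 0.5, 0.25, rates))
    print("dedicated, per instance:  ", dedicated_charge(2, 80.0, 40.0))
```

In the consumption-based model the bill follows actual usage and drops to zero when nothing runs, whereas the dedicated model charges for each instance regardless of load. That is why the provider needs metering of requests and execution time before a shared offering can be billed this way.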

Another consideration for a provider is whether to deploy managed service and serverless offerings into VMs or into Kubernetes container pods in the backend, for example by using the Container Service Extension on VMware Cloud Director. Using containers instead of VMs can greatly improve the efficiency of the provider’s platform and therefore reduce its costs. This is because the overhead from running a separate OS in every VM-hosted service instance is significantly reduced when using containers. And since most providers will want to start offering Containers or Kubernetes-as-a-Service to their customers soon, they can leverage the synergies from this platform to build serverless offerings based on containers, too.
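A rough back-of-the-envelope model shows where the efficiency gain comes from. The sketch below compares the memory footprint of service instances that each run in their own VM with the same instances packed as containers onto a few shared Kubernetes worker nodes. The function names and all numbers are assumptions chosen for illustration; real overheads depend on the guest OS, the workload and the cluster design.

```python
def vm_memory_gb(instances: int, service_gb: float, guest_os_gb: float) -> float:
    """Every VM-hosted instance carries its own guest OS overhead."""
    return instances * (service_gb + guest_os_gb)


def container_memory_gb(instances: int, service_gb: float,
                        nodes: int, node_os_gb: float) -> float:
    """Containers share the OS of the worker node they are scheduled on."""
    return instances * service_gb + nodes * node_os_gb


if __name__ == "__main__":
    # Hypothetical sizing: 40 service instances of 1 GB each,
    # 1 GB OS overhead per VM, or 4 worker nodes with 1 GB OS overhead each.
    print("VM-based:       ", vm_memory_gb(40, 1.0, 1.0), "GB")
    print("container-based:", container_memory_gb(40, 1.0, 4, 1.0), "GB")
```

Under these assumed figures, the OS overhead is paid once per worker node instead of once per service instance, which is the effect the paragraph above describes.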

Conclusion – Expanding the Portfolio

This concludes the third and last part of Building a Developer Ready Cloud to offer serverless web application capabilities with the VMware Cloud Provider Platform. We have seen how VMware Cloud Provider Partners can offer ready-to-consume and managed serverless capabilities on the VMware Cloud Director Platform. This is achieved with different approaches, ranging from existing platform extensions from VMware, like the Object Storage Extension and the Container Service Extension, to deploying and managing pre-packaged open-source tools from the Bitnami Community Catalog and customising the platform for specific use cases with the VCD extensibility framework.

No need to stop here! The comprehensive VMware Cloud Provider platform allows providers to rapidly expand their portfolio of developer ready offerings, increase the adoption of modern applications and drive innovation for their customers. Through the unique combination of VCD, Bitnami and the Open-Source Community, partners can offer a whole range of new services and solutions:

- Ready to consume WordPress Blogs

- Content Management Systems based on Drupal

- TensorFlow deployments for machine learning projects

- Hadoop clusters for big data jobs

- CI/CD pipelines built on Jenkins

- Message brokers for distributed systems using Apache Kafka or RabbitMQ

- Monitoring Services with Prometheus

- … and many more:

Figure 3: Bitnami Community Catalog and VMware Cloud Marketplace

I hope you enjoyed this series of blog posts for service providers and look forward to your feedback and comments. If you would like to learn more about this topic, please reach out to your VMware Cloud Provider partner manager. And take a look at our Cloud Native eBook for cloud provider partners.