In part 1 of this blog, we introduced distributed machine learning and the virtualized infrastructure components used in the solution. In this part, we look at the testing methodology and the results, and analyze how throughput scales with the number of worker nodes and with fractional GPUs provided by Bitfusion.

5.1 Running the Distributed TensorFlow benchmark in Kubeflow with Bitfusion

The following is a summary of the steps used to deploy and run the solution.

- VMware Tanzu Kubernetes Grid (TKG) and Kubeflow were installed.

- Ubuntu 16.04 Docker images were used in the solution.

- A Docker image was used as the base, and Bitfusion Flexdirect was installed inside it. Appendix B shows the Dockerfile that was used to create the image.

- A distributed TensorFlow job was created using TensorFlow Training (TFJob). TFJob is a Kubernetes custom resource for running TensorFlow training jobs on Kubernetes.

- Appendix A shows a YAML file that runs a distributed TensorFlow training job with 4 parameter servers (PS) and 12 workers, each using 0.25 of a GPU and accessing the GPU over the network through Bitfusion Flexdirect.

- Parameter Servers (PS) are servers that provide a distributed data store for the model parameters.

- Workers: The workers do the actual work of training the model.

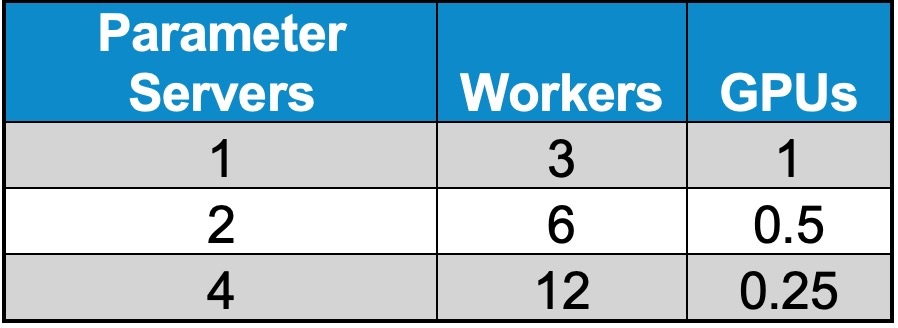
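The division of labor between parameter servers and workers can be illustrated with a toy, single-process sketch. This is not how TensorFlow implements it: the names `ParameterServer`, `shard_for`, `worker_step`, and `train`, as well as the stand-in quadratic loss, are hypothetical and chosen only to show parameters being spread across PS nodes while workers pull values, compute gradients, and push updates back.

```python
# Toy, single-process illustration of the parameter server pattern.
# All names are hypothetical; real TensorFlow places variables on PS tasks
# and exchanges tensors over the network.


class ParameterServer:
    """Holds one shard of the model parameters and applies gradient updates."""

    def __init__(self, learning_rate):
        self.params = {}  # parameter name -> float value
        self.learning_rate = learning_rate

    def pull(self, name):
        return self.params[name]

    def push(self, name, gradient):
        # Plain SGD update applied on the server side.
        self.params[name] -= self.learning_rate * gradient


def shard_for(index, servers):
    """Round-robin placement of the index-th parameter onto a server."""
    return servers[index % len(servers)]


def worker_step(names, servers, targets):
    """One worker step: pull each parameter, compute its gradient, push it back.

    The stand-in loss is sum((w - target)^2), so the gradient for each
    parameter w is 2 * (w - target).
    """
    for i, name in enumerate(names):
        server = shard_for(i, servers)
        w = server.pull(name)
        server.push(name, 2.0 * (w - targets[name]))


def train(num_ps=4, num_workers=12, steps=100):
    servers = [ParameterServer(learning_rate=0.01) for _ in range(num_ps)]
    names = ["w%d" % i for i in range(8)]
    targets = {name: float(i) for i, name in enumerate(names)}
    for i, name in enumerate(names):
        shard_for(i, servers).params[name] = 0.0
    for _ in range(steps):
        # Workers are simulated one after another; in a real job they run in parallel.
        for _ in range(num_workers):
            worker_step(names, servers, targets)
    final = {name: shard_for(i, servers).pull(name) for i, name in enumerate(names)}
    return final, targets
```

Calling `train()` with its defaults mirrors the 4 PS and 12 worker layout used in the tests, and each parameter converges toward its target value.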

- The following table shows the combinations of TFJobs that were run, varying the GPU share per worker (values such as 1.0, 0.5, and 0.25, so that partial GPUs were also tested) and the number of worker and parameter server nodes:

Table 3: Number of parameter servers and workers for different use cases

- The job was run with TCP/IP as the transport mechanism over 100 Gbps network infrastructure.

- Each training job was run for 100 steps, which takes a few minutes on a GPU cluster.

- The logs were inspected to monitor the progress of the jobs.
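Inspecting the logs can be partly automated. The sketch below pulls the final throughput figure out of a worker's log text, assuming the log contains the `total images/sec:` summary line that `tf_cnn_benchmarks.py` prints at the end of a run. The function name `parse_total_images_per_sec` and the sample log are made up for illustration, and the log text itself would have to be collected separately, for example with `kubectl logs`.

```python
import re

# Matches the summary line, for example "total images/sec: 123.45".
_TOTAL_RE = re.compile(
    r"^total images/sec:\s*([0-9]+(?:\.[0-9]+)?)\s*$", re.MULTILINE
)


def parse_total_images_per_sec(log_text):
    """Return the last reported total images/sec, or None if no summary line exists yet."""
    matches = _TOTAL_RE.findall(log_text)
    return float(matches[-1]) if matches else None


# Made-up sample for illustration only; not output from the tests in this post.
SAMPLE_LOG = """\
Step\tImg/sec\ttotal_loss
1\timages/sec: 100.0 +/- 0.0 (jitter = 0.0)\t8.000
----------------------------------------------------------------
total images/sec: 101.50
----------------------------------------------------------------
"""

print(parse_total_images_per_sec(SAMPLE_LOG))
```

Returning `None` when the summary line is absent makes it easy to tell a job that is still running from one that has finished.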

The infrastructure was used to run the distributed TensorFlow machine learning benchmarks while varying the total number of nodes and GPUs used. The tests were run over TCP/IP, and the results were compared.

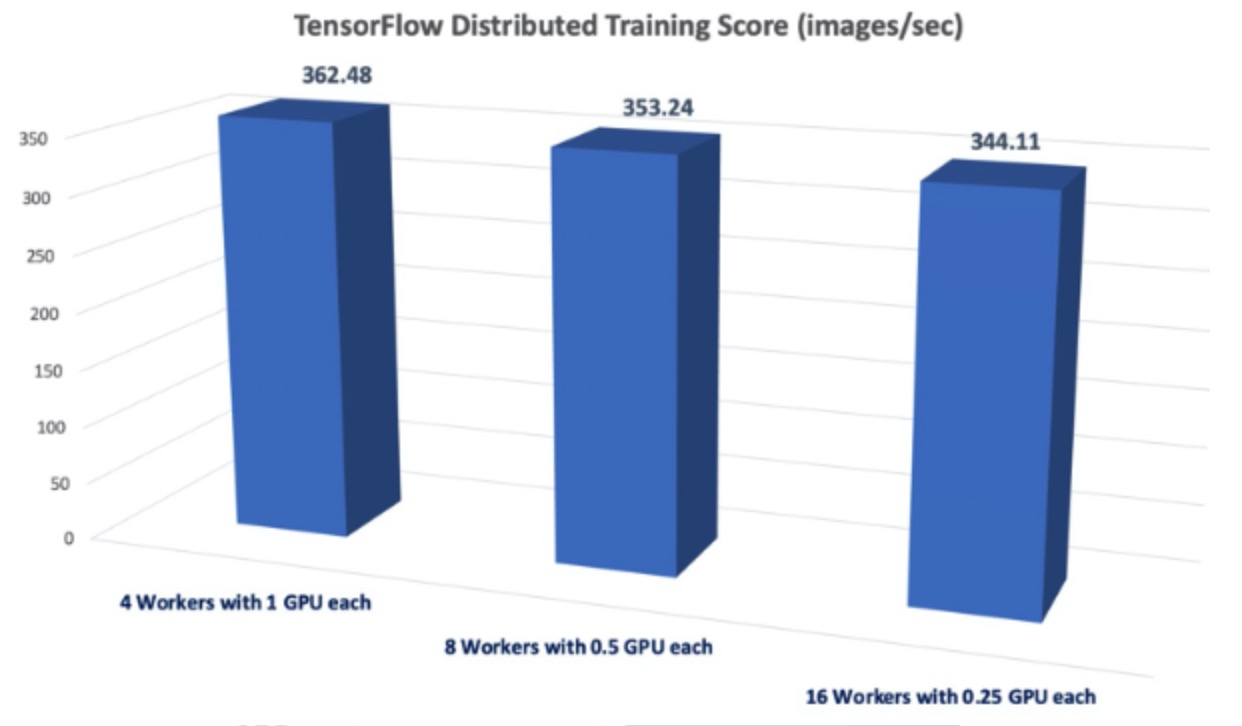

Figure 5: Image processing throughput with Distributed TensorFlow

The bar chart compares image processing throughput for the three use cases. The number of images processed per second shows less than 5% degradation even when the work is distributed across four times the number of nodes, compared to the baseline of four nodes with one GPU each.
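The degradation figure quoted above is a relative difference against the baseline. A minimal sketch of that calculation follows; the function name `degradation_pct` and the throughput numbers are hypothetical placeholders, not the measured values from the tests.

```python
def degradation_pct(baseline_images_per_sec, observed_images_per_sec):
    """Percentage drop in throughput relative to the baseline configuration."""
    if baseline_images_per_sec <= 0:
        raise ValueError("baseline throughput must be positive")
    drop = baseline_images_per_sec - observed_images_per_sec
    return 100.0 * drop / baseline_images_per_sec


# Placeholder numbers for illustration only (not measurements from this post).
baseline = 1000.0  # for example, four workers with one full GPU each
observed = 960.0   # for example, more workers sharing GPUs in fractions
print(round(degradation_pct(baseline, observed), 1))
```

A negative result would mean the distributed configuration outperformed the baseline.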

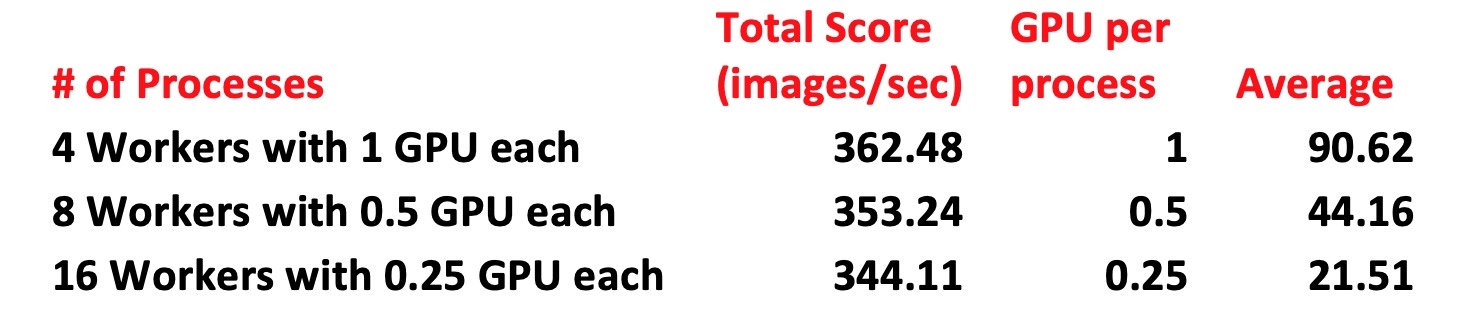

Table 4: Images processed per second with different worker & GPU combinations

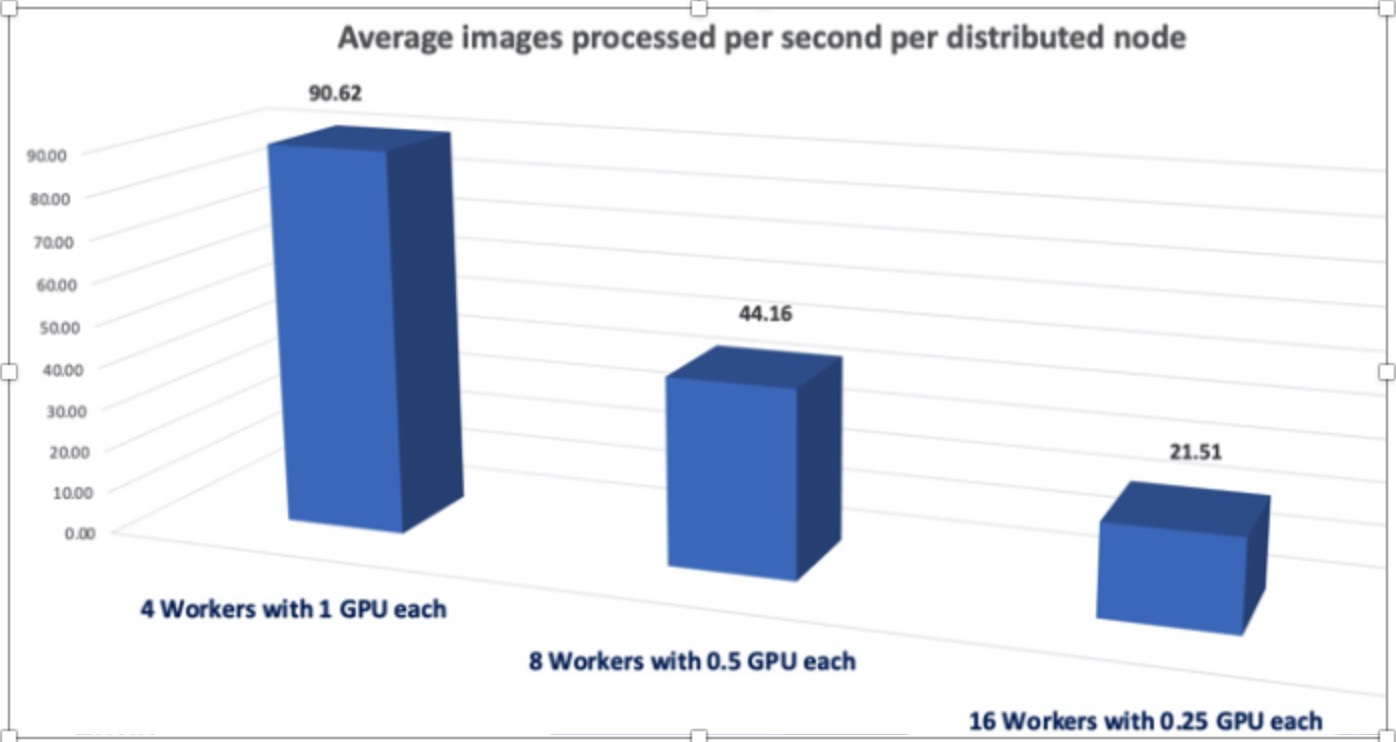

Figure 6: Image processing throughput with Distributed TensorFlow

Figure 6 shows that the performance of the distributed worker nodes scales linearly when fractional GPUs are used. Depending on the average GPU needs of the user community, Bitfusion can be leveraged to provide full or partial GPUs in a flexible manner with minimal impact on performance.
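One way to quantify how close a set of runs comes to linear scaling is to normalize each run's throughput by the total GPU share it consumed (workers multiplied by the GPU fraction per worker) and compare that with the baseline run. The helper below is a sketch of that check; the function names and the sample data are hypothetical and do not reproduce the numbers behind Figure 6.

```python
def per_gpu_throughput(workers, gpu_fraction, images_per_sec):
    """Aggregate throughput divided by the total GPU share in use."""
    return images_per_sec / (workers * gpu_fraction)


def scaling_efficiency(runs):
    """Per-GPU throughput of each run relative to the first (baseline) run.

    `runs` is a list of (workers, gpu_fraction, images_per_sec) tuples.
    A value of 1.0 means throughput grew in exact proportion to the GPU
    share consumed, which is what linear scaling looks like.
    """
    base = per_gpu_throughput(*runs[0])
    return [per_gpu_throughput(*run) / base for run in runs]


# Placeholder data for illustration only (not measurements from this post).
sample_runs = [
    (4, 1.0, 1000.0),   # 4 workers, one full GPU each
    (8, 0.5, 980.0),    # 8 workers, half a GPU each
    (12, 0.25, 735.0),  # 12 workers, a quarter of a GPU each
]
print([round(e, 2) for e in scaling_efficiency(sample_runs)])
```

Values close to 1.0 across all runs indicate that splitting GPUs into fractions costs little throughput per unit of GPU.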

Distributed machine learning across multiple nodes can be used effectively for training. The results showed that GPUs can be shared across jobs with minimal loss of performance. VMware Bitfusion makes distributed training scalable across physical resources, so the GPU capacity available to a job is not limited to what is installed in any one host. The solution showcases the benefits of combining best-in-class infrastructure, provided by NVIDIA GPUs with vComputeServer software and the VMware SDDC with Bitfusion, to run distributed machine learning across a scalable infrastructure.

Appendix A: YAML for the distributed TensorFlow training job (4 PS, 12 workers, 0.25 GPU each)

    apiVersion: kubeflow.org/v1
    kind: TFJob
    metadata:
      name: tfjob1
      namespace: kubeflow
    spec:
      cleanPodPolicy: None
      tfReplicaSpecs:
        PS:
          replicas: 4
          restartPolicy: OnFailure
          template:
            metadata:
              labels:
                app: tfjob1
            spec:
              affinity:
                podAntiAffinity:
                  requiredDuringSchedulingIgnoredDuringExecution:
                  - labelSelector:
                      matchExpressions:
                      - key: app
                        # operator: Exists
                        operator: In
                        values:
                        - tfjob1
                    topologyKey: "kubernetes.io/hostname"
              containers:
              - name: tensorflow
                image: pmohan77/tfjob:tf_cuda
                imagePullPolicy: Always
                command:
                - flexdirect
                - run
                - --num_gpus=1
                - --partial=0.25
                - python
                - /opt/tf-benchmarks/scripts/tf_cnn_benchmarks/tf_cnn_benchmarks.py
                - --variable_update=parameter_server
                - --model=resnet50
                - --batch_size=32
                - --num_batches=100
                - --data_format=NCHW
                - --device=gpu
                volumeMounts:
                - name: bitfusionio
                  mountPath: /etc/bitfusionio
                  readOnly: true
              volumes:
              - name: bitfusionio
                hostPath:
                  path: /etc/bitfusionio
        Worker:
          replicas: 12
          restartPolicy: OnFailure
          template:
            metadata:
              labels:
                app: tfjob1
            spec:
              affinity:
                podAntiAffinity:
                  requiredDuringSchedulingIgnoredDuringExecution:
                  - labelSelector:
                      matchExpressions:
                      - key: app
                        # operator: Exists
                        operator: In
                        values:
                        - tfjob1
                    topologyKey: "kubernetes.io/hostname"
              containers:
              - name: tensorflow
                image: pmohan77/tfjob:tf_cuda
                imagePullPolicy: Always
                command:
                - flexdirect
                - run
                - --num_gpus=1
                - --partial=0.25
                - python
                - "/opt/tf-benchmarks/scripts/tf_cnn_benchmarks/tf_cnn_benchmarks.py"
                - --variable_update=parameter_server
                - --model=resnet50
                - --batch_size=32
                - --num_batches=100
                - --data_format=NCHW
                - --device=gpu
                volumeMounts:
                - name: bitfusionio
                  mountPath: /etc/bitfusionio
                  readOnly: true
              volumes:
              - name: bitfusionio
                hostPath:
                  path: /etc/bitfusionio
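As a quick sanity check on the TFJob specification above, the total GPU share it requests can be added up from the replica counts and the `--num_gpus` and `--partial` values. The sketch below hard-codes the numbers from that specification (4 PS and 12 workers, each launched with `--num_gpus=1 --partial=0.25`). The function name `total_gpu_share` is hypothetical, and the final figure assumes the fractions pack perfectly onto physical GPUs, which this post does not verify.

```python
import math


def total_gpu_share(replicas, num_gpus, partial):
    """GPU share requested by a replica group: replicas * num_gpus * partial."""
    return replicas * num_gpus * partial


# Values copied from the TFJob specification; both replica types use the same flags.
ps_share = total_gpu_share(replicas=4, num_gpus=1, partial=0.25)
worker_share = total_gpu_share(replicas=12, num_gpus=1, partial=0.25)

print("PS share:", ps_share)
print("Worker share:", worker_share)
# Assumption: fractional allocations pack onto physical GPUs with no waste.
print("Whole GPUs needed at minimum:", math.ceil(ps_share + worker_share))
```

Changing `partial` to 0.5 or 1.0 shows how the same replica counts translate into a larger GPU footprint.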

Appendix B: Dockerfile used to create the image with Bitfusion Flexdirect

    #
    # To use this Dockerfile, use the docker build command.
    # See https://docs.docker.com/engine/reference/builder/
    # for more information.
    #
    FROM kubeflow/tf-benchmarks-gpu:v20171202-bdab599-dirty-284af3

    RUN apt-get update && apt-get install -y --no-install-recommends \
            vim wget git \
        && rm -rf /var/lib/apt/lists/

    # Install flexdirect v1.11.7 first and then upgrade flexdirect to v1.11.9
    RUN echo "91.189.88.173 archive.ubuntu.com" >> /etc/hosts \
        && echo "91.189.88.174 security.ubuntu.com" >> /etc/hosts \
        && echo "192.229.211.70 developer.download.nvidia.com" >> /etc/hosts \
        && echo "13.224.29.66 getfd.bitfusion.io" >> /etc/hosts \
        && echo "52.216.204.37 s3.amazonaws.com" >> /etc/hosts \
        && cd /tmp && wget -O installfd getfd.bitfusion.io \
        && chmod +x installfd \
        && ./installfd -v fd-1.11.7 -- -s -m binaries \
        && export BF_SILENT_INSTALL=1 \
        && export USE_TTY="" \
        && export BF_INSTALL_FDMODE_COMPATIBILITY="binaries" \
        && flexdirect upgrade

    ENTRYPOINT ["/opt/launcher.py"]