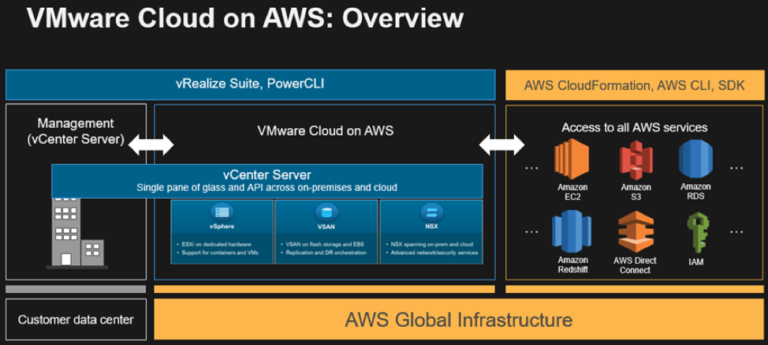

This article describes a set of work that was done at VMware’s labs with Confluent staff to demonstrate deployment of the full Confluent Platform, using the Confluent Operator, on VMware vSphere 7 with Kubernetes. The Confluent Platform is a collection of processes, including the Kafka brokers and others that provide cluster robustness, management and scalability. The Confluent Operator allows the various components of the Confluent Platform to be easily installed and then managed throughout their lifetime. In this case a Tanzu Kubernetes Grid (TKG) cluster operating on vSphere 7 was used as the infrastructure for this work. A Kubernetes Operator is a widely accepted way to deploy an application or application platform, particularly those that are made up of several communicating parts such as this one. Operators are popular in the Kubernetes world today and a catalog of different application operators, based on Helm Charts, is held in the Tanzu Application Catalog.

Source: The Confluent Platform web page

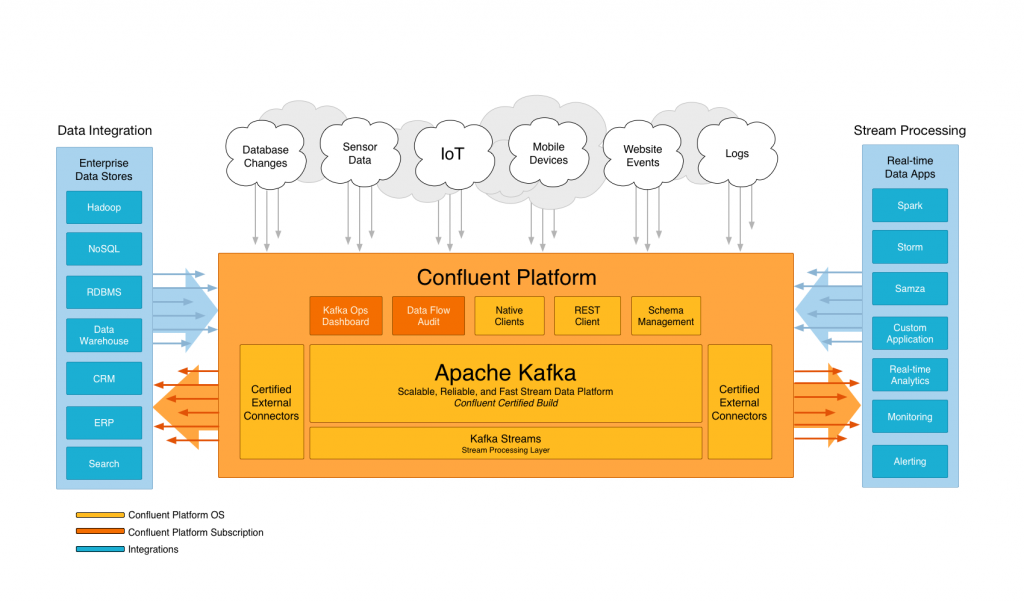

Apache Kafka is an open source software platform that is used very widely in the industry for inter-application messaging and event streaming at high speed and scale for many modern applications. Many use cases of Kafka are applications that perform machine learning training or inference and analytics tasks on streaming data. One example of this kind of application is seen in the IoT analytics benchmark environment that was developed at VMware. The image above from the Confluent site shows a general view of the world that Kafka operates in, forming the glue between different applications and between those applications and the outside world. It is used by most of the large internet companies as well as by many thousands of smaller companies to handle streams of live data as they flow into and out of their applications to others. Confluent is the go-to company for Kafka-based event streaming. The Confluent staff worked closely with VMware personnel on proving the Confluent Platform and the Operator on vSphere 7.

As is the case with most Kubernetes operators, the downloadable Confluent Operator package includes a YAML specification file that makes it easier to deploy and manage all the Kafka components (event brokers, Schema Registry, ZooKeeper nodes, Control Center, etc.). Once deployed, the operator and the Kafka runtime (i.e. the Confluent Platform, which has several components) are hosted in pods on a set of virtual machines running on VMware vSphere 7 with Kubernetes.

The version of the Confluent Operator used for this work was V0.65.1. The downloadable tar bundle for the Operator is located on the Deploying Confluent Operator and Confluent Platform web page.

Configure a TKG Cluster on vSphere 7

Generally, the VMware system administrator creates TKG clusters for the developer/testing/production community to use. They can do this once the Kubernetes functionality is enabled on vSphere 7 and vCenter 7. This blog article does not go into detail on the steps taken by the system administrator to enable the vSphere Kubernetes environment (also called enabling “Workload Management” within vCenter). Those details are given in a separate document, the vSphere with Kubernetes QuickStart Guide.

The commands below are executed on a VM that was created by the system administrator with the appropriate access rights to your newly created TKG cluster. The vSphere with Kubernetes supervisor cluster is the management and control center for several TKG or “guest” clusters, each created for a developer or test engineer’s work by the VMware administrator.

The administrator logs in to the Supervisor cluster on vSphere via the Kubernetes API server for that cluster. An example of the command to log in to the API server for the Supervisor cluster is shown here.

```shell
kubectl vsphere login --server 10.174.4.33 --vsphere-username administrator@vsphere.local --insecure-skip-tls-verify
```

The VMware administrator creates, among other items, a Storage Class and a Namespace within the vSphere Supervisor Cluster to contain the new TKG cluster (as shown in the YAML below for the creation of that TKG cluster). The Kubernetes namespace here maps to a vSphere resource pool, which allows administrators to control the CPU and memory resources used by the new TKG cluster when needed. Each development/testing project can operate in a namespace of its own, with its own contained TKG cluster.

Administrators create a TKG Cluster with a YAML specification file that follows the pattern of the example below. The specific edits the VMware system administrator would make are to the “class” and “storageClass” items here.

```yaml
apiVersion: gcm.vmware.com/v1alpha1
kind: ManagedCluster
metadata:
  name: confluent-gc
  namespace: confluent-ns
spec:
  topology:
    controlPlane:
      count: 1
      class: guaranteed-small # vmclass to be used for master(s)
      storageClass: project-pacific-storage
    workers:
      count: 7
      class: guaranteed-large # vmclass to be used for workers(s)
      storageClass: project-pacific-storage
  distribution:
    version: v1.15.5+vmware.1.66-guest.1.37
  settings:
    network:
      cni:
        name: calico
      services:
        cidrBlocks: ["198.51.100.0/12"]
      pods:
        cidrBlocks: ["192.0.2.0/16"]
```

Note that the “class” attribute is set to “guaranteed-large” above. This parameter configures the size of the Worker VMs that are used to contain the pods. Certain components/services of the Confluent Platform, such as the SchemaRegistry service, require larger memory/core configuration and this is specified in that class parameter when creating the TKG cluster.

Deploy the Confluent Operator on the vSphere TKG Cluster

In our lab testing work, we used a Linux-based VM with the Kubectl vSphere Plugin installed in it – this we referred to as our TKG “cluster access” VM. Having received instructions on using the cluster access VM from the VMware system administrator, we were ready to start work on our new TKG cluster.

Once logged in to a cluster access VM, the first step is to use the “tar” command to extract the contents of the Confluent Operator tar file you downloaded from the Confluent site to a known directory. You may need to unzip the file first.

```shell
tar -xvf confluent-operator-file-name.tar
```

We made a small set of changes to the supplied “private.yaml” file within the “providers” directory under the Confluent Operator main directory. These were customizations to make it specific to our setup. The version of the private.yaml file that we used for our testing is given in full in Appendix 1.

Note: Exercise care with the spacing if you choose to copy some contents from this file – the Helm tool and other YAML tools are very picky about the layout and spacing!

Each named section of the private.yaml file (such as those named “kafka” and “zookeeper”) describes the deployment details for a Kubernetes service. There are Kubernetes pods deployed to support those services, which we will see later on.

Set Up the Helm Tool

We installed the Helm tool into the TKG cluster access VM. There are instructions for this on the Deploying Confluent Operator and Confluent Platform web page. The instructions below are taken from that Confluent web page, with some added guidelines for vSphere with Kubernetes.

Login to the TKG Cluster

Once logged in to the cluster access virtual machine, first log in to the Kubernetes cluster and set your context.

```shell
kubectl vsphere login --server ServerIPAddress --vsphere-username username --managed-cluster-name clustername --insecure-skip-tls-verify
```

```shell
kubectl config use-context contextname
```

You choose the Kubernetes namespace to operate in, as directed by your system administrator. The namespace is part of the context you operate in. In our case, we used the “default” namespace for some of our earlier testing work. Later on, as the testing got going, we formalized this to use more meaningful namespace names.

Installing the Confluent Operator

The “private.yaml” file mentioned in the various helm commands below contains the specifications for the various Confluent components. It is copied from the template private.yaml that is given in the Confluent Operator’s “helm/providers” directory. To install the various components of the Confluent Platform/Kafka on vSphere with Kubernetes, we used an edited “private.yaml” cluster specification that is fully listed in Appendix 1. The private.yaml file required certain site-specific parameters for a particular implementation, such as DNS names for kafka brokers, for example. Those edited parts are shown in bold font in the listing of the contents in Appendix 1.

Install the Operator Service Using Helm

```shell
helm install operator ./confluent-operator -f ./providers/private.yaml --namespace default --set operator.enabled=true
```

Apply a Set of Kubernetes Parameters

The following instructions allow certain actions on behalf of the vSphere user that are necessary for a clean installation. They complement the Confluent installation instructions given at the web page above.

```shell
kubectl -n default patch serviceaccount default -p '{"imagePullSecrets": [{"name": "confluent-docker-registry" }]}'
```

```shell
kubectl apply -f https://raw.githubusercontent.com/dstamen/Kubernetes/master/demo-applications/allow-runasnonroot-clusterrole.yaml
```

```shell
kubectl apply -f https://raw.githubusercontent.com/dstamen/Kubernetes/master/demo-applications/allow-all-networkpolicy.yaml
```

The Zookeeper Service

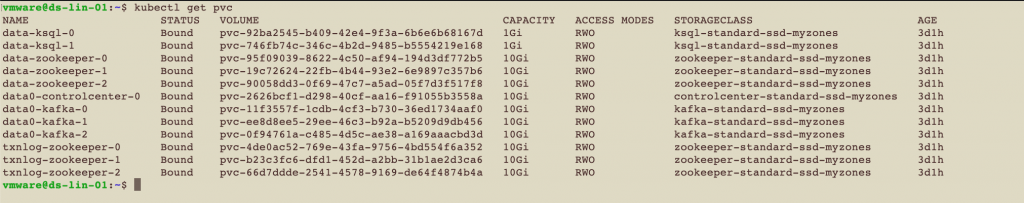

Before installing this service, there are some prerequisites at the vSphere and Kubernetes levels. First, in the vSphere Client, create a tag that maps to a storage policy named “zookeeper-standard-ssd-myzones” and attach it to the target namespace. This named storage class is needed so that the storage provisioner, csi.vsphere.vmware.com, mentioned in the global section of the private.yaml file, can assign a storage volume to enable Persistent Volume Claims (PVCs) to be used at the Kubernetes level. The Zookeeper service requires PVC support to work. Then, in your TKG cluster access VM, issue the command below.

```shell
helm install zookeeper ./confluent-operator -f ./providers/private.yaml --namespace default --set zookeeper.enabled=true
```

The Persistent Volume Claims may be seen in Kubernetes by issuing:

```shell
kubectl get pvc
```

After all the services in this list were deployed, we saw their claims listed by this command.

The Kafka Service

```shell
helm install kafka ./confluent-operator -f ./providers/private.yaml --namespace default --set kafka.enabled=true
```

See the Note below on certain edits that are required to the Kafka service LoadBalancer, once it is deployed.

In this deployment, the LoadBalancer’s “externalTrafficPolicy” defaults to “Local” instead of “Cluster”. The former is not supported on the current TKG release, so we need to change the traffic policy attribute from “Local” to “Cluster” on each LoadBalancer entry within the Kafka service. The details on this are described below.

The SchemaRegistry Service

```shell
helm install schemaregistry ./confluent-operator -f ./providers/private.yaml --namespace default --set schemaregistry.enabled=true
```

The Connectors Service

```shell
helm install connectors ./confluent-operator -f ./providers/private.yaml --namespace default --set connect.enabled=true
```

The Replicator Service

```shell
helm install replicator ./confluent-operator -f ./providers/private.yaml --namespace default --set replicator.enabled=true
```

The ControlCenter Service

```shell
helm install controlcenter ./confluent-operator -f ./providers/private.yaml --namespace default --set controlcenter.enabled=true
```

The KSQL Service

```shell
helm install ksql ./confluent-operator -f ./providers/private.yaml --namespace default --set ksql.enabled=true
```
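The install commands above differ only in the release name and the “enabled” flag, so they can be generated from a single list. The following is a minimal sketch rather than part of the Confluent instructions: `print_helm_installs` and `COMPONENTS` are hypothetical names, and the script only prints the commands so that they can be reviewed before anything is run.

```shell
#!/bin/sh
# Print (do not run) one "helm install" command per Confluent Platform
# component, in the order used in this article.
# Each entry is release:flag, because the "connectors" release is enabled
# through the "connect" key of private.yaml.
COMPONENTS="operator:operator zookeeper:zookeeper kafka:kafka schemaregistry:schemaregistry connectors:connect replicator:replicator controlcenter:controlcenter ksql:ksql"

print_helm_installs() {
  namespace="${1:-default}"   # namespace argument, "default" if omitted
  for entry in $COMPONENTS; do
    release="${entry%%:*}"    # text before the colon
    flag="${entry##*:}"       # text after the colon
    echo "helm install $release ./confluent-operator -f ./providers/private.yaml --namespace $namespace --set $flag.enabled=true"
  done
}

print_helm_installs default
```

In our walkthrough each service was installed separately, and the kubectl patch and apply steps come between the operator and Zookeeper installs, so the printed list is best used as a checklist rather than piped straight into a shell.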

NOTE: Once all of the Kubernetes services listed above that make up the Confluent Platform are successfully deployed, we edit the Kafka service to change certain characteristics of the LoadBalancer section for that service. This is needed for vSphere compatibility. Use the following command to do that:

```shell
kubectl edit svc kafka-0-lb
```

In the “spec:” section, add a new line or change the existing line to read as follows:

```yaml
externalTrafficPolicy: Cluster
```

The same procedure will need to be done to the kafka-1-lb and kafka-2-lb Kubernetes services. These are the three Kafka LoadBalancer services.
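As an alternative to three interactive edit sessions, the same change can be expressed with `kubectl patch`. This is a sketch of that alternative, not the method we used in the lab; `print_lb_patches` is a hypothetical helper name, and the script prints the commands instead of running them.

```shell
#!/bin/sh
# Print one "kubectl patch" command per Kafka LoadBalancer service
# (kafka-0-lb, kafka-1-lb, kafka-2-lb) that sets externalTrafficPolicy
# to Cluster. Nothing is executed against the cluster.
print_lb_patches() {
  namespace="${1:-default}"   # namespace argument, "default" if omitted
  for i in 0 1 2; do
    echo "kubectl -n $namespace patch svc kafka-$i-lb -p '{\"spec\":{\"externalTrafficPolicy\":\"Cluster\"}}'"
  done
}

print_lb_patches default
```

After reviewing the three printed lines, they can be pasted into the cluster access VM one at a time.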

An example of the contents of that edit session is shown here:

```yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    external-dns.alpha.kubernetes.io/hostname: b0.cpbu.lab
    external-dns.alpha.kubernetes.io/ttl: "300"
    service.beta.kubernetes.io/aws-load-balancer-additional-resource-tags: kube_namespace=default,lb-scope=per-pod,zone=myzones
  creationTimestamp: "2020-01-07T17:41:33Z"
  name: kafka-0-lb
  namespace: default
  resourceVersion: "13342"
  selfLink: /api/v1/namespaces/default/services/kafka-0-lb
  uid: 0ccac447-d883-4d98-aa3f-492c7f45d8ee
spec:
  clusterIP: 198.57.212.123
  externalTrafficPolicy: Cluster
  ports:
  - nodePort: 32016
    port: 9092
    protocol: TCP
    targetPort: 9092
  selector:
    physicalstatefulcluster.core.confluent.cloud/name: kafka
    physicalstatefulcluster.core.confluent.cloud/version: v1
    statefulset.kubernetes.io/pod-name: kafka-0
  sessionAffinity: None
  type: LoadBalancer
status:
  loadBalancer:
    ingress:
    - ip: 10.174.4.42
```

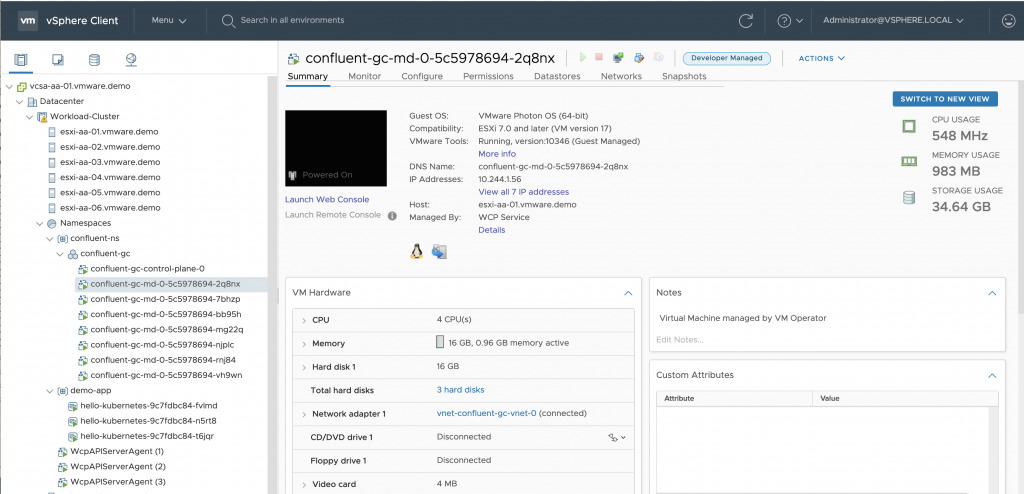

vSphere Client View

Once the Confluent Operator is deployed on vSphere with Kubernetes, you can view the VMs in the vSphere Client tool that each map to the Node functionality in Kubernetes. Here is an image of that setup taken from a later test experiment we did with a different namespace name. Each Kubernetes node is implemented as a VM on vSphere. For the reader who is not familiar with the vSphere Client, each VM has a name prefaced with “confluent-gc-md” in the view below and is a node in Kubernetes. Each VM handles multiple Kubernetes pods just as a node does, as needed.

Users familiar with vSphere Client screens will notice the new “Namespaces” managed object within the vSphere Client left navigation. This was designed specifically for managing Kubernetes from vSphere. The VMs’ properties can be viewed in the vSphere Client UI that is familiar to every VMware administrator, shown below. These VMs may be subject to vMotion across host servers and to DRS rules, as they apply to the situation.

The vSphere Client view of host servers and VMs with vSphere 7 with Kubernetes

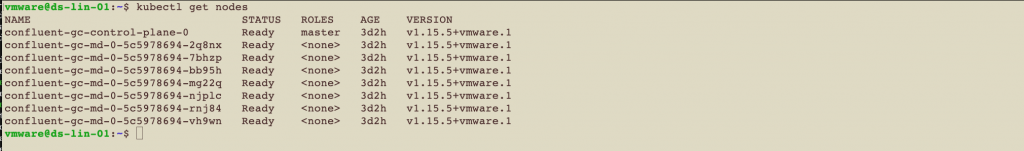

To see these nodes in Kubernetes, issue the command as shown below.

As you can see above, all of the VMs that host the Kubernetes pods are themselves running on a set of ESXi host servers, in our case six machines named “esxi-aa-01.vmware.demo” etc., above. Each host server runs multiple virtual machines concurrently, as is normal in VMware.

Testing the Confluent Operator Installation

Follow the instructions at the Confluent Operator and Platform website to ensure that the Operator and Platform installations are functioning correctly.

https://docs.confluent.io/current/installation/operator/co-deployment.html

There are separate sections described there for intra-Kafka cluster tests and for testing event handling from consumers and producers that are outside of the Kafka cluster. We focus here on the latter, as that is the more comprehensive test of the two. We performed the intra-cluster test before we proceeded to the external testing – and all functionality was correct.

We created a separate Confluent Platform installation that executed independently of our Kubernetes-based Confluent Operator setup, so as to test the TKG cluster deployment. We use the “external” qualifier here to refer to that second Confluent Platform instance.

In our tests, we deployed the external Confluent Platform cluster within the guest operating system in our cluster access VM, which we used earlier to access the Kafka brokers in the Kubernetes-managed cluster. The external Confluent Platform cluster could also be deployed in several different VMs if more scalability were required. We were executing a Kafka functionality health-check only here.

The key parts of the testing process mentioned above were to:

- Install the Confluent Platform in one or more VMs, as shown above.

- Bring up an external Confluent Platform cluster separately from the Kubernetes Operator-based one. This external Confluent Platform/Kafka cluster is configured to run using a “kafka.properties” file. The contents of that file are given in Appendix 3 below. Note that in the kafka.properties file the name of the bootstrap server is that of the Kubernetes-controlled Confluent platform instance. The bootstrap server identifies the access point to the Kafka brokers and load balancers.

- The name of the bootstrap server will need to be set up in the local DNS entries, so that it can be reached by the consuming components.

- In a Kafka consumer-side VM, first set the PATH variable to include the “bin” directory for the external Confluent platform. Then run the command:

```shell
confluent local start
```

This command starts a set of processes for the external Confluent Platform cluster. Note that we did not have to start our Kubernetes Kafka cluster in this way – that was taken care of for us by the Confluent Operator.

The starting status of each component of that new external Kafka cluster is shown.

```
Using CONFLUENT_CURRENT: /tmp/confluent.W5IwLk5K
Starting zookeeper
zookeeper is [UP]
Starting kafka
kafka is [UP]
Starting schema-registry
schema-registry is [UP]
Starting kafka-rest
kafka-rest is [UP]
Starting connect
connect is [UP]
Starting ksql-server
ksql-server is [UP]
Starting control-center
control-center is [UP]
```

Once the external Confluent Platform is up and running, invoke the following command to bring up a Kafka consumer-side process. This process listens for any messages appearing in the topic “example” that is present at the server “kafka.cpbu.lab” on port 9092.

```shell
kafka-console-consumer --topic example --bootstrap-server kafka.cpbu.lab:9092 --consumer.config kafka.properties
```

The following producer process can be run in the same VM as the message consumer, or in a separate VM. In a message producer-side VM, first set the PATH variable to include the “bin” directory for the Confluent Platform. Once that is done, invoke the command:

```shell
seq 1000000 | kafka-console-producer --topic example --broker-list kafka.cpbu.lab:9092 --producer.config kafka.properties
```

The standard Unix “seq” command generates the integers from 1 to 1,000,000, one per line, and “kafka-console-producer” sends each line as a message to the Kubernetes-controlled Kafka brokers at “kafka.cpbu.lab:9092”. Running the consumer and producer processes simultaneously shows a flow of 1 million messages being transported by the Kubernetes-controlled Kafka brokers from the Kafka producer side to the Kafka consumer side. The number of messages sent and received can be changed at will by altering the argument to “seq”.
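Because the test messages are simply the integers 1 to N, the consumer output can be checked mechanically rather than by eye. Here is a minimal sketch of such a check; `check_sequence` is a hypothetical helper, not part of the Confluent tooling. It sorts its input numerically, since ordering across partitions is not guaranteed, and succeeds only if the input is exactly the numbers 1 to N, each seen once.

```shell
#!/bin/sh
# Read message values from stdin and succeed only if they are exactly
# the integers 1..N (in any order), where N is the first argument.
check_sequence() {
  expected="$1"
  sort -n | awk -v n="$expected" '
    $1 == NR { ok++ }                               # line i of sorted input must equal i
    END { exit ((ok == n && NR == n) ? 0 : 1) }     # all N lines present and matching
  '
}

# Example with locally generated data: prints "sequence complete".
seq 100 | check_sequence 100 && echo "sequence complete"
```

One possible use, assuming the installed console consumer supports the `--max-messages` option so that it exits after a fixed count, is to append `--max-messages 1000 | check_sequence 1000` to the consumer command and then run the producer with `seq 1000`.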

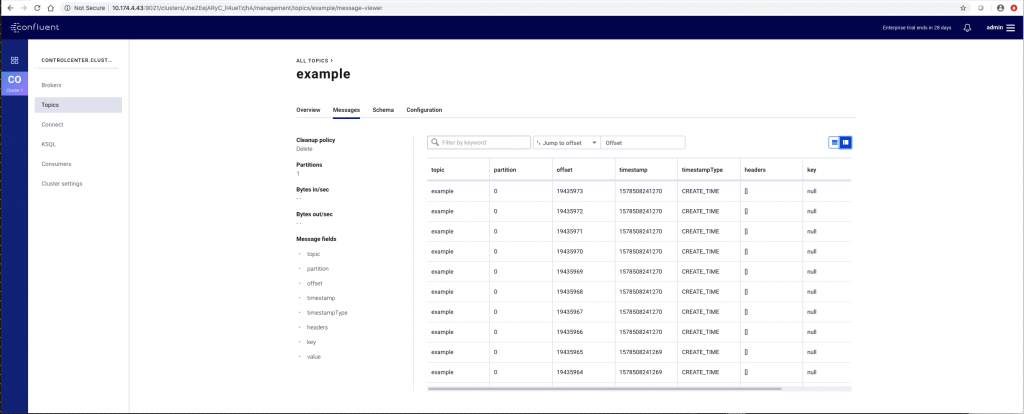

The individual messages that are handled and passed on by the Kafka brokers may be viewed in the Confluent Control Center Console. This was viewed in our tests at:

http://10.174.4.43:9021 (user:admin, p: Developer1)

Here is one view of that Confluent Control Center console, showing messages on an example topic flowing from producer to consumer via the Kafka brokers.

The IP address and port number for the Control Center Console is given in the list of Kubernetes services shown in Appendix 2 below on the line describing the service named as follows:

```
service/controlcenter-0 LoadBalancer 198.63.38.30 10.174.4.43 9021
```
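The Control Center address can be derived from that service line instead of being copied by hand. The sketch below assumes the column order shown above (name, type, cluster IP, external IP, port), with any further columns ignored; `control_center_url` is a hypothetical helper name.

```shell
#!/bin/sh
# Read service listing lines on stdin and print the Control Center URL,
# built from the external IP (4th column) and the port (5th column).
# A port column such as "9021:31234/TCP" is trimmed to the service port.
control_center_url() {
  awk '$1 == "service/controlcenter-0" {
    port = $5
    sub(/:.*/, "", port)     # drop ":nodePort..." if present
    sub(/\/.*/, "", port)    # drop "/TCP" if present
    print "http://" $4 ":" port
  }'
}

# Example using the line from this article: prints http://10.174.4.43:9021
echo "service/controlcenter-0 LoadBalancer 198.63.38.30 10.174.4.43 9021" | control_center_url
```

On a live cluster the input would come from the service listing described in Appendix 2, for example by piping that output into the function.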

Appendix 1: The Edited Helm/Providers/private.yaml file from the Confluent Operator Fileset

Entries in the private.yaml file used for Operator installation that required edits to be done to customize the contents for this validation test are shown in red font below.

```yaml
## Overriding values for Chart's values.yaml
## Example values to run Confluent Operator in Private Cloud
global:
  provider:
    name: private
    ## if any name which indicates regions
    ##
    region: anyregion
    kubernetes:
      deployment:
        ## If kubernetes is deployed in multi zone mode then specify availability-zones as appropriate
        ## If kubernetes is deployed in single availability zone then specify appropriate values
        ## For the private cloud, use kubernetes node labels as appropriate
        zones:
          - myzones
    ## more information can be found here
    ## https://kubernetes.io/docs/concepts/storage/storage-classes/
    storage:
      ## Use Retain if you want to persist data after CP cluster has been uninstalled
      reclaimPolicy: Delete
      provisioner: csi.vsphere.vmware.com
      parameters: {
        svstorageclass: project-pacific-storage
      }
    ##
    ## Docker registry endpoint where Confluent Images are available.
    ##
    registry:
      fqdn: docker.io
      credential:
        required: false
  sasl:
    plain:
      username: test
      password: test123

## Zookeeper cluster
##
zookeeper:
  name: zookeeper
  replicas: 3
  resources:
    requests:
      cpu: 200m
      memory: 512Mi

## Kafka Cluster
##
kafka:
  name: kafka
  replicas: 3
  resources:
    requests:
      cpu: 200m
      memory: 1Gi
  loadBalancer:
    enabled: true
    domain: "cpbu.lab"
  tls:
    enabled: false
    fullchain: |-
    privkey: |-
    cacerts: |-
  metricReporter:
    enabled: false

## Connect Cluster
##
connect:
  name: connectors
  replicas: 2
  tls:
    enabled: false
    ## "" for none, "tls" for mutual auth
    authentication:
      type: ""
    fullchain: |-
    privkey: |-
    cacerts: |-
  loadBalancer:
    enabled: false
    domain: ""
  dependencies:
    kafka:
      bootstrapEndpoint: kafka:9071
      brokerCount: 3
    schemaRegistry:
      enabled: true
      url: http://schemaregistry:8081

## Replicator Connect Cluster
##
replicator:
  name: replicator
  replicas: 2
  tls:
    enabled: false
    authentication:
      type: ""
    fullchain: |-
    privkey: |-
    cacerts: |-
  loadBalancer:
    enabled: false
    domain: ""
  dependencies:
    kafka:
      brokerCount: 3
      bootstrapEndpoint: kafka:9071

##
## Schema Registry
##
schemaregistry:
  name: schemaregistry
  tls:
    enabled: false
    authentication:
      type: ""
    fullchain: |-
    privkey: |-
    cacerts: |-
  loadBalancer:
    enabled: false
    domain: ""
  dependencies:
    kafka:
      brokerCount: 3
      bootstrapEndpoint: kafka:9071

##
## KSQL
##
ksql:
  name: ksql
  replicas: 2
  tls:
    enabled: false
    authentication:
      type: ""
    fullchain: |-
    privkey: |-
    cacerts: |-
  loadBalancer:
    enabled: false
    domain: ""
  dependencies:
    kafka:
      brokerCount: 3
      bootstrapEndpoint: kafka:9071
      brokerEndpoints: kafka-0.kafka:9071,kafka-1.kafka:9071,kafka-2.kafka:9071
    schemaRegistry:
      enabled: false
      tls:
        enabled: false
        authentication:
          type: ""
      url: http://schemaregistry:8081

## Control Center (C3) Resource configuration
##
controlcenter:
  name: controlcenter
  license: ""
  ##
  ## C3 dependencies
  ##
  dependencies:
    c3KafkaCluster:
      brokerCount: 3
      bootstrapEndpoint: kafka:9071
      zookeeper:
        endpoint: zookeeper:2181
    connectCluster:
      enabled: true
      url: http://connectors:8083
    ksql:
      enabled: true
      url: http://ksql:9088
    schemaRegistry:
      enabled: true
      url: http://schemaregistry:8081
  ##
  ## C3 External Access
  ##
  loadBalancer:
    enabled: false
    domain: ""
  ##
  ## TLS configuration
  ##
  tls:
    enabled: false
    authentication:
      type: ""
    fullchain: |-
    privkey: |-
    cacerts: |-
  ##
  ## C3 authentication
  ##
  auth:
    basic:
      enabled: true
      ##
      ## map with key as user and value as password and role
      property:
        admin: Developer1,Administrators
        disallowed: no_access
```

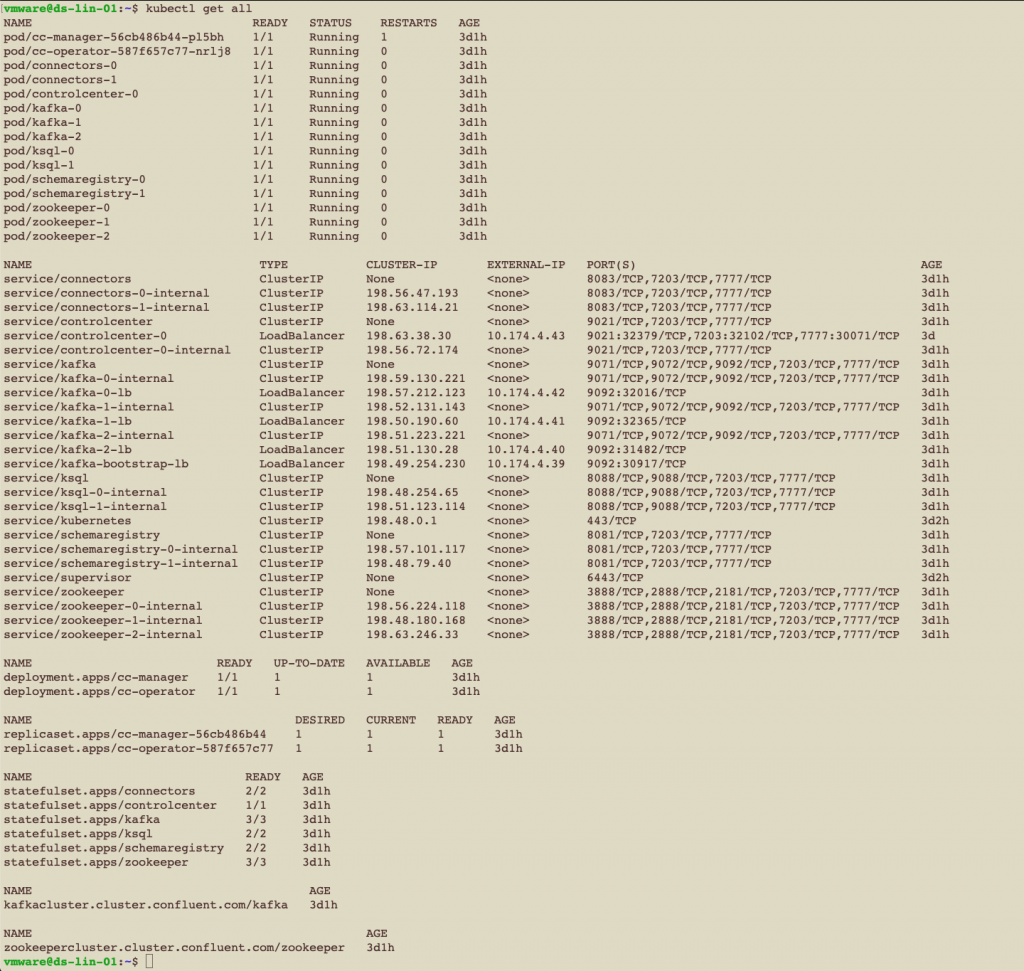

Appendix 2 – Checking the Status of all Confluent Components in a TKG Cluster

Using the cluster access VM with the TKG cluster, issue the kubectl command below. We then see output that is similar to that below. This output shows a healthy set of Kubernetes pods, services, deployments, replicasets, statefulsets and other objects from the Confluent Platform, as deployed and running under the Operator’s control.

```shell
kubectl get all
```

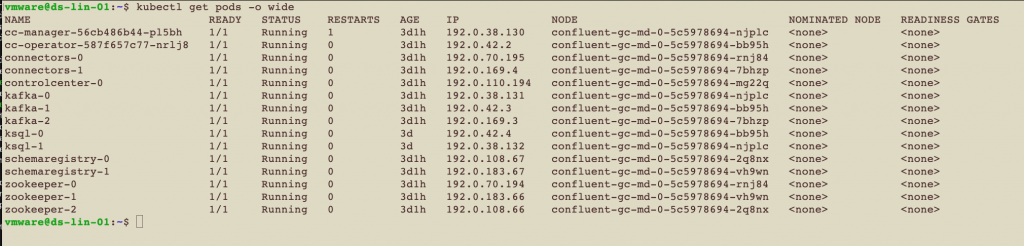

Multiple pods are running within each Kubernetes node. Those pods are seen in the output from the command shown here along with the nodes (VMs) that host them:

Appendix 3: The Properties File for the External Confluent Platform Kafka Cluster Used for Testing

External testing of a Confluent Platform deployment is described in more detail in the Installation page for the Confluent Operator: https://docs.confluent.io/current/installation/operator/co-deployment.html

A file named “kafka.properties” is used in the testing of the Kubernetes-based Kafka Brokers. This is done from a second Confluent Platform deployment that is external to the original Kubernetes-based Kafka cluster we deployed using the Operator. This file is in the local directory from which the “confluent local start” command is issued to invoke that external Kafka cluster. The kafka.properties file has the following contents:

```
bootstrap.servers=kafka.cpbu.lab:9092
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="test" password="test123";
sasl.mechanism=PLAIN
security.protocol=SASL_PLAINTEXT
```
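Since the bootstrap server name has to be resolvable from the consumer and producer VMs, a quick pre-flight check can save some debugging time. The sketch below is an illustration only: `bootstrap_host` and `host_resolves` are hypothetical helper names, and the resolution check assumes a Linux guest where the `getent` utility is available.

```shell
#!/bin/sh
# Print the host part of the first bootstrap.servers entry in a Kafka
# client properties file (the text between "=" and the first ":" or ",").
bootstrap_host() {
  sed -n 's/^bootstrap\.servers=\([^:,]*\).*/\1/p' "$1"
}

# Succeed if the given host name resolves on this machine.
# Assumption: getent is installed, as on most Linux distributions.
host_resolves() {
  getent hosts "$1" >/dev/null 2>&1
}

# Example usage, run from the directory holding kafka.properties:
#   host="$(bootstrap_host kafka.properties)"
#   host_resolves "$host" || echo "No DNS or hosts entry found for $host"
```

With the file contents shown above, `bootstrap_host kafka.properties` prints `kafka.cpbu.lab`, which is the name that needs an entry in the local DNS.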