Deep Reinforcement Learning

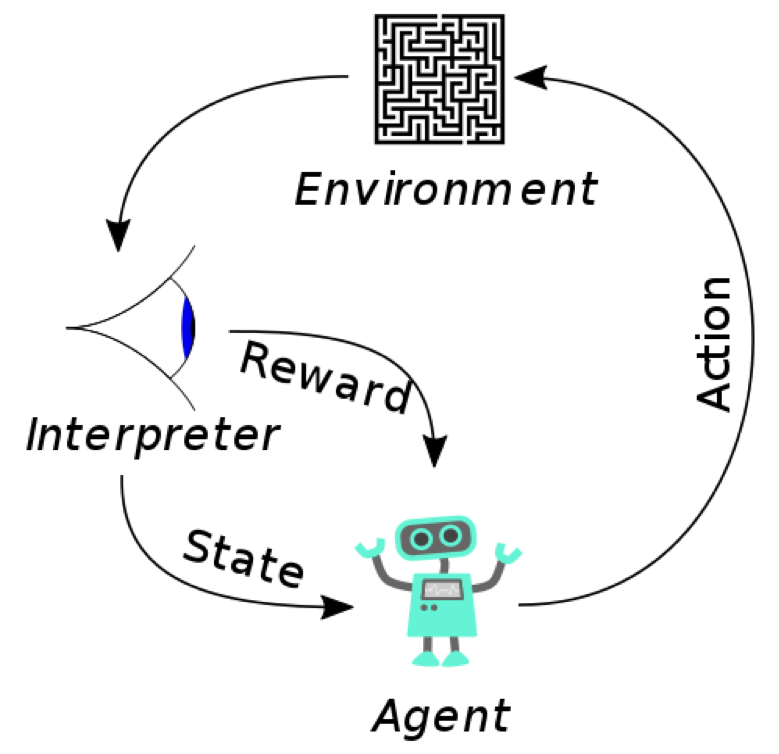

Humans excel at solving a wide variety of challenging problems, from low-level motor control through to high-level cognitive tasks. Deep reinforcement learning (DRL) is an area of machine learning in which an agent takes actions in an environment to maximize its cumulative reward. DRL learns by trial and error, solely from rewards or punishments. Reinforcement learning is one of three basic machine learning paradigms, along with supervised learning and unsupervised learning. DRL seeks a balance between exploration (trying uncharted territory) and exploitation (using existing knowledge). It models the problem as a Markov decision process and combines reinforcement learning with deep neural networks.
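The two ideas above, cumulative reward and the exploration/exploitation trade-off, can be sketched in a few lines of Python. This is a minimal illustration rather than the code used in the solution: the function names, the discount factor `gamma`, and the exploration rate `epsilon` are assumptions introduced here.

```python
import random


def discounted_return(rewards, gamma=0.99):
    """Cumulative reward of one episode, discounting later rewards by gamma."""
    total = 0.0
    # Walk backwards so each step adds its reward to the discounted future.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick an action index from a list of estimated action values.

    With probability epsilon the agent explores (random action);
    otherwise it exploits the action with the highest estimated value.
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # exploration
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploitation
```

A common design choice is to start with a high `epsilon` and lower it as training progresses, so the agent explores early and exploits what it has learned later.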

Figure 1: Markov Decision Process

Anaconda:

Anaconda is popular with data scientists for deploying AI and machine learning projects into production at scale. It contains packages in the Python and R programming languages for scientific computing (data science, machine learning applications, large-scale data processing, predictive analytics, etc.) and simplifies package management and deployment. The Anaconda distribution includes data-science packages for Windows, Linux, and macOS.

The Solution:

The goal of the solution is to showcase virtualized deep reinforcement learning that leverages Bitfusion to share NVIDIA GPUs. The components used in the solution include:

- Anaconda4 running on an Ubuntu 16.04 virtual machine

- Bitfusion FlexDirect client to access GPU resources over the network

- Deep Q Learning code to create a trained model for playing Doom with PyTorch

- CPU-only machine used for model deployment, running Doom before and after training the model as an exercise in inference
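As background for the Deep Q Learning component listed above, the core of Q-learning is a one-step target that a value estimate is pulled toward. The sketch below shows that target in plain Python; the function names and the default `gamma` and `learning_rate` values are illustrative assumptions, not the solution's actual training code.

```python
def td_target(reward, next_q_values, done, gamma=0.99):
    """One-step Q-learning target: r + gamma * max over a' of Q(s', a').

    When the episode has ended (done), there is no future value to add.
    """
    if done:
        return reward
    return reward + gamma * max(next_q_values)


def q_update(q_sa, target, learning_rate=0.1):
    """Tabular update: move the estimate Q(s, a) a step toward the target."""
    return q_sa + learning_rate * (target - q_sa)
```

In deep Q-learning the table is replaced by a neural network, and the network is trained to reduce the gap between its prediction for the chosen action and this target.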

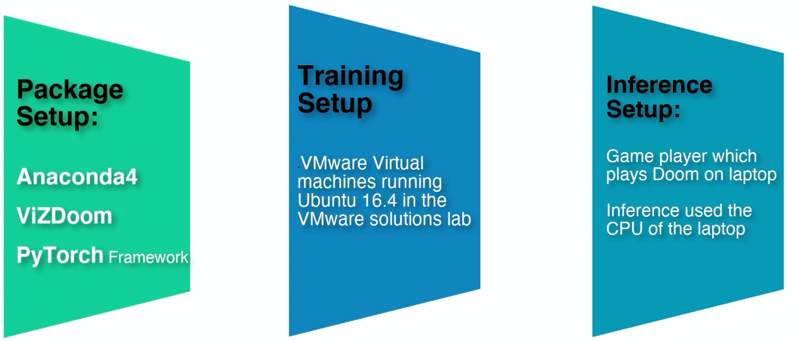

Package Setup:

The solution was run on a VMware Ubuntu VM. The components of the solution include:

- Anaconda4 allows setting up isolated Python environments, each with its own set of libraries (which may be Python-based or based on other languages)

- ViZDoom allows developing AI bots that play Doom using only the visual information (the screen buffer). It is primarily intended for research in machine visual learning, and deep reinforcement learning in particular. ViZDoom is based on ZDoom, which provides the game mechanics.

- PyTorch is an open source machine learning framework that accelerates the path from research prototyping to production deployment.

Figure 2: Solution components

Training Setup

- VMware VM running Ubuntu 16.04 on-premises in the Santa Clara datacenter

- Training leveraged Bitfusion-based access to GPU resources over the network within the same datacenter

Inference Setup

- Inference in this case is a game player that plays a simple version of the game Doom and runs on a Windows laptop

- This laptop uses only the CPU to play Doom with the model generated in the training phase

Leveraging ViZDoom for Deep Reinforcement Learning:

ViZDoom is used in the solution for AI bots that play Doom with only the available visual information, represented by the screen buffer. ViZDoom is primarily intended for research in machine visual learning, and deep reinforcement learning in particular. It is based on ZDoom, which provides the game mechanics. The ViZDoom API is reinforcement-learning friendly and is also suitable for learning from demonstration, apprenticeship learning, apprenticeship via inverse reinforcement learning, etc.
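To give a feel for how an agent interacts with ViZDoom through the screen buffer, here is a minimal sketch of a single episode using the ViZDoom Python bindings. It is not the solution's training script: `build_actions`, `play_episode`, the `choose_action` callback, the `basic.cfg` scenario, and the `frame_repeat` value are assumptions made for illustration, and the sketch assumes a ViZDoom version that exposes `vizdoom.scenarios_path`.

```python
import itertools
import os


def build_actions(n_buttons):
    """Every on/off combination of the available buttons, as lists of 0/1."""
    return [list(combo) for combo in itertools.product([0, 1], repeat=n_buttons)]


def play_episode(choose_action, config_name="basic.cfg", frame_repeat=12):
    """Play one episode and return its total reward.

    choose_action(frame, actions) is a hypothetical callback that returns
    an index into actions, for example an epsilon-greedy policy.
    """
    # Imported lazily so build_actions can be used without ViZDoom installed.
    import vizdoom as vzd

    game = vzd.DoomGame()
    # Assumption: the bundled scenario files live under vzd.scenarios_path.
    game.load_config(os.path.join(vzd.scenarios_path, config_name))
    game.set_window_visible(False)  # headless run, suitable for a server VM
    game.init()

    actions = build_actions(game.get_available_buttons_size())
    game.new_episode()
    while not game.is_episode_finished():
        frame = game.get_state().screen_buffer  # the only input the agent sees
        index = choose_action(frame, actions)
        game.make_action(actions[index], frame_repeat)

    total_reward = game.get_total_reward()
    game.close()
    return total_reward
```

For example, `play_episode(lambda frame, actions: 0)` would run an episode in which the agent always picks the first action, which is a quick way to check that the environment is installed correctly.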

Figure 3: Reinforcement learning agent shooting a monster in ViZDoom

Training Process:

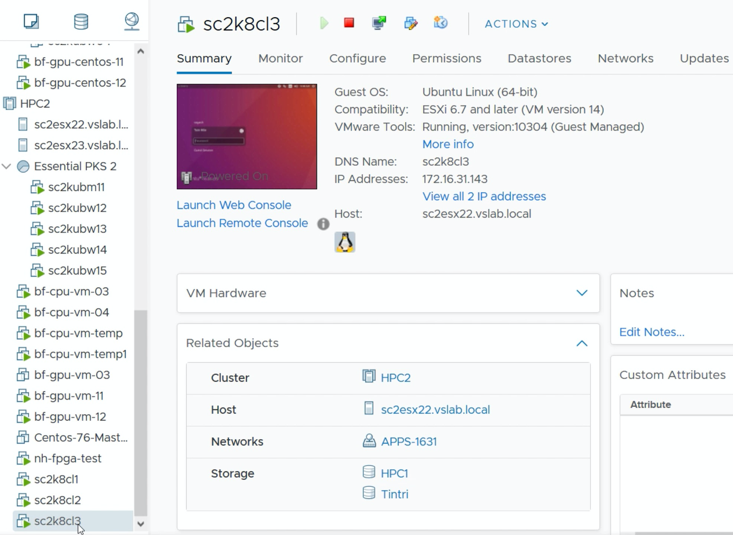

Figure 4: Virtual infrastructure used for training

Training for ViZDoom was initially performed with only the CPUs available in the virtual machine. The virtual machine used to train the model is shown.

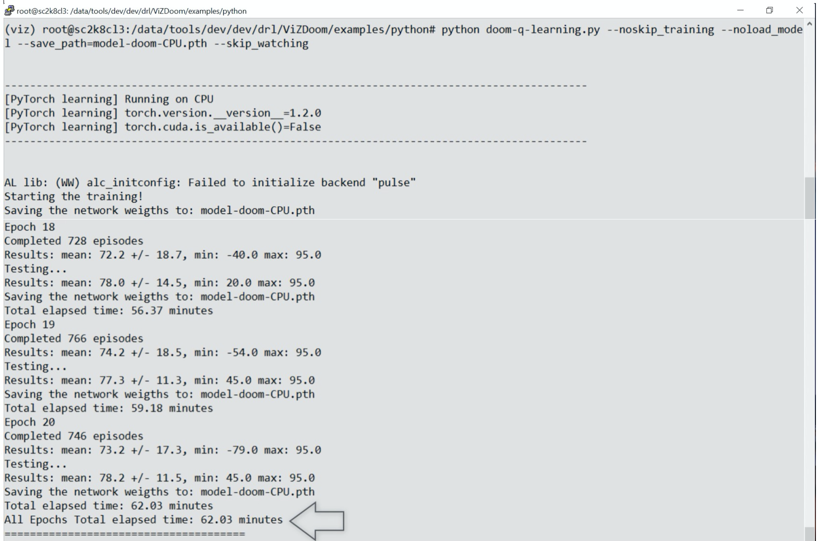

Figure 5: DRL Training run on CPUs

With CPU-only training, the deep reinforcement learning training runs took between 62 and 85 minutes. One of the runs is shown with some of its epochs.

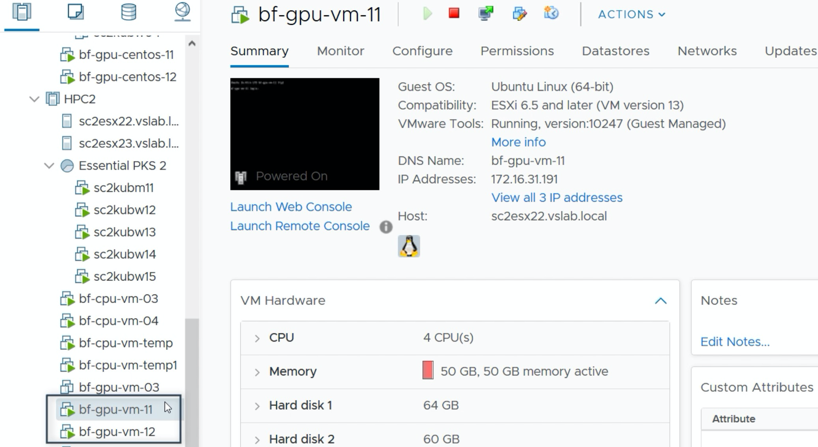

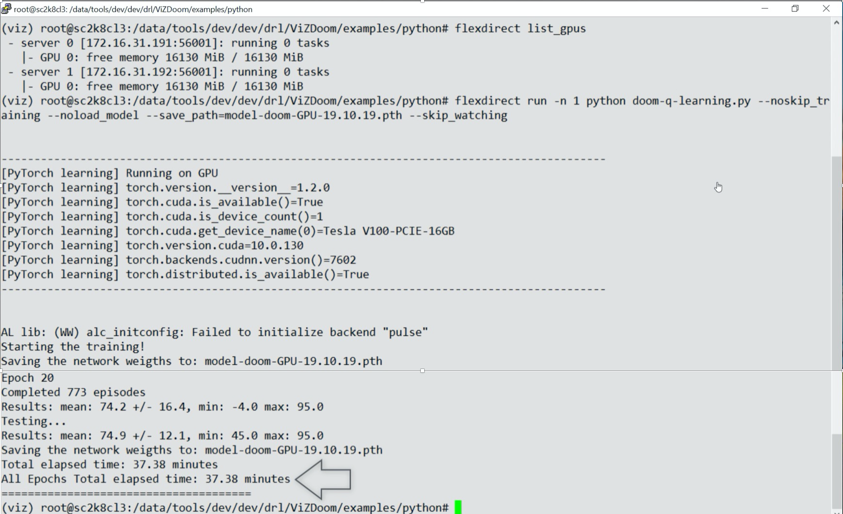

In the second phase, the virtual machine was given access to NVIDIA GPUs through VMware Bitfusion, and training leveraged those GPUs. Bitfusion uses a client-server model, with the GPUs accessed over the network. One of the virtual machines servicing GPU requests is shown below.

Figure 6: GPU servers used for Training

The training phase leveraging GPUs is shown below with some of the epochs. Training with GPUs took only half the time of CPU-only training.

Figure 7: DRL Training run on GPUs

Inference:

Basic Model:

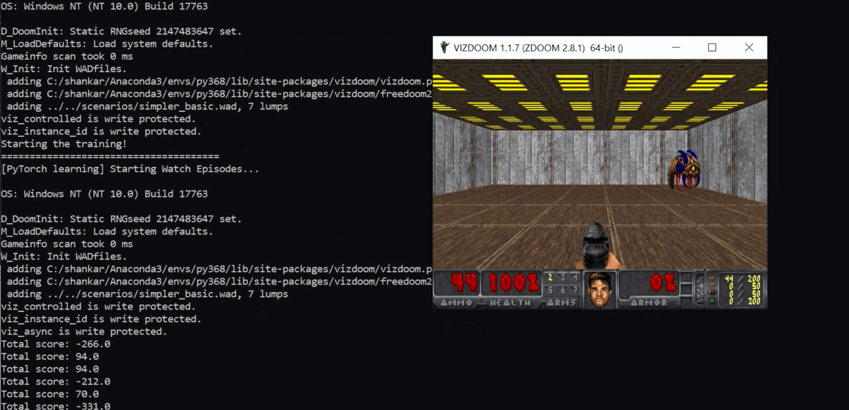

Inference runs on a laptop, where a model plays ViZDoom. A run that uses a simple model without any deep reinforcement learning is shown below.

Figure 8: Training game with simple model

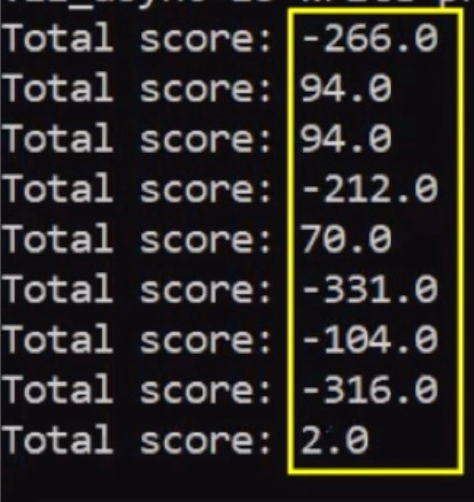

With the simple model, the computer plays the game with dismal accuracy, as shown in the results below.

Figure 9: Game Scores from simple model

Deep Reinforcement Learning model:

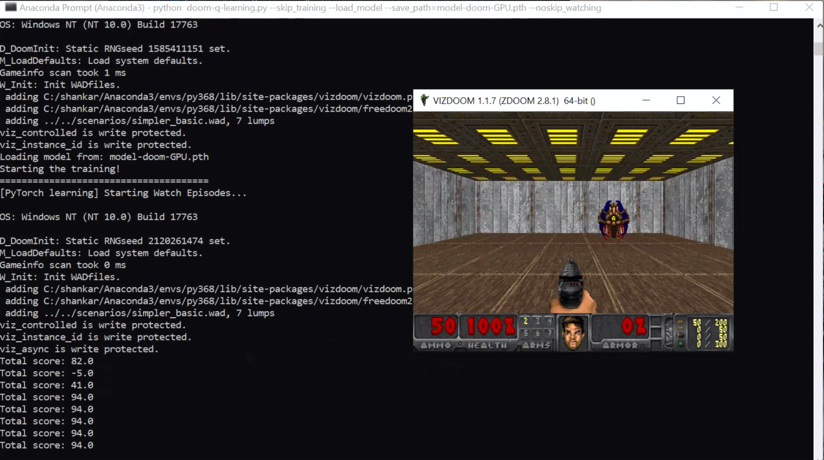

The model that was trained using deep reinforcement learning was used by the computer to play ViZDoom.

Figure 10: Training game with DRL model

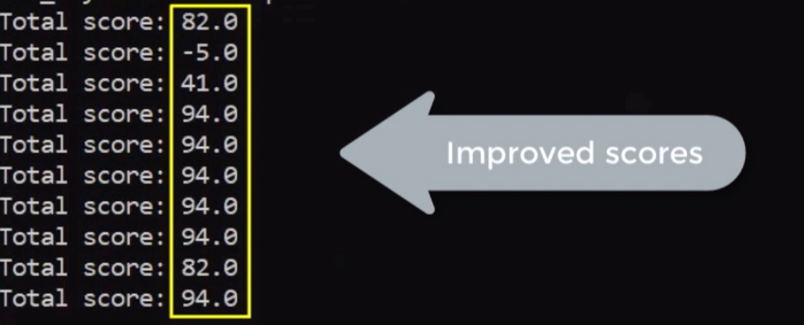

The soundness of the model is evident in the results, which show the computer playing the game with great accuracy.

Figure 11: Game Scores from DRL model

Summary:

In this solution, we showed a virtualized environment used for deep reinforcement learning. The ViZDoom game was played with a simple model and then with the DRL-based model, and the results were compared. The training phase was run first with traditional CPUs and then with GPUs accessed over the network through Bitfusion. The capabilities of leveraging GPUs for deep reinforcement learning were showcased in a vSphere-based virtualized environment. Anaconda, a preferred platform for data scientists, was demonstrated running on vSphere for training, evaluation, and inference in the DRL workflow.