Introduction

While virtualization technologies have proven themselves in the enterprise by delivering cost-effective, scalable, and reliable IT computing, Machine Learning (ML) has not followed suit and is still bound to dedicated physical resources to obtain predictable runtimes and maximum performance. VMware and Bitfusion have developed technologies to effectively share accelerators for machine learning over the network.

NVIDIA V100 GPUs for Machine Learning

With the impending end of Moore’s law, the spark fueling the current revolution in deep learning is having enough compute horsepower to train neural-network-based models in a reasonable amount of time.

The needed compute horsepower is derived largely from GPUs, which NVIDIA has been optimizing for deep learning since 2012. One of the latest in this family of GPU processors is the NVIDIA Tesla V100.

Figure 1: The NVIDIA V100 GPU

NVIDIA® Tesla® V100 Tensor Core is the most advanced data center GPU ever built to accelerate AI, high performance computing (HPC), data science and graphics. It’s powered by NVIDIA Volta architecture, comes in 16 and 32GB configurations, and offers the performance of up to 100 CPUs in a single GPU. Data scientists, researchers, and engineers can now spend less time optimizing memory usage and more time designing the next AI breakthrough. (Source: NVIDIA)

Bitfusion FlexDirect

With Bitfusion FlexDirect, which will evolve to become part of vSphere, NVIDIA GPU accelerators can now be part of a common infrastructure resource pool, available for use by any virtual machine in the data center in full or partial configurations, attached over the network. The solution works with any type of GPU server and any networking configuration such as TCP, RoCE or InfiniBand. GPU infrastructure can now be pooled together to offer an elastic GPU as a service, enabling dynamic assignment of GPU resources based on an organization’s business needs and priorities. Bitfusion FlexDirect runs in the user space and doesn’t require any changes to the OS, drivers, kernel modules or AI frameworks. (Source: Bitfusion)

Natural Language Processing

Natural Language Processing, usually shortened as NLP, is a branch of artificial intelligence that deals with the interaction between computers and humans using the natural language. The ultimate objective of NLP is to read, decipher, understand, and make sense of the human languages in a manner that is valuable. (Source: BecomingHuman.ai)

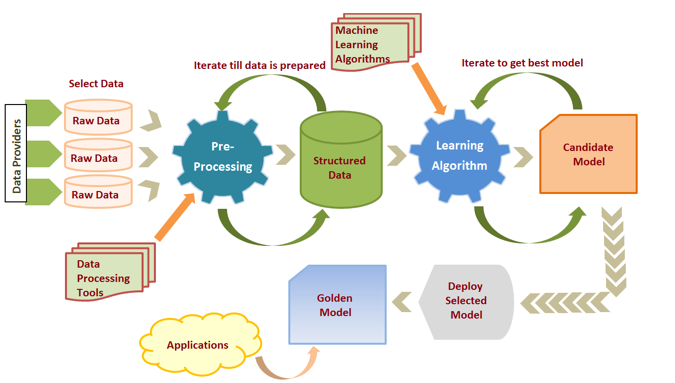

The Machine Learning Process:

Machine learning leverages existing data to learn patterns and predict outcomes without relying on human judgment or manually written rules. The processing steps in machine learning include:

- Data gathering

- Data preparation

- Choice of model

- Training

- Model validation

- Hyperparameter tuning

- Inference

Figure 2: Machine Learning Pipeline. (Source: eLearning Industry)
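As a rough illustration of how these stages fit together, the following minimal Python sketch runs each one on a toy, hand-written dataset. The word-score model, the `min_count` hyperparameter, the sample reviews, and all function names are assumptions made for illustration; they are not the models or tools used in this solution.

```python
from collections import Counter


def gather_data():
    # Data gathering: hand-written (text, label) pairs; 1 = positive, 0 = negative.
    train = [
        ("a great and moving film", 1),
        ("great cast and a wonderful story", 1),
        ("I loved it", 1),
        ("a dull and boring film", 0),
        ("boring plot and awful acting", 0),
        ("I hated it", 0),
    ]
    validation = [
        ("wonderful film, I loved it", 1),
        ("awful film, I hated it", 0),
    ]
    return train, validation


def prepare(text):
    # Data preparation: split on whitespace, strip punctuation, lowercase.
    return [w.strip(".,!?").lower() for w in text.split()]


def train_model(examples, min_count):
    # Choice of model + training: score each word by (positive - negative)
    # occurrences, keeping only words seen at least min_count times.
    pos, neg = Counter(), Counter()
    for text, label in examples:
        (pos if label == 1 else neg).update(prepare(text))
    vocab = set(pos) | set(neg)
    return {w: pos[w] - neg[w] for w in vocab if pos[w] + neg[w] >= min_count}


def predict(model, text):
    # Inference: a positive total score means positive sentiment.
    score = sum(model.get(w, 0) for w in prepare(text))
    return 1 if score > 0 else 0


def accuracy(model, examples):
    # Model validation: fraction of held-out examples classified correctly.
    return sum(predict(model, t) == y for t, y in examples) / len(examples)


def run_pipeline():
    train_set, val_set = gather_data()
    # Hyperparameter tuning: keep the min_count with the best validation accuracy.
    best = max((1, 2), key=lambda m: accuracy(train_model(train_set, m), val_set))
    model = train_model(train_set, best)
    return model, best, accuracy(model, val_set)


model, best_min_count, val_accuracy = run_pipeline()
print(best_min_count, val_accuracy)
print(predict(model, "a great story"))
```

A real pipeline would replace the word-score model with a trained neural network and the two-value search with a proper tuning strategy, but the order of the stages stays the same.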

The Solution:

This solution showcases the use of VMware Essential PKS for end-to-end machine learning. In this solution, natural language processing is used to develop an accurate sentiment model for movie reviews. The data gathering and preparation phases are already covered by the IMDB dataset, so the solution addresses the training, validation, tuning, and inference phases of the ML pipeline.

VMware Essential PKS:

There are hundreds of tools in the cloud native ecosystem, and new ones are rapidly emerging from the open-source community. Building flexibility into infrastructure is key to adopting new technologies and running workloads anywhere: on premises, in public clouds, or in a hybrid-cloud environment. VMware Essential PKS provides upstream Kubernetes binaries and expert support, enabling enterprises to build a flexible, cost-effective cloud native platform.

VMware Essential PKS offers the following core advantages:

- Provides access to the energy and innovation stemming from the open source community

- Provides the flexibility to run Kubernetes across public clouds and on-premises infrastructure

- Can grow the cluster count from tens to hundreds to thousands without fear of spiraling costs

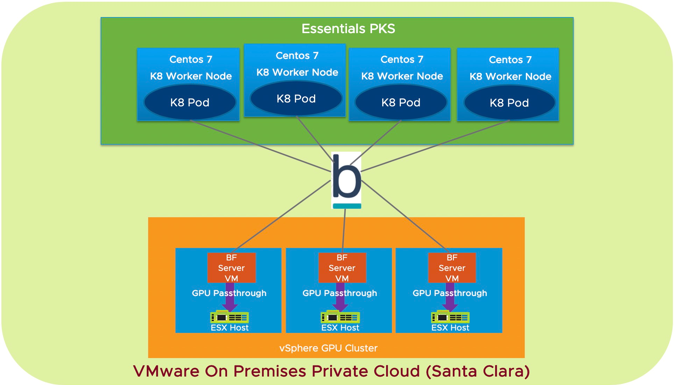

Solution Architecture:

Typically, an enterprise aggregates all of its data into a data lake or database in a centralized on-premises datacenter with advanced computing capabilities. To simulate this situation, this solution performs the training, evaluation, and tuning phases in an on-premises virtualized datacenter with NVIDIA GPU compute capability.

Figure 3: Logical Schematic of the Training Infrastructure

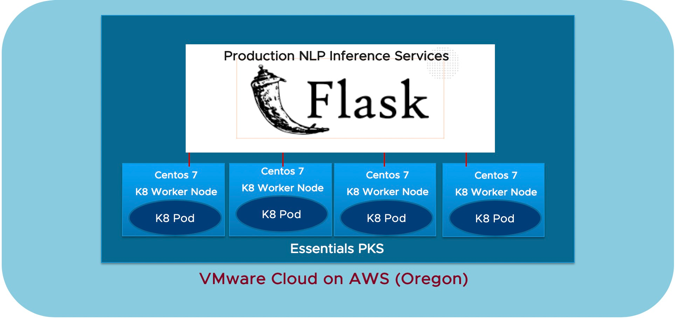

For a large enterprise, inference typically happens at edge locations, which usually have limited compute capabilities. In this solution, a remote VMware Cloud on AWS location with CPU-only virtual machines serves as the edge location for inference.

Figure 4: Logical Schematic of the Inference Infrastructure

Newer, more accurate models are regularly transferred from the training site to the inference site. Data that was misclassified at the production inference site is transferred back to the training site, where it is used to make the model more accurate.
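The feedback loop between the two sites can be sketched in a few lines of Python. This is a minimal sketch, assuming the inference site receives corrected labels for some of its predictions (how those labels are obtained is not covered here); the function names and the keyword-based stub model are hypothetical.

```python
def collect_misclassified(predict, feedback):
    """Inference site: keep the (text, true_label) pairs the model got wrong."""
    return [(text, label) for text, label in feedback if predict(text) != label]


def merge_into_training_set(training_set, corrections):
    """Training site: append corrections that are not already present."""
    seen = set(training_set)
    merged = list(training_set)
    for example in corrections:
        if example not in seen:
            merged.append(example)
            seen.add(example)
    return merged


def stub_predict(text):
    # Hypothetical stand-in for the deployed model: keyword match only.
    return 1 if "good" in text else 0


# Assumed feedback: reviews paired with their corrected labels (1 = positive).
feedback = [("good movie", 1), ("not good at all", 0), ("terrible", 0)]
corrections = collect_misclassified(stub_predict, feedback)
training_set = merge_into_training_set([("good movie", 1)], corrections)
```

Only the misclassified reviews travel back to the training site, which keeps the transfer small compared with shipping all inference traffic.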

The components of the solution include the following:

Virtual Infrastructure Components:

The virtual infrastructure used to build the solution is shown below:

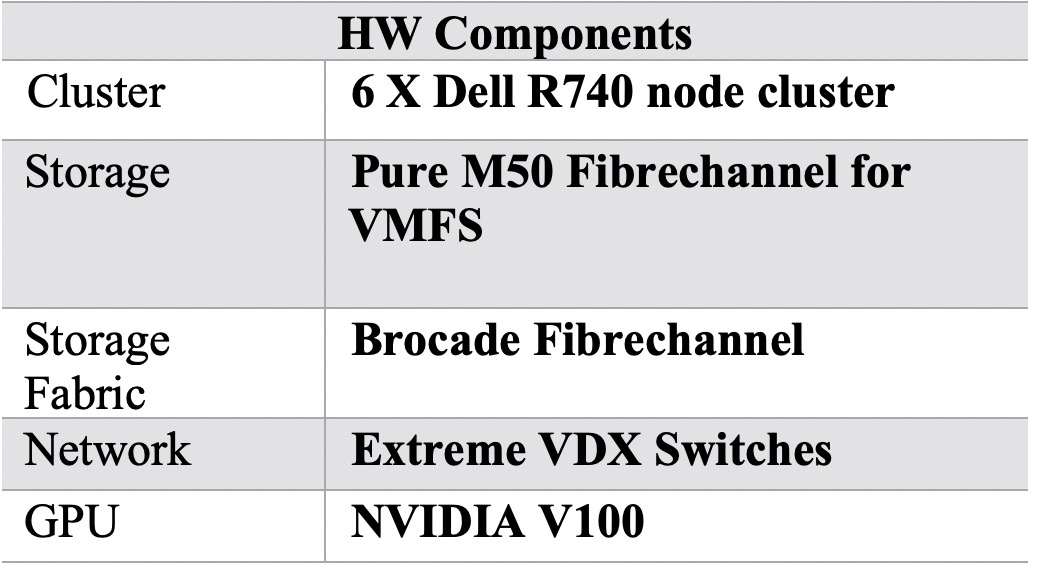

Table 1: Hardware components of the solution

The VMware SDDC and other software components used in the solution are shown below:

Table 2: Software components of the solution

Logical Architecture of Solution Deployed:

Essential PKS provided the framework to create Kubernetes clusters that work seamlessly with the VMware SDDC components. A logical schematic of the Kubernetes clusters for training on-premises and inference in the cloud is shown below.

Figure 5: Logical Schematic of Solution

Summary of steps for Solution Deployment:

- VMware Essential PKS was installed on a vSphere cluster with six Dell R740 servers.

- Two of these servers each had one NVIDIA V100 GPU card.

- The FlexDirect server was deployed on two Linux virtual machines, each attached to an NVIDIA GPU.

- A Kubernetes cluster with four worker nodes and one master was created with Essential PKS.

- Docker images were created with the components shown in Appendix A.

- Paddle (Parallel Distributed Deep Learning), an easy-to-use, efficient, flexible, and scalable deep learning platform, was deployed.

- Flask, a micro web framework written in Python, was installed at the inference site.

- MLPerf is a benchmark suite for measuring how fast systems can train models to a target quality metric. Each MLPerf Training benchmark is defined by a Dataset and Quality Target.

- Sentiment Analysis is a binary classification task. It predicts positive or negative sentiment using raw user text. The IMDB dataset is used for this benchmark.

- The IMDB dataset was obtained from http://ai.stanford.edu/~amaas/data/sentiment/
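To make the last three items more concrete, here is a minimal Python sketch of two helpers. The first reads one split of the extracted dataset archive, which unpacks into an `aclImdb` directory with `train` and `test` folders, each holding `pos` and `neg` subfolders with one review per `.txt` file. The second measures how long training takes to reach a target quality, in the spirit of the MLPerf metric. The function names, the `root` argument, and the `train_one_epoch` and `evaluate` callables are assumptions for illustration; this is not the MLPerf harness or the code used in this solution.

```python
import time
from pathlib import Path


def load_imdb_split(root, split):
    """Read one split ("train" or "test") under the extracted aclImdb directory.

    Returns a list of (review_text, label) pairs; 1 = positive, 0 = negative.
    """
    examples = []
    for label_name, label in (("pos", 1), ("neg", 0)):
        folder = Path(root) / split / label_name
        for path in sorted(folder.glob("*.txt")):
            examples.append((path.read_text(encoding="utf-8"), label))
    return examples


def time_to_target(train_one_epoch, evaluate, target, max_epochs):
    """Train until evaluate() reaches target.

    train_one_epoch and evaluate are caller-supplied callables (assumed here).
    Returns (epochs_run, elapsed_seconds, target_reached).
    """
    start = time.perf_counter()
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        if evaluate() >= target:
            return epoch, time.perf_counter() - start, True
    return max_epochs, time.perf_counter() - start, False
```

With the archive extracted locally, a call such as `load_imdb_split("aclImdb", "train")` would return the labeled training reviews, assuming that is where the archive was unpacked.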

In Part 2, we will look at the deployment and workings of the solution.