In part 1, we looked at two options for sharing GPUs: NVIDIA vGPU and Bitfusion. We also discussed the Bitfusion architecture and its primary use cases. In this second part, we describe our performance testing methodology and present Bitfusion results for three different networking options for remote GPU usage.

Testing Methodology

In this study, we measured the performance of deep learning workloads that use a remote GPU through Bitfusion and compared it with the same workloads running directly on the GPU without Bitfusion. Specifically, we tested the following cases:

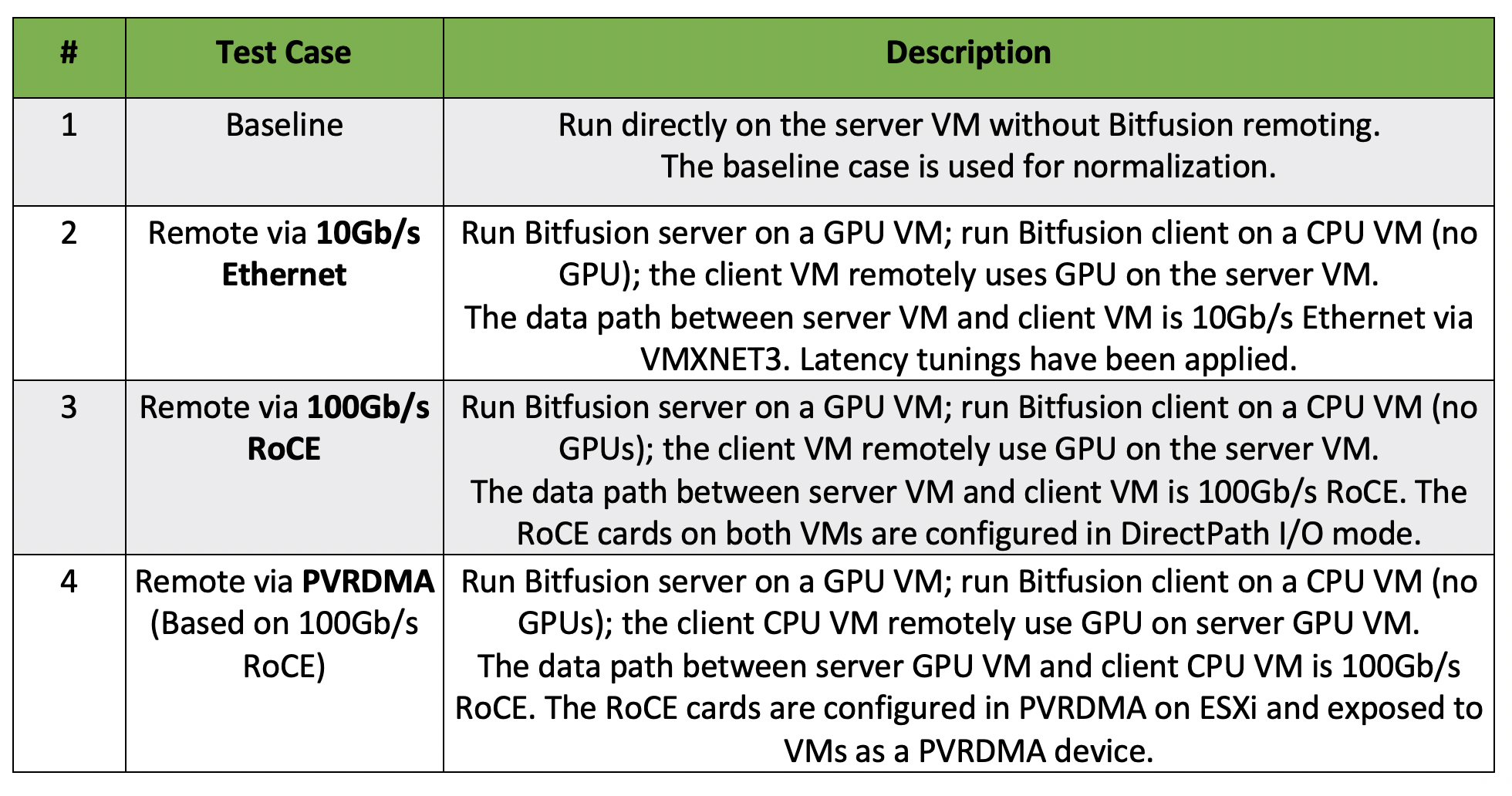

- Baseline tests are run in a VM on a GPU server, with the GPU configured in DirectPath I/O (Passthrough) mode. Because this scenario has no Bitfusion remote networking overhead, it serves as the baseline for performance normalization. In our previous study, we demonstrated that Passthrough performance matches bare-metal performance for several HPC and ML workloads.

- In the Bitfusion tests, the client VM (without a GPU) runs on a different ESXi host from the server VM and connects to it through one of the following three networking options:

- 10 Gb/s VMXNET3

- 100 Gb/s RDMA over Converged Ethernet (RoCE), configured in DirectPath I/O mode

- 100 Gb/s PVRDMA (RoCE)

The normalized performance of the three networking options against the baseline case will be presented in the Results section.

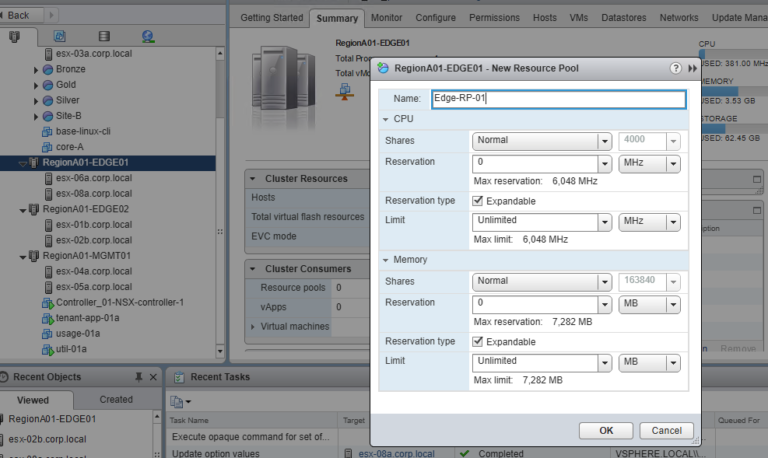

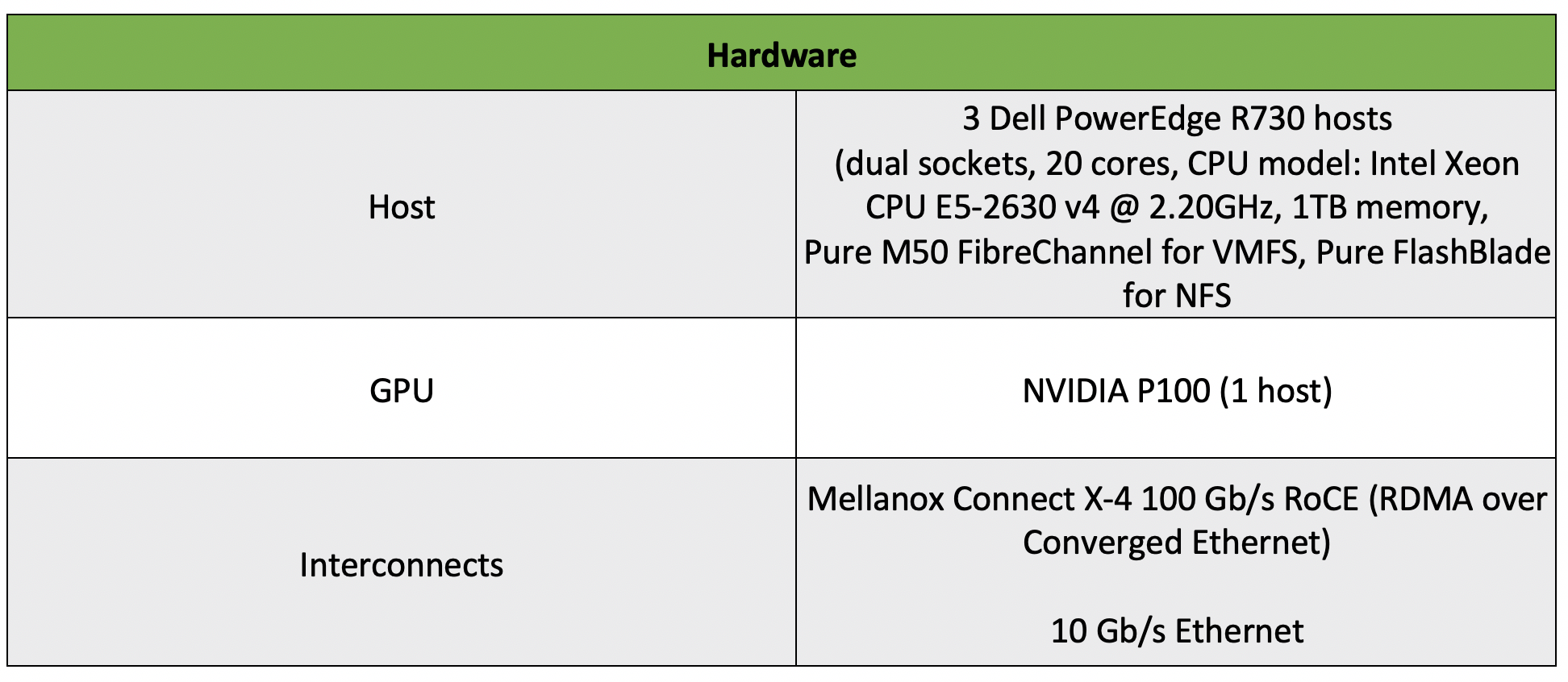

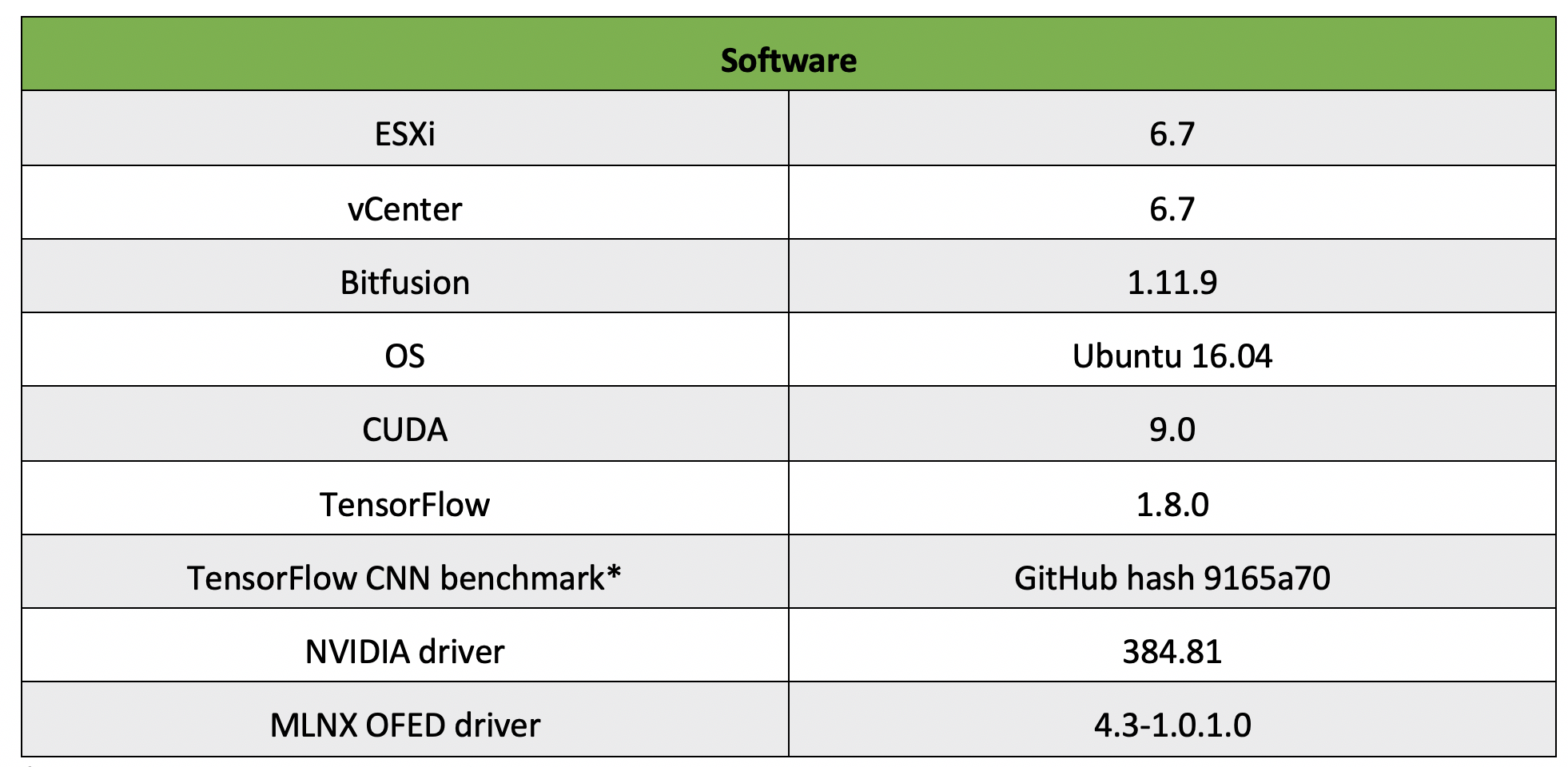

Table 1 summarizes the test cases, and Tables 2 and 3 summarize the hardware and software used in this testing.

Table 1: Test Cases

Table 2: Hardware for Testing

Table 3: Software for Testing

*: The testing was done with tf_cnn_benchmarks, a TensorFlow benchmark suite that contains implementations of several popular convolutional neural network models. In this testing, we used the inception3 and resnet50 models with the ImageNet dataset; both are representative deep learning models.
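To make the test matrix concrete, the sketch below builds one tf_cnn_benchmarks command line per model and batch size for a single GPU. It uses the benchmark's `--model`, `--batch_size`, `--num_gpus`, `--data_name`, and `--data_dir` flags; the batch sizes and the data path are hypothetical placeholders, not the values used in this study. The study does not show how the Bitfusion client wraps the command on the client VM, so that step is deliberately left out.

```python
import itertools
import shlex

MODELS = ["inception3", "resnet50"]  # the two models named in this study
BATCH_SIZES = [32, 64, 128]          # hypothetical batch sizes, for illustration only
DATA_DIR = "/data/imagenet"          # hypothetical path to ImageNet TFRecords


def benchmark_command(model: str, batch_size: int) -> list:
    """Build one single-GPU tf_cnn_benchmarks invocation as an argument list."""
    return [
        "python", "tf_cnn_benchmarks.py",
        f"--model={model}",
        f"--batch_size={batch_size}",
        "--num_gpus=1",
        "--data_name=imagenet",
        f"--data_dir={DATA_DIR}",
    ]


# Print every (model, batch size) combination in the hypothetical sweep.
for model, bs in itertools.product(MODELS, BATCH_SIZES):
    print(shlex.join(benchmark_command(model, bs)))
```

The same command list would be run once in the Passthrough baseline VM and once per networking option in the Bitfusion client VM, so that only the GPU access path changes between runs.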

Results

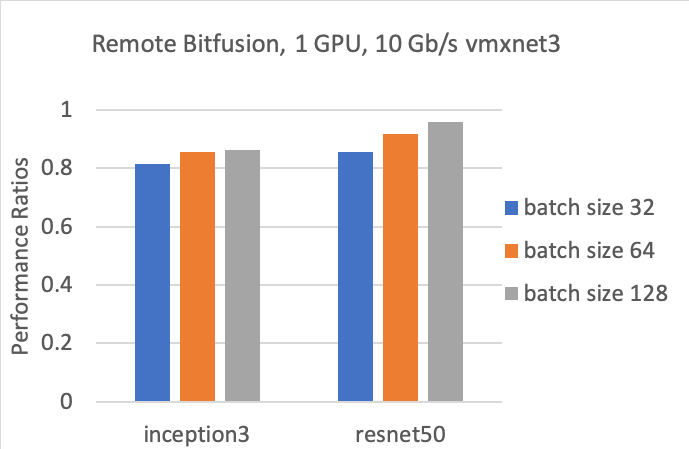

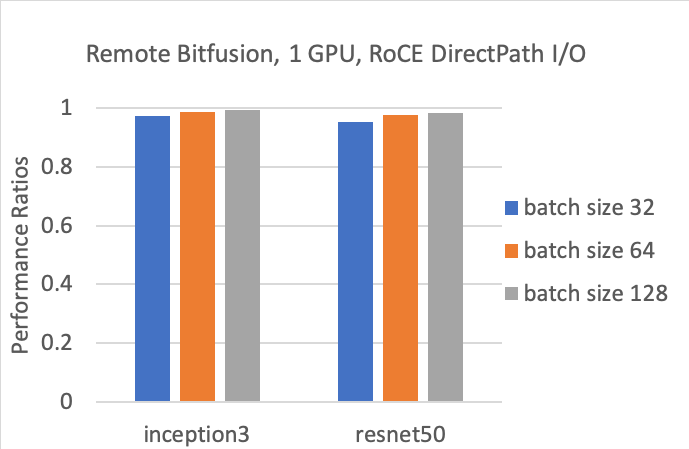

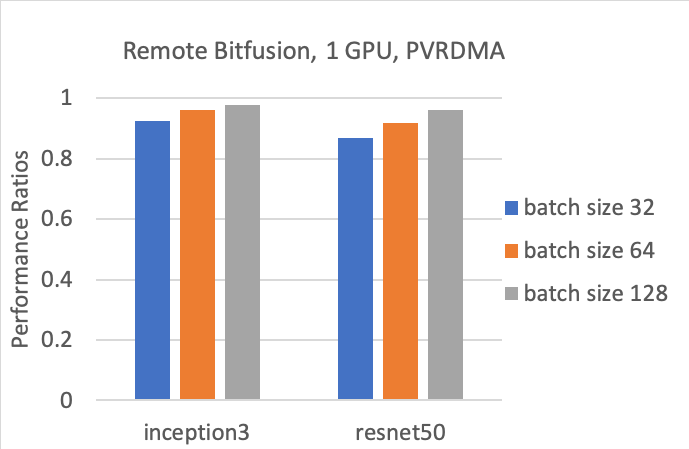

Figures 2 to 4 show the performance ratios for the inception3 and resnet50 deep learning models running in the client VM at different batch sizes, using a remote GPU over 10 Gb/s VMXNET3, 100 Gb/s RoCE in DirectPath I/O mode, and PVRDMA, respectively. All ratios are relative to the Passthrough baseline described above. Higher ratios are better; a ratio of 1.0 means that Bitfusion remoting adds no overhead relative to the baseline.

Figure 2: Bitfusion remote performance with 10 Gb/s VMXNET3 networking

Figure 3: Bitfusion remote performance with 100 Gb/s RoCE configured in DirectPath I/O mode

Figure 4: Bitfusion remote performance with PVRDMA based on 100 Gb/s RoCE
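The normalization behind Figures 2 to 4 can be sketched as a simple ratio of throughputs. The sketch assumes throughput is measured in images per second (the unit tf_cnn_benchmarks reports) and converts a ratio into the percentage overhead used in the discussion of these results. The sample numbers are hypothetical and are not measurements from this study.

```python
def normalized_ratio(remote_images_per_sec: float, baseline_images_per_sec: float) -> float:
    """Remote (Bitfusion) throughput divided by Passthrough baseline throughput.

    1.0 means no overhead; values below 1.0 mean the remote run is slower.
    """
    if baseline_images_per_sec <= 0:
        raise ValueError("baseline throughput must be positive")
    return remote_images_per_sec / baseline_images_per_sec


def overhead_percent(ratio: float) -> float:
    """Slowdown relative to the baseline, in percent (a 0.95 ratio is a 5% overhead)."""
    return (1.0 - ratio) * 100.0


# Hypothetical throughputs in images/sec, NOT results from this study.
baseline = 200.0
remote = 190.0
r = normalized_ratio(remote, baseline)
print(f"ratio={r:.2f}, overhead={overhead_percent(r):.1f}%")
```

With these placeholder numbers the script prints `ratio=0.95, overhead=5.0%`.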

Discussion

In all cases where the client VM remotely uses a single GPU on the server VM over a network, there is some performance overhead. The overhead comes from remotely launching CUDA calls and from transferring data, and it depends on the network type, the deep learning model, and the batch size. Among the three options, 100 Gb/s RoCE in DirectPath I/O mode delivers the best performance, with 5% or less degradation compared with the baseline, while 10 Gb/s VMXNET3 delivers the lowest performance, with overheads ranging from 4% to 20%. Although individual applications may experience some network-dependent slowdown, Bitfusion lets customers share pools of GPU hardware, which can greatly increase the overall utilization of their GPU resources.
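A back-of-the-envelope calculation helps show why link bandwidth matters for the data-transfer part of the overhead. The sketch below computes the ideal time to serialize a payload at line rate, ignoring latency and protocol overhead. The payload is a hypothetical assumption (one batch of 64 float32 images at 224x224x3, the resnet50 input size); how much data actually crosses the link per step under Bitfusion depends on where the input pipeline runs, which this study does not break down.

```python
def wire_time_ms(num_bytes: int, link_gbps: float) -> float:
    """Ideal serialization time in milliseconds at line rate.

    Ignores latency, protocol headers, and any compression; a lower bound only.
    """
    bits = num_bytes * 8
    return bits / (link_gbps * 1e9) * 1e3


# Hypothetical payload: 64 float32 images of 224x224x3 (about 38.5 MB).
batch_bytes = 64 * 224 * 224 * 3 * 4

for name, gbps in [("10 Gb/s VMXNET3", 10), ("100 Gb/s RoCE", 100)]:
    print(f"{name}: {wire_time_ms(batch_bytes, gbps):.2f} ms")
```

Under these assumptions the payload needs roughly 31 ms on a 10 Gb/s link versus about 3 ms at 100 Gb/s, a tenfold gap that the GPU compute time for the same batch has to hide.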

RDMA devices offer low latency and high bandwidth. DirectPath I/O essentially bypasses the hypervisor, giving the shortest path from the guest OS to the RDMA device. While DirectPath I/O performance is attractive, VMs with an RDMA device configured in DirectPath I/O mode cannot take advantage of some core vSphere features, including vMotion, hot-adding and hot-removing virtual devices, snapshots, Distributed Resource Scheduler (DRS), and High Availability (HA). PVRDMA, VMware's paravirtualized RDMA implementation, overcomes these limitations of DirectPath I/O RDMA devices on ESXi and, for example, enables the use of vMotion.

The performance overhead also depends on the ML model and the batch size. For example, with 10 Gb/s VMXNET3, resnet50 performs better than inception3, and larger batch sizes, which have a lower communication-to-computation ratio, perform better than smaller ones.
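The batch-size effect can be illustrated with a toy model; this is a hypothetical simplification, not Bitfusion's actual cost model. Assume each training step pays a fixed remoting cost (for example, CUDA call launches over the network) plus a transfer cost and a compute cost that both grow with the batch size. As the batch grows, the fixed cost is amortized, the communication-to-computation ratio falls, and the normalized ratio rises. All parameter values below are made up for illustration.

```python
def modeled_ratio(batch_size: int,
                  compute_ms_per_image: float,
                  fixed_remote_ms_per_step: float,
                  transfer_ms_per_image: float) -> float:
    """Toy model of remote/baseline throughput for one training step.

    Baseline step time  = compute
    Remote step time    = compute + fixed per-step remoting cost + per-image transfer
    Throughput ratio    = baseline step time / remote step time
    """
    compute = batch_size * compute_ms_per_image
    remote_step = compute + fixed_remote_ms_per_step + batch_size * transfer_ms_per_image
    return compute / remote_step


# Hypothetical parameters: 2 ms of GPU compute per image, 20 ms fixed remoting
# cost per step, and 0.1 ms of network transfer per image.
for bs in (16, 64, 256):
    print(bs, round(modeled_ratio(bs, 2.0, 20.0, 0.1), 3))
```

In this model the ratio approaches `compute / (compute + transfer)` per image as the batch grows, so a faster network raises the ceiling while a larger batch hides the fixed per-step cost.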

Conclusion

The results show that remote GPU use with Bitfusion delivers good performance for deep learning applications. A high-speed interconnect such as 100 Gb/s RoCE brings performance very close to the baseline. VMXNET3 can also be used effectively for GPU remoting when tuned for latency sensitivity. PVRDMA offers a trade-off between performance and functionality. Combining vSphere management capabilities with Bitfusion over these virtualized networking options gives VMs across the environment maximum flexibility to access GPU resources remotely and to create fractional GPUs of any size at runtime. A GPU sharing solution based on vSphere and Bitfusion FlexDirect helps reduce infrastructure costs and increase GPU utilization while providing easy, pervasive GPU access for machine learning workloads.