In part 1 we introduced the concept of virtualizing HPC and its architecture. In part 2 we will look at the makeup of management/compute clusters and some sample designs.

Management Cluster

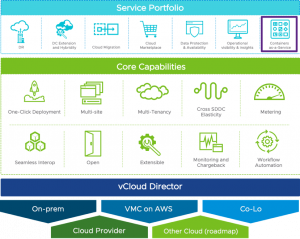

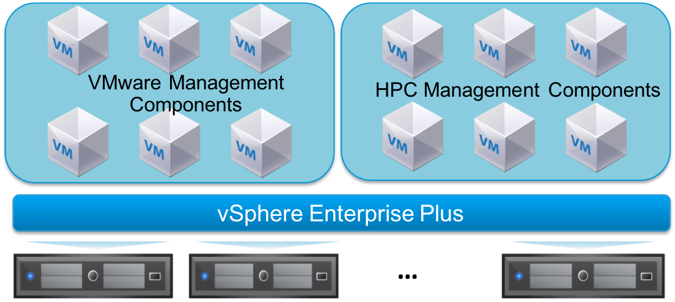

The management cluster runs the VMs that manage the virtualized HPC environment. As shown in Figure 7, these include vSphere and vSphere-integrated components such as vSAN, NSX, vCenter Server, vRealize Operations, and vRealize Automation, as well as HPC administrative components such as the master VM for workload scheduling and the login VM. The management cluster provides high availability for these critical services. Permissions on the management cluster restrict access to administrators only, protecting the VMs that run management, monitoring, and infrastructure services from unauthorized access.

Figure 7: Virtualized HPC management cluster with VMware vSphere Enterprise Plus

For the management cluster, VMware Validated Designs recommend a minimum four-node vSAN cluster, so that the cluster can tolerate the failure of a node even while another node is removed for maintenance. Size a new cluster according to the projected management workload, with additional headroom for growth. If an existing management cluster will be used, perform a capacity analysis on it and adjust it as needed so that there is enough capacity to add the HPC management components.
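The sizing reasoning above can be sketched as simple arithmetic. This is a minimal illustration, not a VMware sizing tool: the function name, the 30% default headroom, and the unit-agnostic capacity inputs are assumptions. It takes the larger of two counts: the hosts needed to carry the projected load plus headroom while one host fails and another is in maintenance, and the vSAN mirroring (RAID-1) requirement of 2n+1 hosts for n failures to tolerate, plus the host in maintenance. With the defaults this reproduces the four-node minimum.

```python
import math


def management_nodes_needed(projected_load: float,
                            per_node_capacity: float,
                            headroom: float = 0.3,
                            failures_to_tolerate: int = 1,
                            nodes_in_maintenance: int = 1) -> int:
    """Estimate the host count for a vSAN-based management cluster.

    `projected_load` and `per_node_capacity` must use the same unit
    (for example GHz of CPU or GiB of RAM); run it once per resource and
    take the largest result. All defaults are illustrative assumptions.
    """
    if per_node_capacity <= 0:
        raise ValueError("per_node_capacity must be positive")

    # Projected management load plus headroom for growth.
    required = projected_load * (1 + headroom)

    # Hosts that must stay online to carry that load (at least one).
    active = max(1, math.ceil(required / per_node_capacity))

    # Capacity view: the load must still fit while some hosts are in
    # maintenance and others have failed at the same time.
    capacity_nodes = active + failures_to_tolerate + nodes_in_maintenance

    # vSAN view: mirroring with FTT=n needs 2n+1 hosts; keep that many
    # available even with hosts in maintenance (2*1 + 1 + 1 = 4 by default).
    vsan_nodes = 2 * failures_to_tolerate + 1 + nodes_in_maintenance

    return max(capacity_nodes, vsan_nodes)


if __name__ == "__main__":
    # Hypothetical numbers: 100 units of load, 50 units per host, 20% growth.
    print(management_nodes_needed(100, 50, headroom=0.2))  # 5
```

A small management footprint still lands on four hosts because the vSAN term dominates; only a larger projected load pushes the count higher.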

Because these management workloads are critical and many of them are single points of failure, we recommend licensing this cluster with VMware vSphere Enterprise Plus. It provides vSphere High Availability (HA), Distributed Resource Scheduler (DRS), and other advanced capabilities that help reduce downtime for these critical workloads.

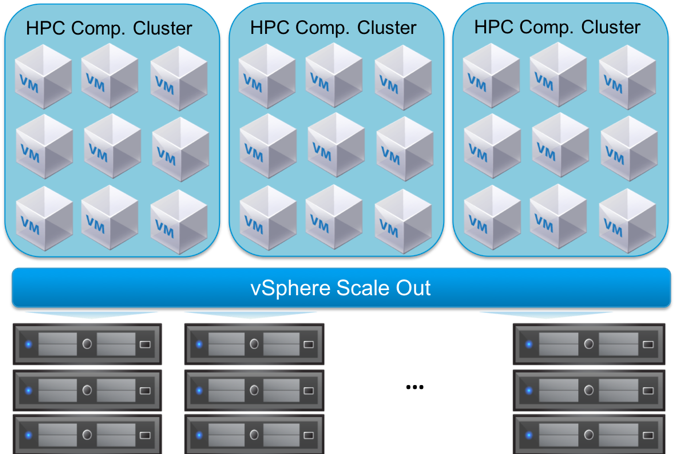

Compute Clusters

The compute clusters run the HPC workloads for different scientific and engineering groups. As shown in Figure 8, the VMware vSphere Scale-Out license targets HPC workloads at a cost-effective price point and can be used for these compute clusters.

The following sections demonstrate the ways in which various common HPC scenarios can be virtualized.

Scenario A – MPI Workloads

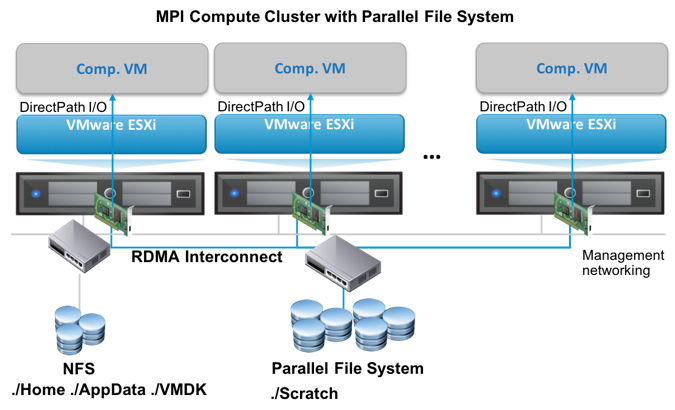

Figure 9: Virtualized Compute Cluster Architecture for MPI workloads with access to parallel file system via RDMA interconnect (DirectPath I/O)

As illustrated in Figure 9, the sample architecture for a virtualized HPC environment that runs MPI workloads consists of:

Hardware

- Multiple compute nodes – the number of nodes is determined by the computational needs of the workload

- Management nodes – a minimum of four nodes is recommended for enterprise-class redundancy.

- Existing network storage for VMDK placement and long-term application data storage

- Parallel file system for application scratch data (optional)

- High-speed interconnects (such as 100 Gb/s Ethernet or RDMA) to provide low latency and high bandwidth for HPC application message exchanges and for accessing the parallel file system

- Ethernet cards with 10/25 Gb/s connectivity speed for management

- GPUs or other accelerators for application acceleration needs (optional)
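Why both latency and bandwidth matter for MPI message exchanges can be illustrated with the standard latency-bandwidth (Hockney) model, t = latency + size / bandwidth. The sketch below is illustrative only: the function name is hypothetical, and the parameters in the usage loop are placeholders for two unnamed interconnects, not measurements of any product.

```python
def message_time_us(message_bytes: int, latency_us: float, bandwidth_gbps: float) -> float:
    """Latency-bandwidth (Hockney) estimate of one point-to-point message.

    t = latency + size / bandwidth, returned in microseconds.
    """
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth must be positive")
    bits = message_bytes * 8
    # 1 Gb/s is 1e9 bits per second, i.e. 1e3 bits per microsecond.
    return latency_us + bits / (bandwidth_gbps * 1e3)


if __name__ == "__main__":
    # Placeholder parameters for two hypothetical interconnects, not measurements.
    for label, latency, bw in [("interconnect A", 10.0, 10.0), ("interconnect B", 1.0, 100.0)]:
        small = message_time_us(64, latency, bw)
        large = message_time_us(8_000_000, latency, bw)
        print(f"{label}: 64 B -> {small:.2f} us, 8 MB -> {large:.1f} us")
```

In this model, small messages are dominated by the latency term and large messages by the bandwidth term, which is why tightly coupled MPI codes are sensitive to both.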

Software

- VMware vSphere

- VMware vSAN

- VMware NSX

- VMware vRealize Suite/VMware Integrated OpenStack

- HPC management and operations solutions, such as:

  - HPC batch scheduler

  - Lustre for parallel file system

It’s possible to leverage existing VMware management clusters that support enterprise workloads to also manage the vHPC environment.

VM sizing

- One compute VM per host, because MPI workloads are CPU-intensive and can use all cores. CPU and memory overcommitment would significantly degrade performance and is not recommended. For further details, see “VM-Sizing, Placement, and CPU/Memory Reservation” in the Running High-Performance Computing Workloads on VMware vSphere Best Practices Guide.

- Size the management VMs according to the requirements of the management services they run.
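The MPI sizing rules above (one compute VM per host, no CPU or memory overcommit) can be expressed as a simple placement check. This is a hypothetical helper, not part of any VMware API; the `reserved_cores` and `reserved_memory_gib` parameters are assumptions standing in for whatever capacity you decide to leave to the hypervisor.

```python
from dataclasses import dataclass


@dataclass
class Host:
    physical_cores: int
    memory_gib: int


@dataclass
class ComputeVM:
    name: str
    vcpus: int
    memory_gib: int


def check_mpi_placement(host: Host, vms: list[ComputeVM],
                        reserved_cores: int = 0,
                        reserved_memory_gib: int = 0) -> list[str]:
    """Return a list of sizing problems (empty if the placement looks fine).

    `reserved_cores` and `reserved_memory_gib` are hypothetical knobs for
    capacity you choose to leave to the hypervisor.
    """
    problems = []
    if len(vms) != 1:
        problems.append(f"expected one compute VM per host, found {len(vms)}")

    usable_cores = host.physical_cores - reserved_cores
    usable_memory = host.memory_gib - reserved_memory_gib
    total_vcpus = sum(vm.vcpus for vm in vms)
    total_memory = sum(vm.memory_gib for vm in vms)

    # More vCPUs than usable physical cores means CPU overcommit.
    if total_vcpus > usable_cores:
        problems.append(f"CPU overcommit: {total_vcpus} vCPUs > {usable_cores} cores")
    # More configured memory than the host can back means memory overcommit.
    if total_memory > usable_memory:
        problems.append(f"memory overcommit: {total_memory} GiB > {usable_memory} GiB")
    return problems
```

For example, `check_mpi_placement(Host(64, 512), [ComputeVM("mpi-01", 64, 480)])` returns an empty list, while the same VM with `reserved_cores=2` is flagged as CPU overcommit. Host and VM sizes here are made up for illustration.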

Scenario B – Throughput Workloads

Figure 10: Virtualized Compute Cluster Architecture for throughput workloads without parallel file system

Figure 11: Virtualized Compute Cluster Architecture for throughput workloads with access to parallel file system via RDMA interconnect (SR-IOV)

As illustrated in Figure 10 and Figure 11, the sample architectures for a virtualized HPC environment running throughput workloads with or without a parallel file system consist of:

Hardware

- Multiple compute nodes – the number of nodes is determined by the computational needs of the workload

- Management nodes – a minimum of four nodes is recommended for enterprise-class redundancy.

- Existing network storage for VMDK placement and long-term application data storage

- Parallel file system nodes for application scratch data (optional)

- Throughput workloads don’t require low-latency, high-bandwidth interconnects for HPC application message exchanges; however, they can still benefit from a parallel file system. If a parallel file system is needed, high-speed interconnects (such as 100 Gb/s Ethernet or RDMA) are preferred. With DirectPath I/O, an InfiniBand device can be passed through to only one VM, but most throughput scenarios run multiple VMs per host. In that case, use SR-IOV (single root I/O virtualization), which enables a single physical PCIe device to be shared by multiple VMs. As shown in Figure 13, the SR-IOV functionality of the network adapter presents the PCIe physical function (PF) as multiple virtual functions (VFs).

- Ethernet cards with 10/25 Gb/s connectivity speed for management

- GPUs for application acceleration needs (optional)
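The difference between DirectPath I/O and SR-IOV described above can be shown with a toy model of one adapter. This is purely conceptual: the class, the `ib0` and `vm-*` names, and the VF numbering are hypothetical and do not reflect the vSphere API. With no VFs enabled, the whole device goes to one VM; with SR-IOV enabled, each VM receives its own virtual function until the VFs run out.

```python
from dataclasses import dataclass, field


@dataclass
class PassthroughAdapter:
    """Toy model of one physical RDMA adapter on a host (hypothetical)."""
    name: str
    sriov_vfs: int = 0  # VFs enabled on the PF; 0 models plain DirectPath I/O
    assignments: dict[str, str] = field(default_factory=dict)  # function -> VM

    def attach(self, vm: str) -> str:
        """Attach the adapter (or one of its VFs) to `vm`; return the function used."""
        if self.sriov_vfs == 0:
            # DirectPath I/O: the whole PCIe device belongs to a single VM.
            if self.assignments:
                owner = next(iter(self.assignments.values()))
                raise RuntimeError(f"{self.name} is already passed through to {owner}")
            self.assignments["PF"] = vm
            return "PF"
        # SR-IOV: hand out the next free virtual function.
        for i in range(self.sriov_vfs):
            vf = f"VF{i}"
            if vf not in self.assignments:
                self.assignments[vf] = vm
                return vf
        raise RuntimeError(f"no free virtual functions left on {self.name}")
```

With `PassthroughAdapter("ib0")`, a second `attach` call raises an error, whereas `PassthroughAdapter("ib0", sriov_vfs=4)` serves four VMs on the same host.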

Software

- VMware vSphere

- VMware vSAN

- VMware NSX

- VMware vRealize Suite/VMware Integrated OpenStack

- HPC management and operations solutions, such as:

  - HPC batch scheduler

  - Lustre for parallel file system (optional)

It’s possible to leverage existing VMware management clusters that support enterprise workloads to also manage the vHPC environment.

VM sizing

- Multiple compute VMs per host. VMs of different sizes are recommended, each sized to conform to NUMA boundaries. CPU oversubscription can be used to achieve higher overall cluster throughput than on bare metal.

- Size the management VMs according to the requirements of the management services they run.
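The NUMA and oversubscription guidance above can be sketched as a quick calculation. This is a minimal illustration under stated assumptions: the function names are hypothetical, each VM is assumed to stay within one NUMA node, and the oversubscription ratio is whatever vCPU-to-core ratio you choose to allow. Sizes that divide a NUMA node's core count evenly pack the node without stranding cores.

```python
def numa_aligned_sizes(cores_per_node: int) -> list[int]:
    """vCPU counts that divide a NUMA node evenly, so VMs of that size
    fill the node without stranding cores or spanning nodes."""
    return [n for n in range(1, cores_per_node + 1) if cores_per_node % n == 0]


def vms_per_host(numa_nodes: int, cores_per_node: int, vcpus_per_vm: int,
                 oversubscription: float = 1.0) -> int:
    """How many same-sized VMs fit on a host when each VM stays within one NUMA node.

    `oversubscription` is the vCPU-to-physical-core ratio you choose to allow.
    """
    if vcpus_per_vm > cores_per_node:
        raise ValueError("a VM this large would span more than one NUMA node")
    # vCPU slots per NUMA node after applying the oversubscription ratio.
    slots_per_node = int(cores_per_node * oversubscription)
    return numa_nodes * (slots_per_node // vcpus_per_vm)


if __name__ == "__main__":
    # Hypothetical host: 2 NUMA nodes with 24 cores each.
    print(numa_aligned_sizes(24))
    print(vms_per_host(2, 24, 6), vms_per_host(2, 24, 6, oversubscription=1.5))
```

On the hypothetical 2 x 24-core host, 6-vCPU VMs give 8 VMs per host without oversubscription and 12 with a 1.5 ratio; whether the extra VMs raise overall throughput depends on the workload.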

Conclusions

Virtualization offers tremendous benefits for HPC environments. With a basic understanding of HPC components and technologies, any enterprise organization can apply HPC best practices and guidelines to adopt a VMware virtualized infrastructure. Virtualization provides the building blocks for individualized or hybrid clusters designed to host important HPC applications. Meanwhile, vSphere Scale-Out licensing for HPC allows administrators to virtualize compute nodes at a low cost.

Through the addition of optional VMware management solutions, HPC environments further benefit from increased security, self-service, multi-workflow environments, and remote-console accessibility. As the broader HPC community looks toward the cloud, HPC virtualization is key to protecting and tuning IT infrastructure for the future.

More detailed information on the topics covered in this blog post can be found in the comprehensive HPC Reference Architecture White Paper.