Oracle RAC and VMware Raw Device Mapping (rdm)

Contents

- 1 Oracle RAC and VMware Raw Device Mapping (rdm)

- 2 Key points to take away from this blog

- 3 Important caveats to keep in mind about shared RDMs and vMotion

- 4 SCSI Bus Sharing

- 5 Oracle ASM with ASMLIB / Linux udev and Partitioning

- 6 Example setup of Oracle RAC VMs

- 7 Add Shared RDM(s) in Physical Compatibility Mode

- 8 Add Shared RDM(s) in Virtual Compatibility Mode

- 9 Alternate method of Adding Shared RDM(s) in Virtual Compatibility Mode

- 10 Summary

“To be, or not to be”: famous words from William Shakespeare’s play Hamlet, Act III, Scene I.

The same dilemma exists in the virtualization world for Oracle Business Critical Applications: when provisioning shared disks for Oracle RAC, should one use Raw Device Mappings (RDM) or VMDKs?

Much has been written and discussed about RDM and VMDK. This post focuses on the Oracle RAC shared disks use case.

Some common questions I get when talking to customers who are embarking on the virtualization journey for Oracle on vSphere are:

- What is the recommended approach when it comes to provisioning storage for Oracle RAC or Oracle Single instance? Is it VMDK or RDM?

- What is the use case for each approach?

- How do I provision shared RDM(s) in physical or virtual compatibility mode for an Oracle RAC environment?

- If I use shared RDM(s) (physical or virtual), will I be able to vMotion my RAC VMs without any cluster node eviction?

We recommend using shared VMDK(s) with the multi-writer setting to provision shared storage for ALL Oracle RAC environments, so that you can take advantage of the rich features vSphere offers as a platform, including:

- better storage consolidation

- performance management

- increased storage utilization

- better flexibility

- easier administration and management

- features like SIOC (Storage I/O Control)

To set the multi-writer flag on classic vSphere, refer to the KB article “Enabling or disabling simultaneous write protection provided by VMFS using the multi-writer flag (1034165)”.

To set the multi-writer flag on vSAN, refer to the KB article “Using Oracle RAC on a vSphere 6.x vSAN Datastore (2121181)”.

Key points to take away from this blog

- VMware recommends using shared VMDK (s) with Multi-writer setting for provisioning shared storage for ALL Oracle RAC environments (KB 1034165)

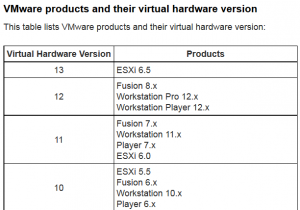

- vMotion of shared rdm (s) is possible in vSphere 6.0 and above as long as the VMs are in “Hardware 11” compatibility mode

- To provision shared rdm (s) in physical compatibility mode , follow the steps as outlined below

- To provision shared rdm (s) in Virtual Compatibility mode in a VMware supported configuration, follow KB 1034165

- Best Practices needs to be followed when configuring Oracle RAC private interconnect and VMware vMotion network which can be found in the “Oracle Databases on VMware – Best Practices Guide”

Back to the topic of Oracle RAC shared disks: to RDM or not to RDM!

There are still some use cases where it makes more sense to use RDM storage access over VMDK:

- Migrating an existing application from a physical environment to virtualization

- Using Microsoft Cluster Service (MSCS) for clustering in a virtual environment

- Implementing N-Port ID Virtualization (NPIV)

This is well explained in the white paper “VMware vSphere VMFS Technical Overview and Best Practices” for version 5.1.

The differences between physical compatibility RDMs (rdm-p) and virtual compatibility RDMs (rdm-v) are described in KB 2009226.

The majority of VMware customers run Oracle RAC on vSphere using shared VMDK(s) with the multi-writer setting. A select few customers choose to deploy Oracle RAC with shared RDM(s) in physical or virtual compatibility mode.

For those customers whose requirements fall under the use cases listed above and who need to deploy Oracle RAC with shared RDM disks: yes, RDMs can be used as shared disks for Oracle RAC clusters on classic vSphere. VMware vSAN does not support Raw Device Mappings (RDMs) at the time of writing this blog.

Important caveats to keep in mind about shared RDMs and vMotion

- There was never an issue vMotioning VMs with non-shared RDM(s) from one ESXi server to another. This has worked in all versions of vSphere, including the latest release

- The only issue was with shared RDM(s) used for clustering. vMotion of VMs with shared RDMs requires virtual hardware version 11 or higher, i.e. the VMs must be in “Hardware 11” compatibility mode. This means that you are either creating and running the VMs on ESXi 6.0 hosts, or you have converted your old template to Hardware 11 and deployed it on ESXi 6.0

- The VMs must be configured with at least this hardware version for shared RDM vMotion to take place

VMware products and their virtual hardware version table can be found below:

As per the table above, with vSphere 6.0 and above, for VMs with virtual hardware version 11 or higher, the restriction for shared RDM vMotion has been lifted.

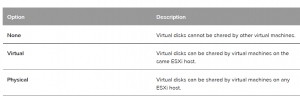
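The hardware-version requirement can be checked from a VM's configuration file before attempting a vMotion. The following is a minimal sketch, assuming the VM's `.vmx` file is readable and records the version in the `virtualHW.version` key; the function names and the sample file contents are illustrative and not part of the original setup.

```bash
#!/usr/bin/env bash
# Sketch: read the virtual hardware version from a .vmx file and report
# whether it meets the minimum of 11 needed for shared RDM vMotion.

# Print the numeric value of virtualHW.version from the given .vmx file.
vmx_hw_version() {
    sed -n 's/^[[:space:]]*virtualHW\.version[[:space:]]*=[[:space:]]*"\([0-9][0-9]*\)".*/\1/p' "$1" | head -n 1
}

# Succeed (exit status 0) if the hardware version is 11 or higher.
supports_shared_rdm_vmotion() {
    local version
    version="$(vmx_hw_version "$1")"
    [ -n "$version" ] && [ "$version" -ge 11 ]
}

# Demo against a hypothetical .vmx fragment written to a temporary file.
demo_vmx="$(mktemp)"
printf '%s\n' 'config.version = "8"' 'virtualHW.version = "11"' > "$demo_vmx"
if supports_shared_rdm_vmotion "$demo_vmx"; then
    echo "hardware version $(vmx_hw_version "$demo_vmx"): shared RDM vMotion is possible"
else
    echo "hardware version is too old for shared RDM vMotion"
fi
rm -f "$demo_vmx"
```

In practice the same information is visible in the vSphere client under the VM's compatibility setting; the script is only a convenience for checking many VMs at once.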

SCSI Bus Sharing

SCSI Bus Sharing can be set to one of three options (None, Physical, or Virtual), as per the table below.

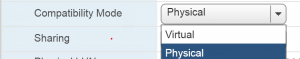

“SCSI Bus sharing” would be set to

- “Physical” for clustering across ESXi servers

- “Virtual” for clustering within an ESXi server
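To confirm which bus-sharing mode a controller actually has, the setting can be read back from the VM's `.vmx` file. This is a rough sketch, assuming the mode is stored in the `scsiN.sharedBus` key and that a missing key means no sharing; the function name and sample file are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: report the SCSI bus-sharing mode of a controller from a .vmx file.

# Print the sharing mode of controller N; "none" if the key is absent
# (assumption: an absent scsiN.sharedBus key means sharing is disabled).
scsi_bus_sharing() {
    local vmx_file="$1" controller="$2" mode
    mode="$(sed -n "s/^[[:space:]]*scsi${controller}\.sharedBus[[:space:]]*=[[:space:]]*\"\([A-Za-z]*\)\".*/\1/p" "$vmx_file" | head -n 1)"
    echo "${mode:-none}"
}

# Demo with a hypothetical fragment resembling the example VMs in this post.
demo_vmx="$(mktemp)"
printf '%s\n' \
    'scsi0.virtualDev = "lsilogic"' \
    'scsi1.virtualDev = "pvscsi"' \
    'scsi1.sharedBus = "physical"' > "$demo_vmx"
for n in 0 1; do
    echo "SCSI controller $n bus sharing: $(scsi_bus_sharing "$demo_vmx" "$n")"
done
rm -f "$demo_vmx"
```

For clustering across ESXi servers, the controller that holds the shared disks should report `physical`.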

Oracle ASM with ASMLIB / Linux udev and Partitioning

Oracle ASMLIB requires that the disk be partitioned for use with Oracle ASM. If you are using Linux udev for Oracle ASM, you can use the raw device as is, without partitioning.

Partitioning is good practice in any case: it prevents anyone from attempting to create a partition table and file system on a raw device that looks unused, which would cause problems if the device is being used by ASM.

See the following Oracle documentation:

- 12cR2 Installing and Configuring Oracle ASMLIB Software

- Oracle ASMLib

- 12.2 Configuring Device Persistence Manually for Oracle ASM (Linux udev)

For Oracle OCFS2 or another clustered file system, the disk must be partitioned.

- Oracle Linux 7 Installing and Configuring OCFS2
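For readers taking the udev route instead of ASMLIB, the sketch below generates a rule line that gives a shared disk's first partition a stable name and ownership. It follows a commonly published pattern for Oracle ASM udev rules and is not taken from this setup: the `scsi_id` path (typical on Oracle Linux 7), the `grid`/`asmadmin` owner and group, the link name, and the SCSI ID are all assumptions to be replaced with real values.

```bash
#!/usr/bin/env bash
# Sketch: print a udev rule for an Oracle ASM disk identified by its SCSI ID.

# Arguments: SCSI ID, symlink name, optional owner, optional group.
# The rule matches partition 1 of the disk whose parent device reports the
# given ID and creates /dev/oracleasm/<link_name> with the given ownership.
asm_udev_rule() {
    local scsi_id="$1" link_name="$2" owner="${3:-grid}" group="${4:-asmadmin}"
    printf 'KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="%s", SYMLINK+="oracleasm/%s", OWNER="%s", GROUP="%s", MODE="0660"\n' \
        "$scsi_id" "$link_name" "$owner" "$group"
}

# Demo with a placeholder ID; obtain the real one by running scsi_id
# against the shared disk on each RAC node.
asm_udev_rule "EXAMPLE_SCSI_ID" "asm-data01"
```

The generated line would typically be saved in a rules file under `/etc/udev/rules.d/` on every RAC node, followed by `udevadm control --reload-rules` and `udevadm trigger` to apply it.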

Example setup of Oracle RAC VMs

The two Oracle RAC VMs are rdmrac1 (10.128.138.1) and rdmrac2 (10.128.138.2). Both VMs are running Oracle Linux 7.4.

```
[root@rdmrac1 ~]# uname -a
Linux rdmrac1 4.1.12-94.5.7.el7uek.x86_64 #2 SMP Thu Jul 20 18:44:17 PDT 2017 x86_64 x86_64 x86_64 GNU/Linux
[root@rdmrac1 ~]# cat /etc/oracle-release
Oracle Linux Server release 7.4
[root@rdmrac1 ~]#
```

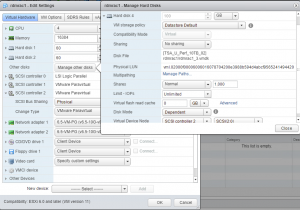

VM ‘rdmrac1’ VM ‘rdmrac2’

The disk setup is as follows:

- SCSI 0:0 for the operating system (/), size 60G

- SCSI 0:1 for the Oracle binaries (/u01), size 60G

- SCSI 1:0 for the physical RDM shared disk, size 100G

- SCSI 2:0 for the virtual RDM shared disk, size 100G

SCSI Controller setup

- SCSI 0 controller is LSI Logic Parallel

- SCSI 1 and SCSI 2 controllers are Paravirtual

Now that we are aware of the above restrictions, let's add a shared RDM in physical compatibility mode to two VMs as part of an Oracle RAC example using Oracle ASM and ASMLIB, and see if we can vMotion the two VMs without any failure.

The high-level steps are:

- Add a shared RDM in physical compatibility mode (rdm-p) to two VMs that are part of an Oracle RAC installation

- Use Oracle ASMLIB to mark the disk as an ASM disk

- vMotion the two VMs from one ESXi server to another and see if we encounter any issues

Add Shared RDM(s) in Physical Compatibility Mode

There are two points to keep in mind when adding shared RDM(s) in physical compatibility mode to a VM:

- For the SCSI controller to which the shared RDM(s) will be added, “SCSI Bus Sharing” needs to be set to “Physical”

- The “Compatibility Mode” of the shared RDM needs to be set to “Physical” for physical compatibility (rdm-p)

The RDM (WWN 60:06:01:60:78:70:42:00:5C:98:8B:59:85:DC:51:A7) in physical compatibility mode that we would use is highlighted below:

Following are the steps:

1) Add a new controller to VM “rdmrac1”. PVSCSI is the recommended controller type. Set the controller’s “SCSI Bus Sharing” to “Physical”. Do the same for VM “rdmrac2”.

2) Add the RDM disk to the first VM, “rdmrac1”.

3) Pick the correct RDM device (WWN 60:06:01:60:78:70:42:00:5C:98:8B:59:85:DC:51:A7).

4) Set the RDM “Compatibility Mode” to “Physical” as shown. Make a note of the SCSI ID to which you attach the disk; you will use the same ID when attaching the disk to the other VM, “rdmrac2”, which shares it. In this case we used SCSI 1:0.

5) On VM “rdmrac2”, add the same RDM disk as an existing hard disk on the new PVSCSI controller.

6) Attach the existing RDM disk at the same SCSI position used in step 4, SCSI 1:0 (the same as on “rdmrac1”).

7) The shared RDM is now added to both VMs, “rdmrac1” and “rdmrac2”. The next order of business is to partition the raw disk.

As covered in the ASMLIB, udev and partitioning section earlier, Oracle ASMLIB requires a partitioned disk, so the shared disk must be partitioned before it is marked for ASM.

Partition the disk. First, list the new device:

```
[root@rdmrac1 ~]# fdisk -lu
…
Disk /dev/sdc: 107.4 GB, 107374182400 bytes, 209715200 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 8192 bytes / 33553920 bytes
..
[root@rdmrac1 ~]#
```

Use the partitioning weapon of your choice (fdisk, parted, or gparted). I used fdisk to create a single partition with the default alignment offset:

```
[root@rdmrac1 ~]# fdisk -lu
…
Disk /dev/sdc: 107.4 GB, 107374182400 bytes, 209715200 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 8192 bytes / 33553920 bytes
Disk label type: dos
Disk identifier: 0xddceaeeb

   Device Boot      Start         End      Blocks   Id  System
/dev/sdc1            2048   209715199   104856576   83  Linux
[root@rdmrac1 ~]#
```
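The numbers in the fdisk listing can be cross-checked with a little shell arithmetic. This sketch only uses the values printed above (512-byte sectors, start sector 2048, end sector 209715199); the variable names are illustrative.

```bash
#!/usr/bin/env bash
# Sketch: verify the disk size, partition offset and partition size
# reported by fdisk for /dev/sdc.

sector_bytes=512
total_sectors=209715200
part_start=2048
part_end=209715199

disk_bytes=$(( total_sectors * sector_bytes ))
offset_kib=$(( part_start * sector_bytes / 1024 ))
part_sectors=$(( part_end - part_start + 1 ))
part_kib=$(( part_sectors * sector_bytes / 1024 ))

echo "disk size:        $disk_bytes bytes ($(( disk_bytes / 1024 / 1024 / 1024 )) GiB)"
echo "partition offset: $offset_kib KiB"
echo "partition size:   $part_kib blocks of 1 KiB"
```

The default start sector of 2048 places the partition at a 1 MiB offset, and the computed partition size matches the 104856576 shown in the Blocks column.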

Scan the SCSI bus using operating system commands on “rdmrac2”

```
[root@rdmrac2 ~]# fdisk -lu
….
Disk /dev/sdc: 107.4 GB, 107374182400 bytes, 209715200 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 8192 bytes / 33553920 bytes
Disk label type: dos
Disk identifier: 0xddceaeeb

   Device Boot      Start         End      Blocks   Id  System
/dev/sdc1            2048   209715199   104856576   83  Linux
[root@rdmrac2 ~]#
```
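If the second node does not see the new disk straight away, one common way to rescan the SCSI bus on Linux is to write `- - -` to each host adapter's `scan` file in sysfs. The sketch below wraps that in a function; the function name is hypothetical, and the sysfs root is a parameter only so that the demo can run against a scratch directory instead of the real `/sys`.

```bash
#!/usr/bin/env bash
# Sketch: trigger a rescan of all SCSI hosts and report how many were asked.

# Write "- - -" (all channels, targets and LUNs) to every writable
# hostN/scan file under the given root; defaults to the real sysfs path.
rescan_scsi_hosts() {
    local sysfs_root="${1:-/sys/class/scsi_host}" scan_file count=0
    for scan_file in "$sysfs_root"/host*/scan; do
        [ -w "$scan_file" ] || continue
        echo "- - -" > "$scan_file"
        count=$(( count + 1 ))
    done
    echo "$count"
}

# Demo against a scratch directory that mimics two SCSI hosts.
demo_root="$(mktemp -d)"
mkdir -p "$demo_root/host0" "$demo_root/host1"
: > "$demo_root/host0/scan"
: > "$demo_root/host1/scan"
echo "hosts rescanned: $(rescan_scsi_hosts "$demo_root")"
rm -rf "$demo_root"
```

On a real node the function would be run as root with no argument. If the disk is already visible but its new partition is not, `partprobe /dev/sdc` asks the kernel to re-read the partition table.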

Install the Oracle ASMLIB rpm as usual and mark the new RDM disk as an ASM disk.

```
[root@rdmrac1 software]# /usr/sbin/oracleasm createdisk DATA_DISK01 /dev/sda1
Writing disk header: done
Instantiating disk: done
[root@rdmrac1 software]#
[root@rdmrac1 software]# /usr/sbin/oracleasm listdisks
DATA_DISK01
[root@rdmrac1 software]#

[root@rdmrac2 ~]# /usr/sbin/oracleasm listdisks
DATA_DISK01
[root@rdmrac2 ~]#
```
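On the node that did not run `createdisk`, a newly marked disk may only appear in `listdisks` after ASMLIB rescans its devices. The following is a small sketch of that check, assuming `oracleasm scandisks` and `oracleasm listdisks` are available; the function name and the `ORACLEASM` override variable are hypothetical conveniences.

```bash
#!/usr/bin/env bash
# Sketch: rescan ASMLIB disks on this node and confirm a disk label is visible.

# Path to the oracleasm tool; can be overridden, e.g. for testing.
ORACLEASM="${ORACLEASM:-/usr/sbin/oracleasm}"

# Succeed if the given ASM disk label is listed after a rescan.
asm_disk_visible() {
    "$ORACLEASM" scandisks > /dev/null || return 1
    "$ORACLEASM" listdisks | grep -Fqx -- "$1"
}

# Usage on the second RAC node (as root):
#   asm_disk_visible DATA_DISK01 && echo "DATA_DISK01 is visible on $(hostname)"
```

Running the check on every node before creating the ASM disk group helps catch a disk that was attached at a different SCSI position or not shared at all.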

8) Now for the vMotion test that we have been waiting for. VM “rdmrac1” is on host 10.128.136.118 and VM “rdmrac2” is on host 10.128.136.117.

The plan is to vMotion “rdmrac1” from host 10.128.136.118 to 10.128.136.119. You can choose to vMotion VM “rdmrac2” from 10.128.136.117 to 10.128.136.118 at the same time.

Start the vMotion of “rdmrac1” from host 10.128.136.118 to 10.128.136.119.

While the vMotion is taking place, run a ping test by pinging VM “rdmrac1” from VM “rdmrac2”:

```
[root@rdmrac2 ~]# ping 10.128.138.1
PING 10.128.138.1 (10.128.138.1) 56(84) bytes of data.
64 bytes from 10.128.138.1: icmp_seq=2 ttl=64 time=0.288 ms
64 bytes from 10.128.138.1: icmp_seq=3 ttl=64 time=0.296 ms
64 bytes from 10.128.138.1: icmp_seq=4 ttl=64 time=0.288 ms
…
64 bytes from 10.128.138.1: icmp_seq=8 ttl=64 time=0.293 ms
64 bytes from 10.128.138.1: icmp_seq=9 ttl=64 time=0.242 ms
64 bytes from 10.128.138.1: icmp_seq=18 ttl=64 time=0.252 ms
64 bytes from 10.128.138.1: icmp_seq=19 ttl=64 time=0.279 ms
64 bytes from 10.128.138.1: icmp_seq=20 ttl=64 time=0.256 ms
64 bytes from 10.128.138.1: icmp_seq=21 ttl=64 time=0.422 ms
..
64 bytes from 10.128.138.1: icmp_seq=23 ttl=64 time=0.675 ms <-- Actual Cutover
..
64 bytes from 10.128.138.1: icmp_seq=24 ttl=64 time=0.295 ms
64 bytes from 10.128.138.1: icmp_seq=25 ttl=64 time=0.251 ms
64 bytes from 10.128.138.1: icmp_seq=26 ttl=64 time=0.246 ms
64 bytes from 10.128.138.1: icmp_seq=27 ttl=64 time=0.177 ms
…
^C
--- 10.128.138.1 ping statistics ---
53 packets transmitted, 52 received, 1% packet loss, time 52031ms
rtt min/avg/max/mdev = 0.177/0.297/0.902/0.107 ms
[root@rdmrac2 ~]#
```

At the end of the vMotion operation, the VM “rdmrac1” is now on a different host without having experienced any network issues.
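Scanning a long ping log by eye for dropped packets is error-prone, so a small filter that reports gaps in the `icmp_seq` numbers can help. This is a minimal sketch using awk; the function name is illustrative and the sample input is made up for the demo, not taken from the run above.

```bash
#!/usr/bin/env bash
# Sketch: report gaps in icmp_seq numbers found in ping output.

# Read ping output on stdin; print one line per run of missing sequence numbers.
ping_seq_gaps() {
    awk '
        match($0, /icmp_seq=[0-9]+/) {
            # "icmp_seq=" is 9 characters long; the digits follow it.
            seq = substr($0, RSTART + 9, RLENGTH - 9) + 0
            if (seen && seq > prev + 1) {
                printf "missing icmp_seq %d-%d (%d lost)\n", prev + 1, seq - 1, seq - prev - 1
            }
            prev = seq
            seen = 1
        }
    '
}

# Demo with hypothetical output in which reply number 3 never arrived.
ping_seq_gaps <<'EOF'
64 bytes from 10.128.138.1: icmp_seq=1 ttl=64 time=0.288 ms
64 bytes from 10.128.138.1: icmp_seq=2 ttl=64 time=0.296 ms
64 bytes from 10.128.138.1: icmp_seq=4 ttl=64 time=0.675 ms
64 bytes from 10.128.138.1: icmp_seq=5 ttl=64 time=0.295 ms
EOF
```

To use it during a vMotion, save the full ping output to a file and feed it to the function, for example `ping_seq_gaps < ping.log`. It needs the complete log; an excerpt with lines elided would be reported as gaps.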

Yes, the most conclusive test would be to have a fully functional RAC running and see if we have any cluster node evictions or disconnects of RAC sessions. I have performed those tests as well and have not encountered any issues.

Add Shared RDM(s) in Virtual Compatibility Mode

To add a shared RDM in virtual compatibility mode (rdm-v), follow the same steps outlined in KB 1034165 for adding a shared VMDK using the multi-writer setting. This is the officially supported way to add shared RDM(s) in virtual compatibility mode.

Enabling or disabling simultaneous write protection provided by VMFS using the multi-writer flag (1034165).

The same vMotion and ping test was performed, and no issues were observed.

Alternate method of Adding Shared RDM(s) in Virtual Compatibility Mode

An alternate way to add shared RDM(s) in virtual compatibility mode with vMotion capability, without the multi-writer setting, is described below. As before, vMotion of VMs with shared RDMs requires virtual hardware version 11 or higher, i.e. the VMs must be in “Hardware 11” compatibility mode.

- For the SCSI controller to which the shared RDM(s) will be added, “SCSI Bus Sharing” needs to be set to “Physical” (clustering across ESXi servers)

- The “Compatibility Mode” of the shared RDM needs to be set to “Virtual” for virtual compatibility (rdm-v)

In this case, I used the same 2 VMs, “rdmrac1” and “rdmrac2”.

- Added a new SCSI controller of type Paravirtual (SCSI 2)

- Added the shared RDM in virtual compatibility mode (a new device with WWN 60:06:01:60:78:70:42:00:E3:98:8B:59:4D:4A:BC:F9) at the same SCSI position (SCSI 2:0) on both VMs

The steps for adding rdm-v disks are the same as shown above for rdm-p disks.

VM “rdmrac1” showing the SCSI 2 Paravirtual controller with the shared RDM in virtual compatibility mode at position SCSI 2:0.

VM “rdmrac2” showing the SCSI 2 Paravirtual controller with the shared RDM in virtual compatibility mode at position SCSI 2:0.

The same vMotion and ping test was performed, and no issues were observed.

Summary

- VMware recommends using shared VMDK(s) with the multi-writer setting for provisioning shared storage for ALL Oracle RAC environments (KB 1034165)

- The majority of VMware customers run Oracle RAC on vSphere using shared VMDK(s) with the multi-writer setting

- A select few customers choose to deploy Oracle RAC with shared RDM(s) in physical or virtual compatibility mode

- vMotion of VMs with shared RDM(s) is possible in vSphere 6.0 and above, as long as the VMs use virtual hardware version 11 or higher (“Hardware 11” compatibility mode)

- To provision shared RDM(s) in physical compatibility mode, follow the steps outlined above

- To provision shared RDM(s) in virtual compatibility mode in a VMware-supported configuration, follow KB 1034165

- Best practices must be followed when configuring the Oracle RAC private interconnect and the VMware vMotion network; they can be found in the “Oracle Databases on VMware – Best Practices Guide”

- All Oracle on vSphere white papers, including Oracle licensing on vSphere/vSAN, Oracle best practices, RAC deployment guides, and the workload characterization guide, can be found at “Oracle on VMware Collateral – One Stop Shop”