Introduction to Queues Sizing

Contents

- 1 Introduction to Queues Sizing

- 2 Queues

- 3 Overview of Queues

- 4 Virtualization Stack

- 5 Server Stack

- 6 Storage Stack

- 7 Assumptions

- 8 Queue Depth Calculation: A Physical Server perspective

- 9 Queue Depth Calculation: Perspective from a Storage SAN

- 10 From the above calculation

- 11 Queue Depth Calculation: Virtualization Perspective

- 12 Lessons learnt

- 13 Acknowledgements

Proper queue sizing is a key element in ensuring that current database workloads can be sustained and all SLAs are met without any processing disruption.

Queues

Queues are often misrepresented as the very “bane of our existence”, and yet queues restore some semblance of order to our chaotic lives.

Imagine what would happen if there were no queues.

Much has been written about Virtualization and Storage queues. Excellent blog articles by Duncan Epping, Cormac Hogan and Chad Sakac cover the various queues in depth.

This article endeavors to illustrate, with a simple example, the fallacy of presuming that queue tuning only needs to be done at the Application level and that it’s okay to ignore the physical limitations of storage.

The results of this theoretical analysis amply demonstrate that application owners, DBAs, VMware administrators and storage administrators have to work hand in hand to set up a well-tuned Oracle database on vSphere, especially in a non-VVol or VSAN world. “Software defined storage” and Quality of Service (QoS) through policy-based management solutions will solve this over time.

A little about my background: I have been a Production Oracle DBA / Architect for the last 19-odd years at many Fortune 100 companies (having started with Oracle version 6 in my school days, a long, long time ago). I joined VMware in 2012 as a Senior PSO Consultant and now work in the GCoE as Senior Solution Architect, Data Platforms. I also have a hands-on EMC Storage background, having played around with Symmetrix 8730/8830, DMX and Clariions, but by no means am I a storage expert!

This article is written from the perspective of an Oracle database, but much of this discussion holds good for any mission-critical application.

There are many queues in a virtualized Oracle database stack, with the Oracle database and the Operating System contributing their own set of queues to the already existing list.

For the sake of simplicity, assume that the Oracle DBA and the OS Admin have done their due diligence and have taken care of the queue tuning on their side.

I will be covering the Oracle and OS queues in part 2 of this discussion.

Overview of Queues

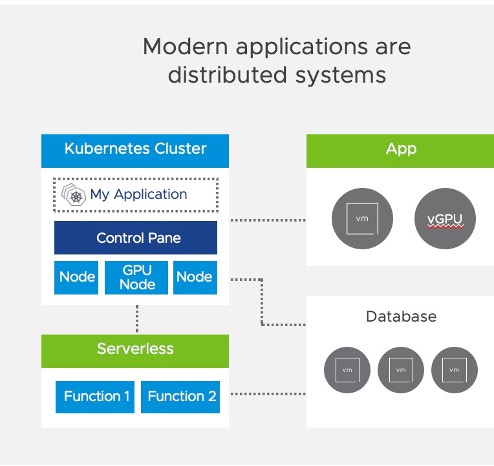

Let’s look at the queues in the Virtualization, Server and Storage stacks, follow the best practices for each stack, and see whether just following them is enough. Does one best practice conflict with another?

Figure 1 Illustration of Virtualization, Server and Storage Queues

Figure 1 above shows an example of a single ESXi 5.5 server with 2 single-port Emulex HBA cards attached to SAN storage, with different queues at various layers of the stack. For the sake of simplicity, consider a single ESXi server.

Is setting the queue depth at the Virtualization and Server stacks to the maximum limit sufficient to achieve maximum throughput?

Virtualization Stack

At the Virtual Machine level, there are 2 queues

- PVSCSI Adapter queue

- Per VMDK queue

KB 2053145 talks about the default and maximum PVSCSI adapter and VMDK queue size and how to go about increasing them for Windows/Linux hosts.

As per the above KB, the default queue size is 64 (for device) / 254 (for adapter), with a maximum of 254 (for device) / 1024 (for adapter). These are also known as the World queue (a queue per virtual machine).

Following Oracle on VMware Best Practices for a high-end, mission-critical Oracle database, let’s assume we set:

- PVSCSI queues to maximum (vmw_pvscsi.ring_pages = 32 for Linux)

- Per vmdk queue to maximum (vmw_pvscsi.cmd_per_lun = 254 for Linux)

Server Stack

At a physical Server level there are 2 queues

- An HBA (Host Bus Adapter) queue per physical HBA

- A Device/LUN queue (a queue per LUN).

Assume new native drivers on ESXi 5.5 for Emulex.

Refer to KB 2044993 on troubleshooting native drivers in ESXi 5.5 or later.

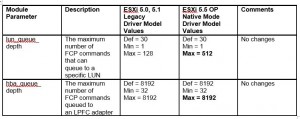

From the “Emulex Drivers Version 10.2 for VMware ESXi User Manual”:

FC/FCoE Driver Configuration Parameters , Table 3-1, FC and FCoE Driver Parameters, lists the FC and FCoE driver module parameters, their descriptions, and their corresponding values in previous ESXi environments and in ESXi 5.5 native mode:

Excerpt from Table 3-1

Following Server Best Practices, with an Emulex HBA in the design (settings for QLogic and Brocade differ), let’s assume we set:

- Host HBA queue depth to maximum (lpfc_hba_queue_depth : the maximum number of FCP commands that can queue to an Emulex HBA (8192))

- LUN queue depth to maximum (lpfc_lun_queue_depth : the default maximum commands sent to a single logical unit (disk) (512))

KB 1267 talks about setting the queue depth for devices for QLogic, Emulex, and Brocade HBAs

Figure 2 Illustration of Virtualization & Server Queues

Storage Stack

Figure 3 below is an example SAN layout showing an ESXi server with 2 single-port Emulex HBA cards connected to a Storage array. Normally, there would be only a single path from the ESX server to any given SP port (best practice).

For simplicity,

- 1 ESXi server in the server farm with a database having 1 LUN (LUN1) allocated to it

- LUN1 is mapped to Port 0 of FA0 and Port 1 of FA1 card only

- The array is an active-active array, able to send IOs down all storage ports.

Figure 3 Illustration of Storage Queues

A specific Storage port queue can fill up to the maximum if the port is flooded with IO requests. The host’s HBA will notice this by receiving a queue full (QFULL) signal and will display very poor response times.

Older OSs may experience a blue screen or freeze, while newer OSs will throttle IOs down to a minimum to get around this issue.

ESXi, for example, will reduce the LUN queue depth down to 1. When the queue full messages disappear, ESXi will increase the queue depth a bit at a time until it’s back at the configured value. The overall performance of the SAN may be fine, but the host may experience issues, so to avoid this the Storage Port queue depth setting must also be taken into account.

Following the Storage Best Practices, let’s assume we set

- Maximum queue depth of all Storage Processor FC ports to 1600. (e.g. for EMC VNX, theoretical limit is 2048, performance limit is 1600)

(We are not addressing the Ingress and Egress queues of the SAN switches, which also need to be tweaked; refer to Chad’s excellent blog about the various queues.)

Assumptions

- A Database Workload Generator (Swingbench/SLOB) is used to generate IOPS

- Database has 1 LUN (LUN1) allocated to it

- 1 ESXi server is issuing IOs to LUN1

- LUN1 is mapped to 2 Storage front end ports

- Server has 2 Emulex Single port HBA’s

- Average 10 ms latency between ESXi host and storage array

- VMDK queue / PVSCSI adapter queue increased to maximum (254/1024)

- HBA queue depth is set to maximum allowed (8192)

- Device lun-queue-depth is set to maximum allowed (512)

- Storage ports have their queue depth set to maximum (1600)

- Active-Active Array

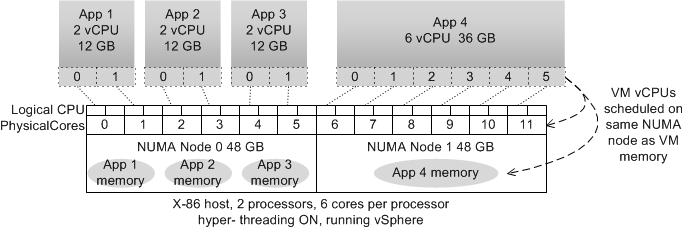

Queue Depth Calculation: A Physical Server perspective

Assuming average 10 ms latency between the host and storage array,

Number of IO commands which can be generated per LUN per sec with single slot queue length (1) = 1000ms / 10ms = 100

Max number of IOPS for a LUN with device queue set to maximum (512) = 100 x 512= 51,200

With another LUN with its lun-queue-depth set to maximum, Max number of IOPS with a 512 slot queue = 100 x 512= 51,200

…..

And so on…
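The per-LUN arithmetic above can be sketched in a few lines of Python. This is only an illustration of the theoretical ceiling under the stated 10 ms latency assumption; the function name `max_iops` is made up for this example and is not part of any VMware or Emulex tool.

```python
def max_iops(latency_ms: float, queue_depth: int) -> float:
    """Theoretical IOPS ceiling for one LUN.

    Each queue slot can complete (1000 / latency_ms) IOs per second,
    and queue_depth slots can be in flight at the same time.
    """
    ios_per_slot_per_sec = 1000.0 / latency_ms
    return ios_per_slot_per_sec * queue_depth


# Single-slot queue at 10 ms average latency
print(max_iops(10, 1))
# LUN queue depth at its maximum (512), same latency
print(max_iops(10, 512))
```

The two calls reproduce the 100 and 51,200 figures from the text. Real workloads will land well below this ceiling, since latency usually rises as the queues fill.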

Figure 4 Illustration of a LUN Queue Depth

Typically, all LUNs are masked to both HBA cards for load balancing and to avoid a Single Point of Failure (SPOF). With 2 Emulex HBA cards in play, the number of LUNs that can theoretically be supported without flooding the HBA queues is:

Number of supported LUNs

= (HBA1 queue depth + HBA2 queue depth) / (lun_queue_depth per lun)

= (8192 + 8192) / 512 = 16384 / 512 = 32 LUNs

Theoretically, the server can push 32 LUNs * 512 queue slots per LUN = 16384 IOs every 10 ms (the average latency). WOW!!!

The DBA may be lulled into thinking that, as long as the HBA and LUN queue depths are not flooded, setting all queues to their maximum limits will maximize throughput and push 51,200 IOPS per LUN * 32 LUNs = 1,638,400 IOPS.
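As a rough sketch, the server-side numbers can be reproduced as follows. The constants come from the assumptions listed earlier; the variable and function names are illustrative only.

```python
# Values taken from the article's assumptions
HBA_QUEUE_DEPTH = 8192   # lpfc_hba_queue_depth at maximum
LUN_QUEUE_DEPTH = 512    # lpfc_lun_queue_depth at maximum
NUM_HBAS = 2
LATENCY_MS = 10


def supported_luns(total_slots: int, lun_queue_depth: int) -> int:
    """Whole LUNs whose full queues fit into the available slots."""
    return total_slots // lun_queue_depth


hba_slots = NUM_HBAS * HBA_QUEUE_DEPTH             # slots across both HBAs
luns = supported_luns(hba_slots, LUN_QUEUE_DEPTH)  # LUNs before the HBA queues flood
in_flight = luns * LUN_QUEUE_DEPTH                 # IOs outstanding per latency window
iops = in_flight * (1000 // LATENCY_MS)            # latency windows per second

print(luns, in_flight, iops)
```

This prints 32 LUNs, 16384 outstanding IOs and 1,638,400 IOPS, which is the view from the server alone, before any storage limits are considered.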

Let’s see if the SAN is capable of supporting the above requirement

Queue Depth Calculation: Perspective from a Storage SAN

As stated above, storage port queues can fill up to the maximum if the port is flooded with a lot of IO requests resulting in performance degradation.

In order to avoid overloading the storage array’s ports, the maximum queue depth of a Storage port is calculated using the below formula:

Port-QD ≥ Host1 (P * L * QD) + Host2 (P * L * QD) + … + Hostn (P * L * QD)

Where,

Port-QD = Maximum queue depth of the array target port

P = Number of initiators per Storage Port (number of ESX hosts, plus all other hosts sharing the same SP ports)

L = Number of LUNs presented to the host via the array target port, i.e. sharing the same paths

QD = LUN queue depth / Execution throttle (maximum number of simultaneous IOs for each LUN on any particular path to the SP)
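The formula can be turned into a quick check, shown here as a minimal sketch. The `HostLoad` class and both function names are hypothetical helpers invented for this example; only P, L, QD and Port-QD come from the formula above.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class HostLoad:
    """Worst-case demand one host places on a single array target port."""
    initiators: int       # P: initiators from this host on the port
    luns: int             # L: LUNs presented to the host via the port
    lun_queue_depth: int  # QD: LUN queue depth / execution throttle

    def outstanding_ios(self) -> int:
        return self.initiators * self.luns * self.lun_queue_depth


def port_demand(hosts: Iterable[HostLoad]) -> int:
    """Right-hand side of the formula: sum of P * L * QD over all hosts."""
    return sum(h.outstanding_ios() for h in hosts)


def port_can_absorb(port_qd: int, hosts: Iterable[HostLoad]) -> bool:
    """True when Port-QD >= total demand, i.e. no QFULL is expected."""
    return port_qd >= port_demand(hosts)


one_lun = [HostLoad(initiators=1, luns=1, lun_queue_depth=512)]
four_luns = [HostLoad(initiators=1, luns=4, lun_queue_depth=512)]

print(port_demand(one_lun), port_can_absorb(1600, one_lun))
print(port_demand(four_luns), port_can_absorb(1600, four_luns))
```

With a port queue depth of 1600, one LUN at queue depth 512 fits comfortably, while four such LUNs on the same port (2048 outstanding IOs) would not.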

Maximum number of LUNs which can be serviced by both FC ports without flooding the FC storage port queues, with LUN queue depth = 512 and heavy IO to the LUNs

= (Port-QD of 1st FA port 0 + Port-QD of 2nd FA port 1) / lun_queue_depth

= (1600 + 1600) / 512 = 6.25 ≈ 6 LUNs

Other initiators are likely to be sharing the same SP ports, so these will also need to have their queue depths limited.

Typically, a SAN will have at least 2 storage FA cards, each with 2 storage ports, which could support a lot of LUNs, so a QFULL situation may not arise that often if proper queue tuning is done.

Given that the server is able to send 16384 IOs every 10 ms while the storage ports have only 3200 slots (both FA ports combined), a QFULL error condition will occur.
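The mismatch between the two perspectives can be sketched numerically. The figures are the ones used in this article; the variable names are illustrative.

```python
# Values taken from the article's assumptions
PORT_QD = 1600           # queue depth per storage FC port
FA_PORTS = 2             # LUN1 is mapped to 2 front-end ports
LUN_QUEUE_DEPTH = 512    # lun-queue-depth at maximum
SERVER_IN_FLIGHT = 32 * LUN_QUEUE_DEPTH  # 32 LUNs with full queues on the server side

storage_slots = PORT_QD * FA_PORTS                     # slots across both FA ports
luns_without_qfull = storage_slots // LUN_QUEUE_DEPTH  # whole LUNs the ports can absorb
excess = SERVER_IN_FLIGHT - storage_slots              # IOs with nowhere to queue
qfull_expected = excess > 0

print(storage_slots, luns_without_qfull, excess, qfull_expected)
```

The ports offer 3200 slots, enough for 6 LUNs at full queue depth, leaving 13184 of the server's 16384 outstanding IOs without a slot.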

From the above calculation

- Number of supported LUNs from an Application perspective (given lun-queue-depth = 512) is 32 LUNs

- Actual number of LUNs which can be supported without flooding the Storage port queues is 6 LUNs

Aah!! This difference arises because there are queue restrictions on the storage end which also need to be taken into account.

The storage admin would have the application owner throttle the device lun-queue-depth down to a smaller number, a practice called Execution Throttling, to avoid flooding the SP storage port queues.
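One way to estimate such a throttled value is to rearrange the Port-QD formula for QD. The sketch below assumes the load is spread evenly across the ports, as the calculation above does; the function `throttled_lun_queue_depth` and its parameters are hypothetical and only illustrate the arithmetic.

```python
from typing import Sequence


def throttled_lun_queue_depth(
    port_qds: Sequence[int],
    num_luns: int,
    num_hosts: int = 1,
    driver_max: int = 512,
) -> int:
    """Largest per-LUN queue depth that keeps the storage ports from flooding.

    Rearranges Port-QD >= P * L * QD into QD <= Port-QD / (P * L),
    summing the port queue depths and capping at the driver maximum.
    """
    total_slots = sum(port_qds)
    per_lun = total_slots // (num_hosts * num_luns)
    return max(1, min(per_lun, driver_max))


ports = [1600, 1600]
print(throttled_lun_queue_depth(ports, num_luns=32))               # the 32 LUNs from the server view
print(throttled_lun_queue_depth(ports, num_luns=6))                # capped at the driver maximum
print(throttled_lun_queue_depth(ports, num_luns=32, num_hosts=2))  # a second host sharing the ports
```

For the 32 LUNs of the server-side calculation, this suggests a lun-queue-depth of about 100 rather than 512, and half that again if a second, equally busy host shares the same ports.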

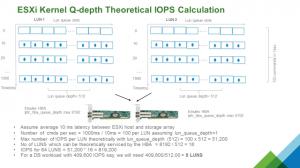

Queue Depth Calculation: Virtualization Perspective

Let’s throw another wrench into the works here.

There is another perspective that needs to be taken into consideration, the virtualization stack.

Both of the above perspectives were from a purely physical stack, without any virtualization flavor.

With the virtualization stack in play, the number of outstanding disk requests to a LUN is determined by 2 parameters in the ESXi stack: Disk.SchedNumReqOutstanding (DSNRO) and the LUN queue depth.

The number of outstanding disk requests to a lun:

- With only 1 VM sending active IOs to a LUN, effective queue depth for that LUN = lpfc_lun_queue_depth

- With > 1 VM sending active IOs to a LUN, effective queue depth = minimum(lpfc_lun_queue_depth, DSNRO)
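The two rules above can be expressed as a small function. This is a sketch of the rule as stated in this article, not of the ESXi scheduler itself; the function name is made up, and the DSNRO value of 32 in the example is an assumed setting chosen for illustration.

```python
def effective_lun_queue_depth(lun_queue_depth: int, dsnro: int, active_vms: int) -> int:
    """Effective queue depth for a LUN, following the two rules above.

    lun_queue_depth: configured LUN queue depth (e.g. lpfc_lun_queue_depth)
    dsnro:           Disk.SchedNumReqOutstanding
    active_vms:      number of VMs sending active IOs to the LUN
    """
    if active_vms <= 1:
        return lun_queue_depth
    return min(lun_queue_depth, dsnro)


# One busy VM: the LUN queue depth alone applies
print(effective_lun_queue_depth(512, dsnro=32, active_vms=1))
# Several busy VMs with an assumed DSNRO of 32: DSNRO becomes the limit
print(effective_lun_queue_depth(512, dsnro=32, active_vms=3))
# DSNRO raised to match the LUN queue depth
print(effective_lun_queue_depth(512, dsnro=512, active_vms=3))
```

The second case shows why a low DSNRO can quietly cap throughput once more than one VM is active on the same LUN.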

The best practice is to set DSNRO to the LUN queue depth to maximize throughput. So, as per the above example, DSNRO would have been set to 512 (the LUN queue depth).

KB 1268 talks about setting the Maximum Outstanding Disk Requests for virtual machines

There are a couple of different throttling mechanisms that ESXi can use, e.g. Adaptive Queueing, SIOC etc. You can read about them on Cormac’s blog.

Lessons learnt

• Take the physical limitations of the Storage FA port queue into account when setting the LUN queue depth for the database LUNs

• Set Disk.SchedNumReqOutstanding (DSNRO) equal to the effective lun-queue-depth

Acknowledgements

Many thanks to Duncan Epping and Cormac Hogan for their valuable time in advising me on this blog post.

Due credit should be given to Vas Mitra for prodding me to write about it 🙂