Synopsis.

Generative AI (gen AI) is taking the enterprise by storm. Sample use cases include content creation, product design and development, and customer service, to name a few. Businesses stand to gain efficiency and productivity, enhanced creativity and innovation, improved customer experience, and reduced development costs.

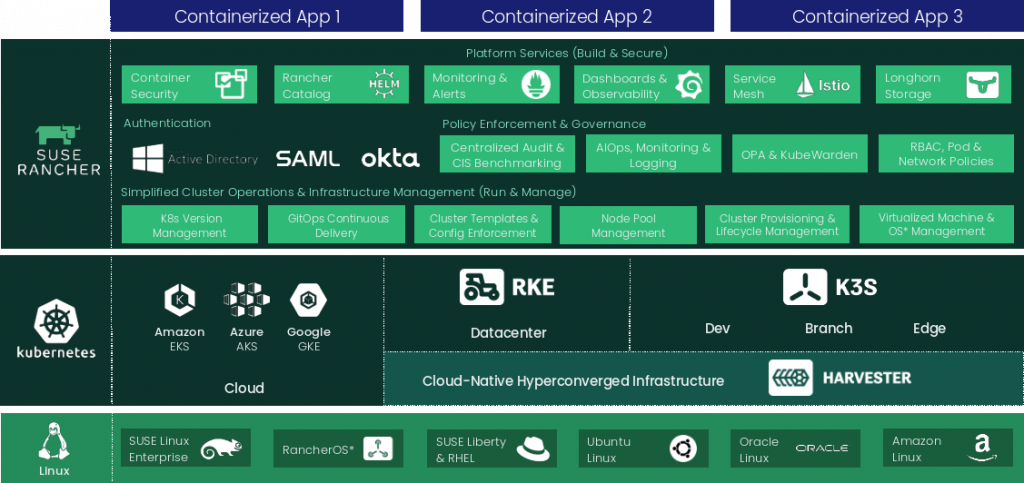

SUSE’s Enterprise Container Management (ECM) products (Rancher Prime, Rancher Kubernetes Engine 2, Longhorn, and NeuVector), combined with NVIDIA NIM inference microservices, enable joint partners and customers alike to accelerate the adoption of gen AI with faster development cycles, efficient resource utilization, and easier scaling.

SUSE Enterprise Container Management Stack.

The rest of this document will focus on:

- Establishing a base definition of generative AI for this document.

- The current state of generative AI usage in the enterprise.

- Needs (requirements) and challenges impacting generative AI deployments.

- How the combination of SUSE’s ECM stack and NVIDIA NIM helps address these needs and challenges.

- The Future – What we see as the next steps with SUSE ECM products and NVIDIA NIM.

What is Generative AI – Base Definition.

“Generative AI is a type of artificial intelligence technology that can produce various types of content, including text, imagery, audio, and synthetic data”[1]. You can think of it as “a machine learning model that is trained to create new data, rather than make a prediction about a specific dataset. A generative AI system is one that learns to generate more objects that look like the data it was trained on”[2].

The Current State of Generative AI in the Enterprise.

Generative AI is growing rapidly within the enterprise, but adoption is still in its early stages.

According to Deloitte in their Q2 Generative AI Report[3], “organizations are prioritizing value creation and demanding tangible results from their generative AI initiatives. This requires them to scale up their generative AI deployments – advancing beyond experimentation, pilots, and proof of concept”. Two of the most critical challenges are building trust (in terms of making generative AI both more trusted and trustworthy) and evolving the workforce (addressing generative AI’s potentially massive impact on worker skills, roles and headcount).

Early use cases involve areas such as:

- Content Creation: Generative AI can create marketing copy, social media posts, product descriptions, or even scripts based on provided prompts and style guidelines.

- Product Design and Development: AI can generate new product ideas, variations, or mockups based on existing data and user preferences.

- Customer Service Chatbots: AI chatbots can handle routine customer inquiries, freeing up human agents for more complex issues.

There are also two more technical areas:

- Data augmentation: Generative AI creates synthetic data to train machine learning models in situations where real data might be scarce or sensitive.

- Drug Discovery and Material Science: Generative models can design new molecules for drugs or materials with specific properties, reducing research and development time.

When we combine the business priorities as outlined in Deloitte’s report with the early use cases, we can come up with the following requirements and challenges.

Requirements:

- Fast development and deployment of generative AI models to accelerate value creation and deliver tangible results.

- Simplified integration that lets enterprises bring AI models into their existing applications.

- Enhanced security and data privacy that allows enterprises to keep their data secure.

- Scalability on demand as needs fluctuate.

- Ability to customize the model with the inclusion of enterprise proprietary data.

Challenges:

- Technical expertise: Implementing and maintaining generative AI models requires specialized skills and resources that may not be available in all enterprises.

- Data Quality and Bias: Generative models are only as good as the data they are trained on. Ensuring high-quality, unbiased data is crucial to avoid generating misleading or offensive outputs.

- Explainability and Trust: Understanding how generative models arrive at their outputs can be challenging. This can raise concerns about trust and transparency, especially in critical applications.

- Security and Control: Mitigating potential security risks associated with generative AI models, such as the creation of deep fakes or malicious content, is an ongoing concern.

Addressing Enterprise Generative AI Requirements with the SUSE ECM Stack and NVIDIA NIM.

Deploying NVIDIA NIM combined with the SUSE Enterprise Container Management stack provides an ideal DevOps mix for both the development and production deployment of generative AI applications.

NVIDIA NIM and NVIDIA AI Enterprise.

NVIDIA NIM is available through the NVIDIA AI Enterprise software platform. These prebuilt containers support a broad spectrum of AI models — from open-source community models to NVIDIA AI Foundation models, as well as custom AI models. NIM microservices are deployed with a single command for easy integration into enterprise-grade AI applications using standard APIs and just a few lines of code. Built on robust foundations, including inference engines like NVIDIA Triton Inference Server, NVIDIA TensorRT, NVIDIA TensorRT-LLM, and PyTorch, NIM is engineered to facilitate seamless AI inferencing at scale, ensuring users can deploy AI applications anywhere with confidence. Whether on premises or in the cloud, NIM is the fastest way to achieve accelerated generative AI inference at scale.
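To give a feel for what "standard APIs and just a few lines of code" can look like, here is a minimal Python sketch of a client calling a NIM large language model microservice. It assumes the microservice exposes an OpenAI-compatible chat completions route at `/v1/chat/completions`; the base URL, port, and model name in the usage note are placeholders rather than values taken from this article, so check them against the documentation of the NIM you actually deploy.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request.

    Assumption: the NIM endpoint follows the OpenAI-compatible
    /v1/chat/completions convention.
    """
    payload = {
        "model": model,  # placeholder; use the model name your NIM reports
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url: str, model: str, prompt: str, timeout: float = 30.0) -> str:
    """Send the request and return the text of the first choice."""
    request = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a NIM container listening locally, a call such as `ask("http://localhost:8000", "example-model", "Summarize our returns policy.")` would return the generated text. Building the request separately from sending it keeps the payload easy to inspect without a running service.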

When it comes to addressing the challenges:

| Challenge | How NVIDIA NIM and NVIDIA AI Enterprise help address or mitigate the challenge |
| --- | --- |
| Technical expertise in the enterprise | Pre-built containers and microservices can simplify deployment for developers without extensive expertise in building and maintaining complex infrastructure. |
| Data quality and bias | By simplifying the process for approved, well-tested models, enterprises can focus their resources on ensuring the quality of the training data used for their models. |
| Explainability and trust | NIM supports versioning of models, allowing users to track changes and ensure that the same model version is used for specific tasks. By managing dependencies and the runtime environment, NIM ensures that models run in a consistent environment, reducing variability in outputs due to external factors. |
| Security and control | NIM supports strong authentication mechanisms and allows the implementation of Role-Based Access Control (RBAC). NIM can log all activities related to model usage. |

SUSE Enterprise Container Management Stack.

The SUSE Enterprise Container Management Stack is well known in the industry. Deploying NVIDIA NIM on the combination of Rancher Prime, Rancher Kubernetes Engine 2 (RKE2), Longhorn, and NeuVector Prime offers several potential benefits:

- Rancher Prime is an enterprise Kubernetes management platform that simplifies the deployment and management of Kubernetes clusters:

  - Centralized management provides a single pane of glass for managing multiple Kubernetes clusters, ensuring consistent configuration and policy enforcement across different environments.

  - It facilitates the scaling of Kubernetes clusters to meet the demands of large-scale AI workloads, such as those that could be run with NVIDIA NIM.

  - Multi-cluster support allows deployment across cloud providers and on-premises environments, providing flexibility and redundancy.

- RKE2 is a lightweight, certified Kubernetes distribution that is optimized for production environments:

  - It offers a streamlined installation and configuration process, making it easier to deploy and manage NIM.

  - It supports out-of-the-box security features such as CIS Benchmark and SELinux (on nodes).

- Longhorn is a cloud-native distributed block storage solution for Kubernetes:

  - It provides highly available, replicated storage for data, such as the data an AI model needs, ensuring data durability and fault tolerance.

  - It scales to meet the storage demands of growing AI workloads, supporting the large datasets typically used by generative AI.

- NeuVector is a Kubernetes-native security platform that provides comprehensive container security:

  - Runtime security monitors running containers for threats and anomalies in AI workloads.

  - Advanced network segmentation and firewall capabilities secure the communication between different components of the infrastructure.

  - Visibility into and control over container activities help maintain compliance with security standards.

  - Vulnerability scanning of container images helps ensure that only secure images are deployed.
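As a rough illustration of how these pieces might meet in a single workload definition, the Python sketch below assembles two Kubernetes manifests: a persistent volume claim backed by Longhorn for the model cache, and a Deployment that requests a GPU for a NIM container. Everything specific here is an assumption made for illustration: the `longhorn` storage class name (the default Longhorn creates), the `nvidia.com/gpu` resource exposed by the NVIDIA device plugin, the container port, the cache mount path, and the image reference. A real deployment would also need registry credentials and an API key secret, which are omitted.

```python
import json


def nim_manifests(name: str, image: str, gpus: int = 1, cache_size: str = "50Gi") -> dict:
    """Return a Kubernetes List with a Longhorn-backed PVC and a GPU Deployment."""
    pvc = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": f"{name}-cache"},
        "spec": {
            "storageClassName": "longhorn",  # assumed default Longhorn storage class
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": cache_size}},
        },
    }
    container = {
        "name": name,
        "image": image,  # hypothetical image reference supplied by the caller
        "ports": [{"containerPort": 8000}],  # assumed NIM HTTP port
        "resources": {"limits": {"nvidia.com/gpu": gpus}},
        # Assumed cache location inside the container; verify for your NIM.
        "volumeMounts": [{"name": "model-cache", "mountPath": "/opt/nim/.cache"}],
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {
                    "containers": [container],
                    "volumes": [{
                        "name": "model-cache",
                        "persistentVolumeClaim": {"claimName": f"{name}-cache"},
                    }],
                },
            },
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [pvc, deployment]}


if __name__ == "__main__":
    manifests = nim_manifests("nim-llm", "registry.example.com/nim/example-llm:latest")
    print(json.dumps(manifests, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output could be applied to an RKE2 cluster managed through Rancher Prime. Keeping the model cache on a replicated Longhorn volume means a rescheduled pod does not have to download the model again.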

When we combine the elements of the SUSE Enterprise Container Management (ECM) Stack with NVIDIA NIM, joint customers stand to benefit from:

- Enhanced Security: With RKE2’s secure configuration, NeuVector’s runtime security, and vulnerability management, the AI environment is well-protected against threats and unauthorized access.

- High Availability and Reliability: Longhorn ensures the storage is highly available and reliable, preventing data loss and downtime.

- Scalability: Rancher Prime and Longhorn provide the scalability needed across clusters should NIM requirements demand that level of scale in the enterprise.

- Simplified Management: Rancher Prime offers a centralized Kubernetes management platform, making it easier to scale clusters and ensure consistent policies and configurations.

- Performance: RKE2 and Longhorn are optimized for high performance, ensuring that NIM runs efficiently and can handle intensive AI tasks.

- Flexibility and Compatibility: The SUSE ECM stack is compatible with Kubernetes, allowing integration with a wide range of tools and services, providing flexibility in deployment options.

In summary, the SUSE ECM stack provides a robust, secure, and scalable infrastructure for NVIDIA NIM. The combined stack enhances the security, manageability, and performance of generative AI deployments, ensuring that NIM can be deployed and operated efficiently in production environments.

The Future – Putting it all together in easy-to-use configurations.

We plan to provide an NVIDIA NIM on SUSE Enterprise Container Management Guide in the near future, documenting how to put the components together to empower customers and partners alike. The document will show how to build the Rancher cluster, install and deploy RKE2, Longhorn, and NeuVector, and deploy NVIDIA AI Enterprise and NIM as workloads. Stay tuned via SUSE blogs or social media for further availability announcements.

[1] https://www.techtarget.com/searchenterpriseai/definition/generative-AI

[2] https://news.mit.edu/2023/explained-generative-ai-1109

[3] https://www2.deloitte.com/content/dam/Deloitte/us/Documents/consulting/us-state-of-gen-ai-report-q2.pdf
