In part 1 of the series, we introduced the solution and the components used. Now we will look at the first use case, which leverages GPUs with Apache Spark 3 and NVIDIA RAPIDS for transaction processing.

NVIDIA RAPIDS:

RAPIDS offers a powerful GPU DataFrame based on Apache Arrow data structures. Arrow specifies a standardized, language-independent, columnar memory format, optimized for data locality, to accelerate analytical processing performance on modern CPUs or GPUs. With the GPU DataFrame, batches of column values from multiple records take advantage of modern GPU designs and accelerate reading, queries, and writing.

See https://developer.nvidia.com/blog/accelerating-apache-spark-3-0-with-gpus-and-rapids/ for more details.
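To make the columnar idea concrete, here is a minimal sketch in plain Python. It does not use Arrow or RAPIDS; the record fields (`item_id`, `quantity`, `price`) and the `to_columns` helper are hypothetical and chosen only for illustration.

```python
from array import array

# Row-oriented layout: each record keeps all of its fields together.
rows = [
    {"item_id": 1, "quantity": 3, "price": 9.99},
    {"item_id": 2, "quantity": 1, "price": 24.50},
    {"item_id": 3, "quantity": 7, "price": 4.25},
]


def to_columns(records):
    """Pivot row records into one contiguous, typed array per column."""
    return {
        "item_id": array("q", (r["item_id"] for r in records)),    # 64-bit integers
        "quantity": array("q", (r["quantity"] for r in records)),  # 64-bit integers
        "price": array("d", (r["price"] for r in records)),        # 64-bit floats
    }


columns = to_columns(rows)

# An aggregate over one column now scans a single contiguous buffer
# instead of touching every field of every record.
total_quantity = sum(columns["quantity"])
```

A columnar engine applies the same operation to a whole batch of values from one column at a time, which is the access pattern that suits wide parallel hardware such as GPUs.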

In this use case, NVIDIA RAPIDS runs with Apache Spark 3 and is validated with a subset of TPC-DS queries. Spark and the other components needed for the solution are deployed, and approximately 1TB of data is generated to run TPC-DS against. A subset of TPC-DS queries is then executed first on CPUs only and then with GPU-based acceleration, and the run times are compared.

The Spark operator for Kubernetes was installed using the steps in the Quick Start Guide.

- The setting --set enableWebhook=true was used during installation of the operator so that volume mounts work in a Spark operator application.

- A helm repository is then added:

- The spark-operator is then installed. The webhook parameter must be set to true for volume mounts to work. Note that in our case, we named the operator sparkoperator-ssgash:

- helm install sparkoperator-ssgash incubator/sparkoperator --namespace spark-operator --create-namespace --set sparkJobNamespace=default --set enableWebhook=true

- The cluster role binding was then created as follows:

- kubectl create clusterrolebinding add-on-cluster-admin --clusterrole=cluster-admin --serviceaccount=kube-system:default

The official Spark operator documentation was used for the deployment, in addition to the Spark Operator user guide.

The Spark history server is used to view the logs of Spark applications that have finished or are still running.

The Spark history server, which stores its logs on an NFS-based PVC, was deployed based on this configuration guide.

We created a custom Docker image, based on nvidia/cuda:10.2-devel-ubuntu18.04, that incorporates the following versions of dependency software:

- CUDA (10.2)

- UCX (1.8.1)

- Spark v3.0.1

- cuDF v0.14

- Spark RAPIDS v2.12

- A GPU resources script that Spark needs in order to discover GPUs and run tasks on them

Apart from these, we installed the Java 1.8 SDK and Python 2 and 3 in the custom Docker image.
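Spark expects a resource discovery script to write a JSON document with a resource name and an array of addresses to standard output. The image in this exercise ships its own script; the sketch below only illustrates the expected output format in Python. The helper names (`parse_nvidia_smi`, `format_gpu_resource`, `discover_gpus`) are hypothetical, and the sketch assumes `nvidia-smi` is on the PATH.

```python
import json
import subprocess


def parse_nvidia_smi(output):
    """Extract GPU indices from `nvidia-smi --query-gpu=index --format=csv,noheader` output."""
    return [line.strip() for line in output.splitlines() if line.strip()]


def format_gpu_resource(indices):
    """Build the JSON string Spark reads from a discovery script's stdout."""
    return json.dumps({"name": "gpu", "addresses": [str(i) for i in indices]})


def discover_gpus():
    """Query the local GPUs and return the JSON description (requires nvidia-smi)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=index", "--format=csv,noheader"],
        check=True,
        capture_output=True,
        text=True,
    )
    return format_gpu_resource(parse_nvidia_smi(result.stdout))
```

On a GPU node, printing the result of `discover_gpus()` gives Spark one address per GPU, which it then assigns to executors and tasks.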

Note that this combination of software was arrived at based on the Dockerfile contents given in the following reference document:

https://docs.mellanox.com/pages/releaseview.action?pageId=25152352

The Dockerfile that we used for running TPC-DS on Kubernetes with the Spark operator is provided in Appendix A.

Build the TPC-DS Scala distribution along with its dependencies as specified in

https://github.com/NVIDIA/spark-rapids/blob/branch-0.3/docs/benchmarks.md

To run TPC/mortgage related applications with spark-rapids, we need this file:

rapids-4-spark-integration-tests_2.12-0.3.0-SNAPSHOT-jar-with-dependencies.jar

To build this application jar, which belongs to the integration tests in spark-rapids, we referred to:

https://docs.mellanox.com/pages/releaseview.action?pageId=28938181 (with a slight variation: we used the branch branch-0.3). To be more specific, search for “To download RAPIDS plugin for Apache Spark” to reach the appropriate section.

In our tpcds related applications, we used the jar file that was built with dependencies.

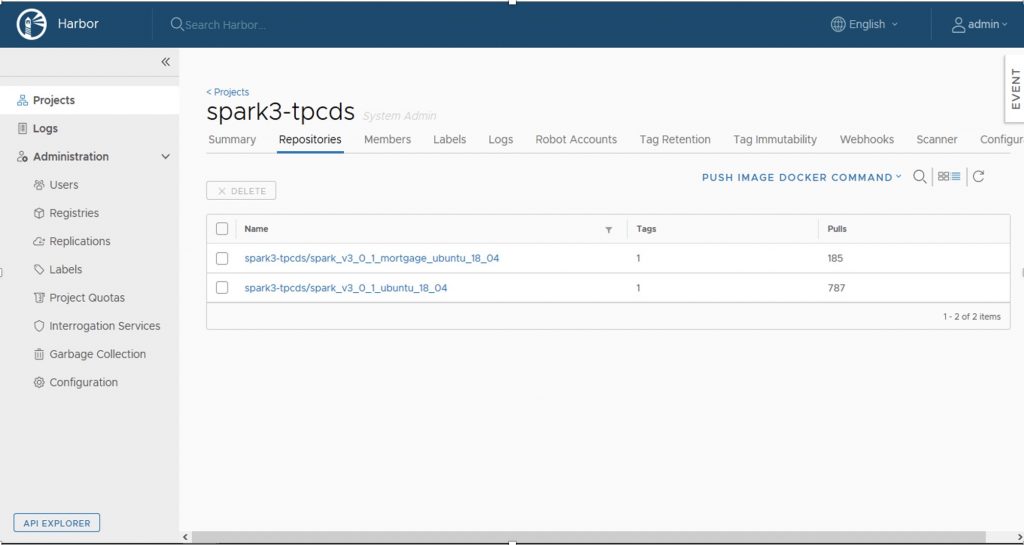

We installed and configured Harbor on a local server so that the custom Docker images can be stored locally.

The Harbor installation was completed by following the directions on the official site.

A screenshot of the harbor dashboard is shown below.

Figure 6: Harbor Repository used as image repository

TPC-DS data was generated following the procedure on the TPC-DS site.

The data was generated using the default delimiter (‘|’) for the CSV files:

./dsdgen -DIR /sparknfs/sparkdata/gen_data/1000scale -SCALE 1000

The above command generates a 1TB dataset for running TPC-DS queries. This 1TB TPC-DS dataset was converted to Parquet format using a Spark application. Note that spark-local-dir must be configured with enough capacity so that the conversion job does not run out of space for intermediate files.
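As a rough illustration only, a pipe-delimited CSV to Parquet conversion can be sketched in PySpark as follows. This is not the application used in this exercise; the function name `csv_to_parquet` and its parameters are hypothetical, and a real conversion would also supply each TPC-DS table's schema rather than relying on default column names.

```python
def csv_to_parquet(spark, src_path, dst_path, delimiter="|"):
    """Read a headerless, delimited text table and write it back as Parquet.

    `spark` is an existing pyspark.sql.SparkSession supplied by the caller.
    """
    reader = spark.read.option("delimiter", delimiter).option("header", "false")
    df = reader.csv(src_path)
    # Overwrite so that a failed or partial earlier run can simply be repeated.
    df.write.mode("overwrite").parquet(dst_path)
    return dst_path
```

The function takes the session as an argument so that the same code works whether the session is created by a Spark operator application or interactively.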

Important note: The converted data directories had a ‘.dat’ suffix that needed to be dropped by renaming them in order for the queries to run later.

The yaml file used to convert to parquet format is given in Appendix B.
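The ‘.dat’ suffix cleanup mentioned in the note above can be scripted. The following is a minimal sketch, assuming the converted tables are directories directly under a single root; the function name `strip_dat_suffix` is hypothetical, and the root path is whatever directory holds the converted data.

```python
from pathlib import Path


def strip_dat_suffix(root):
    """Rename each directory `<table>.dat` directly under `root` to `<table>`."""
    renamed = []
    # sorted() materializes the listing before any rename changes the directory.
    for entry in sorted(Path(root).iterdir()):
        if entry.is_dir() and entry.suffix == ".dat":
            target = entry.with_suffix("")
            if target.exists():
                raise FileExistsError(f"{target} already exists")
            entry.rename(target)
            renamed.append((entry.name, target.name))
    return renamed
```

The function returns the list of renames it performed, which makes it easy to log or review what changed before running the queries.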

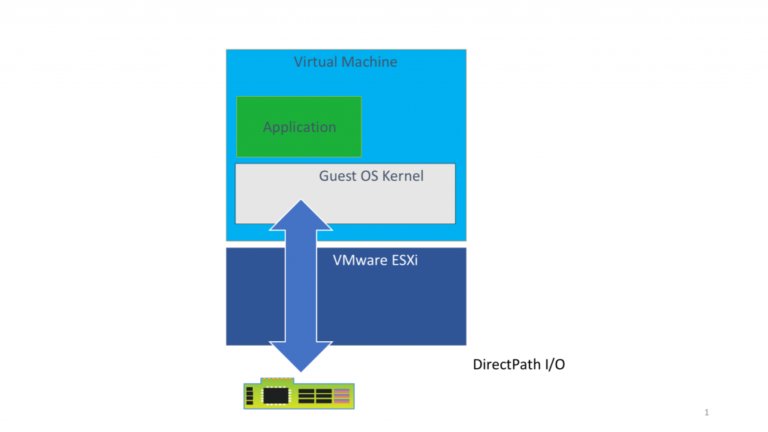

We installed additional software on all Kubernetes nodes, as well as a Kubernetes plugin, to enable the cluster to run GPU applications, namely:

- nvidia-docker installation and configuration (in each node)

- nvidia-device-plugin (on the cluster)

Here’s a screenshot showing nvidia device plugin in a Running state on all the worker nodes:

Figure 7: NVIDIA GPU being used across different systems in the worker nodes

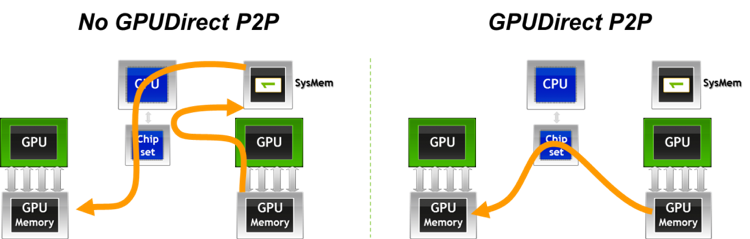

In this exercise we ran a select set of TPC-DS queries (chosen based on run times) in both CPU mode and GPU mode. The GPU mode uses the RAPIDS accelerator and Unified Communication X (UCX). UCX provides an optimized communication layer for Message Passing (MPI), PGAS/OpenSHMEM libraries and RPC/data-centric applications. UCX utilizes high-speed networks for inter-node communication, and shared memory mechanisms for efficient intra-node communication.

In CPU mode we used adaptive query execution (AQE). This is enabled by setting the Spark configuration “spark.sql.adaptive.enabled”: “true”, as described in the referenced article on using AQE to speed up Spark SQL at runtime.

Currently, GPU runs cannot be executed with AQE enabled. In both the CPU and GPU cases, we ran a Spark application that used one driver and 8 executors.
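The difference between the two modes comes down to a handful of Spark settings. The sketch below builds the two configurations as Python dictionaries. The configuration keys are standard Spark and RAPIDS accelerator settings, but the values for the GPU amounts and the discovery script path are assumptions for illustration, not the values used in this exercise, and the UCX shuffle settings are omitted. The helper names `build_spark_conf` and `to_submit_args` are hypothetical.

```python
def build_spark_conf(mode, executors=8):
    """Return Spark settings for a CPU-only or GPU-accelerated run."""
    conf = {"spark.executor.instances": str(executors)}
    if mode == "cpu":
        # CPU runs use adaptive query execution.
        conf["spark.sql.adaptive.enabled"] = "true"
    elif mode == "gpu":
        conf.update({
            # AQE is turned off for GPU runs, as described above.
            "spark.sql.adaptive.enabled": "false",
            "spark.plugins": "com.nvidia.spark.SQLPlugin",
            "spark.rapids.sql.enabled": "true",
            # Assumed values: one GPU per executor, one GPU per task.
            "spark.executor.resource.gpu.amount": "1",
            "spark.task.resource.gpu.amount": "1",
            # Hypothetical path to the GPU discovery script inside the image.
            "spark.executor.resource.gpu.discoveryScript": "/opt/spark/getGpusResources.sh",
        })
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return conf


def to_submit_args(conf):
    """Render the settings as `--conf key=value` arguments."""
    args = []
    for key in sorted(conf):
        args += ["--conf", f"{key}={conf[key]}"]
    return args
```

Keeping both configurations in one function makes it harder for the CPU and GPU runs to drift apart in settings that should stay identical, such as the executor count.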

TPC-DS queries were run using 1TB TPC-DS data that was converted to parquet format.

The comparative runtimes of these queries are shown in the table below:

| TPC-DS Query | GPU Duration | CPU Duration |
| --- | --- | --- |
| q98 | 1.7 min | 1.4 min |
| q73 | 1.2 min | 1.1 min |
| q68 | 1.9 min | 2.1 min |
| q64 | 6.8 min | 22 min |
| q63 | 1.3 min | 1.2 min |
| q55 | 1.3 min | 1.3 min |
| q52 | 1.3 min | 1.4 min |
| q10 | 1.5 min | 1.4 min |
| q7 | 1.6 min | 1.5 min |
| q6 | 1.6 min | 6.8 min |
| q5 | 3.0 min | 4.7 min |
| q4 | 11 min | 13 min |

Figure 5: Runtime comparison of select TPC-DS queries between CPUs and GPUs

The results show that GPUs accelerate performance in many cases for the longer-running queries. The GPU makes a significant improvement for q6 (6.8 min down to 1.6 min, roughly 4.3x) and q64 (22 min down to 6.8 min, roughly 3.2x), and a noticeable improvement for q5 (4.7 min down to 3.0 min) and q4 (13 min down to 11 min). For the remaining queries, which have shorter durations, CPU and GPU run times are nearly the same.
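The speedup factors can be recomputed directly from the table. The short sketch below does this in Python; the durations are copied from the table, and because they are rounded, the resulting factors are approximate. The names `DURATIONS_MIN`, `speedups`, and `rank_by_speedup` are introduced here for illustration.

```python
# (GPU minutes, CPU minutes) per query, copied from the table above.
DURATIONS_MIN = {
    "q98": (1.7, 1.4), "q73": (1.2, 1.1), "q68": (1.9, 2.1), "q64": (6.8, 22.0),
    "q63": (1.3, 1.2), "q55": (1.3, 1.3), "q52": (1.3, 1.4), "q10": (1.5, 1.4),
    "q7": (1.6, 1.5), "q6": (1.6, 6.8), "q5": (3.0, 4.7), "q4": (11.0, 13.0),
}


def speedups(durations):
    """Return CPU time divided by GPU time for each query."""
    return {query: cpu / gpu for query, (gpu, cpu) in durations.items()}


def rank_by_speedup(durations):
    """Return (query, speedup) pairs sorted from largest to smallest speedup."""
    return sorted(speedups(durations).items(), key=lambda kv: kv[1], reverse=True)


for query, factor in rank_by_speedup(DURATIONS_MIN):
    print(f"{query:>4}: {factor:.2f}x")
```

A factor above 1 means the GPU run was faster; a factor below 1 means the CPU run was faster.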

In part 3 of the blog series, we will look at leveraging Apache Spark 3 to run machine learning with NVIDIA XGBoost.