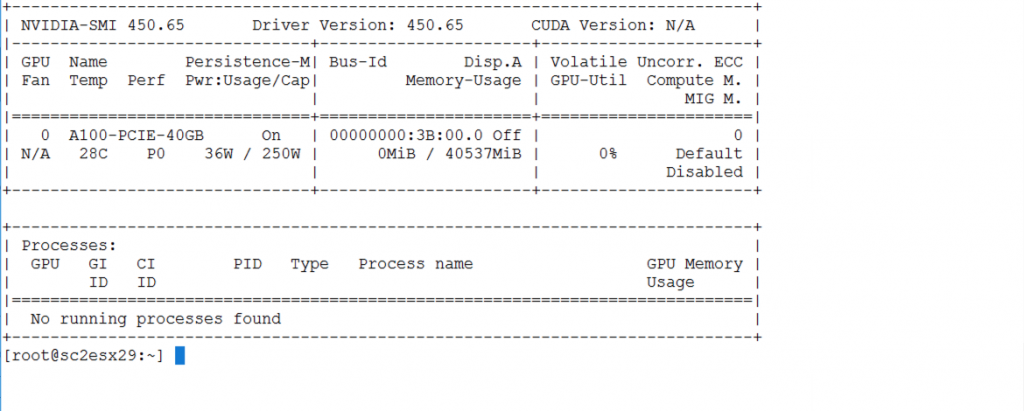

In part 1 of this series on Multi-Instance GPUs (MIG), we saw the concepts in the NVIDIA MIG feature set deployed on vSphere 7 in technical preview. MIG works on the A100 GPU and others from NVIDIA’s Ampere range and it is compatible with CUDA Version 11. In this second article on MIG, we dig a little deeper into the setup of MIG on vSphere and show how they work together. As a brief recap, the value of MIG to the vSphere user is its ability to enforce strict isolation between one vGPU’s share of a physical GPU and another’s on the same host server. This allows the vSphere administrator to offer GPU power in the style of a cloud provider and to maximize the utilization of the physical GPU by packing independent workloads onto it. The vSphere administrator can assure the vGPU consumer, a data scientist or machine learning practitioner, that their portion of the GPU is isolated for their use only. Alternatively, the administrator can dedicate a full GPU to one VM and one user, if the need is there.

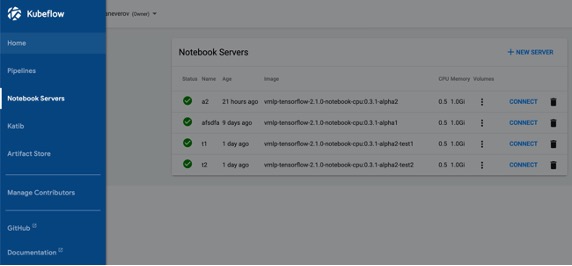

We already understand from part 1 that a GPU Instance represents a slice of a physical GPU comprising memory, a fraction of the overall streaming multiprocessors (SMs) and the hardware pathways to those items, such as the crossbars and L2 cache. As a reminder, we include the outline MIG architecture again here for your reference.

Figure 1: The MIG Architecture

source: The NVIDIA MIG User Guide

Pre-requisites for MIG Enablement

Contents

- 1 Pre-requisites for MIG Enablement

- 2 Enabling the MIG Feature for a GPU on the ESXi Host

- 3 Creating GPU Instances

- 4 Creating Compute Instances

- 5 Assign the vGPU Profile to a VM

- 6 Install the vGPU Guest OS Driver into the VM

- 7 Viewing GPU/Compute Instances from Within a VM

- 8 Using MIG with Containers

- 9 Conclusion

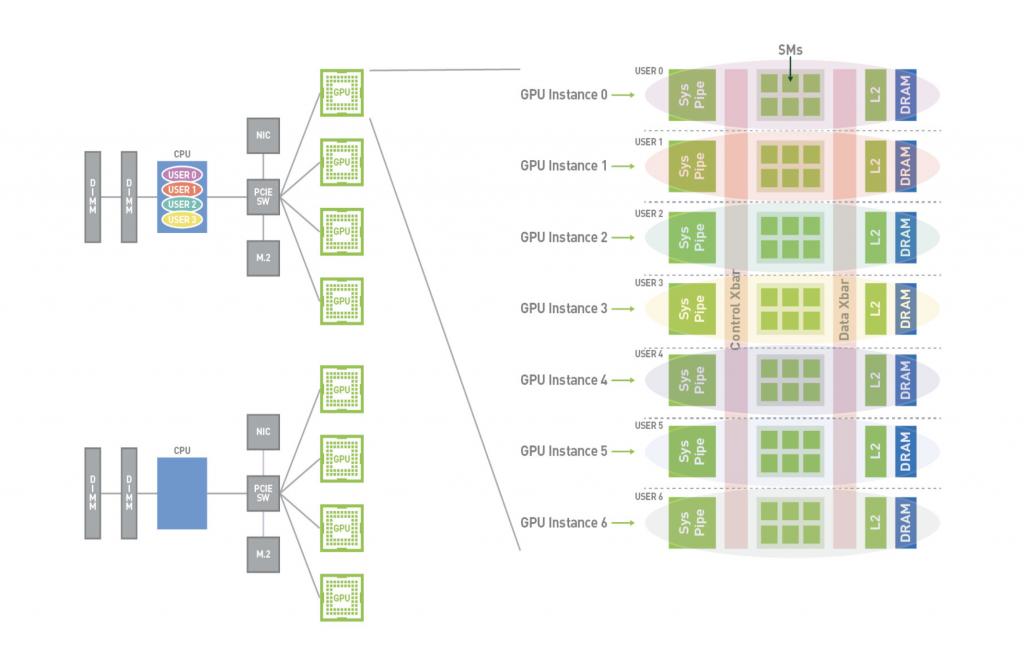

Global SR-IOV

Before we can enable MIG, we first need to enable the “Global SR-IOV” feature at the vSphere host BIOS level through the server management console, or iDRAC. Here is an image of how this is done in the case of a Dell R740 server, using the iDRAC interface. This may be different on your own host servers, depending on your vendor.

Figure 2: Configure SR-IOV Global using the iDRAC user interface on a Dell server.

In the UI above, the important entry to note is the enablement of the “SR-IOV Global” value, seen in the last line. SR-IOV is a pre-requisite for running vGPUs on MIG, but it is not a pre-requisite for MIG in itself. Think of SR-IOV and the vGPU driver as wrappers around a GPU Instance. A GPU Instance is associated with an SR-IOV Virtual Function at VM/vGPU boot-up time.

ESXi Host Graphics Settings

This part of the setup is described in full in section 1.1 of the original vGPU usage article and we won’t repeat it here. It applies in exactly the same way for MIG as it does for pre-MIG vGPU environments (i.e. those that depend on CUDA 10.x).

Enabling the MIG Feature for a GPU on the ESXi Host

By default, MIG is disabled at the host server level. If no NVIDIA ESXi Host Driver (the one that supports vGPUs) has been installed, for example from earlier pre-MIG use, then MIG will by definition not be present. When logged in to the ESXi server, you can determine whether the NVIDIA ESXi Host Driver is present using

esxcli software vib list | grep NVIDIA

This produces the VIB name in the first column of output, such as

NVIDIA-VMware_ESXi_7.0_Host_Driver 440.107-1OEM.700.0.0.15525992 NVIDIA VMwareAccepted 2020-07-27
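The check above can also be scripted. The following is a minimal sketch that pulls the VIB name and version out of the listing and tests whether the version belongs to the 450 driver branch or later. The function names `nvidia_vibs` and `is_mig_capable_version` are hypothetical, and the 450 threshold is an assumption taken from the driver versions mentioned in this article, not an official compatibility rule.

```shell
# List "<vib-name> <version>" for each NVIDIA VIB found in the output of
# `esxcli software vib list`, which is read from standard input.
nvidia_vibs() {
    awk 'tolower($0) ~ /nvidia/ { print $1, $2 }'
}

# Succeed if a VIB version string belongs to driver branch 450 or later.
# Assumption: 450 is the first MIG-capable branch, as described in this article.
is_mig_capable_version() {
    branch=${1%%.*}          # "440.107-1OEM.700..." -> "440"
    [ "$branch" -ge 450 ]
}

# Example on an ESXi host (not executed here):
#   esxcli software vib list | nvidia_vibs
```

With the sample line shown above, `nvidia_vibs` prints the VIB name and the `440.107-1OEM.700.0.0.15525992` version, and `is_mig_capable_version` fails for that version, which indicates a pre-MIG driver.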

If the NVIDIA ESXi Host Driver that is installed in the ESXi kernel dates from a previous pre-MIG use, then you will not see any entry in the nvidia-smi output that mentions MIG. This is expected behavior with the NVIDIA R440.xx series and earlier drivers.

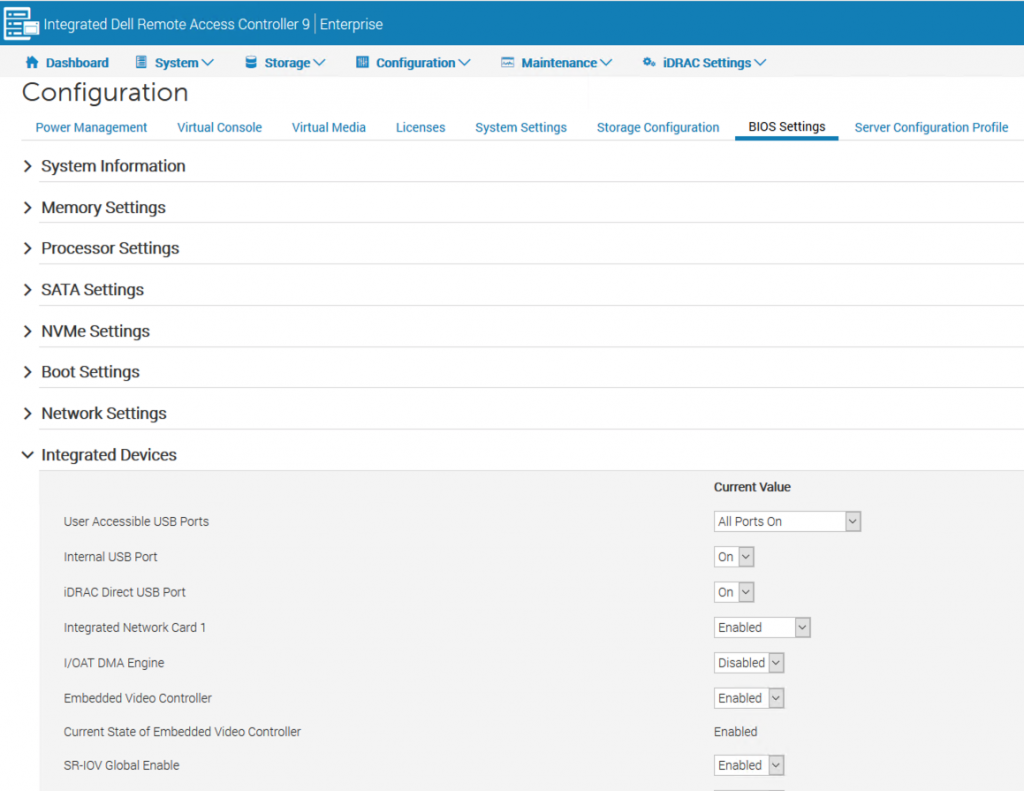

If an NVIDIA ESXi Host driver is currently installed at one of the 450.xx versions, i.e. with CUDA 11 support, then the state of MIG at the host level can be seen using

nvidia-smi

Figure 3: MIG in Disabled Mode – seen on the fourth and seventh rows at the far right side of the nvidia-smi command output

In Figure 3, MIG is shown as Disabled. On the vSphere host, once it is taken into maintenance mode, the appropriate NVIDIA ESXi Host Driver (also called the vGPU Manager) that supports MIG can be installed. The NVIDIA ESXi Host Driver is delivered in a VIB file (a VMware Installable Bundle) that is acquired as part of the vGPU product from NVIDIA. The particular NVIDIA product for compute workloads like machine learning is the Virtual Compute Server or VCS.

Removing an Older ESXi Host Driver VIB

To bring your MIG support up to date, first uninstall any previous NVIDIA ESXi Host Driver that is present in the ESXi kernel on your host. To do that, place your host into maintenance mode using

esxcli system maintenanceMode set --enable=true

then use

esxcli software vib list | grep -i nvidia

to get the VIB name in the first column of the output as we saw above, and remove the VIB using

esxcli software vib remove --vibname=NVIDIA-VMware_ESXi_7.0_Host_Driver

Note: The host server should be in maintenance mode before you attempt to uninstall the VIB. The VIB name string may be different in your own installation, as the versions change over time.

Installing the ESXi Host Driver for MIG Support

Ensure that you have the correct version of the ESXi host driver VIB for current MIG functionality before installing it. At the time of this writing, we installed the ESXi host driver at a technical preview version 450.73 using its VIB file, though this gets updated over time and your own installation may well be at a later version. Installing the VIB is a straightforward process. If your host server is not already in maintenance mode, then use

esxcli system maintenanceMode set --enable=true

Install the VIB using

esxcli software vib install -v <Use the full path name to the NVIDIA vGPU Manager VIB file>

Once the installation has finished, you may take your host out of maintenance mode if desired, though a host server reboot is coming shortly, so leaving it in maintenance mode is a good idea at this point:

esxcli system maintenanceMode set --enable=false

Note the two dashes before the “enable” option. Once the MIG-capable VIB or ESXi Host Driver is installed into the ESXi kernel, you can check that the ESXi host driver is active using

nvidia-smi

This produces output that is similar to that seen in Figure 3. There are two more steps to getting MIG enabled.
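The driver replacement steps in this section can be collected into one small script. This is only a sketch under stated assumptions: the `run` helper and the `replace_host_driver` function are hypothetical names, the script defaults to a dry run that prints the commands instead of executing them, and the VIB name and file path you pass in must come from your own installation.

```shell
# Hypothetical helper: print the command when DRY_RUN=1 (the default here),
# otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "+ $*"
    else
        "$@"
    fi
}

# Replace the NVIDIA ESXi Host Driver VIB.
#   $1 = name of the installed VIB to remove
#   $2 = full path to the new NVIDIA vGPU Manager VIB file
replace_host_driver() {
    old_vib=$1
    new_vib_path=$2
    run esxcli system maintenanceMode set --enable=true
    run esxcli software vib remove --vibname="$old_vib"
    run esxcli software vib install -v "$new_vib_path"
}

# Example (dry run, not executed here; the path is a placeholder):
#   replace_host_driver NVIDIA-VMware_ESXi_7.0_Host_Driver /path/to/new-driver.vib
```

Reviewing the dry-run output before setting `DRY_RUN=0` is a cheap way to catch a wrong VIB name. The host is deliberately left in maintenance mode at the end, because the MIG enablement steps follow.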

Enabling MIG for a GPU

To enable MIG on a particular GPU on the ESXi host server, issue the following command

nvidia-smi -i 0 -mig 1

where the -i option value represents the physical GPU ID on that server, and the -mig 1 value indicates enablement.

Note: If no GPU ID is specified, then MIG mode is applied to all the GPUs on the system.

Following the above setting, we issue a reset on the GPU

nvidia-smi -i 0 --gpu-reset

Note that there are two minus signs before “gpu-reset”. You may see a “pending” message from the reset command above if other processes or VMs are using the GPU. Rebooting your host server in that case will cause the MIG changes to take effect. Once the server is back up, and still in maintenance mode, issue the command

nvidia-smi

and you should see that MIG is now enabled:

Figure 4: nvidia-smi output showing that MIG is Enabled (lines 4 and 7 of the text, on the right side)

Take the host server out of maintenance mode to allow VMs to be populated onto it.
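If you prefer to check the MIG mode from a script rather than reading the full nvidia-smi table, the following sketch classifies the current and pending modes. It assumes that the nvidia-smi on your host accepts the `mig.mode.current` and `mig.mode.pending` query fields and prints them as a comma-separated pair. The function name `mig_status` is hypothetical.

```shell
# Classify one "current, pending" MIG mode line read from standard input.
# Prints: enabled, disabled, pending (a GPU reset or host reboot is still
# needed), or unsupported (for example when both fields read "[N/A]").
mig_status() {
    awk -F', *' '{
        if ($1 != $2)              print "pending"
        else if ($1 == "Enabled")  print "enabled"
        else if ($1 == "Disabled") print "disabled"
        else                       print "unsupported"
    }'
}

# Example on the ESXi host (not executed here):
#   nvidia-smi -i 0 --query-gpu=mig.mode.current,mig.mode.pending \
#       --format=csv,noheader | mig_status
```

A result of `pending` corresponds to the case described above, where the change only takes effect after the GPU reset succeeds or the host is rebooted.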

Creating GPU Instances

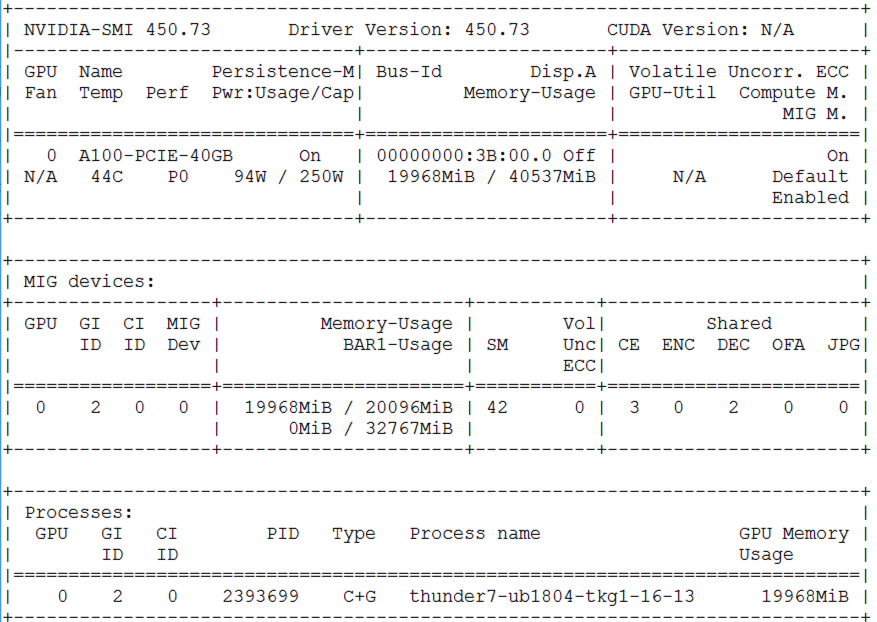

We learned in part 1 that a GPU Instance in MIG is a slice of the overall physical GPU memory and its SMs, as well as the access paths to those items, giving isolation of memory and scheduling to that slice. Before a user can apply a vGPU profile to a VM in the vSphere Client, to use the GPU Instance, the appropriate GPU Instance must be created on the server. We list the set of allowed GPU Instance profiles using the command

nvidia-smi mig -lgip

At this point, there are no GPU Instances created, so “Instances Free” is zero for each profile.

You will also note that Peer-to-Peer (P2P) communication between GPUs has “No” for all profiles. This means that Peer-to-Peer communication in both the NVLink and PCIe forms is not allowed with MIG-backed vGPUs, as of this writing.

Figure 5: MIG GPU Instance profiles

As an administrator, we create a GPU Instance using the command

nvidia-smi mig -i 0 -cgi 9

In that example, we choose GPU ID zero (-i) on which we create a GPU Instance using profile ID 9, i.e. the “3g.20gb” one. That profile ID is shown in the third column from the left in Figure 5.

We can alternatively create two GPU Instances using that same profile on GPU ID 0, using comma-separated profile IDs:

nvidia-smi mig -i 0 -cgi 9,9

Those two GPU Instances occupy the entire 40 GB of frame-buffer memory and together they take up 6 of the 7 fractions of the total SMs on the A100 GPU, so we cannot create further GPU Instances on that physical GPU.
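The capacity reasoning above can be sketched as simple arithmetic. This is a minimal sketch, assuming that a profile name such as “3g.20gb” encodes the number of SM fractions (compute slices) and the memory in GB, and assuming the A100 totals quoted in this article (7 compute slices, 40 GB). The function name `mig_fits` is hypothetical, and the check ignores the placement rules that the driver also enforces.

```shell
# Sum the compute slices and memory of the given GPU Instance profile names
# and succeed only if they fit within 7 slices and 40 GB (A100 40 GB totals).
# Simplified accounting: real placement constraints are not modelled.
mig_fits() {
    total_slices=0
    total_gb=0
    for profile in "$@"; do
        slices=${profile%%g.*}   # "3g.20gb" -> "3"
        gb=${profile#*g.}        # "3g.20gb" -> "20gb"
        gb=${gb%gb}              # "20gb"    -> "20"
        total_slices=$((total_slices + slices))
        total_gb=$((total_gb + gb))
    done
    echo "compute slices: $total_slices/7, memory: $total_gb/40 GB"
    [ "$total_slices" -le 7 ] && [ "$total_gb" -le 40 ]
}

# Example:
#   mig_fits 3g.20gb 3g.20gb          # fits: 6 slices, 40 GB
#   mig_fits 3g.20gb 3g.20gb 1g.5gb   # fails: memory would exceed 40 GB
```

For the two “3g.20gb” instances the memory is the limiting resource: one compute slice remains, but no frame-buffer memory is left to back another GPU Instance.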

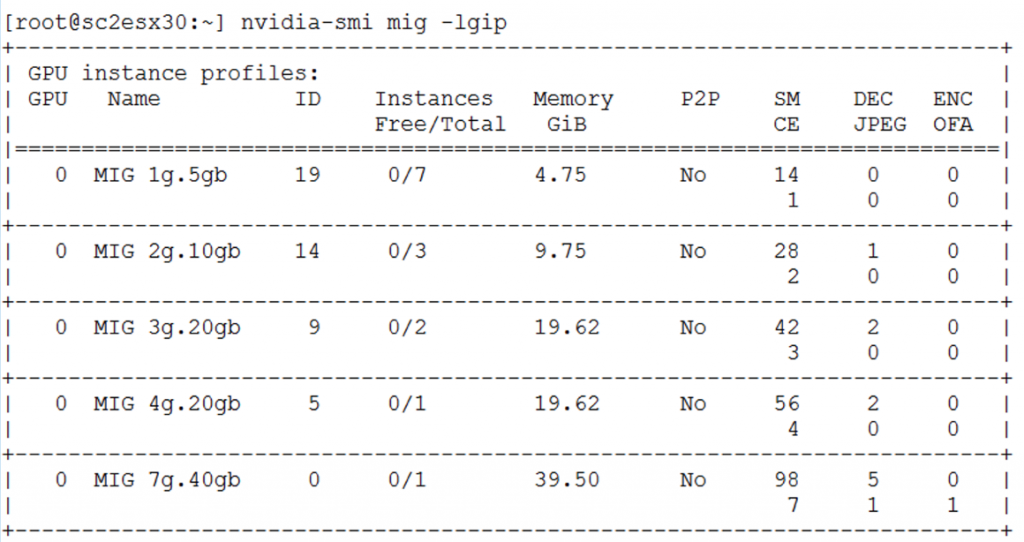

To list the two new GPU Instances, we use

nvidia-smi mig -lgi

Figure 6: The GPU Instances created with their Profile ID, Instance ID and their Placement on the Physical GPU

Creating Compute Instances

We can create one or more Compute Instances within a GPU Instance. A Compute Instance identifies a subset of the SMs owned by the GPU Instance that are ganged together behind a compute front-end. This gives us execution isolation for one container from another, for example – or one process from another. Those containers may execute in parallel on their own Compute Instances (i.e. their own set of SMs). Compute Instances within a particular GPU Instance all share that GPU Instance’s memory.

In the typical case, we can create a Compute Instance that spans the whole of the GPU Instance’s resources, though doing that is not required. If the guest OS of a VM is aware of MIG constructs, and has appropriate permissions, then it can re-configure the Compute Instances within a GPU Instance. It would do that to allocate particular Compute Instances to certain containers, for example. You can find examples of vGPU clients, such as VMs, doing that in the NVIDIA MIG User Guide.

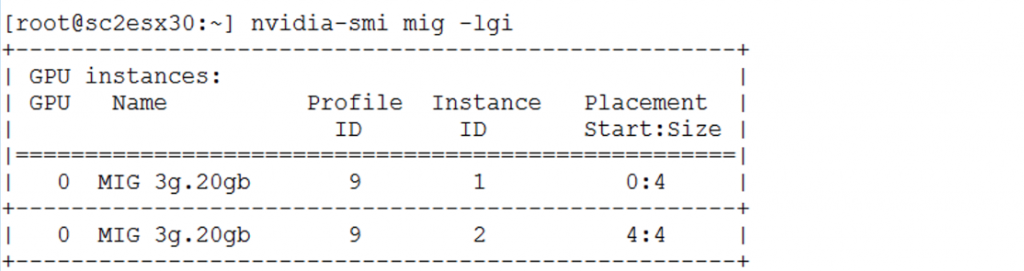

We use a very similar procedure to create a Compute Instance as we did for the GPU Instances. First of all, we list the available Compute Instance Profiles:

nvidia-smi mig -lcip

Figure 7: The MIG Compute Instance Profiles as seen at the ESXi host level

Notice that the Compute Instance profiles shown are compatible with the GPU Instances that we created – they have the same “3g.20gb” in their names. Once we have chosen an appropriate Compute Instance Profile to suit our compute requirements, we pick an existing GPU Instance with ID=1, and create the Compute Instance on it

nvidia-smi mig -gi 1 -cci 2

For the second GPU Instance that we created above, with instance ID=2, we issue a similar Compute Instance creation command

nvidia-smi mig -gi 2 -cci 2

where -cci 2 in these examples refers to the numbered Compute Instance Profile ID that we are applying. The asterisk by the Compute Instance Profile ID in Figure 7 indicates that this profile occupies the full GPU Instance and it is the default one. The other Compute Instance profiles, those with names beginning with “1c” or “2c”, consume a part of their GPU Instance’s SMs, giving a finer level of control over execution of code within the Compute Instance’s boundaries.
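To make the profile naming concrete, here is a small sketch that reports what share of its GPU Instance a Compute Instance profile uses. It assumes the naming convention in which a partial profile is written like “1c.3g.20gb” and a full one like “3g.20gb”. Check the names in your own `nvidia-smi mig -lcip` output, as this format is an assumption here. The function name `ci_share` is hypothetical.

```shell
# Report how many of the GPU Instance's compute slices a Compute Instance
# profile uses, based purely on its name.
ci_share() {
    name=$1
    case $name in
        *c.*g.*)                 # partial profile, e.g. "1c.3g.20gb"
            ci=${name%%c.*}      # "1c.3g.20gb" -> "1"
            rest=${name#*c.}     # "1c.3g.20gb" -> "3g.20gb"
            gi=${rest%%g.*}      # "3g.20gb"    -> "3"
            ;;
        *g.*)                    # full profile, e.g. "3g.20gb"
            gi=${name%%g.*}
            ci=$gi
            ;;
        *)
            echo "unrecognized profile name: $name" >&2
            return 1
            ;;
    esac
    echo "$ci of $gi compute slices"
}

# Example:
#   ci_share 1c.3g.20gb   # one third of the GPU Instance's compute slices
#   ci_share 3g.20gb      # the whole GPU Instance (the default profile)
```

In both cases the Compute Instance still shares the full 20 GB of its GPU Instance’s memory with any sibling Compute Instances.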

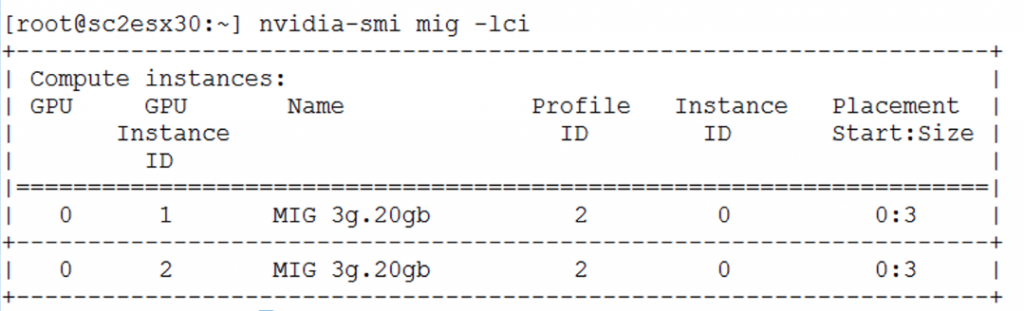

Having done that, we now see our two new Compute Instances, differentiated from each other by their GPU Instance ID.

Figure 8: Two Compute Instances that occupy the separate GPU Instances 1 and 2 fully on a single physical GPU

Assign the vGPU Profile to a VM

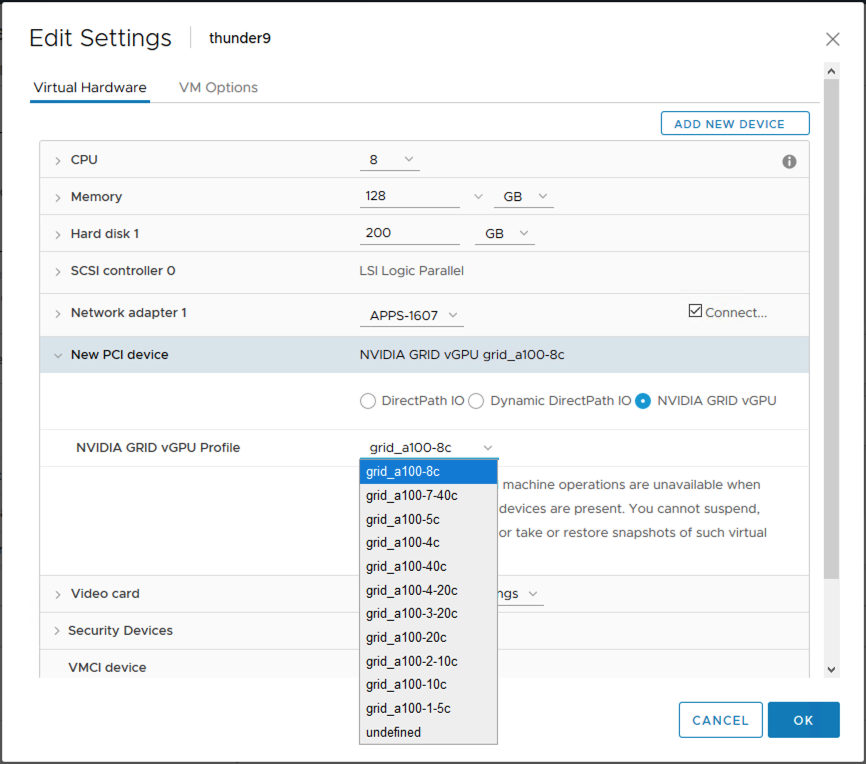

The next step is the assignment of the vGPU profile to the VM within the vSphere Client, as we saw in Part 1. That vGPU profile choice associates the GPU Instance we created with the VM – and enables it to use that particular slice of the GPU hardware.

The dialog for that assignment is seen here. In “Edit Settings”, we click “Add New Device”, choose the NVIDIA GRID vGPU PCI device type and pick the profile.

Figure 9: Assigning a vGPU profile to a VM in the vSphere Client

In our example, because we created our GPU Instances with the “MIG 3g.20gb” profile, the applicable vGPU profile for the VM from the list above is “grid_a100-3-20c”, as that maps directly to the GPU Instance type. Following this vGPU profile assignment, the association between the GPU Instance and an SR-IOV Virtual Function (VF) occurs at the VM guest OS boot time, when the vGPU is also started.
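The mapping from a GPU Instance profile to a vGPU profile name can be sketched as a string transformation. This is an assumption inferred from the single example in this article (“MIG 3g.20gb” maps to “grid_a100-3-20c”), so always confirm the result against the list offered in the vSphere Client. The function name `vgpu_profile_for` is hypothetical.

```shell
# Derive the expected A100 vGPU profile name from a MIG GPU Instance profile.
# Accepts "MIG 3g.20gb" or "3g.20gb". The naming pattern is an assumption
# based on the one mapping shown in this article.
vgpu_profile_for() {
    mig=${1#"MIG "}          # drop an optional "MIG " prefix
    slices=${mig%%g.*}       # "3g.20gb" -> "3"
    mem=${mig#*g.}           # "3g.20gb" -> "20gb"
    mem=${mem%gb}            # "20gb"    -> "20"
    echo "grid_a100-${slices}-${mem}c"
}

# Example:
#   vgpu_profile_for "MIG 3g.20gb"
```

A helper like this is mainly useful when scripting VM configuration for many hosts, where picking a vGPU profile that does not match any created GPU Instance is an easy mistake to make.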

We can assign that same vGPU profile to a second VM on this server, and the second GPU Instance that we created will be associated with that VM.

Note: With MIG, we can have only one vGPU profile on a VM. For that reason, multiple physical GPUs associated with a VM are not allowed when MIG is on.

Install the vGPU Guest OS Driver into the VM

This installation step is done in exactly the same way we did it for pre-MIG use of vGPUs on VMs. Rather than repeat that process here, we give the reference to the blog article that describes it in more detail. We first ensure that the vGPU guest OS driver is from the same family of drivers as the ESXi host driver we installed using the VIB earlier. We used the NVIDIA vGPU guest OS driver technical preview version 450.63 for this testing work in the VMware lab. Your version may well be different to this one, as newer releases appear over time.

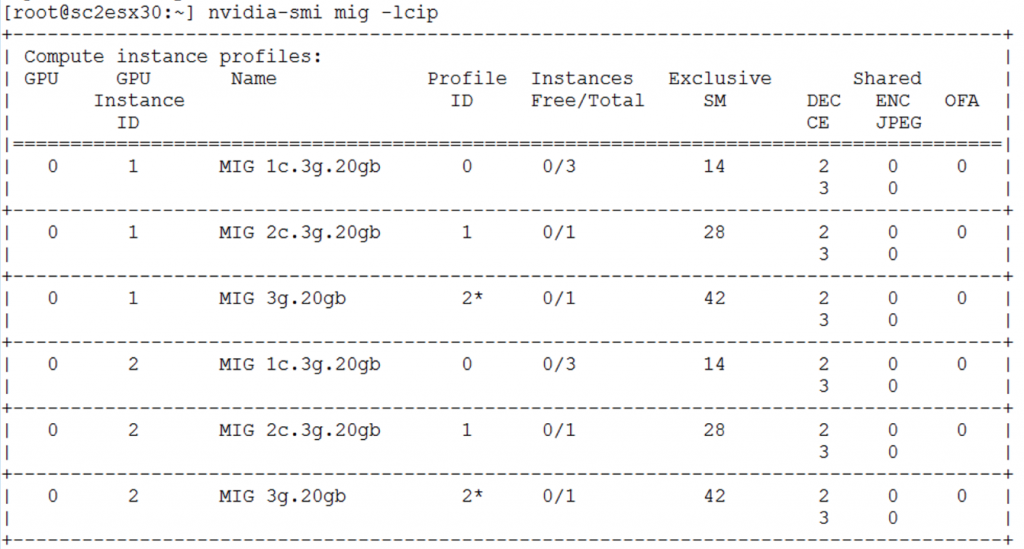

Viewing GPU/Compute Instances from Within a VM

So far, we have created and examined the MIG GPU Instances and Compute Instances at the ESXi host level. We can also view a VM’s own GIs and CIs, and create CIs, from within that VM, provided we have superuser (or system administrator) permissions there.

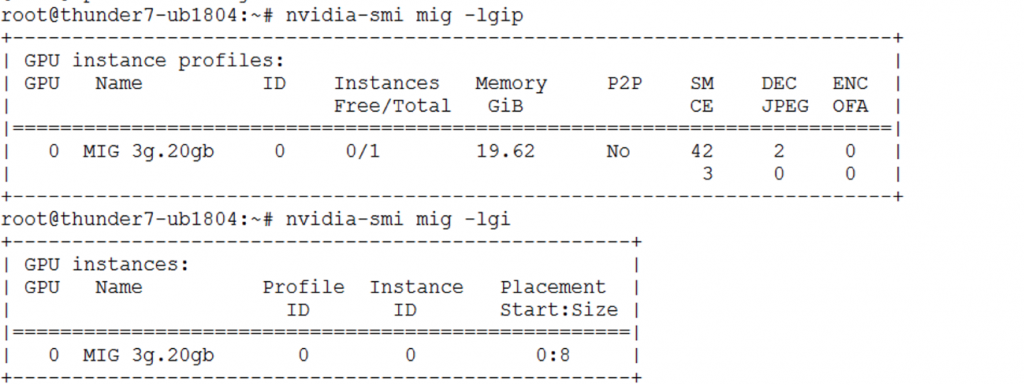

Here is the view of the applicable GPU Instance profiles and GPU Instances from within the Ubuntu 18.04 Linux guest OS of a VM on the host server:

Figure 10: The GPU Instance Profiles and GPU Instance as seen from within a VM

Note that the VM has visibility to only one GPU Instance, although two GPU Instances exist at the ESXi host level. When the VM was assigned its vGPU profile, it got access to that one GPU Instance.

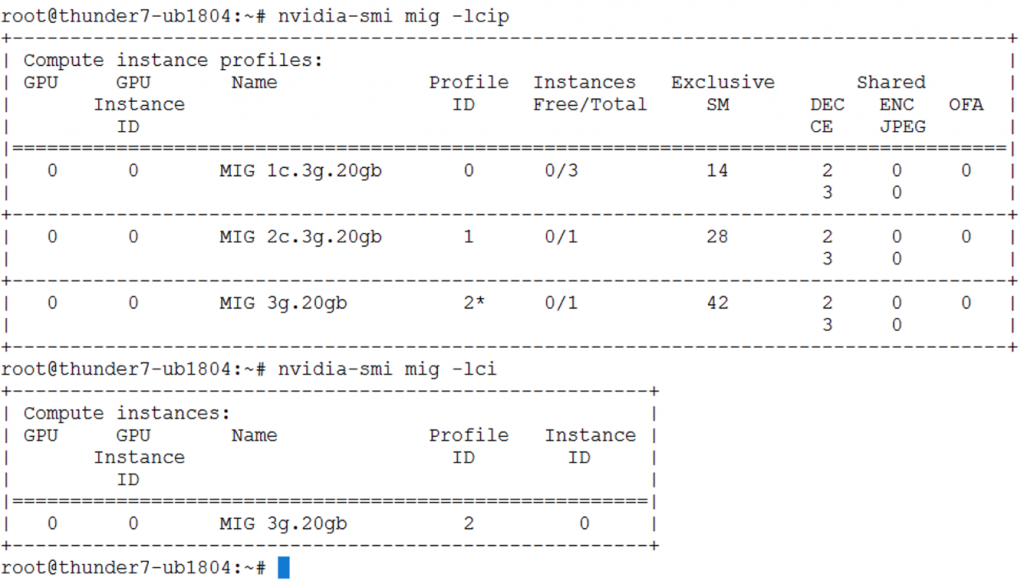

Finally, within the same VM, we list the Compute Instance Profiles and Compute Instances that are visible in the Linux guest OS of the VM.

Figure 11: The Compute Instance profiles and Compute Instance seen from within a VM

Again here, only one Compute Instance is visible to this VM, since that Compute Instance is the sole part of the GPU Instance that this VM owns.

Using MIG with Containers

With our vGPU profile, GPU Instances and Compute Instances in place, we are ready to start using the VMs to do some compute-intensive Machine Learning work. If you have the NVIDIA Container Toolkit installed in your VM, you can try executing a Docker container to test out your setup:

sudo docker run --runtime=nvidia -e NVIDIA_VISIBLE_DEVICES=MIG-GPU-786035d5-1e85-11b2-9fec-ac9c9a792daf/0/0 nvidia/cuda nvidia-smi

You will need to substitute your own Compute Instance device name in the NVIDIA_VISIBLE_DEVICES parameter above. You can get that device name using

nvidia-smi -L

example output:

GPU 0: GRID A100-3-20C (UUID: GPU-786035d5-1e85-11b2-9fec-ac9c9a792daf)

MIG 3g.20gb Device 0: (UUID: MIG-GPU-786035d5-1e85-11b2-9fec-ac9c9a792daf/0/0)
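Rather than copying the device name by hand, you can extract it from the listing. The sketch below assumes the `nvidia-smi -L` output format shown in the example above. The function name `mig_device_ids` is hypothetical.

```shell
# Print the UUID of every MIG device found in `nvidia-smi -L` output, which is
# read from standard input. Lines for whole GPUs (UUID: GPU-...) are skipped.
mig_device_ids() {
    sed -n 's/.*(UUID: \(MIG-[^)]*\)).*/\1/p'
}

# Example inside the VM (not executed here):
#   dev=$(nvidia-smi -L | mig_device_ids | head -n 1)
#   sudo docker run --runtime=nvidia -e NVIDIA_VISIBLE_DEVICES="$dev" \
#       nvidia/cuda nvidia-smi
```

Taking the first entry with `head -n 1` is sufficient here because the VM sees only one Compute Instance. With several Compute Instances you would choose the entry that matches the container’s intended slice.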

More detail on using these components of MIG for Machine Learning job execution work is to be found in the NVIDIA MIG User Guide.

Conclusion

Multi-Instance GPU, or MIG, is a new feature within the vGPU driver set from NVIDIA at R450 and is supported with CUDA version 11. We tested MIG in technical preview mode on VMware vSphere 7 in the VMware labs, and these tests were highly successful. MIG allows the user to specify, at a fine-grained level, the slice of the physical GPU that is allocated to a VM for its work, in terms of streaming multiprocessors, GPU memory size and hardware access paths, using a vGPU profile. This scheme allows sharing of the physical GPU at a much finer level of control than was available earlier. The A100 GPU from NVIDIA is a great match for virtualization on vSphere. The MIG features allow GPU utilization to be optimized by packing more workloads onto the device. This is ideal for those providing GPU power in a cloud provider style to their users. NVIDIA has also shown in its own tests that the A100 GPU produces excellent high-end performance for machine learning workloads, at the top of the range.