In part 1 of this series, we introduced the challenges of GPU usage and the features and components that can serve as the building blocks for GPU as a service. In this part, we look at how VMware Cloud Foundation components can be assembled to provide GPUs as a service to your end users.

Common GPU Use Cases:


Table 1: Common GPU use cases

Cloud Infrastructure Components Leveraged for Machine Learning Infrastructure

VMware Tanzu Kubernetes Grid (TKG)

VMware Tanzu Kubernetes Grid is a container services solution that enables Kubernetes to operate in multi-cloud environments. VMware TKG simplifies the deployment and management of Kubernetes clusters with Day 1 and Day 2 operations support. VMware TKG manages container deployment from the application layer all the way to the infrastructure layer, according to the requirements. VMware TKG supports high availability, autoscaling, health checks, self-repair of underlying VMs, and rolling upgrades for the Kubernetes clusters.

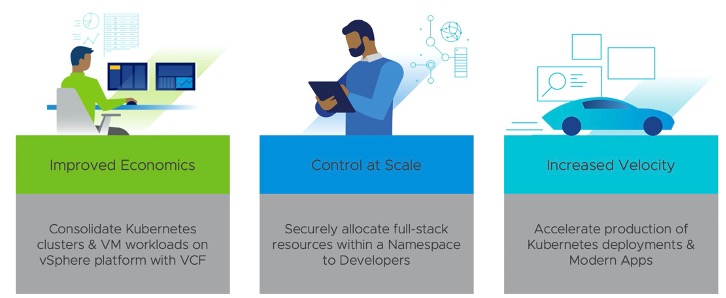

Figure 6: Tanzu Kubernetes Grid Benefits

VMware Cloud Foundation with SDDC Manager

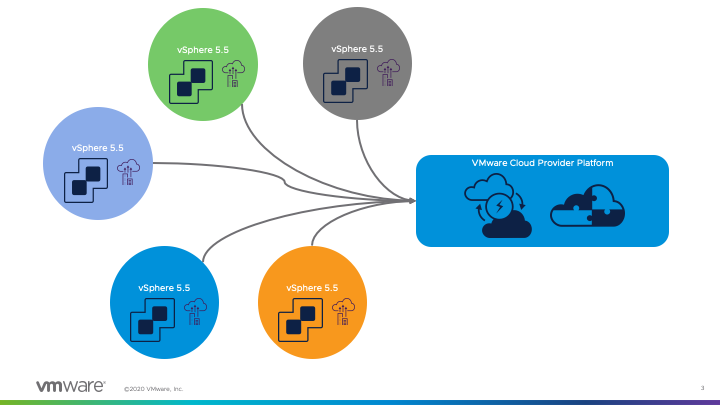

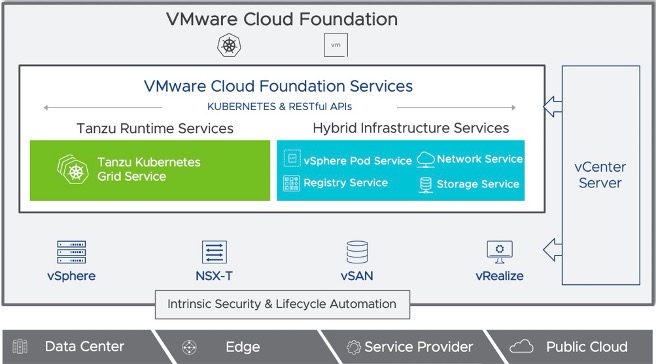

VMware Cloud Foundation is a unified SDDC platform that brings together a hypervisor platform and software-defined services for compute, storage, networking, security, and network virtualization into an integrated stack whose resources are managed through a single administrative tool. VMware Cloud Foundation provides an easy path to hybrid cloud through a simple, security-enabled, and agile cloud infrastructure, both on premises and in as-a-service public cloud environments. VMware SDDC Manager manages the start-up of the Cloud Foundation system. It enables the user to create and manage workload domains and to perform lifecycle management that keeps the software components up to date. SDDC Manager also monitors the logical and physical resources of VMware Cloud Foundation.

Figure 7: VMware Cloud Foundation with Tanzu Runtime and Hybrid Infrastructure services

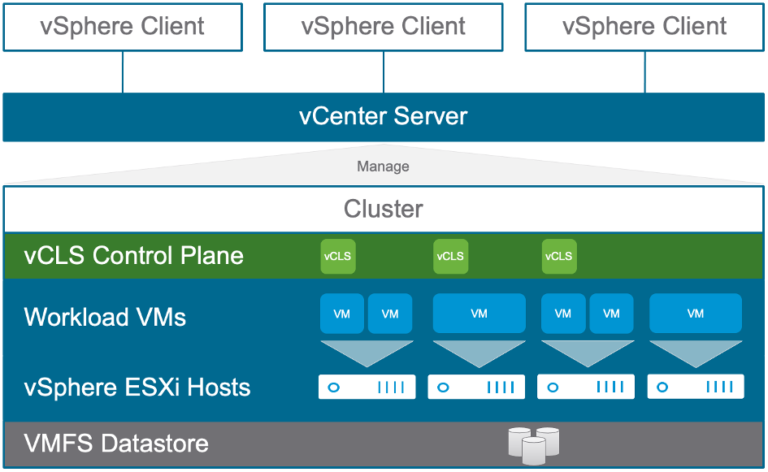

VMware vSphere

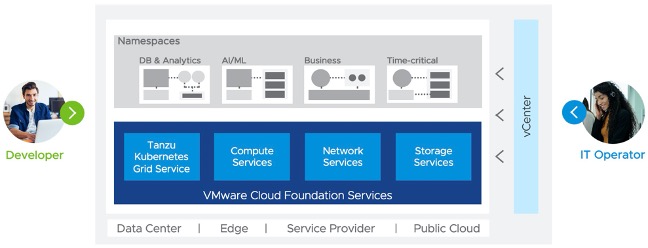

VMware vSphere extends virtualization to storage and network services and adds automated, policy-based provisioning and management. As the foundation for VMware’s complete SDDC platform, vSphere is the starting point for your virtualization infrastructure, providing wide-ranging support for the latest hardware and accelerators used for machine learning. VMware vSphere with Kubernetes (formerly called “Project Pacific”) empowers IT operators and application developers to accelerate innovation by converging Kubernetes, containers, and VMs into VMware’s vSphere platform.

VMware has leveraged Kubernetes to rearchitect vSphere and extend its capabilities to all modern and traditional applications.

vSphere with Kubernetes innovations:

- Unite vSphere and Kubernetes by embedding Kubernetes into the control plane of vSphere, unifying control of compute, network, and storage resources. Converge VMs and containers using the new native container service, which creates high-performing, secure, and easy-to-consume containers.

- App-focused management with app-level control for applying policies, quotas, and role-based access for developers. Ability to apply vSphere features (HA, vMotion, DRS) at the app level and to containers. Unified visibility in vCenter for Kubernetes clusters, containers, and existing VMs.

- Enable Dev & IT Ops collaboration. Developers use Kubernetes APIs to access the datacenter infrastructure (compute, storage, and networking). IT operators use vSphere tools to deliver Kubernetes clusters to developers. Dev and Ops share a consistent view through Kubernetes constructs in vSphere.

- Enterprises can expect to get improved economics due to the convergence of vSphere, Kubernetes and containers. Operators get control at scale by focusing on managing apps versus managing VMs. Developers & operators collaborate to gain increased velocity due to familiar tools (vSphere tools for Operators and Kubernetes service for Developers).

Figure 8: vSphere 7 with Kubernetes innovations

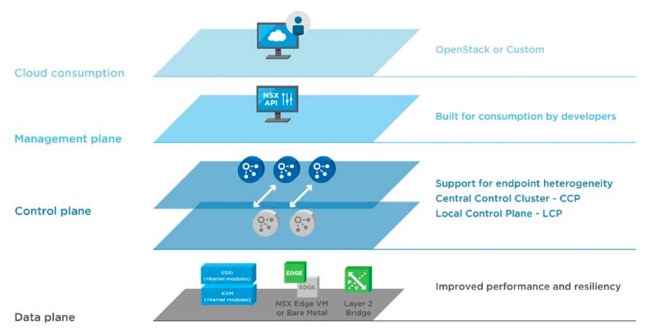

VMware NSX-T

VMware NSX-T provides an agile software-defined infrastructure to build cloud-native application environments. NSX-T is focused on providing networking, security, automation, and operational simplicity for emerging application frameworks and architectures that have heterogeneous endpoint environments and technology stacks. NSX-T supports cloud-native applications, bare metal workloads, multi-hypervisor environments, public clouds, and multiple clouds. NSX-T is designed for management, operation, and consumption by development organizations. NSX-T Data Center allows IT and development teams to select the technologies best suited for their applications.

Figure 9: NSX-T Components

Solution Overview

This reference architecture presents a solution that combines all of the virtualization features above to build an optimal machine learning infrastructure.

The reference architecture consists of the components shown in Figure 1.

- Choice of different types of servers and hardware

- VMware Cloud Foundation with

-

-

- Traditional Compute

- Kubernetes with TKG

- Aggregated GPU Clusters

- Dedicated Workload Domain

- VMWare NSX-T network virtualization which enables software defined ne

-

- GPU as a Service

-

-

- GPU based compute acceleration

- RDMA based network connectivity

- vSphere Bitfusion for remote full or partial GPU access

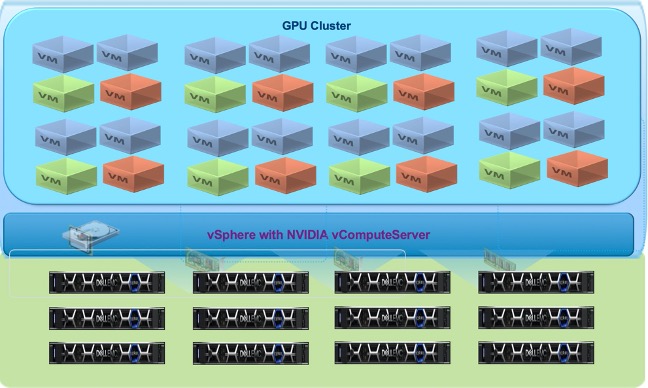

- NVIDIA vComputeServer

-

VMware Cloud Foundation deployed with VMware TKG offers a simple solution for securing and supporting containers within existing environments that already support VMs based on VMware vSphere, without requiring any retooling or re-architecting of the network.

This comprehensive solution can help enterprises virtualize and operationalize GPU-based acceleration for machine learning and other HPC applications to serve the use cases their users require. The hybrid cloud capability provides flexibility in workload placement as well as business agility.

Solution Components

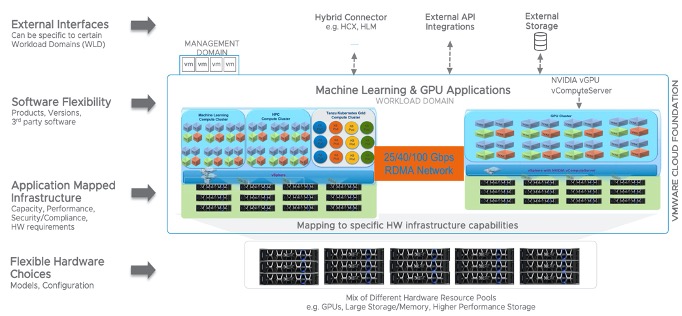

The solution is layered on top of the VMware Cloud Foundation platform and it provides the following levels of technical flexibility:

- Aggregated GPU resources in dedicated clusters

- CPU clusters

- RDMA based high-speed networking

GPU Clusters

Requirements

All GPU resources are consolidated into the GPU cluster. Start with a requirements analysis of all potential GPU workloads in the organization. Analyzing these use cases and their potential need for GPUs provides an estimate of the type of GPUs and the number of GPUs per server. Once this is finalized, servers with the appropriate capacity can be chosen as the building block for the cluster. Figure 10 shows the GPU hardware presented through a cluster of specially designed virtual machines, called the GPU cluster.

Figure 10: Logical GPU Cluster Architecture

The Dell components recommended for the GPU cluster are shown in Table 2. Depending on the capacity assessment and the demand for GPU compute, multiple Dell servers with the appropriate number of GPUs are brought together in a VMware cluster.
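The sizing step described above boils down to simple arithmetic once the requirements survey is done. The following Python is a minimal sketch, not a Dell or VMware sizing tool: the `GpuWorkload` type, the `servers_needed` function, the 20% growth headroom, and the N+1 spare-host policy are all hypothetical assumptions chosen for illustration. Fractional GPU demands stand in for workloads that would use partial GPU access.

```python
import math
from dataclasses import dataclass


@dataclass
class GpuWorkload:
    """One row of a (hypothetical) GPU requirements survey."""
    name: str
    gpus: float  # peak GPUs needed; fractions model partial-GPU sharing


def servers_needed(workloads, gpus_per_server, headroom=0.2, spare_servers=1):
    """Estimate how many identical GPU servers the GPU cluster needs.

    headroom: extra fraction of capacity reserved for growth (assumed 20%).
    spare_servers: hosts held back so a host failure does not strand
    workloads (assumed N+1).
    """
    if gpus_per_server <= 0:
        raise ValueError("gpus_per_server must be positive")
    total_demand = sum(w.gpus for w in workloads)
    required_gpus = total_demand * (1 + headroom)
    # Round up: a partially used server still has to be purchased.
    return math.ceil(required_gpus / gpus_per_server) + spare_servers


if __name__ == "__main__":
    survey = [
        GpuWorkload("model-training", 8),
        GpuWorkload("batch-inference", 2.5),
        GpuWorkload("data-science-notebooks", 1.5),
    ]
    # 12 GPUs of demand, +20% headroom, 4 GPUs per server, plus one spare host.
    print(servers_needed(survey, gpus_per_server=4))  # 5
```

Changing `gpus_per_server` lets you compare candidate server configurations against the same survey before settling on a building block.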

Compute Clusters

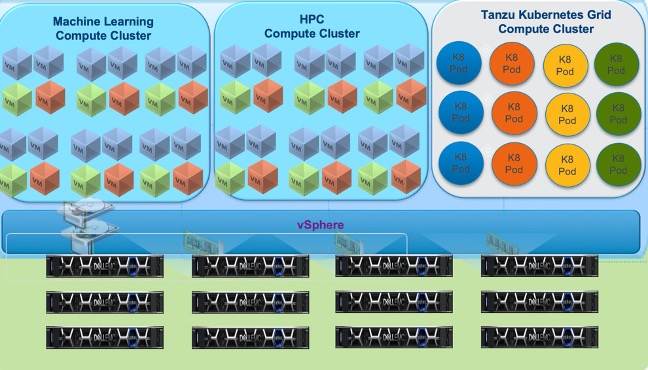

Figure 11: Compute Cluster Logical Architecture

The compute clusters are dedicated to particular application types, such as machine learning. These clusters are the clients of the GPU cluster and make use of the latter’s services.
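One way to picture the client/server relationship between the compute clusters and the GPU cluster is as a shared pool that hands out whole or fractional GPUs on request. The sketch below is a toy model under stated assumptions: `GpuPool`, `PhysicalGpu`, first-fit placement, and the host and cluster names are hypothetical, and it neither uses nor reproduces the vSphere Bitfusion API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PhysicalGpu:
    host: str
    index: int
    free: float = 1.0  # unallocated fraction of this GPU


@dataclass
class GpuPool:
    """Toy model of the shared GPU cluster that compute clusters draw from."""
    gpus: list
    leases: dict = field(default_factory=dict)  # client -> [(gpu, fraction)]

    def allocate(self, client: str, fraction: float) -> Optional[tuple]:
        """First-fit: give `client` a share of one GPU (1.0 = a full GPU)."""
        if not 0 < fraction <= 1.0:
            raise ValueError("fraction must be in (0, 1]")
        for gpu in self.gpus:
            # Small tolerance so float rounding does not reject an exact fit.
            if gpu.free + 1e-9 >= fraction:
                gpu.free -= fraction
                self.leases.setdefault(client, []).append((gpu, fraction))
                return gpu.host, gpu.index
        return None  # pool exhausted: the caller would queue or retry

    def release(self, client: str) -> None:
        """Return every share held by `client` to the pool."""
        for gpu, fraction in self.leases.pop(client, []):
            gpu.free += fraction


if __name__ == "__main__":
    pool = GpuPool([PhysicalGpu("gpu-host-01", 0), PhysicalGpu("gpu-host-01", 1)])
    print(pool.allocate("ml-cluster-a", 1.0))   # a full GPU
    print(pool.allocate("ml-cluster-b", 0.5))   # half of the second GPU
    print(pool.allocate("ml-cluster-c", 0.5))   # the other half
    print(pool.allocate("ml-cluster-d", 0.25))  # no capacity left
    pool.release("ml-cluster-a")
    print(pool.allocate("ml-cluster-d", 0.25))  # fits on the freed GPU
```

First-fit is used only because it is easy to follow; a real pooling layer may place requests differently.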

VMware Cloud Foundation Workload Domain

A workload domain in VMware Cloud Foundation is a collection of compute, storage, and networking resources designed for a particular application type. It has its own vCenter Server instance and therefore operates independently of any other workload domain on the same hardware. A workload domain is not the same as a vSphere cluster: one workload domain can contain many vSphere clusters. A workload domain exists within a software-defined data center (SDDC) for one region.

Each workload domain contains the following components:

- One vCenter Server instance connected to a pair of Platform Services Controller instances in the same or another workload domain.

- At least one vSphere cluster with vSphere HA and vSphere DRS enabled.

- One vSphere Distributed Switch for management traffic and NSX logical switching.

- NSX components that connect the workloads in the cluster for logical switching, logical dynamic routing, and load balancing.

- One or more shared storage allocations.
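The component list above can be read as a checklist for a proposed domain. This sketch encodes it as Python dataclasses; `WorkloadDomain`, `VSphereCluster`, and `check_domain` are hypothetical names for illustration, not an SDDC Manager API, and the Platform Services Controller pairing is omitted for brevity.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class VSphereCluster:
    name: str
    ha_enabled: bool
    drs_enabled: bool


@dataclass
class WorkloadDomain:
    name: str
    vcenter: Optional[str] = None          # the domain's own vCenter Server
    clusters: List[VSphereCluster] = field(default_factory=list)
    distributed_switches: int = 0          # vSphere Distributed Switches
    nsx_enabled: bool = False              # NSX logical switching, routing, LB
    shared_storage: List[str] = field(default_factory=list)


def check_domain(domain: WorkloadDomain) -> List[str]:
    """Return the listed requirements that `domain` does not meet."""
    problems = []
    if not domain.vcenter:
        problems.append("missing its vCenter Server instance")
    if not any(c.ha_enabled and c.drs_enabled for c in domain.clusters):
        problems.append("needs at least one vSphere cluster with HA and DRS enabled")
    if domain.distributed_switches != 1:
        problems.append("needs one vSphere Distributed Switch")
    if not domain.nsx_enabled:
        problems.append("needs NSX components for logical networking")
    if not domain.shared_storage:
        problems.append("needs at least one shared storage allocation")
    return problems


if __name__ == "__main__":
    gpu_domain = WorkloadDomain(
        name="wld-gpu",
        vcenter="vcenter-wld-gpu.example.local",
        clusters=[VSphereCluster("gpu-cluster-01", ha_enabled=True, drs_enabled=True)],
        distributed_switches=1,
        nsx_enabled=True,
        shared_storage=["vsan-gpu"],
    )
    print(check_domain(gpu_domain))                    # no missing components
    print(check_domain(WorkloadDomain(name="empty")))  # every requirement flagged
```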

VCF Dedicated Workload Domain for Machine Learning and other GPU Applications

The model behind Cloud Foundation workload domains aligns to a concept of “Application Ready Infrastructure”, where workload domains can be aligned to specific platform stacks to support different application workloads. This enables quick provisioning of the infrastructure and platform management components needed to run a suite of different applications, ranging from enterprise applications running in VMs, to virtual desktops, to containerized cloud-native apps. Cloud Foundation becomes the common cloud platform to run both traditional and cloud-native applications, all leveraging a common suite of management tools and driven by automation.

Figure 12: Consolidated GPU Applications Workload Domain

In part 3, we will look at a sample design of a GPU cluster based on fictitious customer requirements.

Call to Action:

- Audit how GPUs are used in your organization’s infrastructure!

- Calculate the costs and utilization of the existing GPUs in the environment

- What are the use cases for GPUs across different groups?

- Propose an internal virtualized GPUaaS infrastructure by combining all resources for better utilization and cost optimization.
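For the cost and utilization audit suggested above, a back-of-the-envelope calculation is often enough to start the conversation. This sketch assumes you already have, for each GPU, an amortized annual cost and an average utilization taken from your own monitoring; `GpuUsage`, `summarize`, and `utilization_by_group` are hypothetical names, and the figures in the example are made up for illustration.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass
class GpuUsage:
    gpu_id: str
    group: str               # team or business unit that owns the GPU
    annual_cost: float       # amortized hardware, power, and support (assumed input)
    avg_utilization: float   # 0.0-1.0, averaged from your own monitoring data


def summarize(gpus):
    """Fleet utilization and the effective cost of each GPU-hour actually used."""
    total_cost = sum(g.annual_cost for g in gpus)
    used_hours = sum(g.avg_utilization * HOURS_PER_YEAR for g in gpus)
    available_hours = len(gpus) * HOURS_PER_YEAR
    utilization = used_hours / available_hours if available_hours else 0.0
    cost_per_used_hour = total_cost / used_hours if used_hours else float("inf")
    return utilization, cost_per_used_hour


def utilization_by_group(gpus):
    """Average utilization per owning group, to compare use cases across teams."""
    totals = {}
    for g in gpus:
        used, count = totals.get(g.group, (0.0, 0))
        totals[g.group] = (used + g.avg_utilization, count + 1)
    return {group: used / count for group, (used, count) in totals.items()}


if __name__ == "__main__":
    fleet = [
        GpuUsage("gpu-a", "research", annual_cost=10000.0, avg_utilization=0.25),
        GpuUsage("gpu-b", "analytics", annual_cost=10000.0, avg_utilization=0.15),
    ]
    utilization, cost = summarize(fleet)
    print(f"fleet utilization: {utilization:.0%}, cost per used GPU-hour: {cost:.2f}")
    print(utilization_by_group(fleet))
```

Low fleet utilization combined with a high cost per used GPU-hour is the kind of evidence that supports pooling the GPUs into a shared service.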