This series of blog articles presents different use cases for deploying machine learning algorithms and applications on VMware Cloud on AWS and other VMware Cloud infrastructure. At the time of writing, June 2020, hardware accelerators for neural networks are not yet available on VMware Cloud on AWS. However, there are many good reasons to deploy classic machine learning algorithms that perform well on CPU-based VMs onto VMware Cloud on AWS. We describe these use cases in this and the following articles.

Part 2 of this series on ML on VMware Cloud on AWS is here

Part 3 of the series is here

Use Case 1: Business Data in Tabular Form

Businesses have most of their intrinsically valuable data today stored in relational databases of various forms, in spreadsheets and even in regular flat files – essentially all of this data is in a structured, tabular form. Data warehouses have been the traditional repository for this type of data, often going back several years in the business.

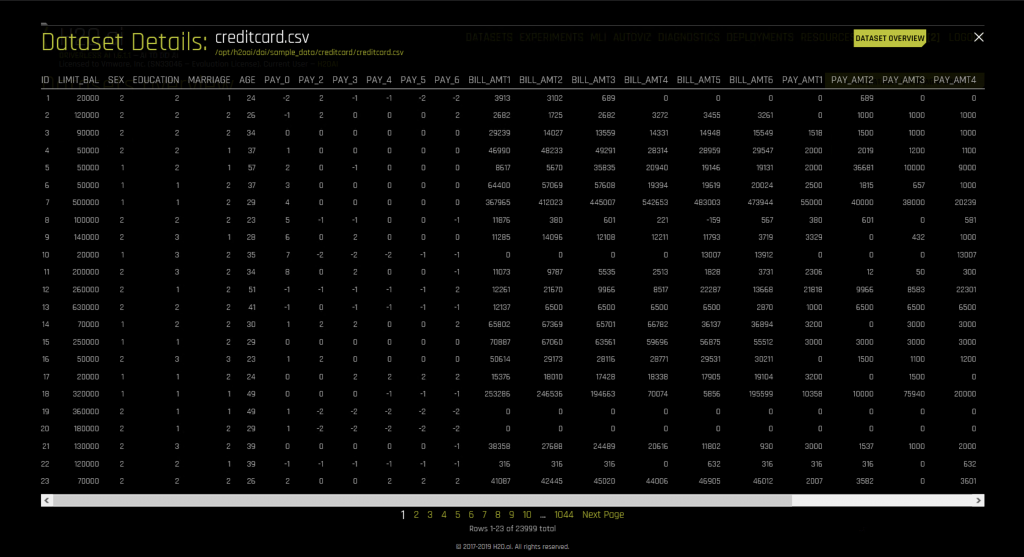

More and more business people are looking at this vast trove of tabular business data and using it to predict future customer behavior or to forecast future sales, not solely to query and report on what has already happened. Here is an image from Driverless AI, one of the popular ML tools from H2O.ai, showing a table of data after the tool has read it in and analyzed it. We ran Driverless AI in a VM on VMware Cloud on AWS, as well as on on-premises vSphere. In fact, we used vMotion to move it live from host to host on VMware Cloud on AWS while the modeling process was executing, without the data scientist user noticing. More on that here. As you can see from this simple visualization, the data is indeed in table form. Tools like this can also visualize outliers and dependencies or patterns among the cell values in sophisticated ways, making the job of the data engineer much easier.

Table-based data now serves a dual purpose. Its main use up to now has been reporting on the current business. That focus remains, but businesses now realize that the same data has a new potential use: prediction. Prediction based on models trained with historical transaction and business-event data is the domain of machine learning, and it is becoming a standard part of modernizing applications.

Some business examples of the use of ML within applications using tabular data are:

- providing a recommendation of related goods and services to a customer based on past/recent purchases. Recommendation engines make up one of the fastest growing business applications that use machine learning models based on tabular sales data;

- analysis of a customer’s “propensity to buy” a product or service, based on what similarly qualified customers have done in the past;

- in finance, predicting a loan applicant’s ability to pay off the principal before approving the person’s loan request;

- assessing home prices based on the features of the home itself and the neighborhood it is in.

There are many more such use-cases. There is clearly a significant benefit to the various businesses in being able to do such predictions.
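To make the first example above concrete, here is a minimal sketch of a recommendation step driven by tabular sales data. It assumes a hypothetical table with one row per (customer, item) purchase, and it uses simple co-purchase counting, which is only one of many possible approaches and is not how any particular vendor's product works. The data, the function names and the `top_n` parameter are all illustrative.

```python
from collections import Counter, defaultdict
from itertools import combinations

# Hypothetical tabular sales data: one row per (customer_id, item) purchase.
purchases = [
    ("c1", "laptop"), ("c1", "mouse"), ("c1", "laptop bag"),
    ("c2", "laptop"), ("c2", "mouse"),
    ("c3", "laptop"), ("c3", "laptop bag"),
    ("c4", "mouse"), ("c4", "keyboard"),
    ("c5", "laptop"), ("c5", "mouse"), ("c5", "keyboard"),
]


def build_co_purchase_counts(rows):
    """Count how often each pair of items was bought by the same customer."""
    # Group the rows into one set of items per customer.
    baskets = defaultdict(set)
    for customer_id, item in rows:
        baskets[customer_id].add(item)

    # For every pair of items in a basket, increment the count in both directions.
    counts = defaultdict(Counter)
    for items in baskets.values():
        for a, b in combinations(sorted(items), 2):
            counts[a][b] += 1
            counts[b][a] += 1
    return counts


def recommend(counts, item, top_n=2):
    """Return up to top_n items most often bought together with `item`."""
    return [other for other, _ in counts[item].most_common(top_n)]


co_counts = build_co_purchase_counts(purchases)
print(recommend(co_counts, "laptop"))  # ['mouse', 'laptop bag']
```

A production recommendation engine would typically train a model on far more history, but the input has the same shape: rows of past transactions.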

How Good is the Data?

Tabular data is often already highly normalized for efficient and flexible SQL querying so as to generate reports of past or current business activity. It has received a fair amount of data engineering and DBA attention up to now, so it likely has many of the attributes of good model training data. It likely has had strict governance rules applied to it, and the heritage or sources of the data are often understood. These conditions make this kind of data ideal for machine learning applications.

Where is the Data?

One of the first and most important workloads that we see being moved today to the VMware Cloud on AWS environment is departmental and enterprise databases, whether those be Oracle RDBMS or SQL Server or others. AWS also provides its own RDS suite on VMware Cloud on AWS. These databases, along with other captured data in less structured object stores like S3 and file systems, can become sources of the required training data for the modelling tools. Proximity of the data source to the model training engine is key, as the training process is often iterative, with many separate experiments being conducted and potentially several scans through the full dataset in each one.

Is this Deep Learning?

Data sources made up of images, text and voice content are often found in enterprises also, but when these kinds of data formats are used for the deep learning form of machine learning, they are put to different purposes than tabular data is. Image/text/voice data is not structured as a table layout in the sense that we are discussing here. You could argue that there are rows and columns of pixels in an image – is that not a table? In a sense, it is, but the pixel values do not have the same dependencies or relationships to each other as traditional database columns do. Nor are they as meaningful in themselves to the human reader as the values in a database row, with its column names, would be.

Image/text/voice data is used less often for forecasting business conditions than it is for recognition of patterns, visually or in text form. There are business use cases for this type of ML/DL too, but they are out of scope for this article. Deep learning using neural networks on this data is more demanding computationally than ML using the more classic statistical methods, which execute fine on traditional processors, and so it requires specialized hardware acceleration to drive performance. You can find many more articles on deep learning on VMware vSphere here. Deep learning, using multi-layered neural networks, is one important form of machine learning, but it is certainly not the only form. A lot of machine learning for business data purposes, both training and inference, can be done on CPUs using table-based data today.

ML Tools and Algorithms for Analyzing Tabular Data

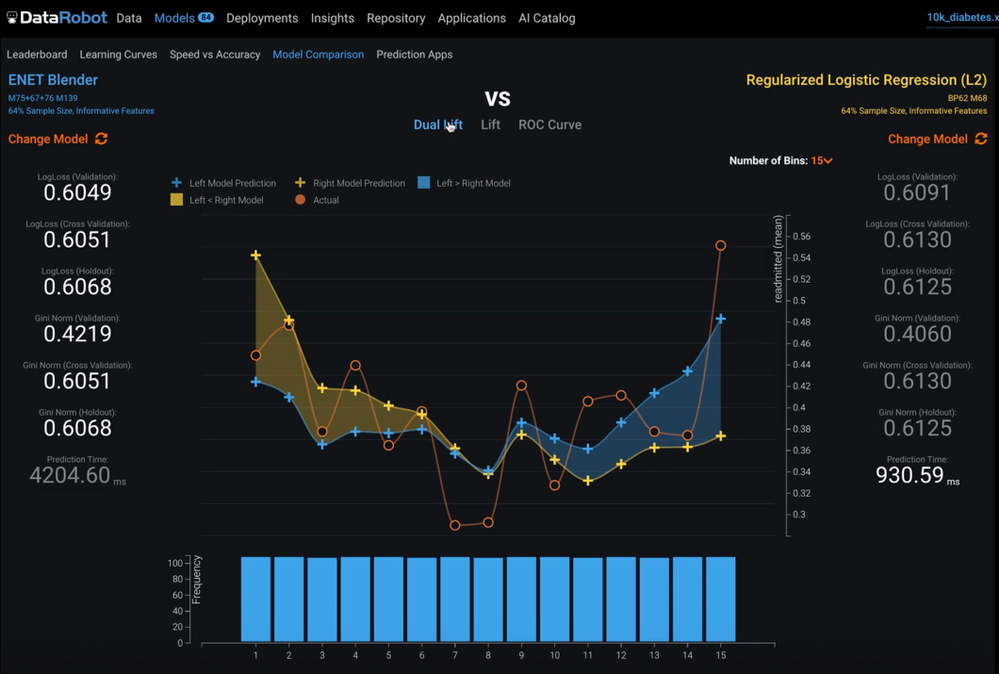

For structured data that looks like a database table, we make more effective use of the classical statistical methods for classification, regression and clustering, such as decision trees and random forests. Popular techniques in these categories include logistic regression and XGBoost, but there are many more available and their properties are well understood. Today you don’t necessarily have to pick a single model up front. You can take a selection of different algorithms, try them out against your available datasets and have the tool show you the prediction accuracy of each one in a leaderboard. This is what the automated ML, or AutoML, tools do, and several of these run on vSphere today. Examples of AutoML tools come from DataRobot, H2O, KNIME and other vendors. We have put these tools through their paces in the lab environment and they behave very well when virtualized. Here is a view from DataRobot’s platform, running on vSphere, comparing the accuracy achieved by two different types of models: a blended set versus regularized logistic regression in this example.
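The leaderboard idea can be sketched in a few lines with the open-source scikit-learn library. This is not how DataRobot, H2O or KNIME implement AutoML; it is a hand-rolled illustration under stated assumptions: the dataset is synthetic (generated with `make_classification` as a stand-in for a business table), the three candidate models are an arbitrary choice, and scikit-learn's `GradientBoostingClassifier` is used in place of XGBoost to avoid an extra dependency. All of it runs on CPU.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

# Synthetic stand-in for a business table: 500 rows, 10 numeric columns,
# and a binary target (for example, "bought" vs. "did not buy").
X, y = make_classification(
    n_samples=500, n_features=10, n_informative=5, random_state=0
)

# Candidate models to compare (an illustrative selection, not a recommendation).
candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "random_forest": RandomForestClassifier(n_estimators=50, random_state=0),
    "gradient_boosting": GradientBoostingClassifier(random_state=0),
}


def build_leaderboard(models, X, y, folds=5):
    """Score each model with k-fold cross-validation and rank by mean accuracy."""
    rows = []
    for name, model in models.items():
        scores = cross_val_score(model, X, y, cv=folds, scoring="accuracy")
        rows.append((name, scores.mean()))
    # Best mean accuracy first.
    return sorted(rows, key=lambda row: row[1], reverse=True)


leaderboard = build_leaderboard(candidates, X, y)
for rank, (name, accuracy) in enumerate(leaderboard, start=1):
    print(f"{rank}. {name:<20} mean accuracy = {accuracy:.3f}")
```

Commercial AutoML tools go much further, with feature engineering, hyperparameter search and model blending, but the ranking step at the end has this general shape.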

The various vendors of the ML tools spend considerable time and effort building connectors to all kinds of databases and other data sources, so that the data can be retrieved from them for ML training purposes. Examples of such toolkits and connectors are seen in the product portfolios of companies such as DataRobot, H2O, Domino Data Lab, KNIME and many others. Several of these tools are running on VMware Cloud platforms today. If you work with any of these vendors, you will almost certainly be using your table-based data for ML. Here is an image from the KNIME Analytics Platform running on Windows in a VM, showing the setup of its database connector functionality. Other tools offer very similar access to the data.

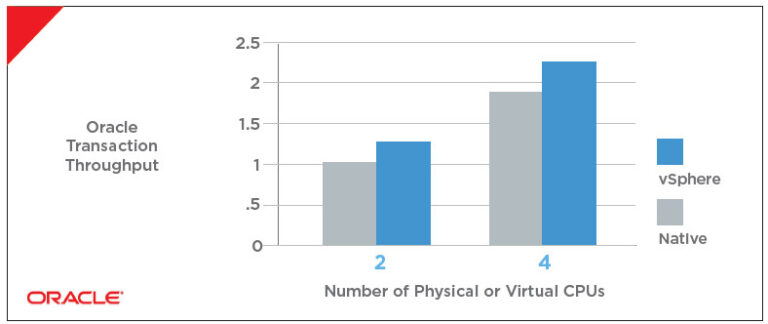

We have tested these ML algorithms in machine learning applications on VMware vSphere and on VMware Cloud on AWS, and they perform very well on both platforms, with results that are competitive with native, non-virtualized systems. VMware Cloud on AWS presents powerful CPU-based machines with high-speed networking and significant RAM and storage capacity. Big servers like these perform well with the scale-out application platforms that are a key element of machine learning, and they have plenty of room for multiple VMs operating together on a single dataset. We often hear that Spark, one such platform, is deployed for its SQL functionality to join tables, create new tables and cleanse the data as the first step in machine learning: data engineering.
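The data engineering step described above (join tables, cleanse the data, create a new table) can be illustrated with a small SQL example. To keep the sketch runnable without a cluster, it uses Python's built-in `sqlite3` module as a lightweight stand-in for Spark SQL; the SQL relies only on common constructs (`JOIN`, `WHERE ... IS NOT NULL`, `GROUP BY`, `CREATE TABLE ... AS SELECT`). The `customers`, `orders` and `training_rows` tables and their columns are hypothetical.

```python
import sqlite3

# In-memory database standing in for the departmental database.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);

    INSERT INTO customers VALUES (1, 'west'), (2, 'east'), (3, NULL);
    INSERT INTO orders VALUES
        (10, 1, 120.0), (11, 1, 80.0), (12, 2, 50.0),
        (13, 2, NULL), (14, 3, 200.0);

    -- Join the two tables, drop rows with missing values, and aggregate
    -- to one row per customer: a flat table that a modeling tool can read.
    CREATE TABLE training_rows AS
    SELECT c.customer_id,
           c.region,
           COUNT(o.order_id) AS order_count,
           SUM(o.amount)     AS total_spend
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.customer_id
    WHERE c.region IS NOT NULL AND o.amount IS NOT NULL
    GROUP BY c.customer_id, c.region;
""")

training_rows = conn.execute(
    "SELECT customer_id, region, order_count, total_spend "
    "FROM training_rows ORDER BY customer_id"
).fetchall()
print(training_rows)  # [(1, 'west', 2, 200.0), (2, 'east', 1, 50.0)]
```

Customer 3 is dropped because its region is missing, and order 13 is dropped because its amount is missing. Whether to drop or impute such rows is a judgment call that depends on the dataset.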

Summary

The world of machine learning, as we have seen, breaks down into two main branches. On the one hand, there are the classical statistics algorithms such as generalized linear models (GLM), support vector machines (SVM) and boosted decision trees. These give great predictive results on data that is in table form. On the other hand, there are the deep neural network-based models that require specialized hardware for acceleration and are needed to process millions of images in the training phase. The first category, made up of the classical statistical methods, has a distinct advantage for business users and data scientists and it executes very well on CPUs, making it a simpler thing to deploy first. That first category, using classical approaches, makes a great complement for your relational database workloads and other structured data that you are moving to the VMware Cloud on AWS today. In part 2 of this series, we will explore model explainability as a second, powerful use-case scenario for machine learning on VMware Cloud on AWS.

Call to Action

Try out the VMware Cloud on AWS platform for your applications today by going to the Hands-on Labs for the service and testing it for yourself, or contact us at VMware for more details.