In this two-part series, we will look at leveraging Bitfusion for machine learning workloads in VMware virtual environments equipped with NVIDIA GPUs. In the first part, we will discuss the options for sharing NVIDIA GPUs. In the second part, we will present our testing details and the performance results of Bitfusion on vSphere with different networking options.

Introduction

Machine learning, and deep learning in particular, increasingly relies on GPUs to speed up large computations. As a result, GPUs are becoming an important part of enterprise compute infrastructure. Traditionally, GPUs have been dedicated to specific users, which limits flexibility and leaves GPUs idle whenever their owners are not using them. Virtualization, with its track record of enabling hardware sharing, can be leveraged to share GPUs and improve both flexibility and utilization.

Sharing NVIDIA GPUs in VMware Virtualized Environments

NVIDIA GPUs can currently be shared between VMs in VMware virtual environments in two different ways, as described below.

1. NVIDIA vGPU

NVIDIA virtual GPU (vGPU) allows a GPU to be shared among multiple VMs for graphics and compute workloads in modern business datacenters. With this mechanism, vGPUs are created on a physical GPU and can then be assigned to VMs running on the ESXi host to which that GPU is attached. GPU memory is spatially partitioned to create the vGPUs, and each vGPU is given time-sliced access to all of the GPU's compute cores.
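To make the two vGPU properties concrete, here is a minimal Python sketch that models them under simplifying assumptions: memory is carved into equal-size slices (space partitioning), and compute is shared through a plain round-robin rotation (time slicing). The names `VGpu`, `create_vgpus`, and `time_slice_schedule` are hypothetical, and round-robin is only a stand-in; this is not NVIDIA's vGPU scheduler or API.

```python
from dataclasses import dataclass


@dataclass
class VGpu:
    vm: str          # VM the vGPU is assigned to
    memory_gb: int   # dedicated slice of GPU memory (space partitioning)


def create_vgpus(total_memory_gb: int, profile_gb: int, vms: list[str]) -> list[VGpu]:
    """Carve equal-size vGPUs out of one physical GPU (hypothetical model)."""
    if profile_gb * len(vms) > total_memory_gb:
        raise ValueError("not enough GPU memory for the requested vGPUs")
    return [VGpu(vm=vm, memory_gb=profile_gb) for vm in vms]


def time_slice_schedule(vgpus: list[VGpu], slices: int) -> list[str]:
    """Round-robin stand-in: in each time slice, one vGPU uses all compute cores."""
    return [vgpus[i % len(vgpus)].vm for i in range(slices)]


vgpus = create_vgpus(16, 4, ["vm-a", "vm-b", "vm-c", "vm-d"])
print([v.memory_gb for v in vgpus])    # [4, 4, 4, 4]
print(time_slice_schedule(vgpus, 6))   # ['vm-a', 'vm-b', 'vm-c', 'vm-d', 'vm-a', 'vm-b']
```

With a 16 GB GPU and a 4 GB profile, each of the four VMs owns a private 4 GB of memory, while the schedule shows them taking turns on the full set of compute cores.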

2. Bitfusion

Bitfusion FlexDirect on VMware vSphere makes it possible to share partial, full, or multiple GPUs among multiple VMs. Bitfusion FlexDirect makes GPUs a first-class resource that can be abstracted, partitioned, and shared much like traditional compute resources. GPU accelerators can be partitioned into partial GPUs of any size and accessed remotely by VMs over the network. In other words, VMs running anywhere in the datacenter can be given access to GPU resources that are physically installed in separate ESXi hosts. With Bitfusion, GPUs become part of a common infrastructure pool, available to any VM in a vSphere-based environment. Note, however, that because the GPUs are accessed remotely, the performance of Bitfusion is sensitive to the bandwidth and latency of the network.

In this study, we will explore how to leverage Bitfusion FlexDirect on vSphere to run TensorFlow deep learning applications with remotely accessed GPU resources.

Bitfusion Architecture and Primary Use Case

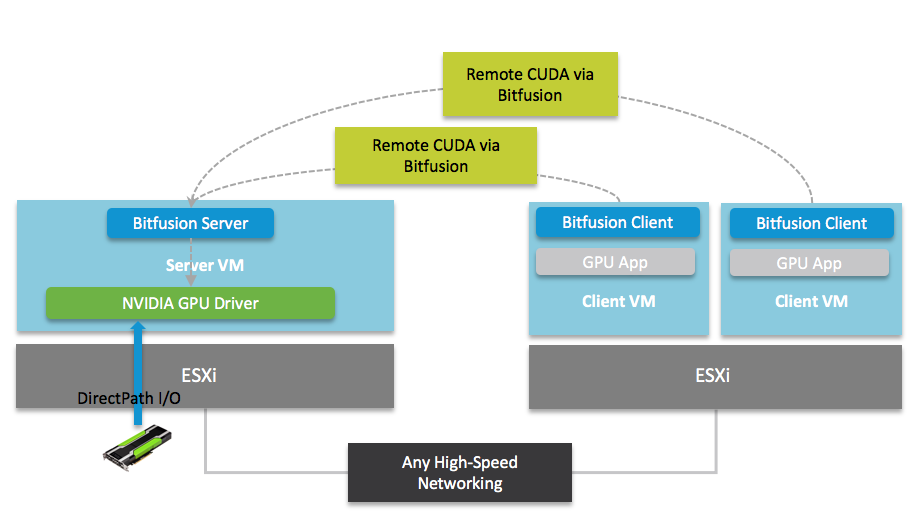

As shown in Figure 1, Bitfusion uses a client-server architecture. The Bitfusion server component runs in a VM on the physical server that contains the NVIDIA GPU cards; the GPU cards are passed through to this server VM using DirectPath I/O. The client VMs can run anywhere in the datacenter, on hosts without local GPU resources, as long as they have a high-speed network connection to the Bitfusion server, such as 10/25/40 Gb Ethernet, RDMA-based interconnects (InfiniBand or RDMA over Converged Ethernet), or PVRDMA (a vSphere feature available out of the box, based on RDMA over Converged Ethernet).
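Why the network matters can be seen with a back-of-the-envelope model: the time to move data to a remote GPU is roughly the serialization time (payload divided by link bandwidth) plus a per-message latency cost. The hypothetical helper `transfer_time_ms` below assumes ideal line-rate throughput and ignores protocol and CPU overhead, so real transfers will be slower; the 100 MB payload is illustrative, not a measured value.

```python
def transfer_time_ms(payload_mb: float, link_gbps: float,
                     latency_us: float = 0.0, messages: int = 1) -> float:
    """Estimate time (ms) to send payload_mb megabytes to a remote GPU server.

    Simplified model (an assumption, not Bitfusion's behavior): the payload is
    sent at full line rate, and each of `messages` messages adds latency_us.
    """
    bits = payload_mb * 8e6                        # decimal MB -> bits
    serialization_ms = bits / (link_gbps * 1e9) * 1e3
    latency_ms = messages * latency_us / 1e3       # microseconds -> milliseconds
    return serialization_ms + latency_ms


# Compare the Ethernet speeds listed above for a hypothetical 100 MB batch.
for gbps in (10, 25, 40):
    print(f"{gbps} Gb/s: {transfer_time_ms(100, gbps):.0f} ms")
# 10 Gb/s: 80 ms
# 25 Gb/s: 32 ms
# 40 Gb/s: 20 ms
```

The latency term suggests why a lower-latency transport can help even at the same bandwidth: an application that issues many small GPU calls pays the per-message cost many times.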

The Bitfusion FlexDirect client component runs as a user-space application within each user's VM, with no special software required in the ESXi hypervisor and no modification to the GPU applications. The Bitfusion FlexDirect server component runs as a system daemon in the GPU server VM and exposes the individual physical GPUs as a GPU resource pool to be consumed by client VMs. When an application completes, its GPU resources are returned to the resource pool.
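The pool-and-return behavior of the server daemon can be illustrated with a small Python model. `GpuPool` and `allocate` are hypothetical names, not the Bitfusion API; the sketch only shows the idea that GPUs are taken from a shared pool for the lifetime of an application and handed back when it finishes, including when it fails.

```python
import threading
from contextlib import contextmanager


class GpuPool:
    """Hypothetical model of a server-side GPU pool (not the Bitfusion API)."""

    def __init__(self, gpu_ids):
        self._free = list(gpu_ids)
        self._lock = threading.Lock()   # several clients may request concurrently

    @contextmanager
    def allocate(self, count: int):
        # Take `count` GPUs from the pool, or fail if not enough are free.
        with self._lock:
            if count > len(self._free):
                raise RuntimeError(f"requested {count} GPUs, {len(self._free)} free")
            granted, self._free = self._free[:count], self._free[count:]
        try:
            yield granted               # the application runs while holding the GPUs
        finally:
            with self._lock:            # return the GPUs, even if the job raised
                self._free.extend(granted)

    def free_count(self) -> int:
        with self._lock:
            return len(self._free)


pool = GpuPool(["gpu0", "gpu1", "gpu2", "gpu3"])
with pool.allocate(2) as gpus:
    print(gpus, pool.free_count())   # ['gpu0', 'gpu1'] 2
print(pool.free_count())             # 4
```

Using a context manager ties the release to the end of the `with` block, so the GPUs go back to the pool even if the job raises an exception.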

The primary use case for Bitfusion is remote GPU access: it allows one, multiple, or partial GPUs to be attached to client VMs dynamically. Remote partial GPUs can be useful for ML/DL development and inference, since such workloads commonly don't need a full GPU. With remote partial GPUs, GPU memory is spatially partitioned. For example, using FlexDirect, a 16 GB GPU card could be split into two 8 GB partial GPUs or four 4 GB partial GPUs. This allows the same GPU to be shared across multiple users in a multi-tenant environment. When client applications are scheduled on the GPU by the Bitfusion server, a separate server process is spawned for each client process in order to keep device memory and execution contexts isolated.
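The 16 GB example comes down to simple arithmetic: partial GPUs carved from one card must not exceed its memory in total. The hypothetical helper `plan_partial_gpus` below expresses each request as a fraction of the card and rejects plans that overcommit memory. It illustrates space partitioning only and is not how FlexDirect allocates memory internally.

```python
def plan_partial_gpus(card_gb: int, sizes_gb: list[int]) -> dict:
    """Validate a set of partial-GPU memory sizes against one physical card."""
    total = sum(sizes_gb)
    if total > card_gb:
        # Space partitioning: memory slices cannot overlap, so they must all fit.
        raise ValueError(f"{total} GB requested, but the card has {card_gb} GB")
    return {
        "fractions": [size / card_gb for size in sizes_gb],  # share of the card
        "unallocated_gb": card_gb - total,
    }


print(plan_partial_gpus(16, [8, 8]))         # {'fractions': [0.5, 0.5], 'unallocated_gb': 0}
print(plan_partial_gpus(16, [4, 4, 4, 4]))   # {'fractions': [0.25, 0.25, 0.25, 0.25], 'unallocated_gb': 0}
print(plan_partial_gpus(16, [8, 4]))         # {'fractions': [0.5, 0.25], 'unallocated_gb': 4}
```

Requesting both layouts at once (8 + 8 + 4 + 4 + 4 + 4 = 32 GB) raises a `ValueError`, since it would need twice the memory of the card.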

Figure 1: Bitfusion FlexDirect architecture

In this first part, we have presented an overview of the Bitfusion architecture and its primary use case. In part 2, we will describe our performance testing methodology and present our Bitfusion results using three different networking options for remote GPU access.