In part 1 we introduced the solution and its deployment. In part 2 we will look at the validation of the solution and the results.

Testing Methodology:

The goal of the testing is to validate the vSphere platform for running Caffe2 and PyTorch. Capabilities such as sharing GPUs between containers were also evaluated and tested.

- For the baseline, a container with a full GPU was launched in Kubernetes and three different deep learning models were run on the two frameworks, Caffe2 and PyTorch.

- The same tests were repeated with four concurrent pods sharing the same GPU. Bitfusion FlexDirect was used to provide partial (0.25) GPU access to containers.

- The results were then compared against the baseline.
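
The comparison in the last step can be sketched as follows. This is a minimal illustration, assuming each pod reports its own throughput in images per second. The function name `sharing_gain` and the numbers in the usage example are hypothetical placeholders, not measurements from these tests.

```python
def sharing_gain(baseline_ips, shared_ips_per_pod):
    """Compare aggregate shared-GPU throughput with a full-GPU baseline.

    baseline_ips: images/sec from the single container with a full GPU.
    shared_ips_per_pod: images/sec reported by each pod sharing the GPU.
    Returns (aggregate images/sec, ratio of aggregate to baseline).
    """
    if baseline_ips <= 0:
        raise ValueError("baseline throughput must be positive")
    aggregate = sum(shared_ips_per_pod)
    return aggregate, aggregate / baseline_ips


# Placeholder values for illustration only (not results from this study).
aggregate, ratio = sharing_gain(100.0, [30.0, 30.0, 30.0, 30.0])
print(f"aggregate={aggregate:.1f} images/sec, ratio={ratio:.2f}x")
```

A ratio above 1.0 means the four pods together processed more images per second than the single full-GPU container did.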

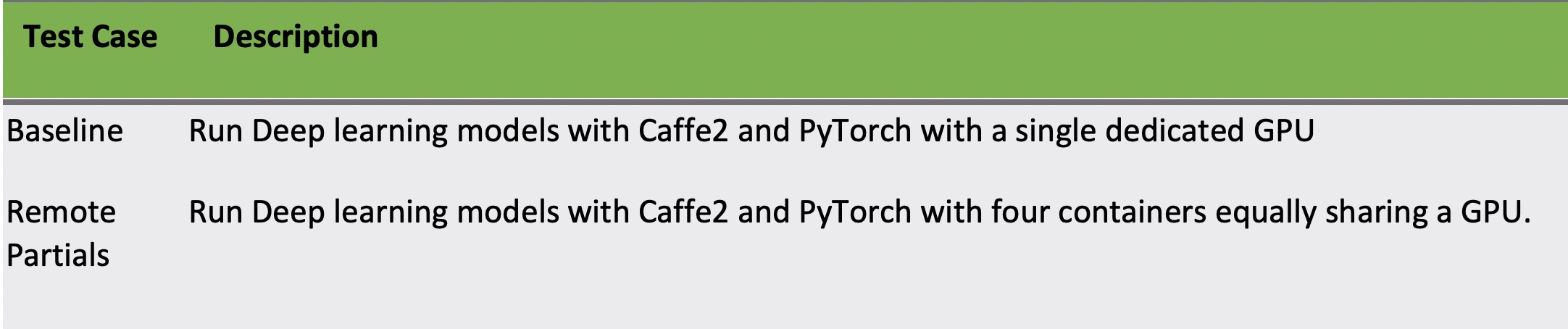

The two cases are summarized in the table below.

Table 3: Remote and Full GPU Use Cases

Results:

The baseline tests were run on a single container with full access to the GPU. The models were run in sequence multiple times to get the baseline images processed per second. Appendix A and B provide details about the containers used for Caffe2 and PyTorch.

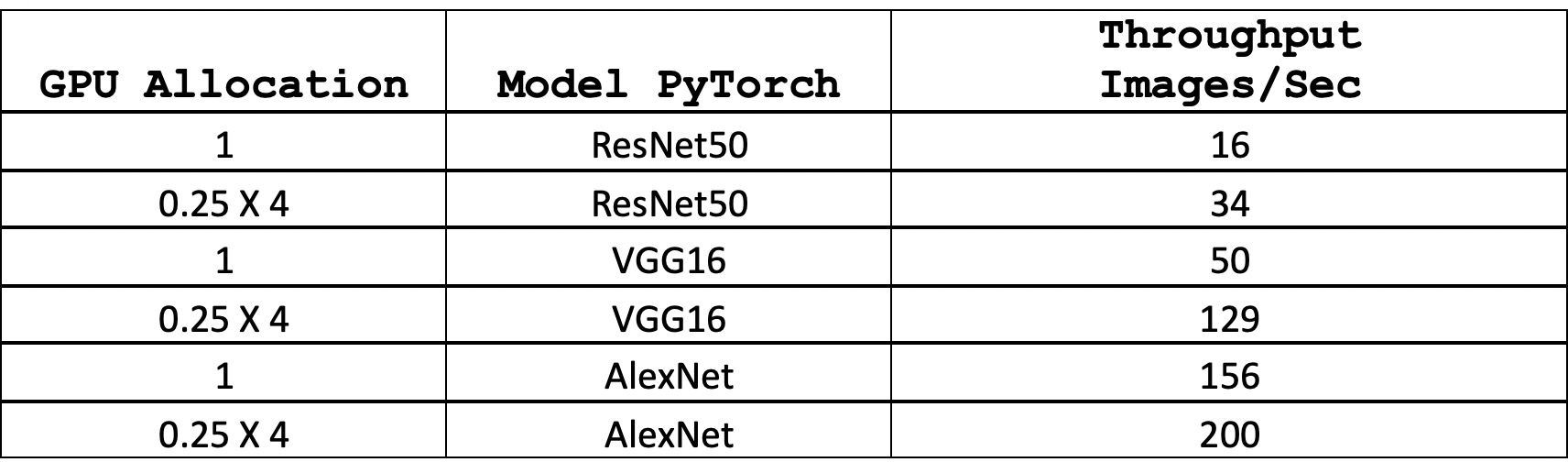

Table 4: Image Throughput with PyTorch testing

For the sharing use case, the benchmarking jobs were run randomly across all four client containers in parallel. The baseline tests were run on a single virtual machine with full access to the remote GPU. Each of the four Docker containers was allocated 25% of the remote GPU resources. The tests were repeated multiple times to confirm repeatability. Throughput, measured in images per second, and time to completion were compared between the shared use case and the baseline.
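
One possible reading of "run randomly across all four client containers" is that each container works through the same set of models in its own shuffled order. The sketch below illustrates that reading only. The model names, the container labels, and the `build_schedule` helper are hypothetical and do not come from the custom scripting used in these tests.

```python
import random

# Hypothetical placeholders: the three models are not named in this section.
MODELS = ["model_a", "model_b", "model_c"]


def build_schedule(models, num_containers=4, seed=0):
    """Return a per-container run order, shuffled independently per container.

    A fixed seed keeps the schedule reproducible across repeated test runs.
    """
    rng = random.Random(seed)
    return {
        f"container-{i}": rng.sample(models, k=len(models))
        for i in range(num_containers)
    }


schedule = build_schedule(MODELS, num_containers=4, seed=42)
for container, order in schedule.items():
    print(container, "->", ", ".join(order))
```

Seeding the generator is a design choice for repeatability: the same seed yields the same interleaving of jobs, so repeated runs can be compared like for like.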

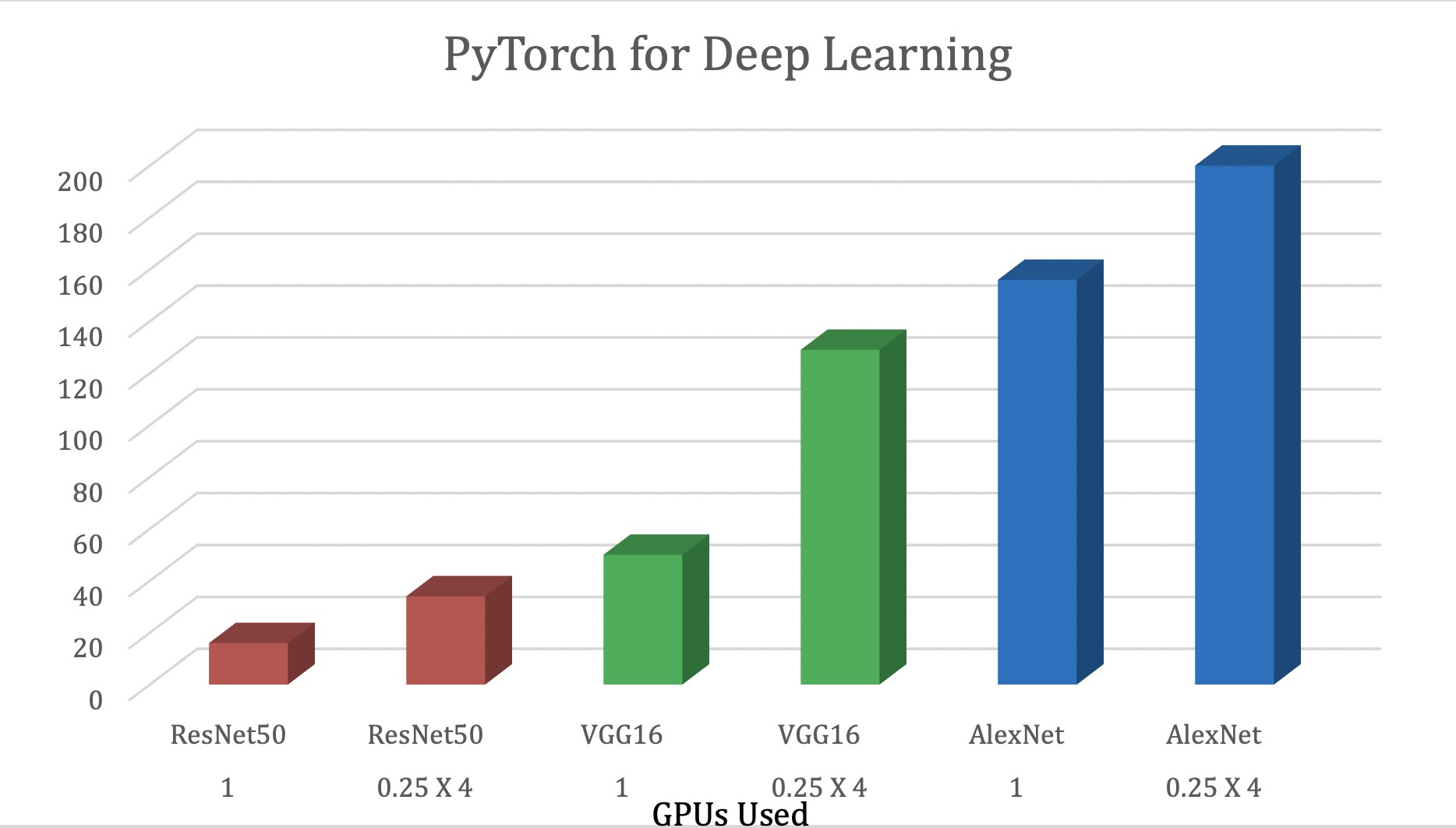

The testing was then run for PyTorch leveraging custom scripting and the deep learning benchmark. The results from the PyTorch testing are shown in tabular and graphical format.

Figure 4: Results from running different deep learning models on PyTorch with and without GPU sharing

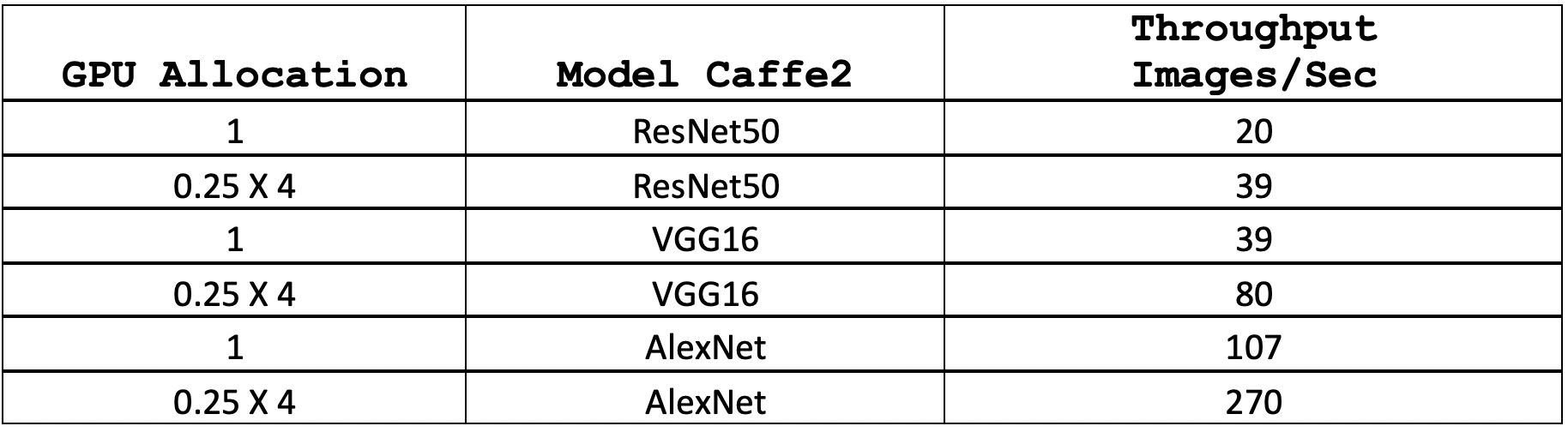

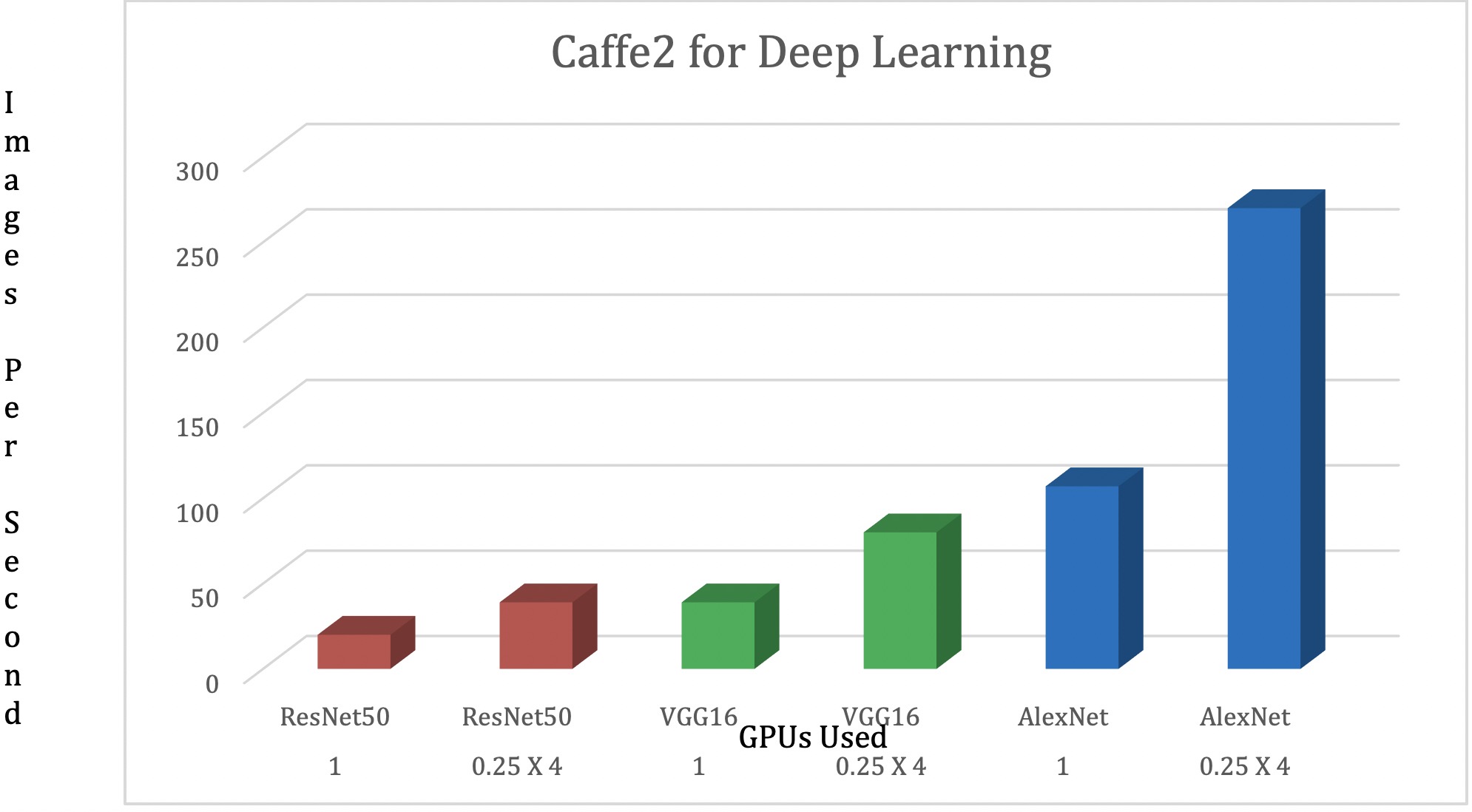

The testing was then repeated for Caffe2 leveraging custom scripting and the deep learning benchmark. The results from the Caffe2 testing are shown in tabular and graphical format.

Table 5: Image Throughput with Caffe2 testing

Figure 5: Results from running different deep learning models on Caffe2 with and without GPU sharing

The results from both the PyTorch and Caffe2 testing show a clear benefit from sharing GPUs across multiple containers. This could be because a single model does not use the entire GPU. Every model gains from sharing, but the size of the gain differs with the model's profile.
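
One way to reason about this explanation is a simple saturation model. The sketch below assumes, purely for illustration, that a model running alone keeps only a fraction of the GPU busy and that concurrent pods can fill the idle capacity until the GPU is saturated. Neither assumption was measured in these tests, and the function name `ideal_sharing_gain` is hypothetical.

```python
def ideal_sharing_gain(solo_utilization, num_pods=4):
    """Upper bound on aggregate throughput gain under a toy saturation model.

    solo_utilization: fraction of the GPU (0 < u <= 1) that one job keeps
    busy when it has the whole GPU to itself (an assumed quantity).
    num_pods: number of pods sharing the GPU equally.
    """
    if not 0.0 < solo_utilization <= 1.0:
        raise ValueError("solo_utilization must be in (0, 1]")
    # Total busy fraction cannot exceed the whole GPU.
    shared_busy_fraction = min(1.0, num_pods * solo_utilization)
    return shared_busy_fraction / solo_utilization


for u in (0.25, 0.5, 1.0):
    print(f"solo utilization {u:.2f} -> ideal gain {ideal_sharing_gain(u):.1f}x")
```

Under this model, a job that already saturates the GPU gains nothing from sharing, while a lighter job leaves room for the other pods. That is consistent with gains that vary by model profile, although real workloads also contend for memory and bandwidth, which the sketch ignores.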

Conclusion:

We have shown that the Caffe2 and PyTorch deep learning frameworks work well with VMware SDDC and PKS. The solution used a deep learning benchmarking suite with Caffe2 and PyTorch to automate and run common machine learning models, with scalability and improved utilization of NVIDIA GPUs. It showcases the benefits of combining the best-in-class infrastructure provided by the VMware SDDC with the production-grade Kubernetes of PKS to run open source ML platforms like Caffe2 and PyTorch efficiently.

Appendix A: PyTorch Container details

#

# This example Dockerfile illustrates a method to install

# additional packages on top of NVIDIA’s PyTorch container image.

#

# To use this Dockerfile, use the docker build command.

# See https://docs.docker.com/engine/reference/builder/

# for more information.

#

# This is for HP DLB cookbook – pytorch

# But experimenter fails – looks like it needs python 2.7

FROM nvcr.io/nvidia/pytorch:18.06-py3

# Install flexdirect

RUN cd /tmp && wget -O installfd getfd.bitfusion.io && chmod +x installfd && ./installfd -v fd-1.11.2 -- -s -m binaries

# Install python2.7 which is needed for experimenter.py used in hpdlb benchmark

RUN apt-get update && apt-get install -y --no-install-recommends python2.7 && rm -rf /var/lib/apt/lists/

RUN mkdir -p /workspace/dlbs

WORKDIR /workspace/dlbs

#

# IMPORTANT:

# Build docker image from the dir: /data/tools/deep-learning-benchmark

#

COPY ./dlbs /workspace/dlbs

Appendix B: Caffe2 Container details

#

# This example Dockerfile illustrates a method to install

# additional packages on top of NVIDIA’s Caffe2 container image.

#

# To use this Dockerfile, use the docker build command.

# See https://docs.docker.com/engine/reference/builder/

# for more information.

#

# This is for HP DLB cookbook – Caffe2

FROM nvcr.io/nvidia/caffe2:18.05-py2

# Install flexdirect

RUN cd /tmp && wget -O installfd getfd.bitfusion.io && chmod +x installfd && ./installfd -v fd-1.11.2 -- -s -m binaries

RUN mkdir -p /workspace/dlbs

WORKDIR /workspace/dlbs

#

# IMPORTANT:

# Build docker image from the dir: /data/tools/deep-learning-benchmark

#

COPY ./dlbs /workspace/dlbs