High Performance Computing (HPC) workloads have traditionally been run only on bare-metal, unvirtualized hardware. However, the performance of these highly parallel technical workloads when virtualized has improved dramatically over the last decade with the introduction of increasingly sophisticated hardware support for virtualization, enabling organizations to begin to embrace the numerous benefits that a virtualization platform can offer. This blog illustrates the application of virtualization to HPC and presents selected performance studies of HPC throughput workloads in a virtualized, multi-tenant computing environment. For comprehensive performance results and discussion, please read the VMware technical white paper “Virtualizing HPC Throughput Computing Environments”.

HPC Workloads

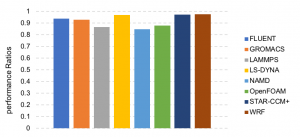

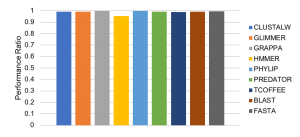

HPC workloads can be broadly divided into two categories: parallel distributed workloads and throughput workloads. Parallel distributed applications, which are often MPI applications, consist of many simultaneously running processes that communicate with each other, often with extremely high intensity. Figure 1 shows performance results for a variety of popular open-source and commercial MPI applications using VMware vSphere DirectPath I/O™.

Figure 1. Performance of a variety of MPI applications running on a 16-node cluster using 320 MPI processes. Results are shown as the ratios of unvirtualized and virtualized performance. Higher is better.

On the other hand, throughput workloads often require a large number of tasks to be run to complete a job, with each task running independently with no communication between the tasks. Rendering the frames of a digital movie is a good example of such a throughput workload: each frame can be computed independently and in parallel. Throughput workloads currently run with very little degradation on vSphere, and in some circumstances they can run slightly faster when virtualized. This can be seen in Figure 2.

Figure 2. Performance of typical HPC throughput applications comparing virtualized and unvirtualized performance as ratios. Higher is better.

This blog focuses primarily on the performance of throughput workloads running on VMware vSphere. Detailed information about parallel distributed workloads can be found in the VMware technical white paper “Performance of RDMA and HPC Applications in Virtual Machines using FDR InfiniBand on VMware vSphere”.

Virtualizing HPC Workloads

Although HPC workloads are most often run on bare-metal systems, this has started to change over the last several years as organizations have come to understand that many of the benefits that virtualization offers to the enterprise can often also add value in HPC environments. The following are among those benefits:

- Heterogeneity: support a more diverse end-user population with differing software requirements.

- Security: provide data security and compliance by isolating user workloads into separate VMs.

- Flexibility: provide fault isolation, root access, and other capabilities not available in a traditional HPC platform.

- Ease of management: create a more dynamic IT environment in which VMs and their encapsulated workloads can be live-migrated across the cluster for load balancing, maintenance, fault avoidance, and so on.

- Performance: many workloads have been successfully virtualized to achieve near bare-metal performance.

Virtualizing HPC Throughput Computing

To support multi-tenancy with per-user software stacks, multiple virtual clusters should be hosted simultaneously on a physical cluster. The key to maximizing resource utilization is then CPU overcommitment. Specifically, each virtual cluster consists of as many virtual machines as there are physical hosts, and each virtual machine contains as many vCPUs as there are CPUs on a physical host. In this way, when one virtual cluster does not fully utilize its allocated resources, the idle resources are freely available to the other virtual clusters. This blog explores this scenario in detail to evaluate whether such an approach can deliver job throughput rates similar to those achievable with a bare-metal cluster.

Test Bed Configuration

We used an 18-node cluster of Dell PowerEdge servers as part of a collaboration with the Dell EMC HPC and AI Innovation Lab in Austin, Texas. In this test bed, one server is dedicated as the login node, one as the management node, and the remaining 16 as the compute nodes. More details about the hardware and software stack can be found in the VMware technical white paper “Virtualizing HPC Throughput Computing Environments”.

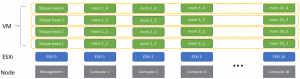

To mimic a real user-private, overcommitted execution environment, we built four TORQUE clusters, each forming a separate virtual cluster. Each virtual cluster consists of one TORQUE head node and 16 TORQUE MOMs[1], all of which run as VMs. This is illustrated in Figure 3. When all four virtual clusters are powered up, there is a 4x CPU overcommitment.

Figure 3. Four virtual clusters share a single physical cluster to achieve 4x CPU overcommitment. Each dotted-line enclosure designates a separate virtual cluster.
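The overcommitment factor follows directly from the sizing rule described earlier. The sketch below is only an illustration of that arithmetic: the function name is hypothetical, and it assumes that every virtual cluster places exactly one compute VM on each physical compute node and that each VM has as many vCPUs as the node has cores (20 in this test bed).

```python
def cpu_overcommit_ratio(virtual_clusters: int, vcpus_per_vm: int, cores_per_host: int) -> float:
    """Return the vCPUs placed on one compute host divided by its physical cores.

    Assumption: each virtual cluster runs exactly one compute VM per host.
    """
    if cores_per_host <= 0:
        raise ValueError("cores_per_host must be positive")
    return virtual_clusters * vcpus_per_vm / cores_per_host


# 20 cores per node and VMs sized to match the host, as described in this post.
for clusters in (1, 2, 4):
    ratio = cpu_overcommit_ratio(clusters, vcpus_per_vm=20, cores_per_host=20)
    print(f"{clusters} virtual cluster(s): {ratio:.0f}x")
```

With four virtual clusters this gives the 4x figure quoted above. The head-node VMs are not included in this per-compute-node calculation.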

Job Execution Time

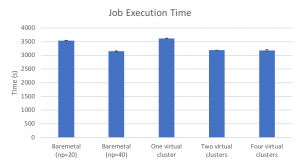

A fair way to compare the performance of bare-metal and virtual clusters is to contrast the completion time for a fixed sequence of jobs. Using the job sequence previously described, the average execution time of three runs in different environments is shown in Figure 4. Because each node in our test bed has 20 cores, in the first experiment we configured each TORQUE MOM with 20 job slots. As reflected in Figure 4, the execution time with one virtual cluster is very close to that of bare metal (first column), with only a 2.2 percent overhead. Furthermore, when multiple virtual clusters are used to achieve CPU overcommitment, the execution time is reduced, implying improved throughput. Through careful analysis, we found that the throughput improvement can mainly be attributed to increased CPU utilization when more jobs are scheduled to execute concurrently. We verified this by modifying each TORQUE MOM in the bare-metal environment to use 40 job slots, after which the throughput improved to the same level as in the overcommitted virtual environment. This can be seen in the second column of Figure 4.

Figure 4. Comparison of job execution time between bare metal and virtual. Lower is better.
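For readers who want to reproduce this kind of comparison on their own clusters, the overhead percentage is simply the relative difference in completion time. The following is a minimal sketch; the function name and the timings are hypothetical and chosen only to illustrate the arithmetic, not taken from our measurements.

```python
def relative_overhead(bare_metal_seconds: float, virtual_seconds: float) -> float:
    """Return the fractional slowdown of the virtual run relative to bare metal.

    A negative result means the virtual run finished faster.
    """
    if bare_metal_seconds <= 0:
        raise ValueError("bare_metal_seconds must be positive")
    return (virtual_seconds - bare_metal_seconds) / bare_metal_seconds


# Hypothetical completion times for the same fixed job sequence.
overhead = relative_overhead(bare_metal_seconds=1000.0, virtual_seconds=1022.0)
print(f"overhead: {overhead:.1%}")
```

Averaging several runs per environment before computing the ratio, as we did with three runs, reduces the effect of run-to-run noise.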

CPU Utilization

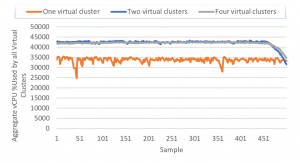

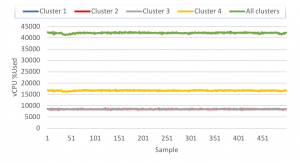

Besides execution time, another performance metric that we monitored is the total CPU utilization across the whole physical cluster. We used esxtop to sample CPU utilization of all VMs at 5-second intervals, and the results for one, two, and four virtual clusters are shown in Figure 5.

Figure 5. Total CPU utilization across 16 nodes.

The CPU utilizations in Figure 5 are consistent with the job execution times in Figure 4 for all the virtual cases. For example, the case with the lowest CPU utilization (one virtual cluster) has the longest job execution time, and the higher CPU utilization with two and four virtual clusters corresponds to shorter execution times. The higher CPU utilization with two and four virtual clusters is a direct result of the resource consolidation brought about by virtualization. At the same time, improved utilization comes with better consistency: the utilization with two and four virtual clusters is much smoother than in the single-cluster case.
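One way to turn per-VM samples into a cluster-wide curve like the one in Figure 5, and to put a number on how smooth that curve is, is sketched below. This is an illustration only: the function names, the data layout (one list of samples per VM, aligned in time), and the sample values are all assumptions, and the code does not parse real esxtop output.

```python
from statistics import mean, pstdev


def total_utilization(per_vm_samples: dict[str, list[float]]) -> list[float]:
    """Sum the per-VM CPU samples taken at each sampling instant."""
    series = list(per_vm_samples.values())
    if not series:
        return []
    if len({len(samples) for samples in series}) != 1:
        raise ValueError("all VMs must have the same number of samples")
    return [sum(values) for values in zip(*series)]


def summarize(totals: list[float]) -> tuple[float, float]:
    """Return (mean, population standard deviation) of a non-empty series."""
    return mean(totals), pstdev(totals)


# Hypothetical samples, in percent of one host, for two VMs on the same host.
samples = {
    "vc1-mom01": [40.0, 55.0, 35.0, 50.0],
    "vc2-mom01": [45.0, 35.0, 50.0, 40.0],
}
totals = total_utilization(samples)
average, spread = summarize(totals)
print(totals, average, spread)
```

A smaller standard deviation for the same or higher mean corresponds to the smoother utilization curves seen with two and four virtual clusters.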

In addition to the basic benefits of virtualization previously described, the proportional, share-based scheduler of the ESXi hypervisor offers another very useful degree of flexibility. This section continues the CPU overcommitment study by configuring virtual clusters with different shares, as can be done when creating a multitenant environment with quality-of-service guarantees.

As an experiment, we set the shares for the VMs in the four virtual clusters to achieve a 2:1:1:1 ratio between the VMs on each compute node. The total CPU utilization and the per-cluster CPU utilizations from one run are shown in Figure 6. Figure 6 demonstrates that not only is maximum CPU utilization achieved, but the CPU utilization ratio among the virtual clusters also matches the specified shares ratio.

Figure 6. CPU utilization for four virtual clusters with 2:1:1:1 shares.
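The utilization split that a shares ratio implies can be worked out by normalizing the shares. The sketch below is a simplified model, not the ESXi scheduler itself: it assumes every VM on the host is fully busy and contending for CPU, which is the only situation in which shares determine the outcome. The function and cluster names are hypothetical.

```python
def expected_cpu_fractions(shares: dict[str, int]) -> dict[str, float]:
    """Return each tenant's expected fraction of CPU under full contention."""
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("total shares must be positive")
    return {name: value / total for name, value in shares.items()}


# The 2:1:1:1 ratio used in the experiment above.
shares = {"cluster1": 2, "cluster2": 1, "cluster3": 1, "cluster4": 1}
for name, fraction in expected_cpu_fractions(shares).items():
    print(f"{name}: {fraction:.0%}")
```

Under this model the first virtual cluster receives 40 percent of the CPU and each of the others 20 percent. When a virtual cluster is idle, its unused capacity is available to the others, so observed ratios can differ from the configured shares.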

Summary

The rising trend toward virtualizing HPC environments is driven by a desire to take advantage of the increased flexibility and agility that virtualization offers. This blog explores the concepts of virtual throughput clusters and CPU overcommitment with VMware vSphere to create multitenant, agile virtual HPC computing environments that can deliver quality-of-service guarantees between HPC users with good aggregate performance. Again, this blog is an overview of our full study, which is available in the VMware technical white paper “Virtualizing HPC Throughput Computing Environments”.

[1] The TORQUE term for compute node manager.

Authors: Michael Cui and Josh Simons.