Storage – the final frontier. These are the voyages of any Business Critical Oracle database, its endless mission: to meet the business SLA, to sustain increasing workload demands and seek out new challenges, to boldly go where no database has gone before.

Storage is one of the most important aspects of any I/O-intensive workload. Oracle workloads typically fit this bill, and we all know how misconfigured storage or incorrect tuning often leads to database performance issues, irrespective of the architecture on which the database is hosted.

As part of my pre-sales Oracle Specialist role, where I talk to customers, partners and the VMware field, I always point out that we can procure the biggest and baddest piece of infrastructure on the face of the earth, and all it takes is one incorrect setting or misconfiguration for everything to go to “Hell in a Handbasket”.

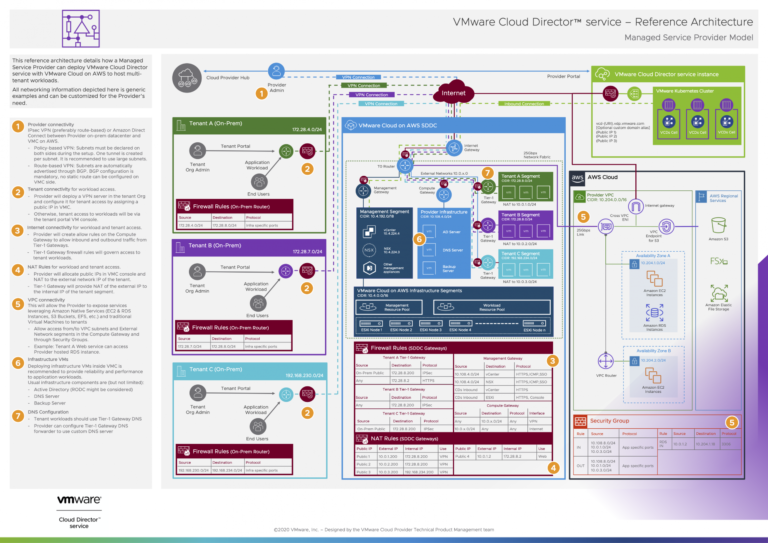

The focus of this blog is on identifying the various storage options available to house Oracle database workloads on the VMware Software Defined Data Center (SDDC).

Storage on the VMware vSphere platform can be one of the following:

- vmdk (Block or NFS Datastore)

- Physical / Virtual Raw Device Mapping (RDM)

- vSAN Datastore (Object Store File System (OSFS))

- Virtual Volumes (vVOL)

- In Guest (dNFS / iSCSI / NFS)

Key points to take away from this blog

- vmdks provisioned from VMFS / vsanDatastore / vVOL compatible datastores can be used either as Oracle ASM disks (using ASMLib / ASMFD / Linux udev for device persistence) or as file system storage for the database

- RDMs (Physical / Virtual) can also be used as VM storage for Oracle databases

- VMware recommends using VMDKs for provisioning storage for ALL Oracle environments

- In-guest storage options are also available as database storage

Example of Storage provisioning using EMC Unity array

As an example, let’s look at the basic storage building blocks when a vSphere cluster is connected to an EMC Unity array using block storage:

- Disks (magnetic spindles / SSDs), which comprise the raw storage of the array

- Disks are assigned to Dynamic / Traditional storage pools, which can have RAID group configurations (RAID 1+0, RAID 5, RAID 6)

- LUNs are created on the RAID groups

- LUNs are then mapped / masked to the ESXi hosts in the vSphere cluster

https://www.emc.com/collateral/white-papers/h16289-dell-emc-unity-dynamic-pools.pdf

Now we can either

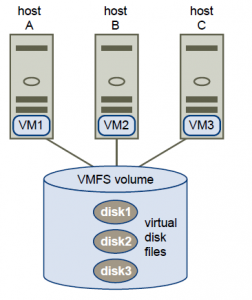

1) Create VMFS datastores on these LUN/s

Working with Datastores

https://docs.vmware.com/en/VMware-vSphere/6.5/com.vmware.vsphere.storage.doc/GUID-3CC7078E-9C30-402C-B2E1-2542BEE67E8F.html

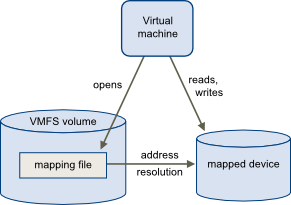

2) Present these LUNs as Physical / Virtual RDMs to the VM

https://docs.vmware.com/en/VMware-vSphere/6.5/com.vmware.vsphere.storage.doc/GUID-9E206B41-4B2D-48F0-85A3-B8715D78E846.html

Raw Device Mapping

https://docs.vmware.com/en/VMware-vSphere/6.5/com.vmware.vsphere.storage.doc/GUID-B3522FF1-76FF-419D-8DB6-F15BFD4DF12A.html

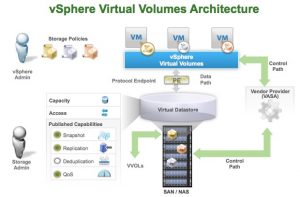

3) Use VMware Virtual Volumes (vVOL) technology, wherein the vmdks of a VM are placed in Storage Containers and are backed by vVOLs.

Working with Virtual Volumes

https://docs.vmware.com/en/VMware-vSphere/6.5/com.vmware.vsphere.storage.doc/GUID-0F225B19-7C2B-4F33-BADE-766DA1E3B565.html

More information about Oracle on VMware Virtual Volumes (vVOL) can be found below

http://www.vmware.com/files/pdf/products/vvol/vmware-oracle-on-virtual-volumes.pdf

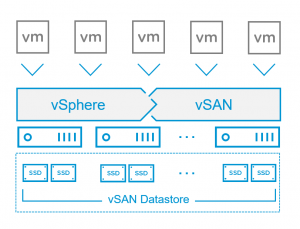

VMware vSAN

In the case of a vSAN Hyper Converged Infrastructure (HCI) platform, we have the choice of placing the VM vmdks on the vSAN datastore (vsanDatastore).

Introduction to vSAN

https://docs.vmware.com/en/VMware-vSphere/6.5/com.vmware.vsphere.virtualsan.doc/GUID-18F531E9-FF08-49F5-9879-8E46583D4C70.html

In Guest Storage

Lastly, we also have the choice of going with in-guest storage, i.e. storage mounted directly in the guest operating system:

- Oracle Direct NFS (dNFS)

- Kernel NFS

- iSCSI

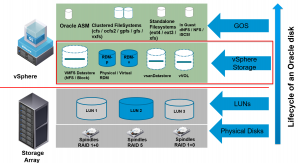

The above options can be summarized by the below illustration.

Oracle Storage Options on vSphere SDDC

At a high level, Oracle can consume storage in a VM as:

- vmdks on a VMFS / vsanDatastore / vVOL compatible datastore

- Physical / Virtual RDM

- In Guest options (dNFS / NFS / iSCSI)

1) Oracle storage on vmdks on a VMFS / vsanDatastore / vVOL compatible datastore

Once a vmdk is added to a Linux VM, it shows up in the guest operating system as a new Linux block device, which we can use as either:

- A file system

- An Oracle Automatic Storage Management (ASM) disk

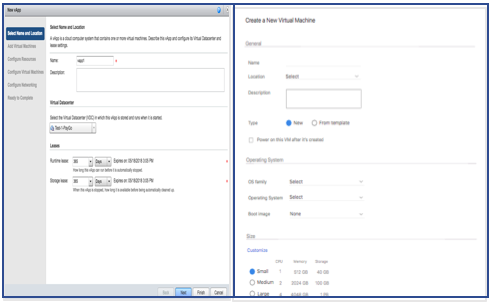

VM vmdk/s are created on the VMFS datastore as shown in the documentation below.

Virtual Disk Configuration

https://docs.vmware.com/en/VMware-vSphere/6.5/com.vmware.vsphere.vm_admin.doc/GUID-90FD3678-AC9F-40CC-BB66-F499141E2B99.html

Linux File system

Oracle Linux supports a large number of local file system types that you can configure on block devices, including btrfs, ext3, ext4, xfs, ocfs2 and vfat.

Local File Systems in Oracle Linux 7

https://docs.oracle.com/cd/E52668_01/E54669/html/ol7-about-localfs.html

Oracle Automatic Storage Management (ASM)

Oracle ASM is Oracle’s recommended storage management solution that provides an alternative to conventional volume managers, file systems, and raw devices.

Oracle ASM is a volume manager and a file system for Oracle Database files that supports single-instance Oracle Database and Oracle Real Application Clusters (Oracle RAC) configurations.

More information on Oracle ASM can be found in the link below

https://docs.oracle.com/database/122/OSTMG/asm-intro.htm#OSTMG036

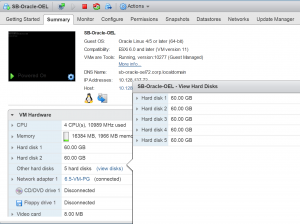

Example VM Setup

VM ‘SB-Oracle-OEL’ is running a single-instance Oracle 12.2 database on Oracle Linux Server release 7.4 and has 5 vmdks, as shown below:

    [root@sb-oracle-oel72 ~]# lsblk
    NAME                         MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    fd0                            2:0    1    4K  0 disk
    sda                            8:0    0   60G  0 disk
    └─sda1                         8:1    0   60G  0 part
    sdb                            8:16   0   60G  0 disk
    └─sdb1                         8:17   0   60G  0 part
      └─vg3_backup-LogVol_backup 249:2    0   60G  0 lvm  /backup
    sdc                            8:32   0   60G  0 disk
    └─sdc1                         8:33   0   60G  0 part
    sdd                            8:48   0   60G  0 disk
    ├─sdd1                         8:49   0  500M  0 part /boot
    └─sdd2                         8:50   0 59.5G  0 part
      ├─ol-swap                  249:0    0    6G  0 lvm  [SWAP]
      ├─ol-root                  249:1    0   36G  0 lvm  /
      └─ol-home                  249:4    0 17.6G  0 lvm  /home
    sde                            8:64   0   60G  0 disk
    └─sde1                         8:65   0   60G  0 part
      └─vg2_oracle-LogVol_u01    249:3    0   60G  0 lvm  /u01
    sr0                           11:0    1 1024M  0 rom
    [root@sb-oracle-oel72 ~]#

Layout of the Linux disks:

- /dev/sda is the ASM disk for database ‘ORA12CM’

- /dev/sdc is the ASM disk for database ‘RMANDB’

- /dev/sdb is for RMAN backups (/backup file system)

- /dev/sdd is for the root volume ( / )

- /dev/sde is for the Oracle binaries mount point (/u01)
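The layout above can also be read programmatically. The following is a minimal sketch, assuming the key="value" output of `lsblk -P -o NAME,TYPE,FSTYPE,MOUNTPOINT`; the sample text and the helper names are hypothetical and only illustrate the idea. It lists partitions that carry neither a file system signature nor a mount point, which in the layout above would be the partitions set aside for ASM before they are labeled.

```python
import shlex


def parse_lsblk_pairs(text):
    """Parse `lsblk -P` output (KEY="value" pairs) into a list of dicts."""
    rows = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        # shlex.split turns NAME="sda1" into the single token NAME=sda1
        tokens = shlex.split(line)
        rows.append(dict(token.split("=", 1) for token in tokens))
    return rows


def unclaimed_partitions(rows):
    """Names of partitions with no file system signature and no mount point."""
    return [
        row["NAME"]
        for row in rows
        if row["TYPE"] == "part" and not row["FSTYPE"] and not row["MOUNTPOINT"]
    ]


# Hypothetical sample of: lsblk -P -o NAME,TYPE,FSTYPE,MOUNTPOINT
SAMPLE = """
NAME="sda" TYPE="disk" FSTYPE="" MOUNTPOINT=""
NAME="sda1" TYPE="part" FSTYPE="" MOUNTPOINT=""
NAME="sdb1" TYPE="part" FSTYPE="LVM2_member" MOUNTPOINT=""
NAME="sdd1" TYPE="part" FSTYPE="xfs" MOUNTPOINT="/boot"
"""

print(unclaimed_partitions(parse_lsblk_pairs(SAMPLE)))
```

Note that this is only a heuristic: once a partition has been labeled for ASM it may report a signature of its own, so the check is mainly useful before provisioning.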

Persistent Naming issue in Linux

On Linux, device names such as /dev/sda and /dev/sdb are assigned in the order in which the kernel detects the devices, so they can switch around between reboots. This can result in an unbootable system, a kernel panic, or a block device that appears to have disappeared. Persistent naming solves these issues.

More information on persistent naming can be found below:

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/storage_administration_guide/persistent_naming
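To see what persistent naming looks like in practice, the sketch below lists the symlinks that udev maintains under /dev/disk/by-id and resolves each one to the kernel device name it currently points to. It is an illustration only; the function name is hypothetical, and the directory is a parameter so the function can be pointed at any directory of symlinks.

```python
import os


def persistent_names(by_id_dir="/dev/disk/by-id"):
    """Return {persistent symlink name: kernel device path it points to now}."""
    mapping = {}
    for name in sorted(os.listdir(by_id_dir)):
        link = os.path.join(by_id_dir, name)
        if os.path.islink(link):
            # realpath follows the symlink, e.g. to /dev/sdb
            mapping[name] = os.path.realpath(link)
    return mapping


if __name__ == "__main__" and os.path.isdir("/dev/disk/by-id"):
    for name, device in persistent_names().items():
        print(f"{name} -> {device}")
```

The left-hand names stay the same across reboots, while the right-hand kernel names may not, which is why persistent names are the ones to reference in configuration.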

A Linux LVM label, however, provides correct identification and device ordering for a physical device, since devices can come up in any order when the system is booted. An LVM label remains persistent across reboots and throughout a cluster, so file systems built on LVM2 do not have this issue.

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/logical_volume_manager_administration/lvm_components#physical_volumes

Persistent device naming in Linux for Oracle database storage can be achieved in any of three ways:

a) Oracle ASMLib

http://www.oracle.com/technetwork/server-storage/linux/persistence-088235.html

Oracle ASMLib requires that the disk be partitioned for use with Oracle ASM. If you are using Linux udev for Oracle ASM, you can use the raw device as is, without partitioning. For Oracle OCFS2 or another clustered file system, partitioning of the disk is required.

Partitioning is good practice in any case: it discourages anyone from creating a partition table and file system on what looks like an unused raw device, which would cause problems if the device is in use by ASM.

After partitioning with Linux utilities such as fdisk or parted, we can create the ASM disk using Oracle ASMLib commands.

parted or fdisk

1) Starting from RHEL 6, Red Hat recommends the use of parted

2) Older versions of fdisk do not understand the GUID Partition Table (GPT) and are not designed for large partitions

3) The MBR (msdos) partition table that fdisk traditionally writes cannot address drives larger than 2 TB, so such drives need a GPT label
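The 2 TB limit follows from simple arithmetic: an MBR partition table stores sector addresses in 32 bits, so with 512-byte sectors it can address 2^32 × 512 bytes. The sketch below works that out and picks a parted label accordingly. The function names are hypothetical, and the commands are only built as argument lists, not executed.

```python
SECTOR_BYTES = 512        # assumed logical sector size
MBR_MAX_SECTORS = 2**32   # MBR stores sector addresses in 32 bits


def mbr_limit_bytes(sector_bytes=SECTOR_BYTES):
    """Largest disk size an MBR (msdos) partition table can address."""
    return MBR_MAX_SECTORS * sector_bytes


def partition_label_for(disk_bytes, sector_bytes=SECTOR_BYTES):
    """Pick the parted label type: gpt for disks beyond the MBR limit."""
    return "gpt" if disk_bytes > mbr_limit_bytes(sector_bytes) else "msdos"


def parted_commands(device, label):
    """Build (but do not run) parted commands for one whole-disk partition."""
    return [
        ["parted", "-s", device, "mklabel", label],
        ["parted", "-s", "-a", "optimal", device, "mkpart", "primary", "0%", "100%"],
    ]


disk_bytes = 60 * 1024**3  # a 60G vmdk, as in the example VM
label = partition_label_for(disk_bytes)
for command in parted_commands("/dev/sda", label):
    print(" ".join(command))
```

For the 60G disks in the example VM either label type works; the choice only becomes forced once a single vmdk grows past the MBR limit.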

Partitioning with fdisk

http://www.tldp.org/HOWTO/Partition/fdisk_partitioning.html

Configuring Disk Devices to Use Oracle ASMLIB

https://docs.oracle.com/en/database/oracle/oracle-database/12.2/cwlin/configuring-disk-devices-to-use-oracle-asmlib.html#GUID-5464167D-B8D6-4204-8C43-8F1E56E0ACC0

b) Oracle ASMFD

Starting with release 12.1, Oracle introduced the ASM Filter Driver (Oracle ASMFD), a kernel module that resides in the I/O path of the Oracle ASM disks. Oracle ASM uses the filter driver to validate write I/O requests to Oracle ASM disks.

Oracle ASMFD simplifies the configuration and management of disk devices by eliminating the need to rebind disk devices used with Oracle ASM each time the system is restarted.

Oracle ASM Filter Driver rejects any I/O requests that are invalid. This action eliminates accidental overwrites of Oracle ASM disks that would cause corruption in the disks and files within the disk group.

After the disk is partitioned, use the ASMCMD utility to provision disk devices for use with Oracle ASM Filter Driver.

https://docs.oracle.com/en/database/oracle/oracle-database/12.2/ostmg/administer-filter-driver.html#GUID-E1E9DA6F-6E4B-427A-83AE-7F9DFCE068D9
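The labeling step for options a) and b) can be sketched side by side. The example below only builds the command lines (`oracleasm createdisk` for ASMLib, `asmcmd afd_label` for ASMFD) rather than running them. The disk label ORA12CM_DATA01 and the helper name are assumptions made for illustration; the commands would normally be run as a privileged user on a host where the respective tooling is installed and configured.

```python
def label_command(method, label, partition):
    """Build the command that stamps an ASM label on a partition.

    method: "asmlib" (oracleasm createdisk) or "asmfd" (asmcmd afd_label).
    """
    if method == "asmlib":
        return ["oracleasm", "createdisk", label, partition]
    if method == "asmfd":
        return ["asmcmd", "afd_label", label, partition]
    raise ValueError(f"unknown method: {method!r}")


# Hypothetical label for the ASM partition of database ORA12CM
for method in ("asmlib", "asmfd"):
    print(" ".join(label_command(method, "ORA12CM_DATA01", "/dev/sda1")))
```

Either way, ASM afterwards discovers the disk by its label rather than by the /dev/sdX name, which is what makes the device persistent across reboots.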

c) Linux udev

Udev Rules

Udev uses rules files that determine how it identifies devices and creates device names. The udev service (systemd-udevd) reads the rules files at system startup and stores the rules in memory. If the kernel discovers a new device or an existing device goes offline, the kernel sends an event action (uevent) notification to udev, which matches the in-memory rules against the device attributes in /sys to identify the device. As part of device event handling, rules can specify additional programs that should run to configure a device.

https://docs.oracle.com/cd/E52668_01/E54669/html/ol7-s2-devices.html

Configuring Device Persistence Manually for Oracle ASM

https://docs.oracle.com/en/database/oracle/oracle-database/12.2/ladbi/configuring-device-persistence-manually-for-oracle-asm.html#GUID-70D50812-CCB2-41E4-AA3B-4689E1DA934E
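As a rough illustration of option c), the sketch below renders one udev rule that matches a partition by the SCSI identifier of its parent disk and gives it a stable symlink plus the ownership ASM needs. Everything specific in it is an assumption: the identifier value is made up, the grid / asmadmin owner and group are just a common convention, and the scsi_id path shown is the usual location on Oracle Linux 7. On vSphere, scsi_id typically returns a value only when the VM advanced setting disk.EnableUUID is TRUE.

```python
def asm_udev_rule(scsi_id, symlink, owner="grid", group="asmadmin", mode="0660"):
    """Render a single-line udev rule for one ASM partition.

    scsi_id is the value printed by `scsi_id -g -u -d /dev/sdX` for the disk.
    """
    return (
        'KERNEL=="sd?1", SUBSYSTEM=="block", '
        # $parent expands to the node name of the parent disk, e.g. sda
        'PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", '
        f'RESULT=="{scsi_id}", '
        f'SYMLINK+="{symlink}", OWNER="{owner}", GROUP="{group}", MODE="{mode}"'
    )


# Hypothetical identifier and symlink name, for illustration only
print(asm_udev_rule("36000c29f0000000000000000000000aa", "oracleasm/asm-disk1"))
```

A rule like this would go into a file under /etc/udev/rules.d/, after which ASM can be pointed at the /dev/oracleasm/* symlinks instead of the /dev/sdX names.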

The steps for creating VM vmdks on a vsanDatastore or vVOL compatible datastore are exactly the same as for provisioning vmdks on a VMFS datastore.

After creating VM vmdks on a vsanDatastore or vVOL compatible datastore, all steps for provisioning Oracle database storage, whether using a file system or Oracle ASM with ASMLib / ASMFD / Linux udev for device persistence, are exactly the same.

2) Oracle workloads on RDM/s (Physical / Virtual)

When you give your virtual machine direct access to a raw SAN LUN, you create an RDM disk that resides on a VMFS datastore and points to the LUN. You can create the RDM as an initial disk for a new virtual machine or add it to an existing virtual machine. When creating the RDM, you specify the LUN to be mapped and the datastore on which to put the RDM.

https://docs.vmware.com/en/VMware-vSphere/6.5/com.vmware.vsphere.storage.doc/GUID-D06EC5D3-FCE6-4E07-8967-0D146A12FEA0.html

The blog below shows how to add shared RDMs in Physical and Virtual Compatibility mode to a VM.

To be “RDM for Oracle RAC”, or not to be, that is the question

https://blogs.vmware.com/apps/2017/08/rdm-oracle-rac-not-question.html

After creating the VM RDMs, all steps for provisioning Oracle database storage, whether using a file system or Oracle ASM with ASMLib / ASMFD / Linux udev for device persistence, are exactly the same as detailed above.

3) Oracle workloads on In Guest Storage (dNFS / NFS / iSCSI )

Direct NFS Client integrates the NFS client functionality directly in the Oracle software to optimize the I/O path between Oracle and the NFS server. This integration can provide significant performance improvements.

About Direct NFS Client Mounts to NFS Storage Devices

https://docs.oracle.com/en/database/oracle/oracle-database/12.2/ladbi/about-direct-nfs-client-mounts-to-nfs-storage-devices.html#GUID-31591084-74BD-4B66-8C5B-68BF0FEE8750
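Direct NFS Client reads its mount definitions from an oranfstab file. As a small sketch of what such an entry looks like, the function below renders one server entry in the server / local / path / export layout. The server name, addresses (taken from a documentation address range) and mount paths are made up for illustration, and the function name is hypothetical.

```python
def oranfstab_entry(server, paths, exports):
    """Render one oranfstab server entry.

    paths:   list of (local_ip, server_ip) pairs, one per network path
    exports: list of (exported_path, local_mount_point) pairs
    """
    lines = [f"server: {server}"]
    for local_ip, server_ip in paths:
        lines.append(f"local: {local_ip}")
        lines.append(f"path: {server_ip}")
    for export, mount in exports:
        lines.append(f"export: {export} mount: {mount}")
    return "\n".join(lines) + "\n"


# Hypothetical NFS server and mount points
print(oranfstab_entry(
    "MyDataServer1",
    [("192.0.2.10", "192.0.2.20")],
    [("/vol/oradata1", "/mnt/oradata1")],
))
```

Listing more than one local / path pair is how additional network paths to the same NFS server are described.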

Oracle workloads can also be provisioned on iSCSI LUNs that the guest operating system connects to directly. Once the guest has logged in to the iSCSI targets, all steps for provisioning Oracle database storage, whether using a file system or Oracle ASM with ASMLib / ASMFD / Linux udev for device persistence, are exactly the same as detailed above.

https://docs.oracle.com/cd/E52668_01/E54669/html/ol7-s17-storage.html
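For in-guest iSCSI, the guest first discovers the targets offered by a portal and then logs in to one, after which the LUN appears as an ordinary /dev/sdX device. The sketch below builds the two iscsiadm commands involved without running them. The portal address and target IQN are made-up examples, and the helper name is hypothetical.

```python
def iscsi_login_commands(portal, target_iqn):
    """Build (but do not run) the discovery and login commands for one target."""
    return [
        # Ask the portal which targets it offers
        ["iscsiadm", "-m", "discovery", "-t", "sendtargets", "-p", portal],
        # Log in to the chosen target; its LUNs then show up as block devices
        ["iscsiadm", "-m", "node", "-T", target_iqn, "-p", portal, "--login"],
    ]


# Hypothetical portal and target name
for command in iscsi_login_commands("192.0.2.50:3260", "iqn.2018-01.com.example:oradata"):
    print(" ".join(command))
```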

Key points to take away from this blog

- vmdks provisioned from VMFS / vsanDatastore / vVOL compatible datastores can be used either as Oracle ASM disks (using ASMLib / ASMFD / Linux udev for device persistence) or as file system storage for the database

- RDMs (Physical / Virtual) can also be used as VM storage for Oracle databases

- VMware recommends using VMDKs for provisioning storage for ALL Oracle environments

- In-guest storage options are also available as database storage

Conclusion

Storage is one of the most important aspects of an I/O-intensive Oracle workload, and VMware vSphere provides many storage options that let us harness the power of the vSphere platform and the underlying storage to meet business SLAs.

All Oracle on vSphere white papers, including Oracle licensing on vSphere/vSAN, Oracle best practices, RAC deployment guides and the workload characterization guide, can be found at the URL below.

Oracle on VMware Collateral – One Stop Shop

https://blogs.vmware.com/apps/2017/01/oracle-vmware-collateral-one-stop-shop.html