As you dive into the inner workings of the new version of VMware vSphere (and its ESXi hypervisor), one of the gems you will discover to your delight is the enhanced virtual machine portability feature, which allows you to vMotion running, clustered Windows VMs that have been configured with shared disks.

In vSphere 6.0, you can configure two or more VMs running Windows Server Failover Clustering (or MSCS for older Windows OSes), using common, shared virtual disks (RDM) among them AND still be able to successfully vMotion any of the clustered nodes without inducing failure in WSFC or the clustered application. What’s the big deal about that? Well, it is the first time VMware has ever officially supported such a configuration without a third-party solution, a formal exception, or a list of caveats. Simply put, this is now an official, out-of-the-box feature with no exceptions or special requirements other than the following:

- The VMs must be in “Hardware 11” compatibility mode – which means that you are either creating and running the VMs on ESXi 6.0 hosts, or you have converted your old template to Hardware 11 and deployed it on ESXi 6.0

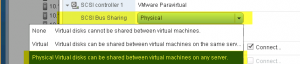

- The disks must be connected to virtual SCSI controllers that have been configured for “Physical” SCSI Bus Sharing mode

- And the disk type *MUST* be of the “Raw Device Mapping” type. VMDK disks are *NOT* supported for the configuration described in this document.
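If you keep an inventory of your clustered VMs, the three requirements above are easy to check mechanically. What follows is only a minimal sketch: the `SharedDiskConfig` record and its field names are hypothetical (they are not part of any VMware API), and it assumes that hardware versions later than 11 also qualify.

```python
from dataclasses import dataclass


@dataclass
class SharedDiskConfig:
    """Hypothetical record describing one clustered VM (not a VMware API type)."""
    hardware_version: int  # e.g. 11 for "Hardware 11"
    bus_sharing: str       # "physical", "virtual" or "none"
    disk_type: str         # "rdm" or "vmdk"


def unmet_requirements(cfg):
    """Return a list of the requirements that this VM does not meet."""
    problems = []
    # Assumption: anything newer than hardware version 11 is also acceptable.
    if cfg.hardware_version < 11:
        problems.append("hardware version must be 11 or later")
    if cfg.bus_sharing != "physical":
        problems.append("SCSI bus sharing must be set to Physical")
    if cfg.disk_type != "rdm":
        problems.append("shared disk must be a Raw Device Mapping")
    return problems


good = SharedDiskConfig(hardware_version=11, bus_sharing="physical", disk_type="rdm")
bad = SharedDiskConfig(hardware_version=10, bus_sharing="virtual", disk_type="vmdk")

print(unmet_requirements(good))
print(unmet_requirements(bad))
```

An empty list means the VM satisfies all three requirements; anything else tells you what to fix before attempting a vMotion.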

What is the value of this new feature?

Concurrent host and guest clustering provides a much-improved high availability option for virtualized workloads. It is a configuration that makes virtualization that much more compelling than a physical configuration, and it is something our customers have been requesting for a very long time. With this configuration, customers can provide application-level high availability using the Windows Failover Clustering feature (with which most Windows administrators are already familiar) while, at the same time, providing machine-level resilience for both the ESXi hosts and the virtual machines using vSphere HA and vMotion.

- vSphere HA protects availability in a vSphere cluster by ensuring that, in the event of an unplanned outage affecting an ESXi host, the VMs running on that host are automatically restarted on other ESXi hosts in the cluster.

- In the event of a planned outage, a vSphere administrator can move a VM from an ESXi host while the VM is up and running and its application and services continue to be accessible to the end-user or dependent process/service. This movement of the VM is done using vMotion.

- vMotion can also be used (manually or automatically) for resource-balancing in a given vSphere cluster through the VMware Distributed Resource Scheduler (DRS) feature.

Configuring vMotion-compatible Shared Disk VMs (we assume here that the vSphere cluster is operational and contains ESXi 6.0 hosts)

- Verify that the VM’s hardware version is at hardware 11

- If it’s not, you must upgrade it to 11

- Ensure that the VMs are powered off

- Add a virtual SCSI controller to the VMs

- Set the SCSI controller’s bus sharing mode to “Physical”

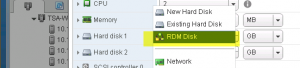

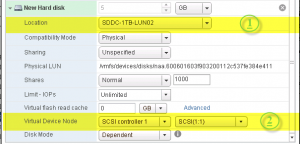

- Add a new RDM disk to the first VM

- (1) It is recommended that you store the mapping file in a location that is centrally and easily accessible to all the ESXi hosts in the vSphere cluster (you never know which host may house the VM at some point in the future)

- (2) Ensure that the new disk is connected to the “Physical Mode” SCSI controller configured in previous steps

- Power on and log into the VM. Configure and format the disk in disk manager as desired.

- Add an “existing disk” to the second VM.

- Ensure that you are selecting the disk that you added to the first VM (we are sharing disks here, remember?)

- Ensure that this disk is also connected to the “Physical Mode” SCSI controller configured in previous steps

- Power on this VM and log into Windows.

- The disk should be visible in disk manager on both VMs

- Repeat this process for all other VMs that will be sharing this disk (up to 5 such VMs are supported on vSphere)
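Before you power everything on, it can be worth sanity-checking the plan across all the nodes, not just one VM at a time. The sketch below is an illustration only: the VM names, the datastore path and the `check_shared_disk_plan` helper are all made up for this example, and it does not talk to vCenter. It simply confirms that every VM points at the same mapping file, that every controller is in Physical bus sharing mode, and that you stay within the 5-VM limit mentioned above.

```python
MAX_NODES = 5  # limit on VMs sharing a disk, as quoted in the steps above


def check_shared_disk_plan(attachments):
    """Validate a planned layout.

    `attachments` maps a VM name to a tuple of
    (mapping file path, bus sharing mode of the controller it hangs off).
    Returns a list of human-readable problems (empty if the plan looks sane).
    """
    errors = []
    if len(attachments) > MAX_NODES:
        errors.append(f"{len(attachments)} VMs share the disk; at most {MAX_NODES} are supported")
    # Every VM must reference the very same mapping file.
    paths = {path for path, _mode in attachments.values()}
    if len(paths) > 1:
        errors.append("VMs reference different mapping files: " + ", ".join(sorted(paths)))
    # Every VM must attach the disk to a controller in Physical bus sharing mode.
    for vm, (_path, mode) in sorted(attachments.items()):
        if mode != "physical":
            errors.append(f"{vm}: controller bus sharing is {mode!r}, expected 'physical'")
    return errors


# Hypothetical two-node plan; names and paths are placeholders.
plan = {
    "wsfc-node1": ("[shared-ds] wsfc/quorum-rdm.vmdk", "physical"),
    "wsfc-node2": ("[shared-ds] wsfc/quorum-rdm.vmdk", "physical"),
}
print(check_shared_disk_plan(plan))
```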

That, my friend, is the extent of the configuration steps required to share vMotion-compatible disks among VMs in vSphere 6.0.

Now, go ahead and migrate any of the VMs while they are powered on.

There is a catch – or two …. ok, maybe three catches

Yes, you knew this was coming, didn’t you?

- VMware still does not support Storage vMotion for VMs that are configured with shared disks. If you attempt to perform a Storage vMotion on such workloads, the attempt will fail.

- While it is technically possible to use a VMDK (instead of an RDM) disk for the configuration described above, please be advised that VMware does not support such a configuration. You will be able to vMotion the workloads successfully, and things will appear to behave optimally and without a hitch. PLEASE DO NOT DO SO. Such a configuration may lead to instability and data corruption. Please see “Configuring Microsoft Cluster Service fails with the error: Validate SCSI-3 Persistent Reservation” for more information.

- Insufficient bandwidth WILL hamper your vMotion operation and cause service interruption for your clustered workloads. Wait, are you surprised? How do you suppose we get a running VM from one host to another? Teleportation? No, we copy it over the wire incrementally, and we strive to complete the copy and the switch-over very rapidly. IF the vMotion network is congested or undersized (say you try to vMotion a running “monster VM” with 128 vCPUs and 4TB of RAM over a 1Gb link that is shared with other traffic), the copy and switch-over operation will take a very long time, long enough for the VM to lose heartbeat with its peer nodes and, consequently, trigger a failover or a shutdown of its cluster service for lack of quorum.
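To put a number on that “monster VM” scenario, here is a back-of-the-envelope estimate. Treat it as a rough lower bound under loud assumptions: it only models a single pass over the VM’s memory at full line rate, it uses decimal units (1 TB = 10^12 bytes, 1 Gb/s = 10^9 bits per second), and it ignores protocol overhead and the re-copying of memory pages that change during the migration. The `min_copy_seconds` helper is made up for this illustration.

```python
TB = 10**12    # bytes in a (decimal) terabyte
GBIT = 10**9   # bits per second in a (decimal) gigabit link


def min_copy_seconds(memory_bytes, link_bits_per_second, usable_fraction=1.0):
    """Lower bound on the time needed to push `memory_bytes` across the link once.

    `usable_fraction` models a link shared with other traffic
    (0.5 means only half of the bandwidth is available to vMotion).
    """
    return memory_bytes * 8 / (link_bits_per_second * usable_fraction)


one_gig = min_copy_seconds(4 * TB, 1 * GBIT)                # dedicated 1 Gb link
one_gig_shared = min_copy_seconds(4 * TB, 1 * GBIT, 0.5)    # half of a 1 Gb link
ten_gig = min_copy_seconds(4 * TB, 10 * GBIT)               # dedicated 10 Gb link

for label, seconds in [("1 Gb", one_gig), ("1 Gb, half share", one_gig_shared), ("10 Gb", ten_gig)]:
    print(f"{label}: {seconds:.0f} s (~{seconds / 3600:.1f} h)")
```

Even in this idealized model, a single pass over 4TB takes 32,000 seconds (close to nine hours) on a dedicated 1Gb link and 3,200 seconds on 10Gb, which is why the network you give vMotion matters so much for large clustered VMs.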

To avoid the issue described in the previous paragraph (and to ensure the overall health and functionality of your vMotion operations), VMware recommends the following:

- Put the vMotion VMkernel portgroup on a 10Gb (or faster) network

- If you do not have a 10Gb or faster network, create more than one vMotion VMkernel portgroup in the vSphere cluster. Use a separate 1Gb NIC for each portgroup

- IF using 1Gb NICs, consider enabling jumbo frames at all levels of the network stack (from physical switches all the way to the in-guest network card)

- IF none of the recommended options above is possible, consider tuning the cluster services inside the Guest OS to tolerate longer heartbeat timeouts. See Tuning Failover Cluster Network Thresholds for more information and recommended settings.
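If you do go down the tuning route, the arithmetic is simple enough to reason about before you touch anything. In WSFC, the cluster properties `SameSubnetDelay` (milliseconds between heartbeats) and `SameSubnetThreshold` (number of missed heartbeats tolerated) together determine how long a node can stay silent before its peers give up on it. The sketch below just multiplies the two; the helper names are made up, and the values used are illustrative only, so take the recommended settings from the article referenced above rather than from this example.

```python
def tolerated_silence_seconds(delay_ms, threshold):
    """Seconds a node may miss heartbeats before its peers consider it down."""
    return delay_ms * threshold / 1000


def survives_pause(pause_seconds, delay_ms, threshold):
    """True if a pause of `pause_seconds` stays below the heartbeat tolerance."""
    return pause_seconds < tolerated_silence_seconds(delay_ms, threshold)


# Illustrative values only: 1000 ms between heartbeats, 5 vs. 10 misses tolerated.
print(tolerated_silence_seconds(1000, 5))    # 5.0
print(tolerated_silence_seconds(1000, 10))   # 10.0

# A hypothetical 8-second stall during switch-over:
print(survives_pause(8, 1000, 5))    # False
print(survives_pause(8, 1000, 10))   # True
```

The trade-off is the usual one: the more silence you tolerate, the longer the cluster takes to notice a node that has genuinely failed.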

That’s all. Nothing fanciful or complicated – we took care of the complexities for you, so go ahead and vMotion that shared-disk clustered workload. But don’t forget the RDM.