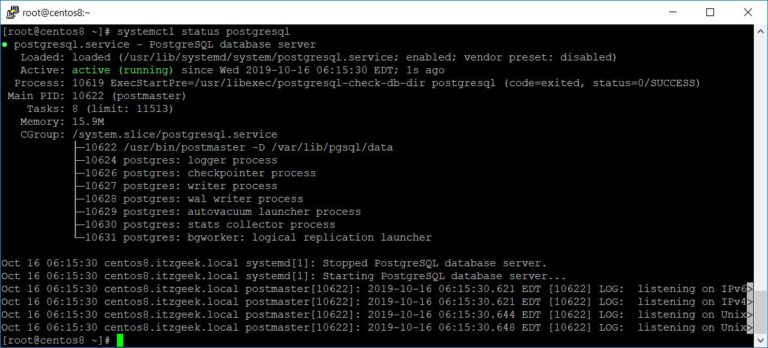

Picture the scene: at 3 a.m., a sensor at a retail kiosk triggered a silent alarm. Investigators discovered that the container runtime had been tampered with — a misconfiguration had left the node exposed. Within hours, engineers across open source communities identified the flaw, patched the upstream components and published remediation guidance. The fix was fast, decentralized and vendor-neutral.

This type of incident illustrates both the potential and the pressure of cloud native edge computing. By design, edge systems operate in remote, resource-constrained environments and run business-critical workloads, often without local staff or real-time observability.

To secure these deployments, enterprises must modernize their edge infrastructure. For most organizations, that effort involves much more than just deploying containers. It requires investment in resilient systems that can absorb change, automate configuration enforcement and adapt quickly to emerging threats.

What is cloud native edge?

Edge computing refers to processing data at, or near, its source — enabling faster and more efficient decision-making. First, sensors, cameras or other embedded devices capture the raw input. Instead of sending that data to a central data center or cloud platform, edge systems process it locally using ruggedized computing nodes. As a result, the system produces a decision or response almost instantly.
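As a rough illustration of that capture, process and respond flow, the sketch below makes a decision on the node itself and reduces raw readings to a small summary that could be uploaded later. The `Reading` type, the threshold value and the function names are all hypothetical and chosen only for this example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    sensor_id: str        # hypothetical identifier for the capturing device
    temperature_c: float  # raw input captured at the source


# Hypothetical limit; a real deployment would load this from configuration.
OVERHEAT_THRESHOLD_C = 85.0


def decide_locally(reading: Reading, threshold: float = OVERHEAT_THRESHOLD_C) -> str:
    """Choose an action on the edge node itself, with no round trip upstream."""
    return "shut_down" if reading.temperature_c >= threshold else "continue"


def summarize(readings: list[Reading]) -> dict:
    """Reduce a non-empty batch of raw readings to a compact summary."""
    temps = [r.temperature_c for r in readings]
    return {"count": len(temps), "max_c": max(temps), "mean_c": mean(temps)}
```

Only the summary would need to leave the site, which is one way local processing cuts both latency and backhaul traffic.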

The practice of cloud native edge computing applies the design principles of cloud native infrastructure — containers, orchestration and declarative automation — to the decentralized model of edge computing. With a cloud native approach, teams deploy applications at the edge that are portable, resilient and easy to manage at scale.

For nearly all cloud native edge platforms, open source is the de facto foundation. Open source tools and platforms emerge from communities that collaborate across enterprises, industries and geographies. Through a shared commitment to transparency and ecosystem flexibility, these technologies accelerate innovation and reduce long-term costs.

In most cases, the entire backbone of cloud native edge computing is a stack of open source technologies:

- Linux provides the secure, modular operating system layer.

- Containers isolate workloads, ensuring consistent execution across environments.

- Kubernetes handles orchestration and scaling, while Git-based (GitOps) workflows automate deployment and policy changes through version-controlled declarations.

These components are not proprietary add-ons. Rather, they are community-maintained standards backed by the Cloud Native Computing Foundation and Linux Foundation. Specifications such as OCI, CNI and CSI ensure that edge platforms remain modular and vendor-neutral. Together, these specifications allow edge systems to evolve without needing to be fully rewritten.

For teams accustomed to legacy infrastructure, this model may seem ambitious. However, it already powers critical systems in telecom towers, manufacturing plants, autonomous vehicles and energy grids — delivering speed, consistency and resilience at scale.

Recognize potential wins

The shift to cloud native edge computing can deliver several tangible business outcomes. For example, edge computing inherently reduces latency. Traditional systems route data back to centralized infrastructure for processing, which introduces delays and limits responsiveness. Cloud native edge systems process data on-site, eliminating the delays of backhaul communication. This local responsiveness supports real-time analytics, closed-loop controls and immediate decision-making.

Long-term cost efficiency is another important driver. Lightweight Linux distributions and optimized containers allow you to run workloads on existing or low-power hardware. Open source orchestration tools reduce licensing complexity and simplify integration across environments. In contrast, conventional platforms often rely on custom integrations or per-node licensing, both of which can increase operational overhead as fleets expand. These efficiencies can lower capital expenditures and accelerate time-to-value.

Scalability, which is built into the model, also provides advantages. Teams that manage infrastructure as code and use GitOps workflows can deploy to thousands of sites using a consistent process. At the same time, automated pipelines make updates traceable, repeatable and less prone to errors. Without these workflows, teams often fall back on manual scripts, remote access and ad hoc provisioning methods that don’t scale beyond initial pilots.

There are also several benefits related to system adaptability. With a cloud native approach, you can roll out new services, integrate observability tools and improve energy efficiency without redesigning your architecture. This flexibility gives open source edge technologies long-term relevance in fast-changing environments. Platforms like SUSE Edge extend that foundation with enterprise support and additional operational reliability.

Prepare for potential risks

While cloud native edge architectures offer clear operational advantages, they also introduce new risks — especially for security, compliance and lifecycle management at scale.

One of the most pressing challenges is patch latency. When vulnerabilities emerge in container runtimes or orchestration layers, many edge environments struggle to respond quickly. Disconnected networks, limited bandwidth and manual approval loops can delay updates. In some cases, these delays extend exposure across hundreds or thousands of sites. Without automated pipelines for delivering pre-staged, signed images, teams may struggle to meet modern security demands.
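To make the idea of pre-staged, signed images concrete, here is a minimal sketch of the check an edge node might run before applying an update it received earlier. It uses HMAC-SHA256 from the Python standard library purely as a stand-in. Production pipelines typically rely on asymmetric signatures and dedicated signing tools, and the function names here are hypothetical.

```python
import hashlib
import hmac


def sign_image(image_bytes: bytes, key: bytes) -> str:
    """Produce a hex signature for an image (stand-in for real image signing)."""
    return hmac.new(key, image_bytes, hashlib.sha256).hexdigest()


def verify_staged_image(image_bytes: bytes, expected_signature: str, key: bytes) -> bool:
    """Return True only if the staged image still matches its signature."""
    actual = sign_image(image_bytes, key)
    # compare_digest performs a constant-time comparison.
    return hmac.compare_digest(actual, expected_signature)
```

A node that holds the staged image and its signature can run this check while disconnected and refuse to apply anything that fails it.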

Configuration drift is another major concern. Small inconsistencies in OS versions, Kubernetes policies or runtime behavior can create instability or open audit gaps. Many teams try to enforce uniformity through documentation or one-off scripts, but these break down as edge Kubernetes deployments scale. A more resilient approach relies on declarative workflows, GitOps pipelines and policy-as-code frameworks. In addition to defining the desired system state, these automation and governance practices continuously reconcile against it.
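The reconcile-against-desired-state idea can be sketched in a few lines. This is a simplified illustration, assuming node state can be represented as a flat dictionary. The key names used in the usage note are hypothetical, and real controllers work against much richer objects.

```python
def detect_drift(desired: dict, actual: dict) -> dict:
    """Return {key: (desired_value, actual_value)} for every mismatch."""
    drift = {}
    for key in sorted(desired.keys() | actual.keys()):
        want = desired.get(key)  # None means the key should not exist
        have = actual.get(key)   # None means the key is missing on the node
        if want != have:
            drift[key] = (want, have)
    return drift


def reconcile(desired: dict, actual: dict) -> tuple[dict, dict]:
    """Return the corrected state plus a report of what had drifted."""
    drift = detect_drift(desired, actual)
    # The declared state wins: undeclared keys are dropped, mismatches reset.
    return dict(desired), drift
```

For example, with a desired state of `{"os_version": "1.2"}` and an actual state of `{"os_version": "1.1", "debug_shell": "enabled"}`, the report flags both the stale version and the undeclared setting. Running such a comparison on a schedule, rather than once at install time, is what distinguishes continuous reconciliation from one-off scripts.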

Many organizations manage these risks with infrastructure that prioritizes drift detection, versioned system images and repeatable automation.

Explore proven edge practices

Open source edge computing thrives on transparency, shared tooling and iterative improvement. The following best practices reflect proven strategies — aligned with the implementation patterns of enterprises already running large-scale edge Kubernetes fleets — that help deliver both operational clarity and security assurance. In industrial, retail, healthcare and other edge-reliant environments, these approaches also support the unification of data flows between Information Technology and Operational Technology systems.

- Immutable operating systems

Build edge systems using read-only partitions and signed boot mechanisms. This protects the OS from unauthorized modification and ensures each node boots into a known good state.

- GitOps deployment automation

Use Git repositories as the single source of truth for infrastructure and application configuration. Automate change rollout and rollback without manual intervention or scripting.

- Kubernetes native policy enforcement

Encode security and compliance controls as policy-as-code and enforce them within Kubernetes. This ensures consistency across clusters and alignment with both IT and OT requirements.

- Verified software provenance

Generate and validate software bills of materials during builds. Sign container images to prove origin, trace dependencies and streamline vulnerability response.

- Offline-ready update pipelines

Prepare updates for clusters with intermittent or no connectivity. Enable edge nodes to apply patches locally while preserving auditability and lifecycle control.

- Fleetwide observability and tracing

Standardize telemetry collection across all clusters. Make logs, metrics and traces visible to Ops, Security and Platform teams from a unified interface.
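As one illustration of the policy-as-code practice listed above, the sketch below expresses two controls as plain functions and evaluates a simplified workload description against them. The policy names and the `Spec` shape are hypothetical. In a real cluster, this role is usually played by an admission controller or policy engine rather than application code.

```python
from typing import Callable

Spec = dict  # simplified, hypothetical workload description


def no_privileged_containers(spec: Spec) -> bool:
    """Pass only when the workload does not request privileged mode."""
    return not spec.get("privileged", False)


def image_pinned_by_digest(spec: Spec) -> bool:
    """Pass only when the image reference includes a sha256 digest."""
    return "@sha256:" in spec.get("image", "")


# Policies live in version control next to the workloads they govern.
POLICIES: dict[str, Callable[[Spec], bool]] = {
    "no-privileged-containers": no_privileged_containers,
    "image-pinned-by-digest": image_pinned_by_digest,
}


def violations(spec: Spec) -> list[str]:
    """Return the names of every policy the spec fails, in declaration order."""
    return [name for name, check in POLICIES.items() if not check(spec)]
```

Because each rule is ordinary, reviewable code, the same checks can run in a build pipeline and again at deployment time, which keeps enforcement consistent across clusters.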

Together, these practices can enable consistent configuration, secure workflows and operational confidence at scale. They are especially relevant to deployments where IT and OT converge at the edge, even in isolated environments or across diverse hardware footprints.

Plan your edge deployment

When putting these best practices into action, some organizations may look to commercial platforms or open source distributions for implementation. Others may focus on refining internal architectures or updating procurement criteria.

In either case, the following questions offer a practical lens for assessing how well a strategy aligns with the design goals of cloud native edge computing. They may help surface implementation gaps, clarify trade-offs and guide long-term planning for secure, scalable operations.

- Is the platform 100% open source and upstream-aligned?

- Can it operate reliably across different hardware profiles, such as industrial gateways, retail kiosks or ruggedized IoT devices?

- Does it support GitOps and declarative automation natively?

- Can updates and patches be staged for offline deployment?

- Is hardened Linux included, with lifecycle guarantees and long-term support?

- Does it offer built-in tools or APIs to monitor power efficiency, idle time and hardware utilization?

Align with open standards

The strongest force in edge innovation is not a single technology. Rather, it is the way open communities collaborate. Projects stewarded by the CNCF and LF Edge shape common practices, vet code through broad peer review and release updates at a pace that few proprietary development cycles can sustain. From day one, this model delivers wider testing and built-in interoperability, which provide critical advantages when you are tasked with securing thousands of remote nodes.

Because these projects publish open standards, integration is an upfront design feature. You can scale across diverse hardware without rewriting code or waiting for vendor roadmaps. Vendors that contribute upstream and operate transparently add another layer of trust. They respect openness, support collaboration and help teams build for tomorrow without sacrificing control today. This is the commitment and operating model that SUSE embraces through solutions like SUSE Edge Suite.

Advance your edge strategy

At the edge, performance and security go hand in hand. Cloud native edge computing supports both goals — especially when teams adopt declarative workflows, automate compliance and unify observability across distributed fleets.

This shift requires rethinking how systems are built, updated and verified. While implementation demands investment, it delivers a repeatable blueprint for bridging IT and operational technology — and for establishing a foundation that is secure, scalable and resilient. When powered by open source technologies such as Linux, containers, Kubernetes and GitOps, these systems enable enterprises to run modern workloads across thousands of locations with minimal operational friction.

Is it time to move beyond the data center? Download the Cloud Native Edge Essentials e-book to get access to implementation guides and platform strategies that support scalable, secure deployments at the network edge.
