By Bob Goldsand, Oleg Ulyanov, and Todd Muirhead

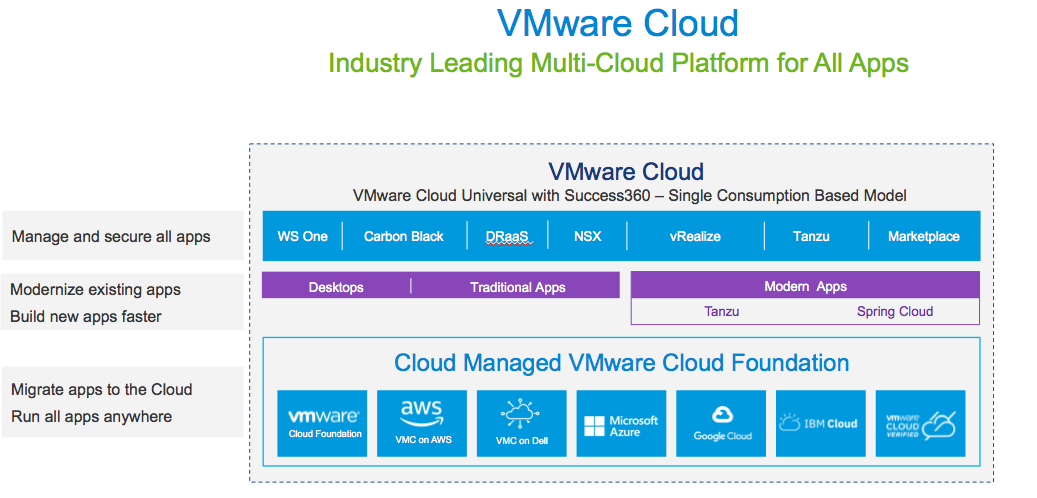

VMware’s Any Device, Any Application, Any Cloud vision is a winning strategy and resonates well with our customers. The vSphere cloud platform enables our customers to unify around a single enterprise-ready platform while leveraging existing skill sets for private, hybrid, or public cloud deployments. We are fielding more and more workload-based questions from customers and partners looking for prescriptive guidance that expands upon VMware’s Any, Any, Any vision.

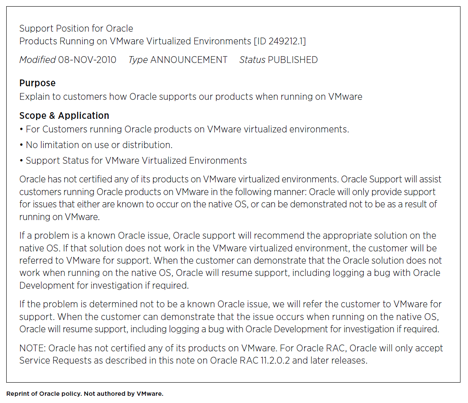

Many surveys report that about 80% of enterprise mission-critical workloads have not migrated to the cloud. This is of particular interest to us, as we have all spent our careers in the enterprise space. Collectively we have authored, co-authored, or been credited with a large percentage of the VMware white papers published in the enterprise space. These best practice and performance papers include Oracle, SAP, SAP HANA, SAP/Sybase ASE & IQ, and Microsoft SQL Server, and cover transactional, analytic, in-memory, media & entertainment, and Telco workloads.

Enterprise Cloud Strategy

When devising a cloud strategy for enterprise workloads, consistent and predictable performance is a fundamental requirement. Regardless of where a workload resides, whether in a private, hybrid, or public cloud, its service level agreements (SLAs) must be maintained. Any cost savings associated with the cloud are inconsequential if SLAs cannot be met. Customers expect that performance will not be sacrificed when they move to one of these cloud models. Paul Maritz, CEO of VMware from 2008 to 2012, said “Cloud is about how you do computing, not where you do computing.”

A good starting point for any cloud discussion is a clear understanding of how shared deployments differ from dedicated host deployments in the cloud. With the shared model, resources are shared among the customers, or tenants, on a physical server. With dedicated hosts, you are the only tenant on that physical server and can utilize the resources and optimize your workloads as necessary. The differences between these two cloud models can degrade or enhance performance.

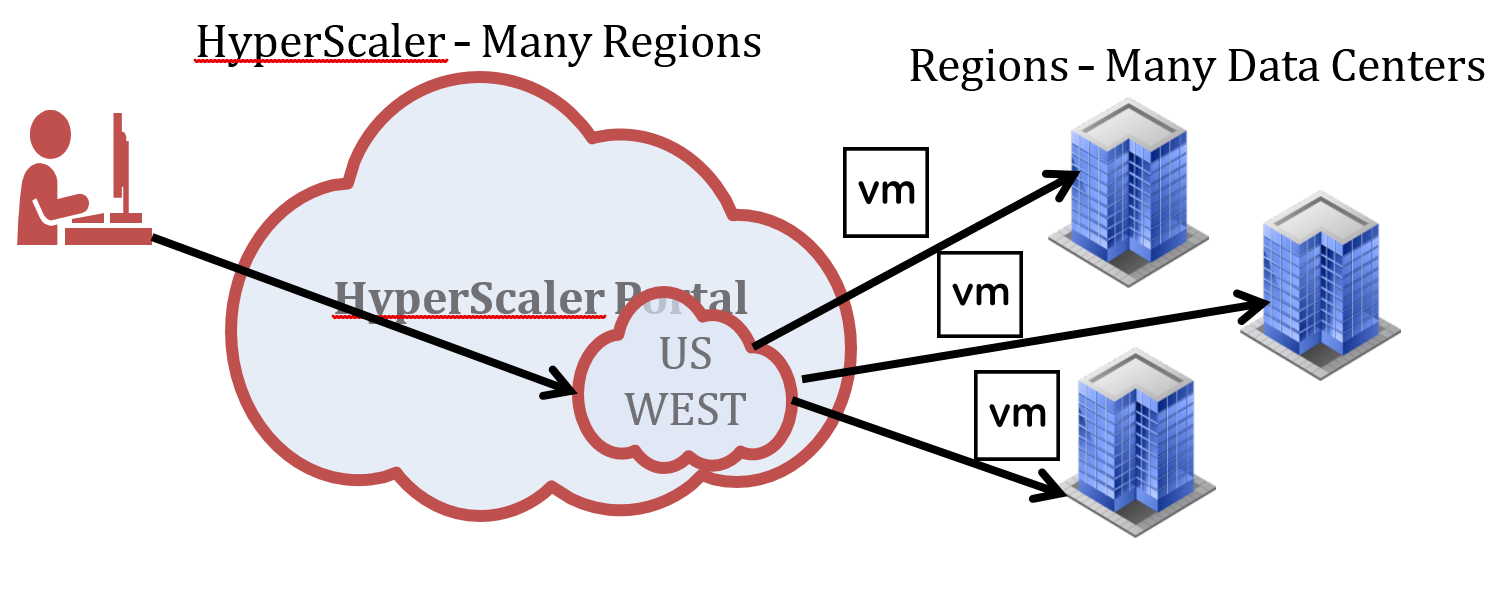

Using the shared cloud model, workloads are deployed as virtual machines into a specific geography and then region. These regions can consist of many data centers, as shown in Figure 2.

Note: Geography and region terminology differs from hyperscaler to hyperscaler.

The shared model often relies on vast economies of scale to be workable. These clouds are so large that they are often referred to as “hyperscalers”. By co-locating virtual machines from many different customers on hosts as efficiently as possible, they are able to provide service to their customers at a lower cost. Small customers are able to use portions of large high-speed servers for an on-demand price that can be metered by the hour. This makes it easy for small customers to develop and host small applications without having to commit to an entire dedicated host.

When deployed in a shared model, virtual machines can be placed in one or more of the data centers that constitute a region. Specific placement of the virtual machines is based on many factors, such as resiliency and resource availability. Many hyperscalers’ data centers are purpose-built, consisting of racks dedicated to just databases, applications, or specialized services.

In a shared model, the inability to control VM placement and keep related VMs as close together as possible for data locality can negatively affect performance and ultimately have SLA implications. Virtually all enterprise applications are multi-tiered, so if an application tier VM is running in a different data center or rack than the database tier VM, unnecessary latencies are introduced that will degrade performance. As an example, think of a massively parallel processing (MPP) database whose nodes could potentially be distributed across data centers; any MPP database query will only be as fast as its slowest node. This conflicts with the customer’s key assumption that performance should not degrade when moving workloads to the cloud.
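The "slowest node" effect can be illustrated with a minimal Python sketch. It assumes a simple scatter-gather query in which a coordinator waits for every node; the latency constants and the `query_time_ms` helper are hypothetical values chosen for illustration, not measurements from any cloud.

```python
# Hypothetical one-way network latencies in milliseconds (illustrative only).
SAME_RACK_MS = 0.1
CROSS_DATACENTER_MS = 2.0


def query_time_ms(node_compute_ms, node_network_ms):
    """Return the completion time of a scatter-gather query.

    The coordinator must wait for every node, so the query finishes
    when the slowest node (compute plus network round trip) responds.
    """
    return max(c + 2 * n for c, n in zip(node_compute_ms, node_network_ms))


# Four nodes that each need the same compute time for their slice.
compute = [50.0, 50.0, 50.0, 50.0]

# All four nodes in the same rack as the coordinator.
colocated = query_time_ms(compute, [SAME_RACK_MS] * 4)

# Same nodes, but one of them placed in a different data center.
scattered = query_time_ms(compute, [SAME_RACK_MS] * 3 + [CROSS_DATACENTER_MS])

print(f"co-located: {colocated:.1f} ms, one remote node: {scattered:.1f} ms")
```

Under these assumptions a single remotely placed node sets the completion time for the whole query, even though the other three nodes respond quickly.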

In the shared model, VM placement is based on which data center or server resources are available at the time the workload and its VMs are deployed. When the VMs are shut down and then restarted, they are placed again based on resource availability at that time. With each shutdown and startup, the latencies between the tiers of the application stack can vary and affect performance accordingly.

A common tactic for controlling cloud cost is to shut down VMs at night. Starting them back up each morning “shuffles” the VM placement deck, resulting in a new set of application latencies. This can result in performance that is inconsistent and unpredictable.
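The daily "shuffle" can be sketched as a small simulation. This is a toy model under stated assumptions: the three placement outcomes, their latencies, and the uniform random choice are all hypothetical, and real hyperscaler placement algorithms are far more sophisticated.

```python
import random

# Hypothetical app-to-database latencies per placement outcome, in milliseconds.
LATENCY_MS = {"same_rack": 0.1, "same_datacenter": 0.5, "cross_datacenter": 2.0}


def place_on_restart(rng):
    """Pick where the app VM lands relative to the database VM.

    Models placement driven by resource availability at start time,
    which the tenant does not control (assumed uniform here).
    """
    return rng.choice(list(LATENCY_MS))


def simulate_restarts(days, seed=0):
    """Return the app-to-database latency observed after each daily restart."""
    rng = random.Random(seed)
    return [LATENCY_MS[place_on_restart(rng)] for _ in range(days)]


shared = simulate_restarts(days=30)
dedicated = [LATENCY_MS["same_rack"]] * 30  # placement kept fixed by the tenant

print("shared latency range:   ", min(shared), "to", max(shared), "ms")
print("dedicated latency range:", min(dedicated), "to", max(dedicated), "ms")
```

The point of the sketch is the spread rather than the specific numbers: when placement is re-decided at every startup, the latency between tiers becomes a variable instead of a constant.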

These potential issues with the shared model are mitigated as much as possible. The hyperscalers use complex and efficient algorithms when placing virtual machines in a given region. They have also developed features that enable customers to influence VM placement and minimize latency. Depending on the application, the effect on performance can vary widely.

Cloud Dedicated Hosts

In a dedicated host model, the customer is the sole tenant of the cluster and no resources are shared. This is the model that VMware customers are accustomed to when deploying their virtual machines and the associated business-critical workloads. One aspect that differentiates VMware-based clouds is the ability to perform workload management and place VMs based on their workload characteristics.

Some of the tools used to perform workload management are Live-Migration (vMotion) and Distributed Resource Scheduler (DRS). vMotion can migrate running VMs based on workload requirements. Distributed Resource Scheduler can balance and optimize VM workloads and migrations in an automated, semi-automated, or manual manner. These migrations can be based on customer defined rules or resource contention.

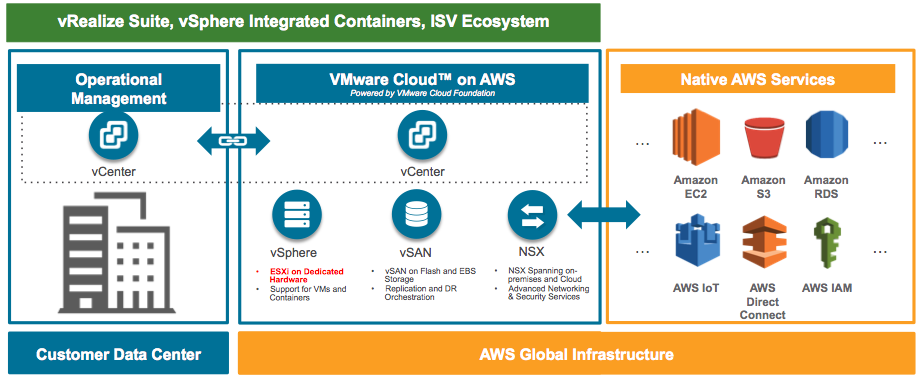

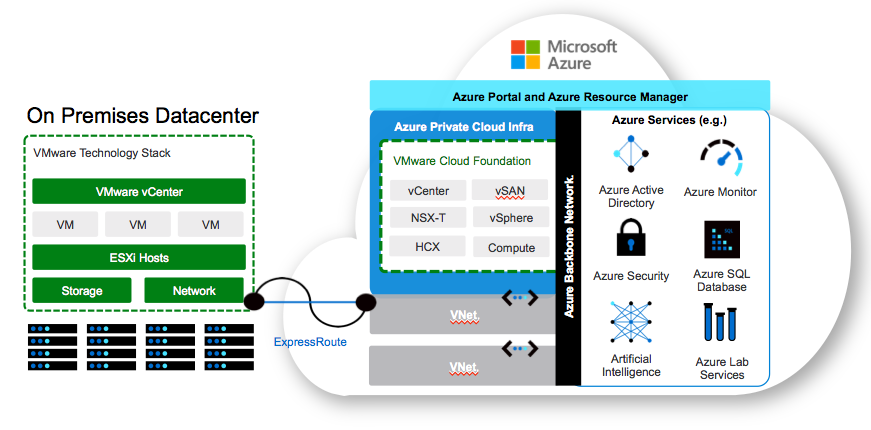

Using dedicated hosts and clusters along with vMotion and DRS lets you control VM placement and, in turn, reduce the latency associated with running VMs in different racks or data centers in a shared model. Using dedicated hosts running on VMware’s platform means that performance should not degrade when migrating to the public cloud. Two examples of VMware’s public cloud solutions that leverage these principles are VMware Cloud on AWS and Microsoft Azure VMware Solution, shown below.

NSX Performance Acceleration

VMware NSX Data Center is known for its ability to abstract networking constructs, but what is less well known is that it can also improve application performance. VM-to-VM communication within the application stack, or a network-intensive business process flow, can benefit greatly from the optimized network path that is possible with NSX. Previous studies showed the potential performance gains for network-intensive applications. The ability to use NSX in a VMware cloud environment provides opportunities for performance optimization. A future blog will go into additional detail on this topic.

Conclusion

There is no right or wrong model; the choice will primarily depend on the workload and your enterprise’s requirements. Our goal is to be informative, develop guidance, and provide VMware’s collective expertise and experience in the enterprise space. In subsequent blogs we will cover multi-cloud strategies, scaling workloads, multi-cloud management, workload optimization, relative performance, and selected networking topics.