Machine Learning (ML) development processes have several similarities to software development, but they also differ in subtle ways. This article describes how software development disciplines such as version control, checking work into a repository, sharing artifacts between practitioners, and dataset and model governance apply to machine learning, where versions and models can proliferate and become difficult to track and, even more importantly, to reproduce. It will be of particular interest to organizations that write code as part of their ML work and want more control over the models and other artifacts they produce.

Dotscience is a software company that tackles the problems that arise in rapidly changing ML development, solving them on on-premises or cloud infrastructure, such as that supplied by the VMware vSphere platform. Dotscience engineers worked with VMware to test their data science applications on vSphere with Kubernetes. The Dotscience solution uses Kubernetes clusters on vSphere as the deployment target for the trained model; in ML terms, this is where the model is used for “inference”, that is, in production.

Problems with the Machine Learning Development Process


In machine learning today, a great deal of attention is paid to applying different statistical techniques, such as logistic regression, and different types of neural networks, such as CNNs, to training an ML model. Model choice and hyper-parameter tuning make up a very interesting field, but getting the best results from them requires careful artifact management and run tracking. Common issues we have heard about from customers include:

- how are the model type and the hyper-parameters applied to it tracked for each experiment? Today, some organizations do this in a spreadsheet, if at all.

- how do I ensure that the right combination of deliverables (the model, its parameters, and its code) makes it safely through to production deployment in its intended form?

- how do I iterate on the development/training of any single model with many different data sets?

- how do data scientists in the ML world review each other’s work and make contributions to someone else’s model training? How do they share models?

- how can I be sure that I can reproduce a given model and its behavior with known data?

- how do I know all the versions of the datasets that the model was trained on, when it was trained on each, and with what results?

- how do I track the accuracy achieved in each experiment that I run?

In general, these issues amount to bringing more order to the new field of developing and deploying ML models, with governance over their input datasets. Answering them is a field we call ML Operations, or MLOps, which is emerging rapidly within machine learning, just as DevOps did in software development and system administration.

The Dotscience Architecture

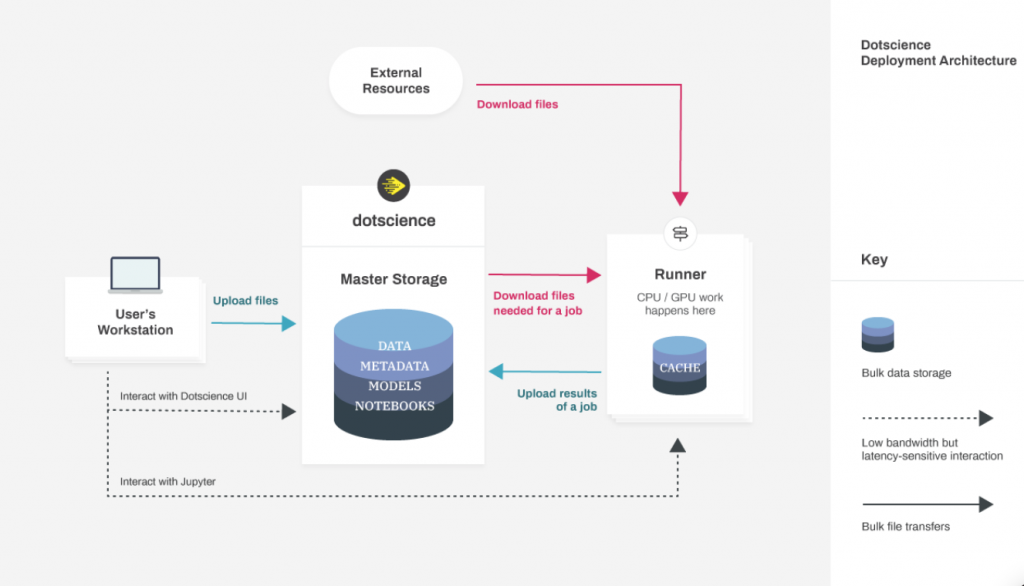

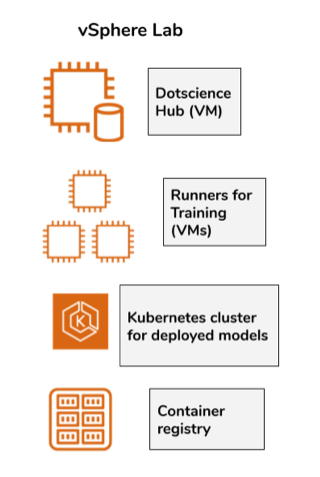

Dotscience provides a set of components that address the above challenges. Each ML experiment is tracked as a “model run”, and each dataset is tracked through its processing steps as a “data run”. Runs can encompass arbitrary code execution. Together, these answer the questions of data and model provenance and control. The outline architecture of the Dotscience solution, with virtual machines and containers in the infrastructure, is shown in the diagrams above.
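To make the idea of linked data runs and model runs concrete, here is a minimal Python sketch of provenance tracking. It assumes that every artifact is identified by a hash of its contents and that each run records the hashes it read and wrote. The `Run` class and the `content_hash` and `lineage` functions are hypothetical illustrations, not the Dotscience API.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field


def content_hash(data: bytes) -> str:
    """Short SHA-256 digest used as an immutable version ID for an artifact."""
    return hashlib.sha256(data).hexdigest()[:12]


@dataclass
class Run:
    """Hypothetical run record: kind is "data" or "model"."""
    kind: str
    inputs: list[str]   # hashes of artifacts the run read
    outputs: list[str]  # hashes of artifacts the run wrote
    params: dict = field(default_factory=dict)


def lineage(artifact: str, runs: list[Run]) -> set[str]:
    """Return every upstream artifact hash that `artifact` was derived from."""
    producer = {out: run for run in runs for out in run.outputs}
    upstream: set[str] = set()
    stack = [artifact]
    while stack:
        run = producer.get(stack.pop())
        if run is None:
            continue  # raw input: no recorded run produced it
        for parent in run.inputs:
            if parent not in upstream:
                upstream.add(parent)
                stack.append(parent)
    return upstream


# Example: raw data -> data run -> cleaned data -> model run -> model.
raw = content_hash(b"id,label\n1,cat\n2,dog\n")
clean = content_hash(b"id,label\n1,cat\n")
model = content_hash(b"serialized model weights")
runs = [
    Run("data", inputs=[raw], outputs=[clean], params={"filter": "label == 'cat'"}),
    Run("model", inputs=[clean], outputs=[model], params={"C": 1.0}),
]
print(lineage(model, runs) == {raw, clean})  # True
```

Because artifact IDs are derived from content, rerunning a step on identical data yields identical hashes, which is what makes a recorded lineage checkable later.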

In the diagram above, we see that in Dotscience on vSphere, the Dotscience “Runners” used for development are provided by virtual machines. Data scientists who work with models at the code level often use Jupyter Notebooks, for example. Here, the data scientist is free to change the code for their model as they see fit and to test it with different datasets and parameters in a free-flowing way. By adding simple instrumentation to key sections of their code, they can have the model's progress through its experimental runs tracked closely and stored for future reference.
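The code instrumentation mentioned above could look something like the following minimal sketch. It assumes a hypothetical `tracked_run` context manager that records parameters and metrics from a notebook cell and writes them to a JSON file when the cell finishes. This is not the Dotscience client library, whose interface this article does not describe.

```python
import json
import tempfile
import time
import uuid
from contextlib import contextmanager
from pathlib import Path


class RunRecorder:
    """Hypothetical recorder for one experimental run."""

    def __init__(self, description: str):
        self.record = {
            "id": uuid.uuid4().hex,
            "description": description,
            "parameters": {},
            "metrics": {},
            "started": time.time(),
        }

    def parameter(self, name: str, value):
        """Record a hyper-parameter and return it so it can be used inline."""
        self.record["parameters"][name] = value
        return value

    def metric(self, name: str, value: float):
        """Record a result, such as accuracy, for this run."""
        self.record["metrics"][name] = value
        return value


@contextmanager
def tracked_run(description: str, log_dir: str = "runs"):
    """Yield a recorder, then save its record as JSON whether the run succeeds or fails."""
    recorder = RunRecorder(description)
    try:
        yield recorder
        recorder.record["status"] = "succeeded"
    except BaseException:
        recorder.record["status"] = "failed"
        raise
    finally:
        recorder.record["finished"] = time.time()
        out_dir = Path(log_dir)
        out_dir.mkdir(parents=True, exist_ok=True)
        out_file = out_dir / f"{recorder.record['id']}.json"
        out_file.write_text(json.dumps(recorder.record, indent=2))


# Example: instrument a (placeholder) training cell.
log_dir = tempfile.mkdtemp()
with tracked_run("logistic regression baseline", log_dir=log_dir) as run:
    C = run.parameter("C", 0.5)
    accuracy = 0.91  # placeholder standing in for a real evaluation result
    run.metric("accuracy", accuracy)

saved = json.loads(next(Path(log_dir).glob("*.json")).read_text())
print(saved["parameters"], saved["metrics"], saved["status"])
```

Recording failed runs as well as successful ones keeps the experiment history complete, which matters when comparing accuracy across many runs.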

Code artifacts, such as Python or Scala source files, are tracked in the VM or set of VMs that make up the Dotscience Hub, where the data scientist logs in. The Hub keeps a version history of these artifacts for future reference. Once the data scientist has matured their model to an acceptable level, its stored version in Dotscience can be used, or it can be checked into an external repository such as GitHub.

Next, the ML model and its supporting code are tested more rigorously, to production level. The result can then be stored in the Dotscience Container Registry or another container registry for reuse by the testing or deployment team.
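One way to keep an image in the registry traceable back to the experiment that produced it is to encode the run and code versions in the image tag. The sketch below assumes a hypothetical `run-<run id>-git-<commit>` naming convention; the registry host, model name, and IDs in the example are placeholders, and `image_reference` is an illustrative helper, not part of any Dotscience tooling.

```python
import re


def image_reference(registry: str, model_name: str, run_id: str, code_commit: str) -> str:
    """Build an image reference whose tag pins the model run and code commit."""
    # Repository path component: lowercase alphanumerics joined by dashes.
    repo = re.sub(r"[^a-z0-9]+", "-", model_name.lower()).strip("-")
    if not repo:
        raise ValueError(f"model name has no usable characters: {model_name!r}")
    # Hypothetical convention: run-<12 chars of run id>-git-<7 chars of commit>.
    tag = f"run-{run_id[:12]}-git-{code_commit[:7]}"
    # Docker tags: up to 128 chars of [A-Za-z0-9_.-], not starting with '.' or '-'.
    if not re.fullmatch(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}", tag):
        raise ValueError(f"invalid image tag: {tag!r}")
    return f"{registry}/{repo}:{tag}"


ref = image_reference("registry.example.com/ml", "Churn Model v2",
                      run_id="3f9a1c7e2b4d5a6f", code_commit="a1b2c3d4e5f6")
print(ref)  # registry.example.com/ml/churn-model-v2:run-3f9a1c7e2b4d-git-a1b2c3d
```

Because the tag is derived from immutable IDs rather than a moving label such as `latest`, the testing and deployment teams can tell exactly which model run an image contains.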

Once the model and its surrounding code have been sufficiently tested using that container image, the set is promoted into a Kubernetes environment for production deployment. Dotscience streamlines the entire process, from experimentation through to production deployment, so the end user does not have to deal with the details of Kubernetes.
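Although Dotscience hides the details of Kubernetes from the end user, it can help to see roughly what a promotion step has to produce. The sketch below builds a minimal Kubernetes `Deployment` manifest for a model-serving image as a Python dictionary. The deployment name, image, replica count, and port are assumptions, and this is not the manifest Dotscience actually generates.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 2, port: int = 8080) -> dict:
    """Return a minimal apps/v1 Deployment that runs `replicas` copies of `image`."""
    labels = {"app": name}  # the selector must match the Pod template's labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


manifest = deployment_manifest(
    "churn-model",
    "registry.example.com/ml/churn-model-v2:run-3f9a1c7e2b4d-git-a1b2c3d",  # placeholder
)
print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and applied with `kubectl apply -f`, which accepts JSON as well as YAML manifests.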

Dotscience for Production Monitoring

Production deployment also brings new challenges. How do I know from one day to the next whether my deployed model is still accurate and performing well? As data patterns change in production, will my model continue to offer valuable insights when the data it now sees no longer looks like the data it was trained on? Dotscience applies a statistics-based approach to this ML-in-production issue. Monitoring models is harder than monitoring container-based microservices, because the model inputs can drift away from previously seen patterns, and the model outputs can drift with them, producing inaccurate predictions or characterizations. The solution is to apply statistical distribution methods to the classifications the model produces, detect the drift, and then take action to retrain the model.
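The article does not specify which statistics Dotscience uses, so the following is only an illustration of the general idea. It swaps in the population stability index (PSI), one common drift measure, to compare the distribution of predicted classes in production against a baseline captured at training time. The 0.2 threshold is a widely used rule of thumb, assumed here rather than taken from Dotscience.

```python
import math
from collections import Counter


def class_proportions(labels, classes, eps=1e-6):
    """Fraction of predictions in each class, floored at eps to avoid log(0)."""
    if not labels:
        raise ValueError("need at least one prediction")
    counts = Counter(labels)
    return {c: max(counts[c] / len(labels), eps) for c in classes}


def population_stability_index(baseline, current):
    """PSI = sum over classes of (q - p) * ln(q / p); 0 means identical distributions."""
    classes = sorted(set(baseline) | set(current))
    p = class_proportions(baseline, classes)
    q = class_proportions(current, classes)
    return sum((q[c] - p[c]) * math.log(q[c] / p[c]) for c in classes)


def needs_retraining(baseline, current, threshold=0.2):
    """Flag drift when PSI exceeds the (assumed) rule-of-thumb threshold."""
    return population_stability_index(baseline, current) > threshold


# Example: predicted labels at training time vs. two production windows.
baseline = ["cat"] * 50 + ["dog"] * 50
stable = ["cat"] * 48 + ["dog"] * 52
drifted = ["cat"] * 85 + ["dog"] * 15
print(round(population_stability_index(baseline, stable), 4))   # 0.0016
print(round(population_stability_index(baseline, drifted), 4))  # 0.6071
print(needs_retraining(baseline, stable), needs_retraining(baseline, drifted))  # False True
```

In practice, a check like this would run over a sliding window of recent predictions, and a positive result would trigger the retraining step described above.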

The vSphere Solutions Lab for Testing Dotscience

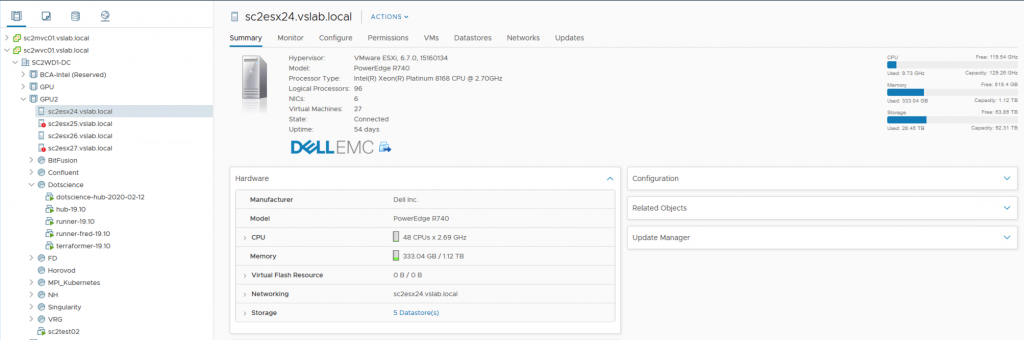

In the VMware Solutions Lab, the Dotscience staff implemented the various parts of their solution on a combination of classic VMware virtual machines and on Kubernetes-managed containers.

The vSphere 7 infrastructure excels at hosting both of these deployment mechanisms in a consistent fashion: VMs, Pods, and Namespaces are all visible in the new vSphere Client management interface. In this lab work, a TKG (Tanzu Kubernetes Grid) cluster was also created on vSphere 6.7 to host the components shown above, and the Dotscience tooling proved to work extremely well in the virtualized and containerized environment.

Conclusion

Machine Learning development brings new challenges in the areas of reproducibility, dataset and model management, and monitoring the effectiveness of trained models in production. This article explores the deployment of a structured solution to these problems from a VMware partner, Dotscience, using both virtual machines and containers managed by Kubernetes to make up the solution.

For further information on the Dotscience products and solutions, go to the Dotscience site

For more information on VMware and Machine Learning, check out the articles here

For more on VMware vSphere 7 with Kubernetes, take a look here