Introducing the new lean, mean fighting machine: Oracle RAC on VMware Cloud on AWS SDDC 1.8v2, all muscle, no fat!

Starting with SDDC version 1.8v2, there is no longer any need to reserve all the storage up front to install and run Oracle RAC on VMware Cloud on AWS!

To be precise: Eager Zero Thick disks are no longer needed for Oracle RAC shared vmdk’s on VMware Cloud on AWS starting with SDDC version 1.8v2. Read on.

Oracle RAC on VMware vSAN

By default, the multi-writer “protection” is enabled for all .vmdk files in a VM, i.e. each VM has exclusive access to its own .vmdk files. For multiple VM’s to access a shared vmdk simultaneously, the multi-writer protection needs to be disabled. The use case here is Oracle RAC.

KB 2121181 provides more details on how to set the multi-writer option to allow VM’s to share vmdk’s on VMware vSAN.

The requirement for shared disks with the multi-writer flag in a RAC environment is that the shared disk has to be Eager Zero Thick provisioned.

In vSAN, the Eager Zero Thick attribute is controlled by a rule in the VM Storage Policy called ‘Object Space Reservation’ (OSR), which needs to be set to 100% so that all of the object’s components are pre-allocated on disk.

More details can be found in KB 2121181.

Oracle RAC on VMware Cloud on AWS

VMware Cloud on AWS is an on-demand service that enables customers to run applications across vSphere-based cloud environments with access to a broad range of AWS services. Powered by VMware Cloud Foundation, this service integrates vSphere, vSAN and NSX along with VMware vCenter management, and is optimized to run on dedicated, elastic, bare-metal AWS infrastructure. ESXi hosts in VMware Cloud on AWS reside in an AWS Availability Zone (AZ) and are protected by vSphere HA.

The guide ‘Oracle Workloads and VMware Cloud on AWS: Deployment, Migration, and Configuration’ can be found here.

What’s New Sept 24th, 2019 (SDDC Version 1.8v2)

As per the updated release notes, starting with VMware Cloud on AWS SDDC version 1.8v2, vSAN datastores in VMware Cloud on AWS support multi-writer mode on Thin-Provisioned VMDKs.

Prior to SDDC version 1.8v2, vSAN required VMDKs to be Eager Zero Thick provisioned for multi-writer mode to be enabled. This change enables deployment of workloads such as Oracle RAC with Thin-Provisioned, multi-writer shared VMDKs.

More details about this change can be found here.

What this means is that customers no longer have to reserve storage up front when provisioning Oracle RAC shared storage on VMware Cloud on AWS SDDC version 1.8v2.

The RAC shared vmdk’s can be thin provisioned to start with and consume space only as and when needed.

Key points to take away from this blog

This blog focuses on validating various test cases, which were run on:

- 6 node Stretched Clusters for VMware Cloud on AWS SDDC Version 1.8v2

- 2 Node 18c Oracle RAC cluster on Oracle Linux Server release 7.6

- Database Shared storage on Oracle ASM using Oracle ASMLIB

Key points to take away

- Prior to SDDC Version 1.8v2, Oracle RAC on VMware Cloud on AWS required the shared VMDKs to be Eager Zero Thick provisioned (OSR=100) for multi-writer mode to be enabled

- Starting with SDDC Version 1.8v2, Oracle RAC on VMware Cloud on AWS does NOT require the shared VMDKs to be Eager Zero Thick provisioned (OSR=100) for multi-writer mode to be enabled

  - Shared VMDKs can be thin provisioned (OSR=0) with multi-writer mode enabled

- For existing Oracle RAC deployments

  - The Storage Policy of a shared vmdk can be changed from OSR=100 to OSR=0 (and vice-versa if needed) ONLINE without any Oracle RAC downtime

Setup – Oracle RAC on VMware Cloud on AWS SDDC Version 1.8v2

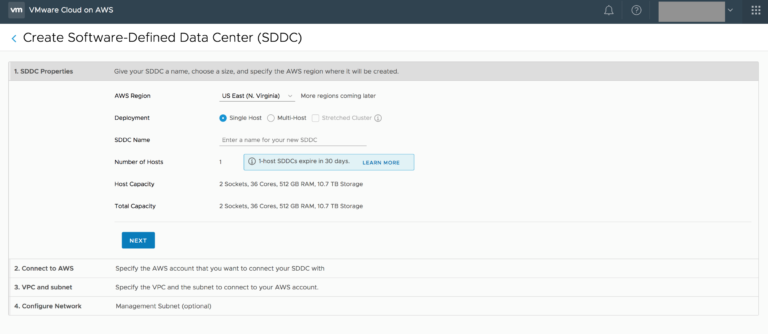

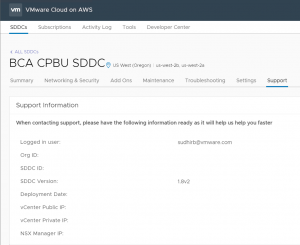

The VMware Cloud on AWS SDDC Version is 1.8v2 as shown below.

The setup is a 6-node i3 VMware Cloud on AWS SDDC cluster stretched across 2 Availability Zones (AZ’s), ‘us-west-2a’ and ‘us-west-2b’.

Each ESXi server in the i3 VMware Cloud on AWS SDDC has 2 sockets, 18 cores per socket with 512GB RAM. Details of the i3 SDDC cluster type can be found here.

An existing 2-node 18c Oracle RAC cluster with RAC VM’s ‘rac18c1’ and ‘rac18c2’, running Oracle Linux Server release 7.6, was chosen for the test cases.

This RAC cluster had shared storage on Oracle ASM using Oracle ASMLIB. The shared disks for the RAC shared vmdk’s were provisioned with OSR=100 as per KB 2121181.

As part of creating any Oracle RAC cluster on vSAN, the first step is to create a VM Storage Policy that will be applied to the virtual disks which will be used as the cluster’s shared storage.

In this example we had 2 VM Storage Policies

- ‘Oracle RAC VMC AWS Policy – OSR 100 RAID 1’

- ‘Oracle RAC VMC AWS Policy – OSR 0 RAID 1’

The VM storage policy ‘Oracle RAC VMC AWS Policy – OSR 100 RAID 1’ is shown as below. The rules include

- Failures to tolerate (FTT) set to 1 failure – RAID-1 (Mirroring)

- Number of disk stripes per object set to 1

- Object Space Reservation (OSR) set to Thick provisioning

The VM storage policy ‘Oracle RAC VMC AWS Policy – OSR 0 RAID 1’ is shown as below. The rules include

- Failures to tolerate (FTT) set to 1 failure – RAID-1 (Mirroring)

- Number of disk stripes per object set to 1

- Object Space Reservation (OSR) set to Thin provisioning

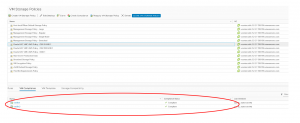

Currently both RAC VM’s have shared vmdk’s with VM storage policy ‘Oracle RAC VMC AWS Policy – OSR 100 RAID 1’ and are compliant with the storage policy.

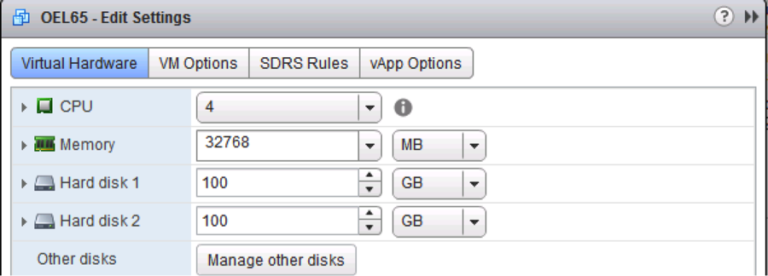

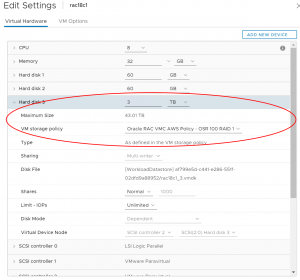

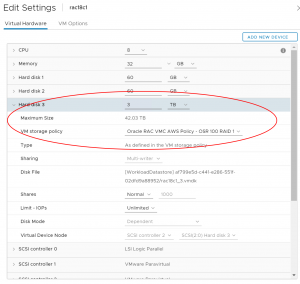

Details of RAC VM ‘rac18c1’ are shown below.

RAC VM ‘rac18c1’ has 8 vCPU’s and 32GB RAM, with 3 vmdk’s.

- Non-shared vmdk’s

  - SCSI 0:0 rac18c1.vmdk of size 60G [ Operating System ]

  - SCSI 0:0 rac18c1_1.vmdk of size 60G [ Oracle Grid and RDBMS Binaries ]

- Shared vmdk

  - SCSI 2:0 rac18c1_3.vmdk of size 3TB [ Database ]

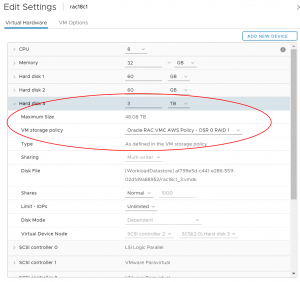

Details of the 3TB shared vmdk can be seen below. Notice that the shared vmdk is provisioned with VM storage policy ‘Oracle RAC VMC AWS Policy – OSR 100 RAID 1’.

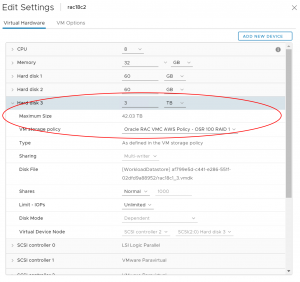

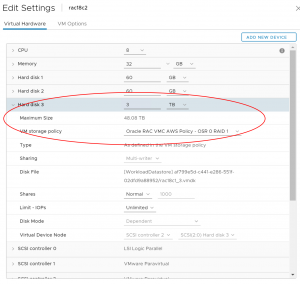

Details of RAC VM ‘rac18c2’ are shown below. Both VM’s are identical from a CPU and memory perspective.

RAC VM ‘rac18c2’ has 8 vCPU’s and 32GB RAM, with 3 vmdk’s.

- Non-shared vmdk’s

  - SCSI 0:0 rac18c2.vmdk of size 60G [ Operating System ]

  - SCSI 0:0 rac18c2_1.vmdk of size 60G [ Oracle Grid and RDBMS Binaries ]

- Shared vmdk

  - SCSI 2:0 rac18c1_3.vmdk of size 3TB [ Database ]

Details of the 3TB shared vmdk can be seen below. Notice that RAC VM ‘rac18c2’ points to RAC VM ‘rac18c1’ shared vmdk which has been provisioned with VM storage policy ‘Oracle RAC VMC AWS Policy – OSR 100 RAID 1’.

The operating system ‘fdisk’ command shows that the OS sees a 3TB disk on both VM’s.

    [root@rac18c1 ~]# fdisk -lu /dev/sdd
    Disk /dev/sdd: 3298.5 GB, 3298534883328 bytes, 6442450944 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk label type: dos
    Disk identifier: 0x4f8c7d76

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdd1            2048  4294967294  2147482623+  83  Linux
    [root@rac18c1 ~]#

    [root@rac18c2 ~]# fdisk -lu /dev/sdd
    Disk /dev/sdd: 3298.5 GB, 3298534883328 bytes, 6442450944 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk label type: dos
    Disk identifier: 0x4f8c7d76

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdd1            2048  4294967294  2147482623+  83  Linux
    [root@rac18c2 ~]#
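
As a quick sanity check on the fdisk output, the sector counts can be converted back to bytes. The snippet below is a minimal sketch in Python; the helper name `sectors_to_bytes` is our own, and all numbers are copied from the output above.

```python
SECTOR_BYTES = 512  # logical sector size reported by fdisk


def sectors_to_bytes(sectors, sector_bytes=SECTOR_BYTES):
    """Convert a sector count to bytes."""
    return sectors * sector_bytes


# Whole disk: 6442450944 sectors
disk_bytes = sectors_to_bytes(6442450944)
print(disk_bytes)                     # 3298534883328
print(disk_bytes / 2**40)             # 3.0 (TiB)
print(round(disk_bytes / 10**9, 1))   # 3298.5 (GB, as printed by fdisk)

# Partition /dev/sdd1: sectors 2048 through 4294967294 inclusive
part_sectors = 4294967294 - 2048 + 1
blocks_1k, leftover_sectors = divmod(part_sectors, 2)
print(blocks_1k, leftover_sectors)    # 2147482623 1
```

The byte count matches what fdisk prints, and the odd leftover sector is consistent with the trailing "+" in the Blocks column.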

Looking at the actual space consumed for RAC VM ‘rac18c1’, the shared vmdk ‘rac18c1_3.vmdk’ shows up as 6TB. The reason is that the VM storage policy had

- OSR=100, so the vmdk is Eager Zero Thick provisioned

- Failures to tolerate (FTT) set to ‘1 failure – RAID-1 (Mirroring)’, so 2 copies are kept
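
The 6TB figure follows from simple arithmetic: 3TB provisioned, fully reserved, times 2 mirror copies. The Python sketch below works through it under a deliberately simplified model that we are assuming for illustration: each RAID-1 copy holds the larger of the reserved space and the data actually written, and witness components and metadata overhead are ignored. The function name and the 1TB "written" figure are hypothetical.

```python
def vsan_raw_footprint_tb(provisioned_tb, written_tb, osr_percent, ftt=1):
    """Estimate the raw vSAN capacity (TB) held by a RAID-1 mirrored object.

    Simplified model (assumption): each of the ftt + 1 copies holds the
    larger of the reserved space and the data actually written.
    Witness components and metadata overhead are ignored.
    """
    if not 0 <= osr_percent <= 100:
        raise ValueError("osr_percent must be between 0 and 100")
    if written_tb > provisioned_tb:
        raise ValueError("written_tb cannot exceed provisioned_tb")
    replicas = ftt + 1
    reserved_tb = provisioned_tb * osr_percent / 100
    return float(replicas * max(reserved_tb, written_tb))


# 3TB shared vmdk, OSR=100, FTT=1 (the policy used in this setup)
print(vsan_raw_footprint_tb(3, 1, osr_percent=100))  # 6.0

# Same vmdk with OSR=0; the 1TB written is an illustrative figure
print(vsan_raw_footprint_tb(3, 1, osr_percent=0))    # 2.0
```

Under this model, a thin-provisioned shared vmdk only grows toward the 6TB ceiling as the database actually writes data.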

The actual space consumed for RAC VM ‘rac18c2’ is shown as below.

All cluster services and resources are up.

    [root@rac18c1 ~]# /u01/app/18.0.0/grid/bin/crsctl stat res -t
    --------------------------------------------------------------------------------
    Name           Target  State        Server                   State details
    --------------------------------------------------------------------------------
    Local Resources
    --------------------------------------------------------------------------------
    ora.ASMNET1LSNR_ASM.lsnr
                   ONLINE  ONLINE       rac18c1                  STABLE
                   ONLINE  ONLINE       rac18c2                  STABLE
    ora.DATA_DG.dg
                   ONLINE  ONLINE       rac18c1                  STABLE
                   ONLINE  ONLINE       rac18c2                  STABLE
    ora.LISTENER.lsnr
                   ONLINE  ONLINE       rac18c1                  STABLE
                   ONLINE  ONLINE       rac18c2                  STABLE
    ora.chad
                   ONLINE  ONLINE       rac18c1                  STABLE
                   ONLINE  ONLINE       rac18c2                  STABLE
    ora.net1.network
                   ONLINE  ONLINE       rac18c1                  STABLE
                   ONLINE  ONLINE       rac18c2                  STABLE
    ora.ons
                   ONLINE  ONLINE       rac18c1                  STABLE
                   ONLINE  ONLINE       rac18c2                  STABLE
    --------------------------------------------------------------------------------
    Cluster Resources
    --------------------------------------------------------------------------------
    ora.LISTENER_SCAN1.lsnr
          1        ONLINE  ONLINE       rac18c2                  STABLE
    ora.LISTENER_SCAN2.lsnr
          1        ONLINE  ONLINE       rac18c1                  STABLE
    ora.LISTENER_SCAN3.lsnr
          1        ONLINE  ONLINE       rac18c1                  STABLE
    ora.MGMTLSNR
          1        ONLINE  ONLINE       rac18c1                  169.254.10.195 192.1
                                                                 68.140.207,STABLE
    ora.asm
          1        ONLINE  ONLINE       rac18c1                  Started,STABLE
          2        ONLINE  ONLINE       rac18c2                  Started,STABLE
          3        OFFLINE OFFLINE                               STABLE
    ora.cvu
          1        ONLINE  ONLINE       rac18c1                  STABLE
    ora.mgmtdb
          1        ONLINE  ONLINE       rac18c1                  Open,STABLE
    ora.qosmserver
          1        ONLINE  ONLINE       rac18c1                  STABLE
    ora.rac18c.db
          1        ONLINE  ONLINE       rac18c1                  Open,HOME=/u01/app/o
                                                                 racle/product/18.0.0
                                                                 /dbhome_1,STABLE
          2        ONLINE  ONLINE       rac18c2                  Open,HOME=/u01/app/o
                                                                 racle/product/18.0.0
                                                                 /dbhome_1,STABLE
    ora.rac18c1.vip
          1        ONLINE  ONLINE       rac18c1                  STABLE
    ora.rac18c2.vip
          1        ONLINE  ONLINE       rac18c2                  STABLE
    ora.scan1.vip
          1        ONLINE  ONLINE       rac18c2                  STABLE
    ora.scan2.vip
          1        ONLINE  ONLINE       rac18c1                  STABLE
    ora.scan3.vip
          1        ONLINE  ONLINE       rac18c1                  STABLE
    --------------------------------------------------------------------------------
    [root@rac18c1 ~]#
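
Reading this listing by eye before and after every policy change gets tedious. The following is a rough Python sketch, not an Oracle-provided tool, that scans saved `crsctl stat res -t` output and reports any resource whose State differs from its Target. The function name, the regular expression and the set of state keywords are our own assumptions about the tabular layout shown above.

```python
import re

# Matches a status row: optional instance number, then Target and State.
STATUS_ROW = re.compile(
    r"^\s*(?:\d+\s+)?(ONLINE|OFFLINE)\s+(ONLINE|OFFLINE|INTERMEDIATE|UNKNOWN)\b"
)


def mismatched_resources(crsctl_output):
    """Return (resource, target, state) tuples where state != target."""
    problems = []
    current = None
    for line in crsctl_output.splitlines():
        stripped = line.strip()
        if stripped.startswith("ora."):
            current = stripped  # resource name line
            continue
        match = STATUS_ROW.match(line)
        if match and current is not None:
            target, state = match.groups()
            if target != state:
                problems.append((current, target, state))
    return problems


# A few rows copied from the output above, including a wrapped detail line
SAMPLE = """\
ora.MGMTLSNR
      1        ONLINE  ONLINE       rac18c1                  169.254.10.195 192.1
                                                             68.140.207,STABLE
ora.asm
      1        ONLINE  ONLINE       rac18c1                  Started,STABLE
      2        ONLINE  ONLINE       rac18c2                  Started,STABLE
      3        OFFLINE OFFLINE                               STABLE
"""

print(mismatched_resources(SAMPLE))  # []
```

Comparing State against Target, rather than insisting on ONLINE, means the third `ora.asm` instance (Target OFFLINE, State OFFLINE) is not flagged.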

Database Details

- The recommendation is to have multiple ASM disk groups on multiple PVSCSI controllers as per the Oracle Best Practices Guide

- The RAC database has 1 ASM disk group ‘DATA_DG’ with a single 3TB ASM disk, created for the sake of simplicity to show all the test cases

  - Actual ASM disk provisioned size = 3TB

  - Database space used < 3TB

- Tablespace ‘SLOB’ of size 1TB was created on the ASM disk group ‘DATA_DG’ and loaded with SLOB data

- ASM disk group ‘DATA_DG’ also houses all the other components of the database and the RAC cluster, including OCR, VOTE, redo log files, data files etc.

Test Suite Setup

Key points to be kept in mind as part of the test cases

- Prior to SDDC Version 1.8v2, Oracle RAC on VMware Cloud on AWS required the shared VMDKs to be Eager Zero Thick provisioned (OSR=100) for multi-writer mode to be enabled

- Starting with SDDC Version 1.8v2, Oracle RAC on VMware Cloud on AWS does NOT require the shared VMDKs to be Eager Zero Thick provisioned (OSR=100) for multi-writer mode to be enabled

  - Shared VMDKs can be thin provisioned (OSR=0) with multi-writer mode enabled

- The existing RAC vmdk’s were provisioned as EZT using OSR=100

- Online change of Storage Policy of a shared vmdk from OSR=100 to OSR=0 and vice-versa can be performed without any Oracle RAC downtime

As part of the test suite, 5 test cases were performed against the RAC cluster

- Current shared vmdk Storage Policy

  - ‘Oracle RAC VMC AWS Policy – OSR 100 RAID 1’

- Create a new VM Storage Policy

  - ‘Oracle RAC VMC AWS Policy – OSR 0 RAID 1’

- Test Cases 1-4

  - Using existing ASM Disk Group (DATA_DG)

    - Change Storage Policy of the shared vmdk’s from OSR=100 to OSR=0 online without any RAC downtime

    - Change Storage Policy of the shared vmdk’s from OSR=0 to OSR=100 online without any RAC downtime

    - Repeat the above 2 tests for consistency’s sake and check DB and OS error logs

- Test Case 5

  - Using existing ASM Disk Group (DATA_DG)

    - ASM Disk Group (DATA_DG) has 1 ASM disk with VM Storage Policy OSR=100

    - Add a new vmdk with VM Storage Policy OSR=0

      - The guest operating system only sees the vmdk provisioned size

      - It has no knowledge of the actual allocated vmdk size on the underlying storage

    - Drop the old ASM disk with VM Storage Policy OSR=100, with an ASM rebalance operation

    - Check DB and OS error logs

SLOB Test Suite 2.4.2 was used with a run time of 15 minutes and 128 threads, with the following settings:

- UPDATE_PCT=30

- SCALE=10G

- THINK_TM_FREQUENCY=0

- RUN_TIME=900

All test cases were performed and the results were captured. Only Test Case 1 is published as part of this blog. The other test cases had exactly the same outcome and hence are not included in this blog.

Test Case 1: Online change of Storage Policy for shared vmdk with no RAC downtime

As part of this test case, while Oracle RAC was running, the Storage Policy of the shared vmdk was changed from ‘Oracle RAC VMC AWS Policy – OSR 100 RAID 1’ to ‘Oracle RAC VMC AWS Policy – OSR 0 RAID 1’.

1) Start SLOB load generator for 15 minutes against the RAC database with 128 threads against the SCAN to load balance between the 2 RAC instances

2) Edit settings for RAC VM ‘rac18c1’.

3) Change the shared vmdk Storage Policy from OSR=100 to OSR=0

4) Edit settings for RAC VM ‘rac18c2’ and change shared vmdk Storage Policy from OSR=100 to OSR=0

5) Check Storage Policy compliance for both RAC VM’s ‘rac18c1’ and ‘rac18c2’

6) In the Datastore view, check the size of RAC VM ‘rac18c1’ shared vmdk ‘rac18c1_3.vmdk’.

- As per the VM settings, the Allocated Size is 3 TB, but

- the actual size on the vSAN datastore is < 3TB

This indicates that the shared vmdk is indeed thin provisioned, not thick provisioned.

7) The O/S still reports the ASM disk with its original size, i.e. 3 TB

    [root@rac18c1 ~]# fdisk -lu /dev/sdd
    Disk /dev/sdd: 3298.5 GB, 3298534883328 bytes, 6442450944 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk label type: dos
    Disk identifier: 0x4f8c7d76

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdd1            2048  4294967294  2147482623+  83  Linux
    [root@rac18c1 ~]#

    [root@rac18c2 ~]# fdisk -lu /dev/sdd
    Disk /dev/sdd: 3298.5 GB, 3298534883328 bytes, 6442450944 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk label type: dos
    Disk identifier: 0x4f8c7d76

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdd1            2048  4294967294  2147482623+  83  Linux
    [root@rac18c2 ~]#

8) No errors were observed in the database Alert log files and OS /var/log/messages file for both RAC instances
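
The log check in step 8 can be scripted in many ways; the blog does not say how it was done. Below is one possible Python sketch. The two patterns (Oracle `ORA-` error codes and the phrase "I/O error") are assumptions chosen for illustration and should be tuned for your environment, and both function names are hypothetical.

```python
import re

# Illustrative patterns only (assumptions); adjust for your own logs.
ERROR_PATTERNS = [
    re.compile(r"\bORA-\d{5}\b"),              # Oracle error codes
    re.compile(r"I/O error", re.IGNORECASE),   # block-layer I/O errors
]


def find_error_lines(lines, patterns=ERROR_PATTERNS):
    """Return the lines that match any of the given patterns."""
    return [
        line.rstrip("\n")
        for line in lines
        if any(pattern.search(line) for pattern in patterns)
    ]


def scan_file(path, patterns=ERROR_PATTERNS):
    """Scan one log file and return its suspicious lines."""
    with open(path, errors="replace") as handle:
        return find_error_lines(handle, patterns)
```

For example, `scan_file("/var/log/messages")` on each RAC node, plus the same call against each instance's alert log, should return an empty list if the policy change was as uneventful as observed here.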

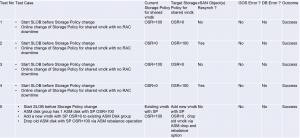

A summary of the test matrix, along with the outcome of the various test cases, is shown below.

Summary

- Prior to SDDC Version 1.8v2, Oracle RAC on VMware Cloud on AWS required the shared VMDKs to be Eager Zero Thick provisioned (OSR=100) for multi-writer mode to be enabled

- Starting with SDDC Version 1.8v2, Oracle RAC on VMware Cloud on AWS does NOT require the shared VMDKs to be Eager Zero Thick provisioned (OSR=100) for multi-writer mode to be enabled

  - Shared VMDKs can be thin provisioned (OSR=0) with multi-writer mode enabled

- Online change of Storage Policy of a shared vmdk from OSR=100 to OSR=0 and vice-versa can be performed without any Oracle RAC downtime

- All of the above test cases were performed and the results were captured. Only Test Case 1 is published as part of this blog. The other test cases had exactly the same outcome and hence are not included in this blog.

All Oracle on vSphere white papers, including Oracle on VMware vSphere / VMware vSAN / VMware Cloud on AWS best practices, deployment guides and the workload characterization guide, can be found at the URL below.

Oracle on VMware Collateral – One Stop Shop

http://www.vmw.re/oracle-one-stop-shop