Introduction to Linux Huge Pages

Contents

- 1 Introduction to Linux Huge Pages

- 2 Advantages of HugePages for Oracle workloads

- 3 Restrictions with HugePages Configurations for Oracle workloads

- 4 Disabling Transparent HugePages

- 5 Oracle VM and SLOB Workload Setup

- 6 Configure 2M Huge Pages for Oracle Workloads

- 7 Test Cases

- 8 Oracle without 2M Huge Pages support

- 9 Oracle with 2M Huge Pages support

- 10 Analysis of the tests

- 11 VMware vSphere 6.7 – Backing Guest vRAM with 1GB Pages

- 12 Considerations using VMware vSphere 6.7 1GB Huge Page for Guests

- 13 Configure 1GB OS Huge Page for Oracle Workloads

- 14 Taking advantage of the 1GB OS Huge Page

- 15 Conclusion

Much has been written and said about the Linux Huge Pages feature.

The Red Hat documentation explains the performance benefits of using Huge Pages.

Essentially, memory is managed in blocks known as pages. CPUs have a built-in memory management unit (MMU) that contains a list of these pages, with each page referenced through a page table entry.

To manage large amounts of memory, we need to either:

- increase the number of page table entries in the MMU, or

- increase the page size.

The first option is expensive. The hardware MMU supports only a limited number of page table entries. When an application needs more pages than the MMU supports, the system falls back to slower, software-based memory management, which causes the entire system to run more slowly.

Also, reading address mappings from the page table is time-consuming and resource-expensive, so CPUs are built with a cache for recently used addresses: the Translation Lookaside Buffer (TLB). However, the default TLB can only cache a certain number of address mappings. If a requested address mapping is not in the TLB (a TLB miss), the system still needs to read the page table to determine the virtual-to-physical address mapping.

Because of the relationship between application memory requirements and the size of pages used to cache address mappings, applications with large memory requirements are more likely to suffer performance degradation from TLB misses than applications with minimal memory requirements. It is therefore important to avoid TLB misses wherever possible.

The second option is implemented in Linux 2.6 and later as Huge Pages. Enabling HugePages makes it possible to use memory pages larger than the default (usually 4 KB). Huge page support is built on top of the multiple page size support provided by most modern architectures. For example, x86 CPUs normally support 4K and 2M page sizes, plus 1G where the architecture supports it. The ia64 architecture supports 4K, 8K, 64K, 256K, 1M, 4M, 16M and 256M pages, and ppc64 supports 4K and 16M.

More information on this can be found at ‘RHEL 7 Memory’, ‘HugeTLBPage’ and ‘Page table’.
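To put numbers on the TLB argument, consider TLB reach: the amount of memory the TLB can map without a miss. It equals the number of TLB entries multiplied by the page size.

The sketch below assumes a hypothetical 64-entry TLB purely for illustration. Real TLB sizes vary by CPU model and often differ per page size.

```python
# TLB reach = number of TLB entries x page size.
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB

HYPOTHETICAL_TLB_ENTRIES = 64  # illustrative only, not a specific CPU


def tlb_reach(entries: int, page_size: int) -> int:
    """Bytes of memory that can be translated without a TLB miss."""
    return entries * page_size


def human(n: float) -> str:
    """Format a byte count with binary units (KiB, MiB, ...)."""
    for unit in ("B", "KiB", "MiB", "GiB", "TiB"):
        if n < 1024:
            return f"{n:g} {unit}"
        n /= 1024
    return f"{n:g} PiB"


for label, size in (("4K", 4 * KIB), ("2M", 2 * MIB), ("1G", GIB)):
    reach = tlb_reach(HYPOTHETICAL_TLB_ENTRIES, size)
    print(f"{label} pages: TLB reach {human(reach)}")
```

With these assumed numbers, the reach grows from 256 KiB with 4K pages to 128 MiB with 2M pages and 64 GiB with 1G pages.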

Advantages of HugePages for Oracle workloads

The advantages of HugePages are aptly described in the Oracle documentation:

- Increased performance through increased TLB hits

- Pages are locked in memory and never swapped out, which provides RAM for shared memory structures such as SGA

- Contiguous pages are preallocated and cannot be used for anything else but for System V shared memory (for example, SGA)

- Less bookkeeping work for the kernel for that part of virtual memory because of larger page sizes

More information on this can be found here.

Restrictions with HugePages Configurations for Oracle workloads

Automatic Memory Management (AMM) and HugePages are not compatible. When you use AMM, the entire SGA memory is allocated by creating files under /dev/shm. When Oracle Database allocates SGA with AMM, HugePages are not reserved. To use HugePages on Oracle Database 12c, you must disable AMM.

More information on this can be found here.
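Before enabling HugePages, it helps to confirm that AMM is really off. The sketch below is a hypothetical pre-check. It reads a text pfile, with lines of the form `<sid>.<parameter>=<value>`, and flags a nonzero memory_target, which is what enables AMM.

It also flags a nonzero memory_max_target. Treating that parameter as a conflict is a conservative choice of this sketch, not something stated in the Oracle text above.

```python
def parse_pfile(text: str) -> dict:
    """Parse '<sid>.<name>=<value>' lines of a text pfile into {name: value}."""
    params = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if "=" not in line:
            continue
        lhs, value = line.split("=", 1)
        name = lhs.split(".", 1)[-1].strip().lower()  # '*.sga_target' -> 'sga_target'
        params[name] = value.strip().strip("'\"")
    return params


def size_is_nonzero(value: str) -> bool:
    """True for sizes such as '24G' or '1536M'; False for '0' or ''."""
    digits = value.rstrip("KkMmGgTt")
    return int(digits or "0") > 0


def amm_configured(pfile_text: str) -> bool:
    """Flag a nonzero memory_target (AMM) or memory_max_target."""
    params = parse_pfile(pfile_text)
    return any(size_is_nonzero(params.get(name, "0"))
               for name in ("memory_target", "memory_max_target"))


pfile = "*.sga_target=24G\n*.sga_max_size=24G\n*.memory_target=0\n"
print(amm_configured(pfile))  # False: AMM is off, so HugePages can be used
```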

Disabling Transparent HugePages

Transparent HugePages memory is enabled by default with Red Hat Enterprise Linux 6, SUSE 11, and Oracle Linux 6 with earlier releases of Oracle Linux Unbreakable Enterprise Kernel 2 (UEK2) kernels. Transparent HugePages memory is disabled by default in later releases of UEK2 kernels.

Transparent HugePages can cause memory allocation delays at runtime. To avoid performance issues, Oracle recommends that you disable Transparent HugePages on all Oracle Database servers. Oracle recommends that you instead use standard HugePages for enhanced performance.

Transparent HugePages memory differs from standard HugePages memory because the kernel khugepaged thread allocates memory dynamically during runtime. Standard HugePages memory is pre-allocated at startup, and does not change during runtime.

Refer to the Oracle Metalink note “Disable Transparent HugePages on SLES11, RHEL6, RHEL7, OL6, OL7, and UEK2 and above (Doc ID 1557478.1)”. Because Transparent HugePages are known to cause unexpected node reboots and performance problems with RAC, Oracle strongly advises disabling them. In addition, Transparent HugePages may cause unexpected performance problems or delays even in a single-instance database environment. As such, Oracle recommends disabling Transparent HugePages on all database servers running Oracle.

More information on this can be found here.
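A quick way to confirm that Transparent HugePages are really disabled is to read the kernel's sysfs setting. The active value is shown in brackets, for example `always madvise [never]`.

The minimal sketch below assumes the upstream path /sys/kernel/mm/transparent_hugepage/enabled. It falls back to the RHEL 6 variant, redhat_transparent_hugepage. The function names are illustrative.

```python
from pathlib import Path
from typing import Optional

# Upstream location first; RHEL 6 kernels use redhat_transparent_hugepage instead.
THP_PATHS = (
    Path("/sys/kernel/mm/transparent_hugepage/enabled"),
    Path("/sys/kernel/mm/redhat_transparent_hugepage/enabled"),
)


def active_mode(setting: str) -> str:
    """Return the bracketed (active) choice, e.g. 'never' for 'always madvise [never]'."""
    for token in setting.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    raise ValueError(f"no active mode in {setting!r}")


def thp_mode() -> Optional[str]:
    """Active THP mode of the running kernel, or None if the sysfs file is absent."""
    for path in THP_PATHS:
        if path.exists():
            return active_mode(path.read_text())
    return None


if __name__ == "__main__":
    print(f"Transparent HugePages: {thp_mode() or 'not available'}")
```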

Oracle VM and SLOB Workload Setup

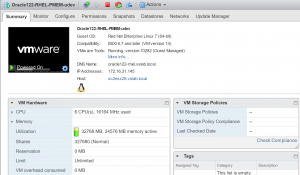

The VM ‘Oracle122-RHEL-PMEM-udev’ is configured with 6 vCPUs and 32 GB RAM, without any VM-level memory reservation.

The OS is Red Hat Enterprise Linux Server release 7.5 (Maipo), running an Oracle 12.2.0.1.0 single-instance database. Oracle ASM is used for the database datafiles.

The Oracle System Global Area (SGA) was set to 24G (sga_target and sga_max_size set to 24G). The PGA limit was set to 10G (pga_aggregate_limit=10G).

Configure 2M Huge Pages for Oracle Workloads

The steps to configure 2M huge pages on Linux for Oracle workloads can be found here. Remember, Automatic Memory Management (AMM) and HugePages are not compatible.

Test Cases

We will run the two tests below and check the value of the ‘PageTables’ entry in /proc/meminfo:

- without Huge Pages

- with Huge Pages

PageTables: Amount of memory dedicated to the lowest level of page tables. This can increase to a high value if a lot of processes are attached to the same shared memory segment.

More information on Page Tables can be found at the RHEL article ‘Interpreting /proc/meminfo and free output for Red Hat Enterprise Linux 5, 6 and 7’.
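The same value can be read programmatically. The minimal Python sketch below (function name hypothetical) parses /proc/meminfo into a dictionary, so that PageTables or any other field can be looked up by name. It assumes the usual `Name: value [kB]` layout of that file.

```python
from pathlib import Path


def parse_meminfo(text: str) -> dict:
    """Map each /proc/meminfo field to its integer value.

    Most fields are in kB; counters such as HugePages_Total have no unit.
    """
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields:
            info[key.strip()] = int(fields[0])
    return info


if __name__ == "__main__" and Path("/proc/meminfo").exists():
    meminfo = parse_meminfo(Path("/proc/meminfo").read_text())
    print(f"PageTables: {meminfo['PageTables']} kB")
```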

SLOB was used as the Oracle I/O workload generation toolkit. It is set up with a single schema, with 100 threads running against the SLOB schema.

The SLOB configuration file is shown below. The workload run time is 5 minutes (300 seconds).

oracle@oracle122-rhel:DBPROD:/u01/software/SLOB/SLOB> cat slob.conf

#### SLOB 2.4.0 slob.conf

UPDATE_PCT=100

SCAN_PCT=0

RUN_TIME=300

WORK_LOOP=0

SCALE=28G

SCAN_TABLE_SZ=1M

WORK_UNIT=64

REDO_STRESS=HEAVY

LOAD_PARALLEL_DEGREE=20

#THREADS_PER_SCHEMA=600

THREADS_PER_SCHEMA=1

DATABASE_STATISTICS_TYPE=awr # Permitted values: [statspack|awr]

#### Settings for SQL*Net connectivity:

#### Uncomment the following if needed:

ADMIN_SQLNET_SERVICE=DBPROD_PMEM_RHEL_PDB1

SQLNET_SERVICE_BASE=DBPROD_PMEM_RHEL_PDB1

#SQLNET_SERVICE_MAX="if needed, replace with a non-zero integer"

#

#### Note: Admin connections to the instance are, by default, made as SYSTEM

# with the default password of "manager". If you wish to use another

# privileged account (as would be the cause with most DBaaS), then

# change DBA_PRIV_USER and SYSDBA_PASSWD accordingly.

#### Uncomment the following if needed:

DBA_PRIV_USER=sys

SYSDBA_PASSWD=vmware123

#### The EXTERNAL_SCRIPT parameter is used by the external script calling feature of runit.sh.

#### Please see SLOB Documentation at https://kevinclosson.net/slob for more information

EXTERNAL_SCRIPT=''

#########################

#### Advanced settings:

#### The following are Hot Spot related parameters.

#### By default Hot Spot functionality is disabled (DO_HOTSPOT=FALSE).

DO_HOTSPOT=FALSE

HOTSPOT_MB=8

HOTSPOT_OFFSET_MB=16

HOTSPOT_FREQUENCY=3

#### The following controls operations on Hot Schema

#### Default Value: 0. Default setting disables Hot Schema

HOT_SCHEMA_FREQUENCY=0

#### The following parameters control think time between SLOB

#### operations (SQL Executions).

#### Setting the frequency to 0 disables think time.

THINK_TM_FREQUENCY=0

THINK_TM_MIN=.1

THINK_TM_MAX=.5

oracle@oracle122-rhel:DBPROD:/u01/software/SLOB/SLOB>

The SLOB workload is started with the command ‘/u01/software/SLOB/SLOB/runit.sh -s 1 -t 100’ (one schema, 100 threads).

Oracle without 2M Huge Pages support

Let’s run a workload against the database without any Huge Page support and watch the value of the ‘PageTables’ entry in /proc/meminfo.

Run the SLOB workload with the command ‘/u01/software/SLOB/SLOB/runit.sh -s 1 -t 100’.

The workload started at Tue Nov 20 09:54:18 2018 and stopped at Tue Nov 20 09:59:40 2018.

At the start of the workload, the ‘PageTables’ entry value is 100212 kB.

[root@oracle122-rhel ~]# cat /proc/meminfo

MemTotal: 32781000 kB

MemFree: 4486760 kB

MemAvailable: 5496128 kB

Buffers: 18188 kB

Cached: 26958836 kB

SwapCached: 0 kB

Active: 2850436 kB

Inactive: 24939772 kB

Active(anon): 2652436 kB

Inactive(anon): 23990792 kB

Active(file): 198000 kB

Inactive(file): 948980 kB

Unevictable: 0 kB

Mlocked: 0 kB

SwapTotal: 6291452 kB

SwapFree: 6291452 kB

Dirty: 116 kB

Writeback: 0 kB

AnonPages: 813216 kB

Mapped: 1951948 kB

Shmem: 25830048 kB

Slab: 126696 kB

SReclaimable: 86464 kB

SUnreclaim: 40232 kB

KernelStack: 7872 kB

PageTables: 100212 kB

NFS_Unstable: 0 kB

Bounce: 0 kB

WritebackTmp: 0 kB

CommitLimit: 22681952 kB

Committed_AS: 27974276 kB

VmallocTotal: 34359738367 kB

VmallocUsed: 33759788 kB

VmallocChunk: 34325131260 kB

HardwareCorrupted: 0 kB

AnonHugePages: 0 kB

CmaTotal: 0 kB

CmaFree: 0 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

DirectMap4k: 118656 kB

DirectMap2M: 4075520 kB

DirectMap1G: 31457280 kB

[root@oracle122-rhel ~]#

Because Huge Pages are not set up, the ‘PageTables’ value in /proc/meminfo grows as the workload runs. It rises from 100,212 kB before the run to about 4.6 million kB (roughly 4.4 GiB). It drops back to 174,384 kB once the workload ends.

[root@oracle122-rhel ~]# date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 09:54:06 PST 2018

PageTables: 100212 kB

[root@oracle122-rhel ~]# date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 09:54:27 PST 2018

PageTables: 100572 kB

[root@oracle122-rhel ~]# date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 09:54:35 PST 2018

PageTables: 245776 kB

[root@oracle122-rhel ~]# date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 09:54:41 PST 2018

PageTables: 1296428 kB

[root@oracle122-rhel ~]# date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 09:54:46 PST 2018

PageTables: 2114144 kB

……

[root@oracle122-rhel ~]# date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 09:58:00 PST 2018

PageTables: 4584096 kB

……

[root@oracle122-rhel ~]# date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 09:59:19 PST 2018

PageTables: 4597900 kB

……

[root@oracle122-rhel ~]# date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 09:59:49 PST 2018

PageTables: 174384 kB

[root@oracle122-rhel ~]#
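The PageTables readings above were taken by re-running `date ; echo ; cat /proc/meminfo | grep PageTables` by hand. A small sampler like the hypothetical one below collects the same data at a fixed interval, which makes the two runs easier to compare. The function names and the 5-second default interval are illustrative choices, not part of the original test.

```python
import time
from datetime import datetime
from pathlib import Path


def page_tables_kb(meminfo_text: str) -> int:
    """Extract the PageTables value (kB) from /proc/meminfo text."""
    for line in meminfo_text.splitlines():
        if line.startswith("PageTables:"):
            return int(line.split()[1])
    raise KeyError("PageTables not found")


def sample_page_tables(interval_s: float = 5.0, count: int = 72,
                       path: str = "/proc/meminfo") -> list:
    """Print and return (timestamp, PageTables kB) pairs, one every interval_s seconds.

    The defaults (72 samples, 5 s apart) cover roughly six minutes.
    """
    samples = []
    for i in range(count):
        if i:
            time.sleep(interval_s)
        now = datetime.now()
        kb = page_tables_kb(Path(path).read_text())
        print(f"{now:%a %b %d %H:%M:%S %Y}  PageTables: {kb} kB", flush=True)
        samples.append((now, kb))
    return samples
```

Start it just before runit.sh. The peak of a run is then `max(kb for _, kb in samples)`.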

The sar output below shows the swapping activity during the workload period. Keep in mind that the workload was not designed to produce much swapping.

[root@oracle122-rhel ~]# sar -S 5 1000000

Linux 3.10.0-862.3.3.el7.x86_64 (oracle122-rhel.vslab.local) 11/20/2018 _x86_64_ (6 CPU)

09:52:59 AM kbswpfree kbswpused %swpused kbswpcad %swpcad

09:53:04 AM 6291452 0 0.00 0 0.00

09:53:09 AM 6291452 0 0.00 0 0.00

………

09:54:54 AM 6291452 0 0.00 0 0.00

09:54:59 AM 6279420 12032 0.19 0 0.00

……

09:55:54 AM 6279420 12032 0.19 0 0.00

09:55:59 AM 6279420 12032 0.19 0 0.00

09:56:04 AM 6279420 12032 0.19 0 0.00

…….

09:57:19 AM 6279420 12032 0.19 0 0.00

09:57:24 AM 6279420 12032 0.19 0 0.00

09:57:24 AM kbswpfree kbswpused %swpused kbswpcad %swpcad

09:57:29 AM 6279420 12032 0.19 0 0.00

09:57:34 AM 6279420 12032 0.19 0 0.00

09:57:39 AM 6279420 12032 0.19 0 0.00

…….

09:58:09 AM 6279420 12032 0.19 0 0.00

09:58:14 AM 6279420 12032 0.19 0 0.00

……..

09:59:29 AM 6279420 12032 0.19 0 0.00

09:59:34 AM 6279420 12032 0.19 0 0.00

…..

09:59:53 AM 6279420 12032 0.19 0 0.00

Average: 6282754 8698 0.14 0 0.00

[root@oracle122-rhel ~]#

Oracle with 2M Huge Pages support

Let’s run the workload again, this time with Huge Page support, and watch the value of the ‘PageTables’ entry in /proc/meminfo.

The steps to configure 2M huge pages on Linux for Oracle workloads can be found here.

The parameter ‘vm.nr_hugepages’ needs to be set in a sysctl configuration file. In this setup it is set in the Oracle preinstall file under /etc/sysctl.d/, as shown below.

[root@oracle122-rhel ~]# cat /etc/sysctl.d/99-oracle-database-server-12cR2-preinstall-sysctl.conf

# sysctl settings are defined through files in

# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.

#

# Vendors settings live in /usr/lib/sysctl.d/.

# To override a whole file, create a new file with the same in

# /etc/sysctl.d/ and put new settings there. To override

# only specific settings, add a file with a lexically later

# name in /etc/sysctl.d/ and put new settings there.

#

# For more information, see sysctl.conf(5) and sysctl.d(5).

# oracle-database-server-12cR2-preinstall setting for fs.file-max is 6815744

fs.file-max = 6815744

………

vm.swappiness = 1

vm.nr_hugepages = 12290

[root@oracle122-rhel ~]#
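The configured vm.nr_hugepages value can be checked against the SGA size. A 24G SGA divided by the 2048 kB Hugepagesize gives 12,288 pages, and the configured value of 12290 is two pages more.

The /proc/meminfo output of the second test below shows HugePages_Free: 0. That suggests the instance used all 12290 pages, so a small margin above the bare SGA size appears to be needed. The sketch below shows the arithmetic; the function name and the margin parameter are illustrative.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3


def hugepages_needed(sga_bytes: int, page_bytes: int, extra_pages: int = 0) -> int:
    """Huge pages needed to hold the whole SGA, rounded up, plus an optional margin."""
    return -(-sga_bytes // page_bytes) + extra_pages  # integer ceiling division


sga = 24 * GIB  # sga_target = sga_max_size = 24G in this test

print(hugepages_needed(sga, 2 * MIB))     # 12288 pages of 2M
print(hugepages_needed(sga, 2 * MIB, 2))  # 12290, the vm.nr_hugepages value above
print(hugepages_needed(sga, GIB, 1))      # 25, the hugepages= value in the 1G GRUB setup
```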

The ‘memlock’ limit in this example is set to unlimited; specific values can be used instead.

[root@oracle122-rhel limits.d]# cat /etc/security/limits.d/oracle-database-server-12cR2-preinstall.conf

….

oracle soft memlock unlimited

oracle hard memlock unlimited

grid soft memlock unlimited

grid hard memlock unlimited

[root@oracle122-rhel limits.d]#

The database is restarted with the initialization parameter *.USE_LARGE_PAGES='only'. With this setting, the instance starts only if the entire SGA can be allocated from huge pages.

Run the SLOB workload with the command ‘/u01/software/SLOB/SLOB/runit.sh -s 1 -t 100’.

The workload started at Tue Nov 20 11:07:44 2018 and stopped at Tue Nov 20 11:13:05 2018.

At the start of the workload, the ‘PageTables’ entry value is 62608 kB.

[root@oracle122-rhel ~]# cat /proc/meminfo

MemTotal: 32781000 kB

MemFree: 4666508 kB

MemAvailable: 5416560 kB

Buffers: 15968 kB

Cached: 1618560 kB

SwapCached: 356 kB

Active: 1893596 kB

Inactive: 634444 kB

Active(anon): 1494936 kB

Inactive(anon): 103924 kB

Active(file): 398660 kB

Inactive(file): 530520 kB

Unevictable: 0 kB

Mlocked: 0 kB

SwapTotal: 6291452 kB

SwapFree: 6282236 kB

Dirty: 100 kB

Writeback: 0 kB

AnonPages: 942316 kB

Mapped: 133792 kB

Shmem: 656188 kB

Slab: 75664 kB

SReclaimable: 32688 kB

SUnreclaim: 42976 kB

KernelStack: 7904 kB

PageTables: 62608 kB

NFS_Unstable: 0 kB

Bounce: 0 kB

WritebackTmp: 0 kB

CommitLimit: 10096992 kB

Committed_AS: 2845696 kB

VmallocTotal: 34359738367 kB

VmallocUsed: 33759788 kB

VmallocChunk: 34325131260 kB

HardwareCorrupted: 0 kB

AnonHugePages: 0 kB

CmaTotal: 0 kB

CmaFree: 0 kB

HugePages_Total: 12290

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

DirectMap4k: 118656 kB

DirectMap2M: 4075520 kB

DirectMap1G: 31457280 kB

[root@oracle122-rhel ~]#

This time, the ‘PageTables’ value rises from 61,676 kB to about 133,500 kB within the first 30 seconds. It then stays roughly flat for the rest of the workload, peaking at 135,100 kB, because the SGA is backed by Huge Pages. After the workload ends, it drops back to 64,572 kB.

oracle@oracle122-rhel:DBPROD:/home/oracle> date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 11:07:50 PST 2018

PageTables: 61676 kB

oracle@oracle122-rhel:DBPROD:/home/oracle> date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 11:07:51 PST 2018

PageTables: 61676 kB

….

oracle@oracle122-rhel:DBPROD:/home/oracle> date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 11:08:18 PST 2018

PageTables: 133540 kB

……

oracle@oracle122-rhel:DBPROD:/home/oracle> date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 11:09:23 PST 2018

PageTables: 134156 kB

oracle@oracle122-rhel:DBPROD:/home/oracle> date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 11:10:07 PST 2018

PageTables: 133540 kB

oracle@oracle122-rhel:DBPROD:/home/oracle> date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 11:10:15 PST 2018

PageTables: 133540 kB

oracle@oracle122-rhel:DBPROD:/home/oracle> date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 11:10:16 PST 2018

PageTables: 133868 kB

…….

oracle@oracle122-rhel:DBPROD:/home/oracle> date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 11:10:58 PST 2018

PageTables: 133560 kB

……

oracle@oracle122-rhel:DBPROD:/home/oracle> date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 11:12:12 PST 2018

PageTables: 134600 kB

……..

oracle@oracle122-rhel:DBPROD:/home/oracle> date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 11:12:14 PST 2018

PageTables: 134600 kB

………

oracle@oracle122-rhel:DBPROD:/home/oracle> date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 11:12:54 PST 2018

PageTables: 135100 kB

……….

oracle@oracle122-rhel:DBPROD:/home/oracle> date ; echo ; cat /proc/meminfo | grep PageTables

Tue Nov 20 11:13:53 PST 2018

PageTables: 64572 kB

As explained before, the workload was not designed to produce much swapping.

[root@oracle122-rhel ~]# sar -S 5 1000000

Linux 3.10.0-862.3.3.el7.x86_64 (oracle122-rhel.vslab.local) 11/20/2018 _x86_64_ (6 CPU)

11:07:21 AM kbswpfree kbswpused %swpused kbswpcad %swpcad

11:07:26 AM 6282236 9216 0.15 484 5.25

11:07:31 AM 6282236 9216 0.15 484 5.25

11:07:36 AM 6282236 9216 0.15 484 5.25

11:07:41 AM 6282236 9216 0.15 484 5.25

11:07:46 AM 6282236 9216 0.15 484 5.25

………

11:14:06 AM 6282236 9216 0.15 504 5.47

11:14:11 AM 6282236 9216 0.15 504 5.47

11:14:13 AM 6282236 9216 0.15 504 5.47

Average: 6282236 9216 0.15 496 5.38

[root@oracle122-rhel ~]#

Analysis of the tests

The two tests above show that HugePages reduce the kernel's bookkeeping work for this part of virtual memory, because of the larger page size. PageTables peaked at about 4.6 million kB without HugePages, but stayed at about 135,000 kB with them. Larger pages also increase TLB hits, which improves performance.
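A back-of-the-envelope estimate explains most of this difference. On x86-64 each page table entry is 8 bytes.

If each of the 100 SLOB sessions ends up mapping the whole 24 GiB SGA with 4K pages, it needs 24 GiB / 4 KiB × 8 bytes = 48 MiB of page table entries. For 100 processes that is about 4.9 million kB, close to the 4,597,900 kB observed. With 2M pages the same mapping needs only 24 GiB / 2 MiB × 8 bytes = 96 KiB per process.

The sketch below rests on several assumptions:

- every process touches the entire SGA;
- upper-level page tables are ignored;
- memory not backed by huge pages is ignored.

It is therefore only an approximation. In particular, it does not account for the roughly 70,000 kB growth seen in the second test.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB
ENTRY_BYTES = 8  # one x86-64 page table entry


def page_table_kb(mapped_bytes: int, page_bytes: int, processes: int) -> int:
    """Rough page table memory (kB) for `processes` processes that each map
    `mapped_bytes` of shared memory with pages of size `page_bytes`.
    Counts only the entries that map the pages themselves."""
    entries_per_process = mapped_bytes // page_bytes
    return entries_per_process * ENTRY_BYTES * processes // KIB


sga = 24 * GIB
print(page_table_kb(sga, 4 * KIB, 100))  # 4915200 kB with 4K pages
print(page_table_kb(sga, 2 * MIB, 100))  # 9600 kB with 2M pages
```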

VMware vSphere 6.7 – Backing Guest vRAM with 1GB Pages

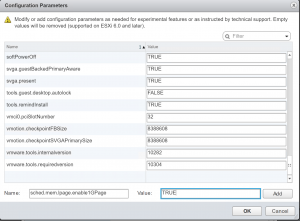

VMware vSphere 6.7 adds 1GB huge page support for VMs. To use 1GB pages for backing guest memory, the following preconditions must be met:

- Power off the VM, then set the advanced VM option sched.mem.lpage.enable1GPage = "TRUE" (1GB pages can only be enabled on a VM that is powered off)

- A VM with 1GB pages enabled must have a full memory reservation; otherwise, the VM will not be able to power on

In practice, I was able to power on the VM without any memory reservation. Keep in mind that this was the only VM running on the host at that time.

Considerations using VMware vSphere 6.7 1GB Huge Page for Guests

- 1GB page vRAM backing is opportunistic, and 1GB pages are allocated on a best-effort basis. This includes cases where host CPUs do not have 1GB capabilities. To maximize the chances of having guest vRAM backed with 1GB pages, the recommendation is to start VMs requiring 1GB pages on a freshly booted host, because host RAM becomes fragmented over time.

- A VM with 1GB pages enabled can be migrated to a different host. However, the 1GB page size might not be allocated on the destination host in the same amount as it was on the source host. You might also see part of vRAM backed with a 1GB page on the source host is no longer backed with a 1GB page on the destination host.

- The opportunistic nature of 1GB pages extends to vSphere services such as HA and DRS that might not preserve 1GB page vRAM backing. These services are not aware of the 1GB capabilities of the destination host and do not take 1GB memory backing into account when making placement decisions.

More details on this can be found here.

Configure 1GB OS Huge Page for Oracle Workloads

We now need to configure the OS to take advantage of the 1GB Huge Page support.

From ‘The Linux kernel user’s and administrator’s guide’:

hugepagesz = [HW,IA-64,PPC,X86-64] The size of the HugeTLB pages. On x86-64 and powerpc, this option can be specified multiple times interleaved with hugepages= to reserve huge pages of different sizes. Valid pages sizes on x86-64 are 2M (when the CPU supports “pse”) and 1G (when the CPU supports the “pdpe1gb” cpuinfo flag).

The Oracle VM has 6 vCPUs, and /proc/cpuinfo shows the pdpe1gb flag for each of them:

[root@oracle122-rhel ~]# cat /proc/cpuinfo | grep pdpe1gb

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon nopl xtopology tsc_reliable nonstop_tsc eagerfpu pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 invpcid rtm mpx avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec ibpb ibrs stibp arat pku ospke spec_ctrl intel_stibp arch_capabilities

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon nopl xtopology tsc_reliable nonstop_tsc eagerfpu pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 invpcid rtm mpx avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec ibpb ibrs stibp arat pku ospke spec_ctrl intel_stibp arch_capabilities

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon nopl xtopology tsc_reliable nonstop_tsc eagerfpu pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 invpcid rtm mpx avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec ibpb ibrs stibp arat pku ospke spec_ctrl intel_stibp arch_capabilities

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon nopl xtopology tsc_reliable nonstop_tsc eagerfpu pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 invpcid rtm mpx avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec ibpb ibrs stibp arat pku ospke spec_ctrl intel_stibp arch_capabilities

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon nopl xtopology tsc_reliable nonstop_tsc eagerfpu pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 invpcid rtm mpx avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec ibpb ibrs stibp arat pku ospke spec_ctrl intel_stibp arch_capabilities

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon nopl xtopology tsc_reliable nonstop_tsc eagerfpu pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 invpcid rtm mpx avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec ibpb ibrs stibp arat pku ospke spec_ctrl intel_stibp arch_capabilities

[root@oracle122-rhel ~]#
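The same check can be scripted. The hypothetical helper below collects the flags line of every CPU in /proc/cpuinfo and reports which huge page sizes all CPUs support. It uses the flag-to-size mapping from the kernel documentation quoted above: pse for 2M, pdpe1gb for 1G.

```python
from pathlib import Path

# x86-64 CPU flag -> huge page size it enables (per the kernel parameter docs).
FLAG_TO_SIZE = {"pse": "2M", "pdpe1gb": "1G"}


def supported_hugepage_sizes(cpuinfo_text: str) -> list:
    """Return the huge page sizes whose flag is present on every CPU."""
    flag_sets = [
        set(line.split(":", 1)[1].split())
        for line in cpuinfo_text.splitlines()
        if line.startswith("flags") and ":" in line
    ]
    if not flag_sets:
        return []
    common = set.intersection(*flag_sets)
    return [size for flag, size in FLAG_TO_SIZE.items() if flag in common]


if __name__ == "__main__" and Path("/proc/cpuinfo").exists():
    print(supported_hugepage_sizes(Path("/proc/cpuinfo").read_text()))
```

Splitting on whitespace keeps `pse36` from being mistaken for `pse`. On the VM above, whose flags include both pse and pdpe1gb, the helper returns `['2M', '1G']`.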

Remove any prior 2M huge page settings (vm.nr_hugepages) from the sysctl configuration. The OS needs to be set up for 1GB huge page support before Oracle can take advantage of it.

Red Hat Enterprise Linux 6.7 systems and onwards support 2 MB and 1 GB huge pages, which can be allocated at boot or at runtime.

The GRUB defaults file (/etc/default/grub) must be set up as shown below:

[root@oracle122-rhel sysctl.d]# cat /etc/default/grub

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"

GRUB_DEFAULT=saved

GRUB_DISABLE_SUBMENU=true

GRUB_TERMINAL_OUTPUT="console"

GRUB_CMDLINE_LINUX="crashkernel=auto rd.lvm.lv=rhel/root rd.lvm.lv=rhel/swap rhgb quiet net.ifnames=0 biosdevname=0 ipv6.disable=1 elevator=noop transparent_hugepage=never vmw_pvscsi.cmd_per_lun=254 vmw_pvscsi.ring_pages=32 numa=off ipv6.disable=1 default_hugepagesz=1G hugepagesz=1G hugepages=25"

GRUB_DISABLE_RECOVERY="true"

[root@oracle122-rhel sysctl.d]#

Regenerate the GRUB configuration with the command ‘grub2-mkconfig -o /boot/grub2/grub.cfg’, then reboot the OS for the changes to take effect.
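After the reboot, it is worth confirming that the running kernel actually received the new parameters. /proc/cmdline shows the command line the kernel booted with.

The sketch below (function name hypothetical) pulls out the huge page related parameters in their original order. Order matters because, per the kernel documentation quoted above, hugepages= is interleaved with hugepagesz= and applies to the size given before it.

```python
from pathlib import Path

HUGEPAGE_PARAMS = {"default_hugepagesz", "hugepagesz", "hugepages", "transparent_hugepage"}


def hugepage_boot_params(cmdline: str) -> list:
    """Return (name, value) pairs for huge page boot parameters, in command-line order."""
    pairs = []
    for token in cmdline.split():
        name, sep, value = token.partition("=")
        if sep and name in HUGEPAGE_PARAMS:
            pairs.append((name, value))
    return pairs


if __name__ == "__main__" and Path("/proc/cmdline").exists():
    for name, value in hugepage_boot_params(Path("/proc/cmdline").read_text()):
        print(f"{name}={value}")
```

With the GRUB_CMDLINE_LINUX shown above, the helper should list transparent_hugepage=never, default_hugepagesz=1G, hugepagesz=1G and hugepages=25.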

After the reboot, check /proc/meminfo:

[root@oracle122-rhel ~]# cat /proc/meminfo

MemTotal: 32781000 kB

MemFree: 4242024 kB

MemAvailable: 5143100 kB

Buffers: 15344 kB

Cached: 1591996 kB

SwapCached: 0 kB

Active: 1152192 kB

Inactive: 808700 kB

Active(anon): 1005336 kB

Inactive(anon): 12784 kB

Active(file): 146856 kB

Inactive(file): 795916 kB

Unevictable: 0 kB

Mlocked: 0 kB

SwapTotal: 6291452 kB

SwapFree: 6291452 kB

Dirty: 44 kB

Writeback: 0 kB

AnonPages: 353404 kB

Mapped: 144532 kB

Shmem: 664572 kB

Slab: 65028 kB

SReclaimable: 29332 kB

SUnreclaim: 35696 kB

KernelStack: 7376 kB

PageTables: 24664 kB

NFS_Unstable: 0 kB

Bounce: 0 kB

WritebackTmp: 0 kB

CommitLimit: 9574752 kB

Committed_AS: 1931700 kB

VmallocTotal: 34359738367 kB

VmallocUsed: 33760828 kB

VmallocChunk: 34325131260 kB

HardwareCorrupted: 0 kB

AnonHugePages: 0 kB

CmaTotal: 0 kB

CmaFree: 0 kB

HugePages_Total: 25

HugePages_Free: 25

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 1048576 kB

DirectMap4k: 141184 kB

DirectMap2M: 5101568 kB

DirectMap1G: 30408704 kB

[root@oracle122-rhel ~]#
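The reservation can also be checked arithmetically: HugePages_Total multiplied by Hugepagesize is the memory set aside for the pool. These /proc/meminfo counters describe only the default huge page size, which default_hugepagesz=1G sets to 1G here.

For this VM the pool is 25 × 1,048,576 kB = 26,214,400 kB (25 GiB). That covers the 24G SGA, which is 25,165,824 kB. The helper name below is hypothetical; the sample values are copied from the output above.

```python
def hugepage_pool_kb(meminfo_text: str) -> int:
    """Return HugePages_Total x Hugepagesize (kB) from /proc/meminfo text."""
    values = {}
    for line in meminfo_text.splitlines():
        key, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields:
            values[key.strip()] = int(fields[0])
    return values["HugePages_Total"] * values["Hugepagesize"]


after_reboot = """HugePages_Total: 25
HugePages_Free: 25
Hugepagesize: 1048576 kB
"""
pool_kb = hugepage_pool_kb(after_reboot)
sga_kb = 24 * 1024 * 1024  # 24G SGA expressed in kB
print(pool_kb, pool_kb >= sga_kb)  # 26214400 True
```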

Mount a hugetlbfs file system for the 1GB huge pages:

# mkdir /dev/hugepages1G

# mount -t hugetlbfs -o pagesize=1G none /dev/hugepages1G

[root@oracle122-rhel ~]# df -a

Filesystem 1K-blocks Used Available Use% Mounted on

rootfs – – – – /

sysfs 0 0 0 – /sys

proc 0 0 0 – /proc

devtmpfs 16378504 0 16378504 0% /dev

securityfs 0 0 0 – /sys/kernel/security

tmpfs 25165824 655360 24510464 3% /dev/shm

devpts 0 0 0 – /dev/pts

tmpfs 16390500 9176 16381324 1% /run

…..

……

tmpfs 3278100 0 3278100 0% /run/user/0

none 0 0 0 – /dev/hugepages1G

[root@oracle122-rhel ~]#

If this Linux system is also a KVM host running libvirt guests, restart libvirtd so that those guests can use the 1GB huge pages:

systemctl restart libvirtd

The OS now has 1GB huge page support enabled.

Taking advantage of the 1GB OS Huge Page

Now let’s see if Oracle workloads can take advantage of the 1GB huge pages.

oracle@oracle122-rhel:DBPROD:/home/oracle> ./start_db

SQL*Plus: Release 12.2.0.1.0 Production on Tue Nov 20 17:01:47 2018

Copyright (c) 1982, 2016, Oracle. All rights reserved.

SQL> Connected to an idle instance.

SQL> ORA-27123: unable to attach to shared memory segment

Linux-x86_64 Error: 22: Invalid argument

Additional information: 2736

Additional information: 131075

Additional information: 1610612736

SQL> alter system register

*

ERROR at line 1:

ORA-01034: ORACLE not available

Process ID: 0

Session ID: 0 Serial number: 0

SQL> Disconnected

oracle@oracle122-rhel:DBPROD:/home/oracle>

Check whether the system has adequate free memory:

oracle@oracle122-rhel:DBPROD:/home/oracle> free -g

total used free shared buff/cache available

Mem: 31 25 3 0 1 4

Swap: 5 0 5

oracle@oracle122-rhel:DBPROD:/home/oracle>

Trace the Oracle startup process with strace to see what the real issue is:

oracle@oracle122-rhel:DBPROD:/home/oracle> strace -p 12009

strace: Process 12009 attached

read(0, "startup pfile=/u01/app/oracle/pr"..., 1024) = 73

write(10, “134263^5a200376377377377377377377377221″…, 482) = 482

read(11, “vf 11vf 12”, 8208) = 22

write(10, “vf 12”, 11) = 11

read(11, “2736411n4″…, 8208) = 187

open("/u01/app/oracle/product/12.2.0/dbhome_1/rdbms/mesg/oraus.msb", O_RDONLY) = 9

fcntl(9, F_SETFD, FD_CLOEXEC) = 0

lseek(9, 0, SEEK_SET) = 0

read(9, “2523”1233tt″…, 280) = 280

lseek(9, 512, SEEK_SET) = 512

read(9, “321f201265>V[3oW200240212202231k273360375377377″…, 512) = 512

lseek(9, 1024, SEEK_SET) = 1024

read(9, “30$.009BNYdlv203215240310321331″…, 512) = 512

lseek(9, 132608, SEEK_SET) = 132608

read(9, “vGfJHffIftJf221Kf264″…, 512) = 512

close(9) = 0

open("/u01/app/oracle/product/12.2.0/dbhome_1/rdbms/mesg/oraus.msb", O_RDONLY) = 9

fcntl(9, F_SETFD, FD_CLOEXEC) = 0

lseek(9, 0, SEEK_SET) = 0

read(9, “2523”1233tt″…, 280) = 280

lseek(9, 512, SEEK_SET) = 512

read(9, “321f201265>V[3oW200240212202231k273360375377377″…, 512) = 512

lseek(9, 1024, SEEK_SET) = 1024

read(9, “30$.009BNYdlv203215240310321331″…, 512) = 512

lseek(9, 132096, SEEK_SET) = 132096

read(9, “v<fJ=fi>f201?f274@f323″…, 512) = 512

close(9) = 0

open("/u01/app/oracle/product/12.2.0/dbhome_1/oracore/mesg/lrmus.msb", O_RDONLY) = 9

fcntl(9, F_SETFD, FD_CLOEXEC) = 0

lseek(9, 0, SEEK_SET) = 0

read(9, “2523”1233tt″…, 280) = 280

uname({sysname="Linux", nodename="oracle122-rhel.vslab.local", ...}) = 0

write(10, “24163m63763773773773773773773771376377377″…, 161) = 161

read(11, “316101t1”, 8208) = 25

open("/u01/app/oracle/product/12.2.0/dbhome_1/dbs/pfile_0904.txt", O_RDONLY) = 12

stat("/u01/app/oracle/product/12.2.0/dbhome_1/dbs/pfile_0904.txt", {st_mode=S_IFREG|0644, st_size=1111, ...}) = 0

fstat(12, {st_mode=S_IFREG|0644, st_size=1111, ...}) = 0

mmap(NULL, 4096, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0x7fd09e13d000

read(12, "*.audit_file_dest='/u01/admin/DB"..., 4096) = 1111

get_mempolicy(NULL, NULL, 0, NULL, 0) = 0

getrlimit(RLIMIT_STACK, {rlim_cur=10240*1024, rlim_max=32768*1024}) = 0

open("/proc/sys/kernel/shmmax", O_RDONLY) = 13

fstat(13, {st_mode=S_IFREG|0644, st_size=0, ...}) = 0

mmap(NULL, 4096, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0x7fd09e13c000

read(13, "4398046511104\n", 1024) = 14

close(13) = 0

munmap(0x7fd09e13c000, 4096) = 0

get_mempolicy(NULL, NULL, 0, NULL, 0) = 0

open("/proc/meminfo", O_RDONLY) = 13

fstat(13, {st_mode=S_IFREG|0444, st_size=0, ...}) = 0

mmap(NULL, 4096, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0x7fd09e13c000

read(13, "MemTotal: 32781000 kB\nMemF"..., 1024) = 1024

read(13, " 0 kB\nCmaFree: "..., 1024) = 258

close(13) = 0

munmap(0x7fd09e13c000, 4096) = 0

times(NULL) = 429587365

write(10, “25063o73763773773773773773773771376377377″…, 168) = 168

read(11, “316101t1”, 8208) = 25

write(10, “23263o103763773773773773773773771376377377″…, 154) = 154

read(11, “316101t1”, 8208) = 25

write(10, “21763ot3763773773773773773773771376377377″…, 143) = 143

read(11, “316101t1”, 8208) = 25

write(10, “23763on3763773773773773773773771376377377″…, 159) = 159

read(11, “316101t1”, 8208) = 25

write(10, “22663ov3763773773773773773773771376377377″…, 150) = 150

read(11, “316101t1”, 8208) = 25

write(10, “24563of3763773773773773773773771376377377″…, 165) = 165

read(11, “316101t1”, 8208) = 25

write(10, “24563or3763773773773773773773771376377377″…, 165) = 165

read(11, “316101t1”, 8208) = 25

write(10, “24563o163763773773773773773773771376377377″…, 165) = 165

read(11, “316101t1”, 8208) = 25

write(10, “22363o173763773773773773773773771376377377″…, 147) = 147

read(11, “316101t1”, 8208) = 25

write(10, “22363o203763773773773773773773771376377377″…, 147) = 147

read(11, “316101t1”, 8208) = 25

write(10, “23563o213763773773773773773773771376377377″…, 157) = 157

read(11, “316101t1”, 8208) = 25

write(10, “21363o223763773773773773773773771376377377″…, 139) = 139

read(11, “316101t1”, 8208) = 25

write(10, “21763o233763773773773773773773771376377377″…, 143) = 143

read(11, “316101t1”, 8208) = 25

write(10, “23763o243763773773773773773773771376377377″…, 159) = 159

read(11, “316101t1”, 8208) = 25

write(10, “25063o253763773773773773773773771376377377″…, 168) = 168

read(11, “316101t1”, 8208) = 25

write(10, “24263o263763773773773773773773771376377377″…, 162) = 162

read(11, “316101t1”, 8208) = 25

write(10, “23763o273763773773773773773773771374177376377377″…, 159) = 159

read(11, “316101t1”, 8208) = 25

write(10, “22563o303763773773773773773773771374177376377377″…, 149) = 149

read(11, “316101t1”, 8208) = 25

write(10, “22263o313763773773773773773773771374177376377377″…, 146) = 146

read(11, “316101t1”, 8208) = 25

write(10, “24063o323763773773773773773773771374177376377377″…, 160) = 160

read(11, “316101t1”, 8208) = 25

write(10, “22263o333763773773773773773773771374177376377377″…, 146) = 146

read(11, “316101t1”, 8208) = 25

write(10, “23763o343763773773773773773773771374177376377377″…, 159) = 159

read(11, “316101t1”, 8208) = 25

write(10, “23163o353763773773773773773773771374177376377377″…, 153) = 153

read(11, “316101t1”, 8208) = 25

write(10, “23063o363763773773773773773773771374177376377377″…, 152) = 152

read(11, “316101t1”, 8208) = 25

write(10, “22763o373763773773773773773773771374177376377377″…, 151) = 151

read(11, “316101t1”, 8208) = 25

write(10, “21763o 3763773773773773773773771374177376377377″…, 143) = 143

read(11, “316101t1”, 8208) = 25

write(10, “24463o!3763773773773773773773771374177376377377″…, 164) = 164

read(11, “316101t1”, 8208) = 25

write(10, “22763o”3763773773773773773773771374177376377377″…, 151) = 151

read(11, “316101t1”, 8208) = 25

write(10, “22163o#3763773773773773773773771374177376377377″…, 145) = 145

read(11, “316101t1”, 8208) = 25

write(10, “21763o$3763773773773773773773771374177376377377″…, 143) = 143

read(11, “316101t1”, 8208) = 25

write(10, “21163o%3763773773773773773773771374177376377377″…, 137) = 137

read(11, “316101t1”, 8208) = 25

write(10, “23263o&3763773773773773773773771374177376377377″…, 154) = 154

read(11, “316101t1”, 8208) = 25

write(10, “22563o’3763773773773773773773771374177376377377″…, 149) = 149

read(11, “316101t1”, 8208) = 25

close(12) = 0

munmap(0x7fd09e13d000, 4096) = 0

write(10, “-63n(3763773773773773773773771374177376377377″…, 45) = 45

read(11, “316101t1”, 8208) = 25

close(9) = 0

write(10, “356030)374177376377377377377377377377”, 29) = 29

read(11, “1X6411363i″…, 8208) = 344

write(1, "ORA-27123: unable to attach to s"..., 53) = 53

write(1, "Linux-x86_64 Error: 22: Invalid "..., 41) = 41

write(1, "Additional information: 2736\n", 29) = 29

write(1, "Additional information: 163843\n", 31) = 31

write(1, "Additional information: 16106127"..., 35) = 35

write(1, "SQL> ", 5) = 5

read(0, "", 1024) = 0

write(10, “r63t*”, 13) = 13

read(11, “216t1”, 8208) = 17

lseek(4, 15872, SEEK_SET) = 15872

read(4, “n2432D2442_245220624622772522371″…, 512) = 512

write(1, "Disconnected\n", 13) = 13

write(10, “n6 @”, 10) = 10

close(10) = 0

close(11) = 0

rt_sigprocmask(SIG_BLOCK, [INT], NULL, 8) = 0

rt_sigaction(SIGINT, {SIG_DFL, ~[ILL TRAP ABRT BUS FPE SEGV USR2 TERM XCPU XFSZ SYS RTMIN RT_1], SA_RESTORER|SA_RESTART|SA_SIGINFO, 0x7fd0986b7680}, {0x7fd099c68db0, ~[ILL TRAP ABRT BUS FPE KILL SEGV USR2 TERM STOP XCPU XFSZ SYS RTMIN RT_1], SA_RESTORER|SA_RESTART|SA_SIGINFO, 0x7fd0986b7680}, 8) = 0

rt_sigprocmask(SIG_UNBLOCK, [INT], NULL, 8) = 0

close(8) = 0

munmap(0x7fd09e017000, 176128) = 0

close(5) = 0

close(3) = 0

close(4) = 0

munmap(0x7fd09e102000, 143360) = 0

exit_group(0) = ?

+++ exited with 0 +++

oracle@oracle122-rhel:DBPROD:/home/oracle>
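Shortly before the ORA-27123 error is printed, the trace shows the Oracle process reading /proc/sys/kernel/shmmax (4398046511104 bytes, i.e. 4 TiB) and /proc/meminfo. The minimal Python sketch below performs a similar read-only check by hand: it reports the default huge page size (the Hugepagesize field) and the size of the HugePages pool. It is an illustration only, not Oracle's own logic, and the function names are hypothetical.

```python
import os


def parse_meminfo(text):
    """Map each /proc/meminfo field to the integer that follows it (kB or a count)."""
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        fields = rest.split()
        if not sep or not fields:
            continue
        try:
            info[key.strip()] = int(fields[0])
        except ValueError:
            continue  # skip anything that is not a plain number
    return info


def hugepage_summary(meminfo_text, shmmax_text):
    """Hypothetical helper: default huge page size plus pool sizes in bytes."""
    info = parse_meminfo(meminfo_text)
    page_kb = info.get("Hugepagesize", 0)  # default huge page size, in kB
    return {
        "default_hugepage_kb": page_kb,
        "pool_total_bytes": info.get("HugePages_Total", 0) * page_kb * 1024,
        "pool_free_bytes": info.get("HugePages_Free", 0) * page_kb * 1024,
        "shmmax_bytes": int(shmmax_text.strip()),
    }


if __name__ == "__main__" and os.path.exists("/proc/sys/kernel/shmmax"):
    with open("/proc/meminfo") as f:
        meminfo = f.read()
    with open("/proc/sys/kernel/shmmax") as f:
        shmmax = f.read()
    for key, value in hugepage_summary(meminfo, shmmax).items():
        print(f"{key}: {value}")
```

The HugePages_* fields in /proc/meminfo describe only the pool of the default huge page size, which is the size the shm*() interface uses.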

On further investigation, Oracle Metalink Note 1607545.1, ‘Startup Error ORA-27123: Unable To Attach To Shared Memory Segment Linux-x86_64 Error: 22: Invalid argument (Doc ID 1607545.1)’, describes this issue with a similar example: starting up a database with a 3GB SGA backed by 1GB huge pages and encountering the same error.

According to the Metalink note: ‘As per unpublished Bug 17271305, the issue is in Oracle code. Ideally the fixed SGA would be in 2MB HugePages (it is small enough to fit into a 2MB huge page), but would require additional changes to Oracle code since the Linux shm*() interface only supports one hugepage size (whatever is set to default, either 2MB or 1GB).’
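The note does not spell out the exact failure mechanism, but the sizing side of the single-page-size restriction is easy to illustrate. With only one page size in play, every huge-page-backed segment is rounded up to a whole number of pages of that size. The sketch below assumes a hypothetical split of roughly the note's 3GB SGA into a small fixed SGA (1 MiB is an assumed size; the note only says it fits in a 2MB page) and a 3 GiB variable part.

```python
KiB = 1024
MiB = 1024 * KiB
GiB = 1024 * MiB


def pages_needed(segment_bytes, page_bytes):
    """Huge pages needed to back one segment: sizes round up to whole pages."""
    return -(-segment_bytes // page_bytes)  # ceiling division on integers


def footprint(segment_sizes, page_bytes):
    """Total huge page memory consumed when every segment uses one page size."""
    return sum(pages_needed(s, page_bytes) * page_bytes for s in segment_sizes)


# Hypothetical SGA split; the 1 MiB fixed SGA size is an assumption.
segments = {"fixed SGA": 1 * MiB, "variable SGA": 3 * GiB}

for label, page_bytes in (("2 MiB", 2 * MiB), ("1 GiB", 1 * GiB)):
    for name, size in segments.items():
        print(f"{label} pages, {name}: {pages_needed(size, page_bytes)} page(s)")
    total_mib = footprint(segments.values(), page_bytes) // MiB
    print(f"{label} pages, total consumed: {total_mib} MiB")
```

With 2 MiB pages the two segments consume 3074 MiB; with 1 GiB pages the 1 MiB fixed SGA alone occupies a full 1 GiB page, for 4096 MiB in total.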

Conclusion

From the above tests, we can see that using HugePages reduces the kernel's bookkeeping work for that part of virtual memory, because larger pages mean fewer page table entries, and improves performance through more TLB hits.
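The bookkeeping argument can be made concrete with a little arithmetic. On x86-64 each page table entry is 8 bytes, and a region needs one leaf entry per page, so the number of entries (and of translations the TLB would need to cache to cover the whole region) shrinks as the page size grows. This sketch counts only the leaf entries a single process needs to map a region; the 3 GiB size is illustrative, and upper-level tables and any kernel page table sharing are ignored.

```python
KiB = 1024
MiB = 1024 * KiB
GiB = 1024 * MiB
ENTRY_BYTES = 8  # size of one x86-64 page table entry


def mapping_entries(region_bytes, page_bytes):
    """Leaf page table entries (one per page) needed to map a region."""
    return -(-region_bytes // page_bytes)  # ceiling division


region = 3 * GiB  # illustrative SGA-sized region
for label, page in (("4 KiB", 4 * KiB), ("2 MiB", 2 * MiB), ("1 GiB", 1 * GiB)):
    n = mapping_entries(region, page)
    print(f"{label} pages: {n} entries, {n * ENTRY_BYTES} bytes of entries")
```

For 3 GiB this gives 786,432 entries (6 MiB of entries) with 4 KiB pages versus 1,536 entries (12 KiB) with 2 MiB pages. Since each TLB entry also maps one page, a 2 MiB translation covers 512 times as much memory as a 4 KiB one.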

Starting with VMware vSphere 6.7, 1GB huge page support is provided for backing a VM's vRAM, with two caveats: the VM needs a full memory reservation, and 1GB page vRAM backing is opportunistic, with 1GB pages allocated on a best-effort basis. This opportunistic behavior extends to vSphere services such as HA and DRS, which might not preserve 1GB page vRAM backing.

Red Hat Enterprise Linux 6.7 and later systems support 2 MB and 1 GB huge pages, which can be allocated at boot or at runtime.
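On Linux each configured huge page size has its own pool under /sys/kernel/mm/hugepages/, in directories such as hugepages-2048kB and hugepages-1048576kB, each containing nr_hugepages and free_hugepages files. Boot-time allocation typically uses kernel parameters such as default_hugepagesz=, hugepagesz= and hugepages=, while runtime allocation writes a page count to a pool's nr_hugepages file as root (a runtime request for 1 GB pages may be only partly satisfied if memory is fragmented, so the value should be read back). The read-only sketch below lists the pools; the function names are hypothetical.

```python
import os

SYSFS_ROOT = "/sys/kernel/mm/hugepages"


def parse_pool_dir(name):
    """Return the page size in kB for names like 'hugepages-2048kB', else None."""
    prefix, suffix = "hugepages-", "kB"
    if not (name.startswith(prefix) and name.endswith(suffix)):
        return None
    digits = name[len(prefix):-len(suffix)]
    return int(digits) if digits.isdigit() else None


def read_count(path):
    with open(path) as f:
        return int(f.read().strip())


def hugepage_pools(root=SYSFS_ROOT):
    """Map page size (kB) to (nr_hugepages, free_hugepages) for each pool."""
    pools = {}
    if not os.path.isdir(root):
        return pools  # not Linux, or sysfs not mounted
    for entry in sorted(os.listdir(root)):
        size_kb = parse_pool_dir(entry)
        if size_kb is None:
            continue
        pool = os.path.join(root, entry)
        pools[size_kb] = (
            read_count(os.path.join(pool, "nr_hugepages")),
            read_count(os.path.join(pool, "free_hugepages")),
        )
    return pools


if __name__ == "__main__":
    for size_kb, (total, free) in sorted(hugepage_pools().items()):
        print(f"{size_kb} kB pages: {total} allocated, {free} free")
```

Both pools can coexist, but only the default size (reported as Hugepagesize in /proc/meminfo) is used by the shm*() interface.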

At the time of writing, Oracle workloads can take advantage of 2M OS huge pages; unfortunately, they cannot take advantage of 1GB huge pages, because the Oracle kernel code would need to be changed to support them.

All Oracle on vSphere white papers, including Oracle on VMware vSphere / VMware vSAN / VMware Cloud on AWS, best practices, deployment guides, and the workload characterization guide, can be found at Oracle on VMware Collateral – One Stop Shop.