In this blog series, we will be covering several aspects of Cross-VDC Networking inside of VMware vCloud Director 9.5. This was created by Daniel Paluszek, Abhinav Mishra, and Wissam Mahmassani.

With the release of VMware vCloud Director 9.5, which is packed with a lot of great new features, one of the significant additions is the introduction of Cross-VDC networking.

The intent of this blog series is to review the following:

- Introduction and capabilities of Cross-VDC networking

- Use cases

- Getting started with Cross-VDC networking

- Recommended high-level provider design

- Failure scenarios and considerations

- Demonstration videos

Before we get too far, let's define the terms we will use throughout this blog series.

Glossary:

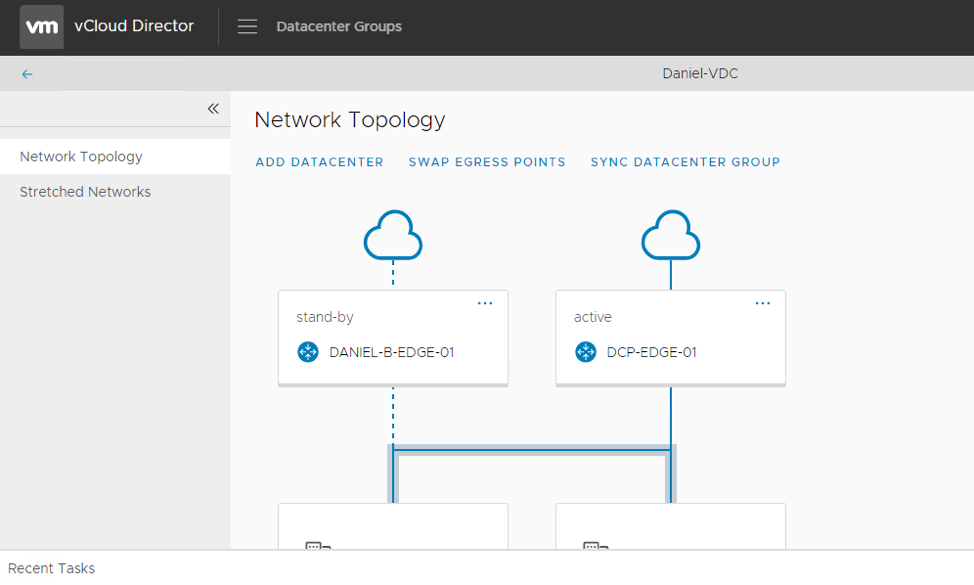

- Common Egress Points (Active/Standby Configuration): A single active egress point handles all North-South traffic by default. A standby egress point takes over when the active egress point is down.

- Egress Points Per Fault Domain (Active/Active Configuration): Multiple egress points with local egress. A local egress point is defined per fault domain, so traffic routes through its own fault domain's egress point by default.

- Egress Point: An Egress Point is an Org vDC Edge Gateway, used for North-South traffic. An Egress Point can either be an Active egress point or a standby egress point (when the Datacenter group is of type Common Egress Points).

- Datacenter Group: A Datacenter/VDC Group is a collection of Organization VDCs (up to 4) for Stretched Networking and defined points of Egress. There are two types of VDC Groups: Common Egress Points (Active/Standby) and Egress Points Per Fault Domain (Active/Active).

- Universal Router: A Universal Router is essentially a Universal DLR in NSX. When a VDC Group is created, a Tenant Universal Router is automatically created. There is a 1:1 mapping currently between a Datacenter Group and a Universal Router. The Universal Router allows for simple East-West connectivity for workloads connected to L2 Stretched Networks across sites. In addition, the Universal DLR is peered to the Egress Points, via iBGP during routing configuration (which is automatically created by vCD).

- Stretched Network: This is a network that is stretched across the Org VDCs (via NSX Universal Logical Switch). Workloads can be connected to these stretched networks. Only IPv4 is supported on a Stretched Network at this time. When a stretched network is created, a corresponding Org VDC Network that backs the stretched network is created for each Org VDC participating in the Datacenter Group. Only the Static IP Pools can be managed for these backing Org VDC Networks.

- Network Provider Scope: Network Provider Scope is equal to the fault domain that is used for routing. It corresponds to a Tenant Facing tag that the user can see when configuring routing at that given scope/fault domain. The Network Provider Scope needs to be unique across each vCenter/NSX instance across vCD Sites.

- Universal VXLAN Network Pool: This corresponds to the Universal Transport Zone. A Universal VXLAN Network Pool, where the Universal Transport Zone is essentially imported into vCD, needs to be created only once on each vCD Site.

- Control VM Parameters: This corresponds to the vCenter Resource Pool, Datastore, and HA Management Interface that is used for deploying the Control VM for the Universal Router on each fault domain/vCenter NSX Pairing. This needs to be configured on each vCenter/NSX Pairing just like the Network Provider Scope.
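To make the relationships between these terms a little more concrete, here is a minimal Python sketch of how they fit together. This is purely an illustration and not the vCD API or data model: the class names, the `fault_domain` field, and the `udlr-` naming are all assumptions we made up for the example. The only facts taken from the glossary are the limit of four Org VDCs per group, the two group types, and the 1:1 mapping between a Datacenter Group and its Universal Router.

```python
from dataclasses import dataclass, field
from enum import Enum

MAX_VDCS_PER_GROUP = 4  # limit stated in the glossary


class EgressType(Enum):
    COMMON = "active/standby"            # Common Egress Points
    PER_FAULT_DOMAIN = "active/active"   # Egress Points Per Fault Domain


@dataclass(frozen=True)
class OrgVdc:
    name: str
    fault_domain: str  # hypothetical: the Network Provider Scope backing this VDC


@dataclass
class DatacenterGroup:
    name: str
    egress_type: EgressType
    vdcs: list = field(default_factory=list)

    def __post_init__(self):
        # One Universal Router per group (1:1 mapping); the name is illustrative.
        self.universal_router = f"udlr-{self.name}"

    def add_vdc(self, vdc):
        if len(self.vdcs) >= MAX_VDCS_PER_GROUP:
            raise ValueError(
                f"a Datacenter Group holds at most {MAX_VDCS_PER_GROUP} Org VDCs"
            )
        self.vdcs.append(vdc)

    def fault_domains(self):
        # Distinct fault domains spanned by the group, in sorted order.
        return sorted({v.fault_domain for v in self.vdcs})
```

Adding a fifth Org VDC to a group raises a `ValueError` in this sketch, which mirrors the four-VDC limit described above.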

Introduction to Cross-VDC Networking

Let’s get started with what Cross-VDC networking is inside of vCloud Director 9.5. In essence, we can now use Cross-vCenter NSX to stretch Layer 2 networks (Stretched Networks) and provide routing and egress across Organization VDCs that reside in different sites. This allows us to provide self-service L2 capability for Organization Administrators between (or within) vCloud Director organization Virtual Data Centers (orgVDCs). All universal objects are propagated to the vCD API and can be consumed from the UI or API.

Before we go further, let’s talk about what we did prior to the 9.5 release and its integration with Cross-vCenter NSX. vCloud networks fell into two categories: networks created by vCD (various types of OrgVDC networks) and those created outside of, and then “adopted” by, vCD (External Networks). In prior versions, an OrgVDC network could be “stretched”, but only by sharing it within the scope of a single vCD instance. Similarly, an external network could be stretched between separate vCD instances, but neither instance had any insight into the stretch, which was managed purely by the Provider. vCD 9.5 changes that with the introduction of Cross-VDC Networking.

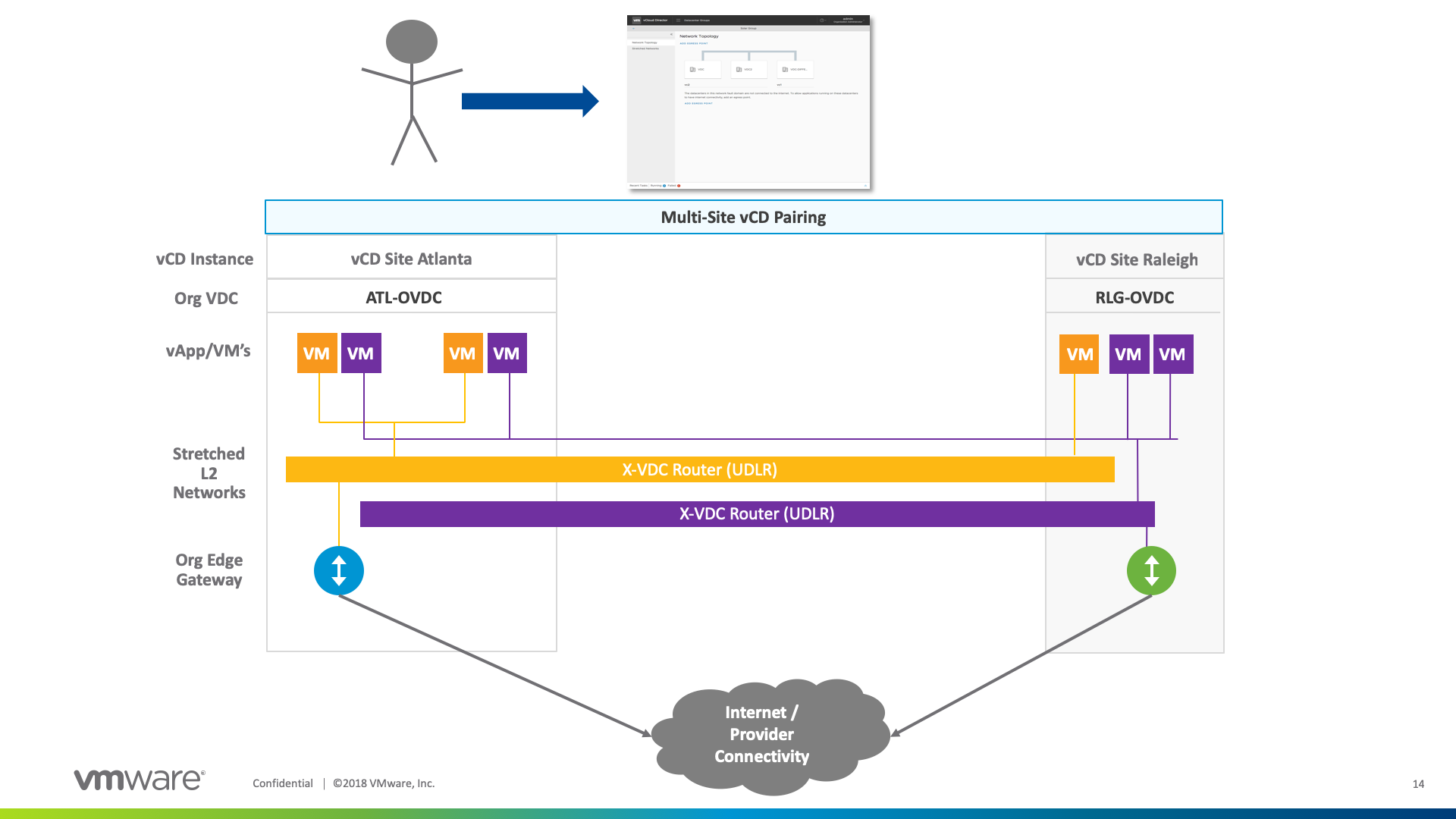

In this example, we can see a tenant that has access to two distinct sites by using vCloud Director Multi-Site management. From there, the tenant is able to create stretched L2 networks while setting up either Active/Standby or Active/Active egress points for these specific networks. These stretched networks connect to traditional vCD-managed Edge services and route traffic to the provider network (or Internet).

What’s unique in this approach is the abstraction of the underlying vCenter/NSX instance, as we are providing stretched networking between Org VDC constructs using Cross-VC NSX concepts such as the Universal Transport Zone, Universal Distributed Logical Router, and Universal Logical Switches. The end benefit is the continued flexibility of stretched networking between one or more vCloud Director instances. Moreover, when creating Egress Points, BGP routes are automatically plumbed to the Universal DLR and Egress Points, allowing for easy setup and management of routes across sites.

The same considerations apply as with Cross-vCenter NSX: storage and compute are independent between orgVDCs, and there is a requirement for less than 150 ms of round-trip latency between the two sites. Finally, the interconnect between the two sites must support a minimum MTU of 1600 to carry the VXLAN overlay.
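To see where the 1600 figure comes from, we can add up the VXLAN encapsulation overhead. The header sizes below are the standard ones for VXLAN over an IPv4 underlay; the function names and the `interconnect_ok` helper are hypothetical and only here to illustrate the two requirements mentioned above.

```python
# Bytes added in front of the tenant's IP packet when it is VXLAN-encapsulated
# over an IPv4 underlay (no inner VLAN tag).
INNER_ETHERNET = 14
VXLAN_HEADER = 8
UDP_HEADER = 8
OUTER_IPV4 = 20


def required_transport_mtu(inner_mtu=1500):
    """Smallest underlay IP MTU that carries an inner packet of inner_mtu bytes."""
    return inner_mtu + INNER_ETHERNET + VXLAN_HEADER + UDP_HEADER + OUTER_IPV4


def interconnect_ok(link_mtu, rtt_ms, min_mtu=1600, max_rtt_ms=150):
    """Check a site interconnect against the MTU and latency requirements."""
    return link_mtu >= min_mtu and rtt_ms < max_rtt_ms


if __name__ == "__main__":
    print(required_transport_mtu())   # 1550
    print(interconnect_ok(1600, 80))  # True
    print(interconnect_ok(1500, 80))  # False: MTU too small for the overlay
```

A standard 1500-byte workload MTU needs 1550 bytes on the underlay, so a 1600-byte interconnect leaves some headroom for extras such as an inner VLAN tag.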

Capabilities of Cross-VDC Networking

- Managed and created by the provider administrator or tenant administrator. The provider has the ability to define a fault domain for each NSX/VC Pairing.

- Ability to stretch L2 Networks across Org VDCs in a VDC Group (up to 4).

- vCloud Director takes care of the “heavy-lifting” of creating the Universal DLR when creating a VDC Group, which is comprised of two or more Org VDCs.

- The user also has the ability to configure the VDC Group to be Active-Active (Local Egress/Egress Points per fault domain), or Active-Standby (Common Egress across fault domains).

- vCloud Director automatically takes care of the BGP Routing between the Universal DLR and Edge Gateways when creating/managing Egress Points.

- When creating a stretched network, the L2 Stretched Network is created in all the VDCs that span the VDC Group. Expanding/Shrinking a VDC Group automatically expands or shrinks the Stretched Networks appropriately.

- Ability to utilize IP pools on a per site basis for IP address management inside of vCD.
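The last two capabilities can be sketched in a few lines of Python. Treat this as a rough illustration only: the `net@vdc` naming for backing networks and the idea of checking per-site pools for overlap are our own assumptions, not something vCD exposes in this form.

```python
from ipaddress import ip_address


def backing_networks(group_vdcs, stretched_networks):
    """One backing Org VDC network per (stretched network, participating VDC)."""
    return {
        net: [f"{net}@{vdc}" for vdc in group_vdcs]
        for net in stretched_networks
    }


def pools_overlap(pool_a, pool_b):
    """Each pool is an inclusive (start, end) pair of IPv4 address strings."""
    a_start, a_end = (ip_address(x) for x in pool_a)
    b_start, b_end = (ip_address(x) for x in pool_b)
    return a_start <= b_end and b_start <= a_end


if __name__ == "__main__":
    vdcs = ["vdc-site-a", "vdc-site-b"]
    print(backing_networks(vdcs, ["web"]))
    # Expanding the group adds a backing network for the new VDC.
    print(backing_networks(vdcs + ["vdc-site-c"], ["web"]))
    # Hypothetical per-site static pools carved out of one stretched subnet.
    print(pools_overlap(("192.168.10.10", "192.168.10.99"),
                        ("192.168.10.100", "192.168.10.199")))
```

Recomputing the mapping after adding or removing a VDC mimics how the stretched network follows the group membership, and keeping the per-site pools disjoint avoids handing out the same address from two sites on one L2 segment.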

Use Cases

The most important discussion is the use of Cross-VDC networking and how it pertains to customer requirements. In essence, we have seen Cross-vCenter NSX utilized for a multi-tiered application architecture that is typically based on traditional software development methods. For example, there may be a requirement for the same Layer 2 domain for the application or database layer. Therefore, the ability to provide that same Layer 2 domain between multiple sites can increase the overall availability of the solution.

The use cases for Cross-VDC networking revolve around the following:

- Consistent Logical Networking and Spanned Resource Pooling

- Application Availability, Mobility, and Migration

- Business Continuity and Disaster Recovery

Consistent Logical Networking and Spanned Resource Pooling

There is a significant advantage in simplifying networking between virtual data center constructs for tenants. The ease of establishing a set “floor plan” wherever your workloads reside can reduce the operational complexity of planning for future growth and scalability requirements.

From a resource pooling perspective, this allows for consistent networking between multiple vCloud Director instances. A workload does not need to be isolated to a single orgVDC; we can now span the network traffic between multiple vCD orgVDCs to balance load and improve utilization.

Application Availability, Mobility, and Migration

When utilizing an Active/Active Cross-VDC configuration, one could provide redundant services between multiple sites via site load balancer solutions. This can lead to higher application availability for mission-critical solutions while not being constrained to a specific site. Moreover, migration is greatly simplified because the L2 network already resides on the destination site. vCloud Availability for Cloud-to-Cloud can complement Cross-VDC by providing migration between orgVDCs.

Business Continuity and Disaster Recovery

With the ability to stretch an L2 network between two orgVDC constructs, tenants and providers can deliver the recovery functionality that disaster recovery solutions demand. Since the logical networking spans multiple orgVDCs, one can fail over workloads without any IP address changes.

Using the local egress functionality provides advanced control on how the egress (exiting) traffic routes within the VDC group. This allows a specific Org VDC Edge Gateway to be used for a set of Stretched Networks that are part of a VDC Group. In the event of a failed egress point, BGP weights will kick in and start routing traffic to the standby egress point.
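The failover behavior can be pictured with a small sketch. This is a simplified model and not how NSX actually evaluates routes: we assume each egress point advertises a route with a BGP weight, that the highest weight among the reachable egress points wins, and the names and weight values (60 and 30) are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class EgressPoint:
    name: str
    weight: int      # hypothetical BGP weight; higher is preferred
    up: bool = True  # whether the Edge Gateway is currently reachable


def select_egress(egress_points):
    """Return the reachable egress point with the highest weight, or None."""
    candidates = [e for e in egress_points if e.up]
    if not candidates:
        return None
    return max(candidates, key=lambda e: e.weight)


if __name__ == "__main__":
    active = EgressPoint("edge-site-a", weight=60)
    standby = EgressPoint("edge-site-b", weight=30)

    print(select_egress([active, standby]).name)  # edge-site-a
    active.up = False                             # simulate a failed egress point
    print(select_egress([active, standby]).name)  # edge-site-b
```

While the active egress point is up, its higher weight keeps North-South traffic on it; once it fails, the standby is the only remaining candidate and takes over.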

In the next blog post, we will review how to get started with Cross-VDC configuration inside of vCloud Director 9.5. Thanks!