This blog is an update of Josh Simons’ previous blog “How to Enable Compute Accelerators on vSphere 6.5 for Machine Learning and Other HPC Workloads”. It explains how to enable the Nvidia V100 GPU in passthrough mode on vSphere 6.0 p4 and later. The V100 has larger PCI BARs (Base Address Registers) than previous GPU models. The blog also presents performance results showing that a V100 GPU in passthrough mode performs very close to bare metal.

Preliminaries


To enable the Nvidia V100 GPU for passthrough, your host BIOS must be configured correctly. The virtual machine that will run these accelerated workloads must also meet specific requirements. This section describes these requirements.

VMware supports VMDirectPath I/O (passthrough) as an ESXi feature, and a device listed here may work correctly in passthrough mode. Even so, you should contact your device or system vendor if you require a formal statement of support for a particular device model.

Which Devices?

This article is relevant only if your PCI device maps memory regions whose sizes total more than 16GB. Devices in this class include the Nvidia K40m, K80, P100, and V100; Intel Xeon Phi; and some FPGA cards.

These instructions should not be necessary for the Nvidia K40c, K2, K20m, and AMD FirePro W9100 and S7150x2. For those cards, simply follow the published instructions for enabling passthrough devices under vSphere.

As a general rule, cards that require more than 16GB of memory mapping are high-end cards. Follow the instructions in this article to enable them for passthrough use within a virtual machine.

Host BIOS

Your host BIOS must be configured to support the large memory regions needed by these high-end PCI devices. To enable this, find the host BIOS setting for “above 4G decoding”, “memory mapped I/O above 4GB”, or “PCI 64 bit resource handling above 4G”, and enable it. The exact wording of this option varies by system vendor, though it is often found in the PCI section of the BIOS menu. Consult your system provider if necessary to enable this option.

Guest OS

To access these large memory mappings, your guest OS must boot with EFI. That is, you must enable EFI in the VM and then do an EFI installation of the guest OS.

Enabling High-end Devices

With the above requirements satisfied, you must add two entries to the VM’s VMX file. You can do this either by editing the file directly or by using the vSphere Client. The first entry is:

    pciPassthru.use64bitMMIO="TRUE"

Specifying the second entry requires a simple calculation. Sum the GPU memory sizes of all GPU devices(*) you intend to pass into the VM. Then round up to the next power of two above that sum; if the sum is already a power of two, use the following one. For example, to use passthrough with two 32 GB V100 devices, the sum is 32 + 32 = 64, and the next power of two above it is 128. Use this value in the second entry:

    pciPassthru.64bitMMIOSizeGB="128"
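The calculation above can be sketched in a few lines of Python. This is a hypothetical helper, not a VMware tool. It assumes GPU memory sizes are given in whole GB. Following the worked example, where 64 becomes 128, it picks the smallest power of two strictly greater than the sum. It then produces both VMX entries in the form shown above.

```python
from typing import List, Sequence


def next_power_of_two_above(n: int) -> int:
    """Return the smallest power of two strictly greater than n (n >= 0)."""
    # For n >= 0, 1 << n.bit_length() is always greater than n and is the
    # smallest such power of two: 64 -> 128, 48 -> 64, 24 -> 32.
    return 1 << n.bit_length()


def mmio_size_gb(gpu_memory_gb: Sequence[int]) -> int:
    """Value for pciPassthru.64bitMMIOSizeGB, following the rule in the text."""
    return next_power_of_two_above(sum(gpu_memory_gb))


def vmx_entries(gpu_memory_gb: Sequence[int]) -> List[str]:
    """Both VMX lines needed for large-BAR passthrough."""
    return [
        'pciPassthru.use64bitMMIO="TRUE"',
        f'pciPassthru.64bitMMIOSizeGB="{mmio_size_gb(gpu_memory_gb)}"',
    ]


if __name__ == "__main__":
    # Two 32 GB V100 devices, as in the example above.
    for line in vmx_entries([32, 32]):
        print(line)
```

Running it prints `pciPassthru.use64bitMMIO="TRUE"` followed by `pciPassthru.64bitMMIOSizeGB="128"`.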

With these two changes to the VMX file, follow the standard vSphere instructions for enabling passthrough devices at the host level and for specifying which devices should be passed into your VM. The VM should now boot correctly with your device(s) in passthrough mode.

(*) Note that some products, like the Nvidia K80 GPU, have two PCI devices on each physical PCI card. Count the number of PCI devices you intend to pass into the VM and not the number of PCI cards. For example, if you intend to use both of the K80’s GPU devices within a single VM, then your device count is two, not one.
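To make the footnote concrete, the sketch below expands physical cards into the PCI devices they expose before summing memory. The `Card` type and its fields are illustrative assumptions, not part of any vSphere API. The K80 entry reflects its two 12 GB GPU devices. Passing a whole K80 card therefore counts as two devices totalling 24 GB, which the same power-of-two rule turns into 32.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Card:
    model: str
    devices_per_card: int      # PCI devices (GPUs) exposed by one physical card
    memory_per_device_gb: int  # memory of each of those devices


def device_memory_list(cards: List[Card]) -> List[int]:
    """One entry per PCI device passed into the VM, not one per card."""
    memory: List[int] = []
    for card in cards:
        memory.extend([card.memory_per_device_gb] * card.devices_per_card)
    return memory


def mmio_size_gb(cards: List[Card]) -> int:
    total = sum(device_memory_list(cards))
    # Smallest power of two strictly greater than the total.
    return 1 << total.bit_length()


if __name__ == "__main__":
    k80 = Card("K80", devices_per_card=2, memory_per_device_gb=12)
    devices = device_memory_list([k80])
    print(len(devices), sum(devices), mmio_size_gb([k80]))  # 2 24 32
```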

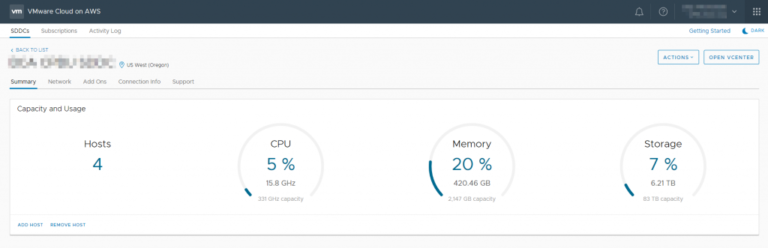

Performance evaluation

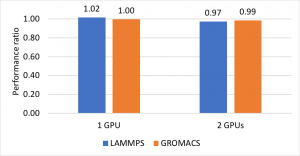

This section presents a preliminary performance evaluation comparing GPU passthrough on vSphere 6.7 with bare metal. The test server is a Dell R740 with two 10-core sockets, 192 GB of memory, and two V100 GPUs. We used two popular HPC applications, LAMMPS and GROMACS.

We measured the performance ratio, defined as the bare-metal execution time divided by the virtual execution time (higher is better). For each application, we tested 1 GPU and 2 GPUs separately. As Figure 1 shows, the performance gap is below 3% in every case. With 1 V100 GPU, LAMMPS even achieves better performance in the VM than on bare metal.
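The metric used here is simple enough to state in code. In the sketch below, `performance_ratio` divides bare-metal time by virtual time, so values above 1.0 mean the VM was faster. The gap is expressed as a percentage of bare-metal performance, and a negative gap means the VM won. The timings in the example are made up solely to illustrate the arithmetic; they are not measurements from this test.

```python
def performance_ratio(bare_metal_seconds: float, virtual_seconds: float) -> float:
    """Bare-metal execution time divided by virtual execution time (higher is better)."""
    if bare_metal_seconds <= 0 or virtual_seconds <= 0:
        raise ValueError("execution times must be positive")
    return bare_metal_seconds / virtual_seconds


def gap_percent(ratio: float) -> float:
    """Shortfall relative to bare metal, in percent; negative means the VM was faster."""
    return (1.0 - ratio) * 100.0


if __name__ == "__main__":
    # Hypothetical timings in seconds, for illustration only.
    for bare, virt in [(100.0, 102.0), (100.0, 99.0)]:
        r = performance_ratio(bare, virt)
        print(f"ratio={r:.3f} gap={gap_percent(r):+.2f}%")
```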

Figure 1: GPU performance comparison between passthrough mode in a VM and bare metal. 1 and 2 GPUs were tested separately.

Troubleshooting

If you have followed the above instructions and your VM still does not boot correctly with the devices enabled, the material in this section may be helpful. If you have tried the suggestions below and are still having problems, please contact us and we’ll be happy to help you.

Mapping Issue

If you see an error similar to the following in the VM’s vmware.log file:

    I120: PCIPassthru: 0000:82:00.0 : Device BAR 0 requested 64-bit memory address that exceeded MPN type (62105227100160)

then your BIOS settings do not meet ESXi requirements for enabling this type of passthrough device. Specifically, ESXi 6.0 p4 through ESXi 6.5 require that all memory mapped for PCI devices be below 16TB.

It may be possible to work around this problem if your BIOS lets you control how high in the host’s memory address space PCI memory regions are mapped. Some manufacturers, SuperMicro for example, have BIOS options for this. On SuperMicro systems, the MMIOHBase parameter can be lowered from its default of 56TB. Sugon systems also have a similar (hidden) BIOS setting. Check with your system vendor to learn whether your BIOS supports this remapping feature.

Alternatively, upgrade to ESXi 6.5 U1 or later, which removes the 16TB limitation.
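As a rough check of the arithmetic, the sketch below extracts the device address, BAR index, and number from a log line shaped like the one above. It then compares the number with the 16TB ceiling.

Two assumptions are built in. First, the number in parentheses is taken to be the requested address in bytes. This is consistent with the 56TB MMIOHBase default mentioned above, since it works out to about 56.5 TiB. Second, "TB" is taken to mean 2^40 bytes.

The regular expression is written against this single example, not against a documented log format.

```python
import re
from typing import Optional

TIB = 2 ** 40
LIMIT_BYTES = 16 * TIB  # ESXi 6.0 p4 through 6.5: PCI MMIO must sit below 16TB

# Pattern derived only from the example log line above (an assumption).
BAR_ERROR = re.compile(
    r"PCIPassthru: (?P<bdf>\S+) : Device BAR (?P<bar>\d+) requested .*?\((?P<addr>\d+)\)"
)


def parse_bar_error(line: str) -> Optional[dict]:
    """Extract the device address (BDF), BAR index and requested address, if present."""
    m = BAR_ERROR.search(line)
    if m is None:
        return None
    return {"bdf": m["bdf"], "bar": int(m["bar"]), "address": int(m["addr"])}


def above_16tb(address: int) -> bool:
    """True if the address is not below the 16TB limit."""
    return address >= LIMIT_BYTES


if __name__ == "__main__":
    line = ("I120: PCIPassthru: 0000:82:00.0 : Device BAR 0 requested 64-bit "
            "memory address that exceeded MPN type (62105227100160)")
    info = parse_bar_error(line)
    if info is not None:
        # Prints: 0000:82:00.0 56.484375 True
        print(info["bdf"], info["address"] / TIB, above_16tb(info["address"]))
```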

Incorrect Configuration

An error in the vmware.log file of the following form:

    2016-07-07T09:18:37.624Z| vmx| I120: PCIPassthru: total number of pages needed (2097186) exceeds limit (917504), failing

indicates one of two problems. Either you have not correctly enabled “above 4GB” mappings in your host BIOS, as described in the “Host BIOS” section above, or you have not specified the VMX entries correctly.
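Because a mistyped VMX entry produces the same error, a quick offline check of the .vmx file can rule out that cause. The sketch below is a hypothetical helper, not a VMware utility. It assumes the usual `key = "value"` line format and treats keys case-insensitively.

It checks the three settings this article relies on:

- EFI firmware (`firmware = "efi"`)
- `pciPassthru.use64bitMMIO`
- a `pciPassthru.64bitMMIOSizeGB` value at least as large as the one computed with the power-of-two rule

It cannot detect the BIOS side of the problem.

```python
from typing import Dict, List


def parse_vmx(text: str) -> Dict[str, str]:
    """Parse key = "value" lines; keys are lower-cased for lookup."""
    entries: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Only straight double quotes are stripped, so a value pasted with
        # typographic quotes will not compare equal to TRUE.
        entries[key.strip().lower()] = value.strip().strip('"')
    return entries


def check_large_bar_config(text: str, required_mmio_gb: int) -> List[str]:
    """Return a list of problems; an empty list means the entries look right."""
    vmx = parse_vmx(text)
    problems: List[str] = []
    if vmx.get("firmware", "").lower() != "efi":
        problems.append('firmware is not "efi" (the guest must boot with EFI)')
    if vmx.get("pcipassthru.use64bitmmio", "").upper() != "TRUE":
        problems.append('pciPassthru.use64bitMMIO is not "TRUE"')
    size = vmx.get("pcipassthru.64bitmmiosizegb", "")
    if not size.isdigit():
        problems.append("pciPassthru.64bitMMIOSizeGB is missing or not a whole number")
    elif int(size) < required_mmio_gb:
        problems.append(
            f"pciPassthru.64bitMMIOSizeGB={size} is below the required {required_mmio_gb}"
        )
    return problems


if __name__ == "__main__":
    sample = '''firmware = "efi"
pciPassthru.use64bitMMIO = "TRUE"
pciPassthru.64bitMMIOSizeGB = "64"
'''
    for problem in check_large_bar_config(sample, required_mmio_gb=128):
        print(problem)
```

For the sample, only the undersized `pciPassthru.64bitMMIOSizeGB` is reported.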

Cannot Use Device

If you have followed all of the above instructions and your VM has booted correctly, but you see a message similar to the following when running the nvidia-smi utility in your guest OS:

    Unable to determine the device handle for GPU 0000:13:00.0: Unknown Error

then we suggest contacting Nvidia directly or searching the web for this message to find additional information.

Author: Michael Cui