This is part 3 of a series of blog articles on the subject of using GPUs with VMware vSphere.

Part 1 of this series presents an overview of the various options for using GPUs on vSphere.

Part 2 describes the DirectPath I/O (passthrough) mechanism for GPUs.

Part 3 gives details on setting up the NVIDIA Virtual GPU (vGPU) technology for GPUs on vSphere.

Part 4 explores the setup for the Bitfusion FlexDirect method of using GPUs.

In this article, we describe the NVIDIA vGPU (formerly “Grid”) method for using GPU devices on vSphere. The focus in this blog is on the use of GPUs for compute workloads (such as for machine learning, deep learning and high performance computing applications) and we are not looking at GPU usage for virtual desktop infrastructure (VDI) here.

The method of GPU usage on vSphere described here makes use of the products within the NVIDIA vGPU family. This family includes the “NVIDIA Virtual Compute Server” (vCS) and the “NVIDIA Quadro Virtual Datacenter Workstation” (vDWS) products for GPU access and management on vSphere, as well as other products. Here, we use the term “NVIDIA vGPU” as a synonym for whichever software product you choose from the vGPU family. NVIDIA recommends vCS for machine learning and AI workloads; vDWS was used for that purpose before vCS appeared on the market. These are licensed software products from NVIDIA.

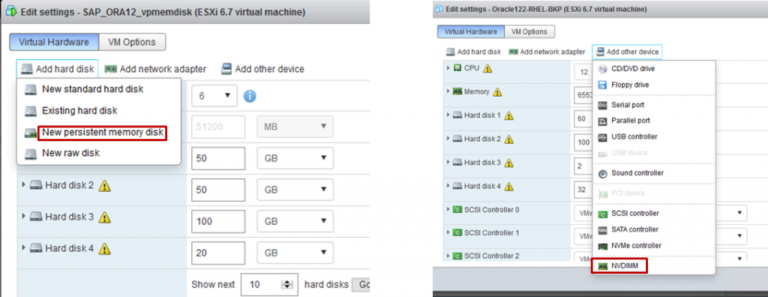

Figure 1: Parts of the NVIDIA vGPU product shown in the ESXi Hypervisor and in virtual machines

Figure 1 shows the relationship of the parts of the NVIDIA vGPU product to each other in the overall vSphere and virtual machine architecture.

The NVIDIA vGPU software includes two separate components:

- The NVIDIA Virtual GPU Manager, which is loaded as a VMware Installation Bundle (VIB) into the vSphere ESXi hypervisor itself, and

- A separate guest OS NVIDIA vGPU driver, which is installed within the guest operating system of your virtual machine (the “guest VM driver”).

Using the NVIDIA vGPU technology with vSphere allows you to choose between dedicating a full GPU device to one virtual machine and allowing more than one virtual machine to share a GPU device.

The reasons for choosing this NVIDIA vGPU option are:

- we know that the applications in your VMs do not need the power of a full GPU;

- there is a limited number of GPU devices and we want them to be available to more than one team of users simultaneously;

- we sometimes want to dedicate a full GPU device to one VM, but at other times allow a VM to use only part of a GPU.

The released versions of the NVIDIA vGPU Manager and the guest VM driver that you install must be compatible with each other. For the supported combinations of software versions, vSphere versions and hardware, consult the current NVIDIA vGPU Release Notes (listed in the References section at the end of this article).

1. NVIDIA vGPU Setup on the vSphere Host Server


This section describes the part of the setup process that is specific to the vSphere ESXi host server. To set up the NVIDIA vGPU environment you will need:

- The licensed NVIDIA vGPU product (including the VIB for vSphere and the guest OS driver)

- Administrator login access to the console of your vSphere/ESXi machine

VMware recommends that you choose vSphere 6.7 for this work. Choosing vSphere 6.7 Update 1 allows you to use vMotion with your GPU-enabled VMs. If you choose vSphere 6.5, ensure that you are on Update 1 before proceeding.

Carefully review the prerequisites and other details in the NVIDIA vGPU Software User Guide.

The host server part of the NVIDIA vGPU installation uses the “vib install” technique (the esxcli software vib install command) that vSphere provides for installing drivers into the ESXi hypervisor itself. For more information on working with VIBs, consult the VMware vSphere documentation.

The NVIDIA vGPU Manager is contained in the VIB package that you download from NVIDIA’s website. The package can be found by searching for the NVIDIA Quadro Virtual Datacenter Workstation (vDWS) products on the NVIDIA site.

To install the NVIDIA vGPU Manager software into the vSphere ESXi hypervisor follow the procedure below.

1.1 Set the GPU Device to vGPU Mode Using the vSphere Host Graphics Setting

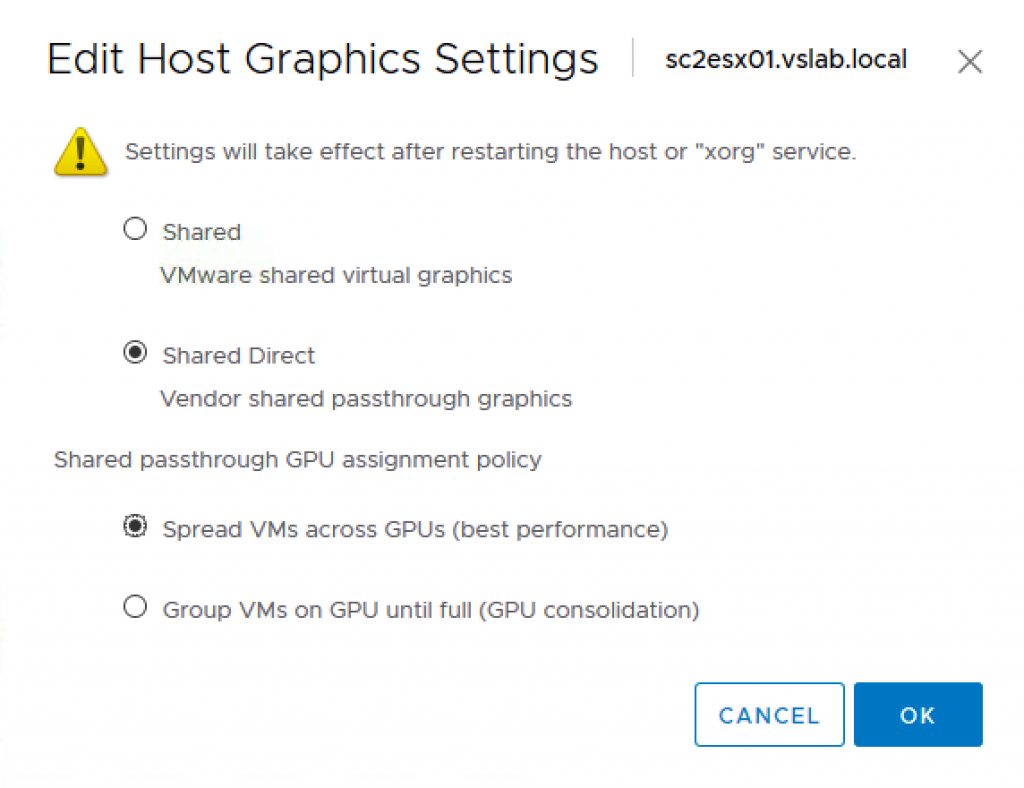

A GPU card can be configured in one of two modes: vSGA (shared virtual graphics, shown as “Shared” in the vSphere Client) and vGPU (shown as “Shared Direct”). The NVIDIA card should be configured in vGPU mode. This is specifically for use of the GPU in compute workloads, such as machine learning or high performance computing applications.

Access the ESXi host server either through the ESXi Shell or through SSH. SSH is disabled by default, so you will first need to enable it using the ESXi management console. To enable vGPU mode on the ESXi host, execute this command:

# esxcli graphics host set --default-type SharedPassthru

You can also reach this setting in the vSphere Client by selecting your host server and navigating to:

“Configure -> Hardware -> Graphics -> Host Graphics tab -> Edit”

A server reboot is required once the setting has been changed. The settings should appear as shown in Figure 2 below.

Figure 2: Edit Host Graphics screen for a Host Server in the vSphere Client

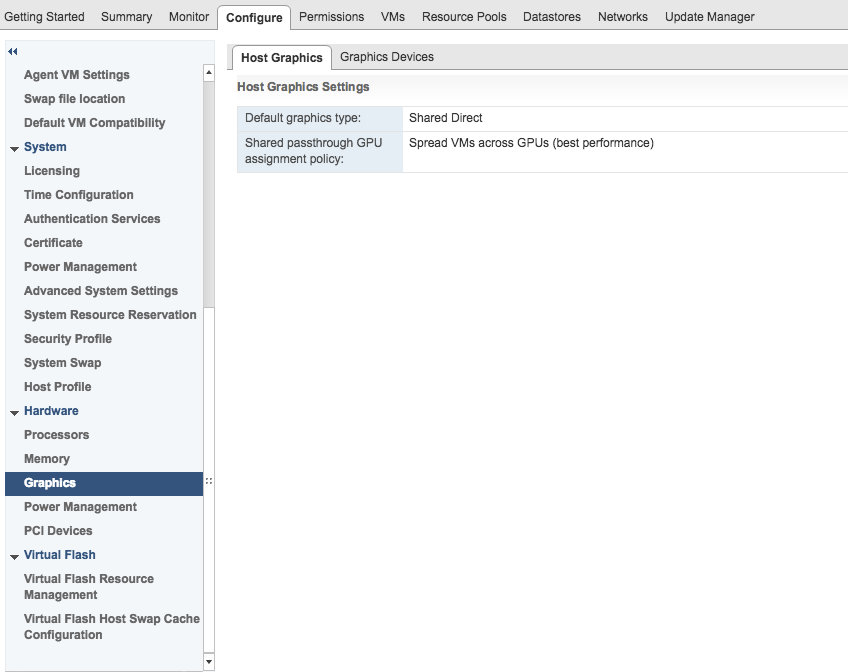

1.2 Check the Host Graphics Settings

To check from the command line that the settings have taken effect, type:

# esxcli graphics host get

This command should produce output as follows:

Default Graphics Type: SharedPassthru
Shared Passthru Assignment Policy: Performance

These settings can also be seen in the vSphere Client, as shown in Figure 3 below.

Figure 3: Host Graphics checking in the vSphere Client
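If you script the host setup, the command-line check above can be automated. The sketch below is a hypothetical helper (host_graphics_is_shared_direct is our own name, not an NVIDIA or VMware tool) that reads the output of esxcli graphics host get on standard input and succeeds only when the default graphics type is SharedPassthru. It assumes the "Name: Value" output format shown above.

```sh
#!/bin/sh
# Hypothetical helper: succeed only if "esxcli graphics host get" output
# (read on stdin) reports SharedPassthru ("Shared Direct", i.e. vGPU mode)
# as the default graphics type. Assumes "Name: Value" lines as shown above.
host_graphics_is_shared_direct() {
    # Take the text after "Default Graphics Type:" and trim surrounding blanks.
    hg_type=$(awk -F: '/^[ \t]*Default Graphics Type/ { gsub(/^[ \t]+|[ \t]+$/, "", $2); print $2 }')
    [ "$hg_type" = "SharedPassthru" ]
}

# Usage on the ESXi host:
#   esxcli graphics host get | host_graphics_is_shared_direct && echo "vGPU mode is set"
```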

1.3 Install the NVIDIA vGPU Manager VIB into the ESXi Hypervisor

Before installing the VIB, place the ESXi host server into maintenance mode (that is, all of its virtual machines are moved away or quiesced):

# esxcli system maintenanceMode set --enable true

Note: Ensure that you install the VIB AFTER enabling vGPU mode on your ESXi host. If you instead enable “Shared Direct” for this device in the vSphere Client after the VIB has been installed, the setting will not take effect.

To install the VIB, use a command similar to the following (the path to your VIB may differ):

# esxcli software vib install -v /vmfs/volumes/ARL-ESX14-DS1/NVIDIA/NVIDIA-VMware_ESXi_6.7_Host_Driver_390.42-1OEM.670.0.0.7535516.vib

This command produces the following output:

Installation Result
Message: Operation finished successfully.
Reboot Required: false
VIBs Installed: NVIDIA_bootbank_NVIDIA-VMware_ESXi_6.7_Host_Driver_390.42-1OEM.670.0.0.7535516
VIBs Removed:
VIBs Skipped:

Take the ESXi host server out of maintenance mode using this command:

# esxcli system maintenanceMode set --enable false
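The maintenance-mode bracket around the VIB installation can be wrapped in a small script so that the steps always run in order. This is a sketch only: install_vgpu_vib is a hypothetical name, and it assumes you pass the absolute datastore path of the VIB, as in the example above. If the installation fails, it deliberately leaves the host in maintenance mode so that you can investigate before workloads return.

```sh
#!/bin/sh
# Hypothetical wrapper: install the NVIDIA vGPU Manager VIB inside a
# maintenance-mode window on an ESXi host.
install_vgpu_vib() {
    vib_path=$1
    case "$vib_path" in
        /*) ;;  # absolute path such as /vmfs/volumes/<datastore>/...
        *) echo "usage: install_vgpu_vib /absolute/path/to/driver.vib" >&2; return 2 ;;
    esac

    esxcli system maintenanceMode set --enable true || return 1

    if ! esxcli software vib install -v "$vib_path"; then
        # Stay in maintenance mode so no VMs return to a half-configured host.
        echo "VIB installation failed; host left in maintenance mode" >&2
        return 1
    fi

    esxcli system maintenanceMode set --enable false
}

# Usage on the ESXi host:
#   install_vgpu_vib /vmfs/volumes/ARL-ESX14-DS1/NVIDIA/NVIDIA-VMware_ESXi_6.7_Host_Driver_390.42-1OEM.670.0.0.7535516.vib
```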

1.4 List the VIB that was installed in the ESXi Hypervisor

To list the VIBs installed on the ESXi host and ensure that the NVIDIA VIB was installed correctly, use the command:

# esxcli software vib list | grep -i NVIDIA

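Sample output (this example was captured on a vSphere 6.5 host with an earlier driver, so the version differs from the VIB installed above):

NVIDIA-VMware_ESXi_6.5_Host_Driver 384.43-1OEM.650.0.0.4598673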
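Because the host and guest components must match, it helps to pull the driver version out of the VIB listing. A hedged sketch, assuming the column layout shown above (the VIB name first, then a version of the form driver-build); nvidia_vib_version is a hypothetical helper name.

```sh
#!/bin/sh
# Hypothetical helper: print the NVIDIA driver version (e.g. 384.43) from
# "esxcli software vib list" output read on stdin.
nvidia_vib_version() {
    # Column 1 is the VIB name, column 2 its version ("384.43-1OEM...");
    # keep only the part before the first hyphen of the first NVIDIA VIB.
    awk '$1 ~ /^NVIDIA/ { split($2, v, "-"); print v[1]; exit }'
}

# Usage on the ESXi host:
#   esxcli software vib list | nvidia_vib_version
```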

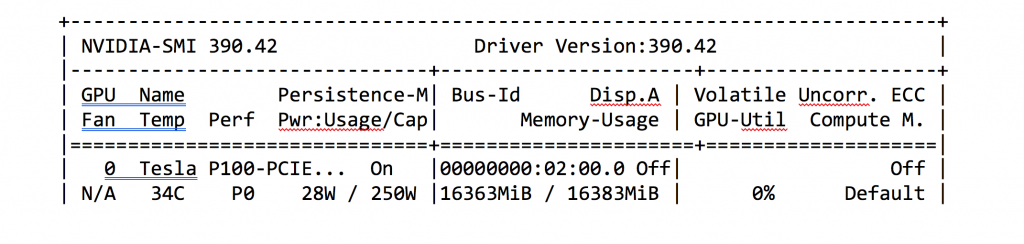

1.5 Check the NVIDIA Driver Operation

To confirm that the GPU card and ESXi are working together correctly, use the command:

# nvidia-smi

Figure 4: Tabular output from the nvidia-smi command showing details of the NVIDIA vGPU setup

1.6 Check the GPU Virtualization Mode

To check the GPU virtualization mode, use the command:

# nvidia-smi -q | grep -i virtualization

GPU Virtualization Mode
Virtualization mode : Host VGPU
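On hosts with several GPUs, the same check can be scripted. This sketch (gpu_virtualization_mode is a hypothetical helper) prints the value of every "Virtualization Mode" field in nvidia-smi -q output, one line per GPU, assuming the "Name : Value" layout shown above; on a correctly configured host every line should read Host VGPU.

```sh
#!/bin/sh
# Hypothetical helper: print the "Virtualization Mode" value for each GPU
# found in "nvidia-smi -q" output on stdin. The section header line has no
# " : " separator, so only the value lines are printed.
gpu_virtualization_mode() {
    awk -F' : ' 'tolower($1) ~ /virtualization mode/ && NF > 1 {
        sub(/[ \t]+$/, "", $2); print $2 }'
}

# Usage on the ESXi host:
#   nvidia-smi -q | gpu_virtualization_mode
```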

1.7 Disabling Error Correcting Code (ECC)

This section applies only to versions of NVIDIA vGPU software earlier than release 9.0 (which shipped in June 2019). ECC is supported from release 9.0 onward, so if you are using release 9.0 or later you can skip this section (1.7).

NVIDIA GPUs such as the Tesla V100 (Volta architecture), the Tesla P100 and P40 (Pascal architecture), and the Tesla M6 and M60 (Maxwell architecture) support ECC memory for improved data integrity. However, NVIDIA vGPU software releases before 9.0 do not support ECC, so with those releases you must ensure that ECC memory is disabled on all GPUs. Once the NVIDIA vGPU Manager is installed into vSphere ESXi, issue the following command to disable ECC on ESXi:

# nvidia-smi -e 0

Disabled ECC support for GPU 0000….
All done.

Now check that the ECC mode is disabled:

# nvidia-smi -q

ECC Mode
Current : Disabled
Pending : Disabled
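When several GPUs are installed, reading the full nvidia-smi -q output is tedious. The sketch below (ecc_all_disabled is a hypothetical helper, assuming the ECC Mode / Current / Pending layout shown above) succeeds only if every GPU reports ECC as disabled in both the current and the pending state. A Pending value that differs from Current means the change takes effect only after a reboot.

```sh
#!/bin/sh
# Hypothetical helper: succeed only if every "ECC Mode" block in
# "nvidia-smi -q" output (read on stdin) shows Current and Pending as Disabled.
# Any other value, including "N/A", counts as not disabled. The block header
# is matched case-insensitively to be safe across nvidia-smi versions.
ecc_all_disabled() {
    awk '
        tolower($0) ~ /ecc mode/ { in_ecc = 1; found = 1; next }
        in_ecc && /Current|Pending/ {
            split($0, kv, ":"); val = kv[2]; gsub(/[ \t]/, "", val)
            if (val != "Disabled") bad = 1
            if ($0 ~ /Pending/) in_ecc = 0   # Pending closes the block
        }
        END { if (found && !bad) exit 0; exit 1 }
    '
}

# Usage on the ESXi host:
#   nvidia-smi -q | ecc_all_disabled && echo "ECC disabled on all GPUs"
```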

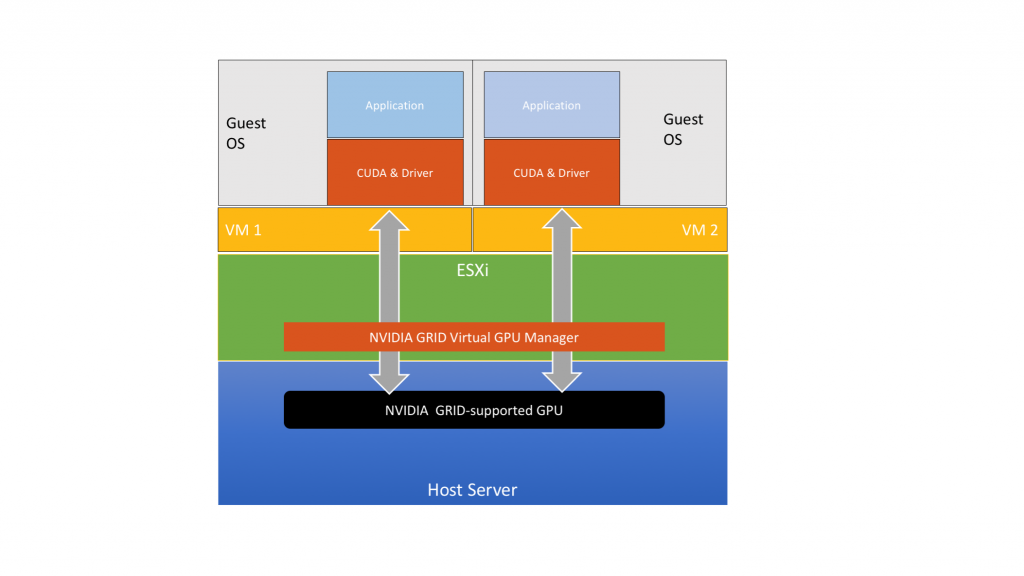

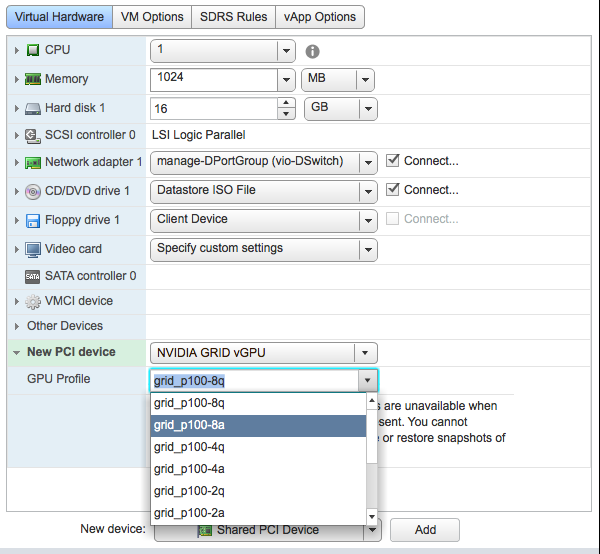

2. Choosing the vGPU Profile for the Virtual Machine

Once the NVIDIA vGPU Manager is operating correctly, you can choose a vGPU profile for the virtual machine. A vGPU profile lets you either dedicate a GPU to one virtual machine or share it with other virtual machines. When there is one physical GPU card on a host server, all virtual machines on that server that require access to the GPU will use the same vGPU profile.

With the earlier vDWS product in the NVIDIA vGPU family, only one vGPU profile was allowed per VM. This restriction was lifted with the NVIDIA Virtual Compute Server (vCS) product, which shipped in 2019: with vCS on vSphere, one VM can have more than one vGPU profile. This feature requires vSphere 6.7 Update 3 or later, and it allows one VM to use more than one physical GPU through multiple vGPU profiles.

In the vSphere Client, right-click the VM and choose “Edit Settings” from the menu. Then choose the “Virtual Hardware” tab. You can then use the “GPU Profile” dropdown menu to choose a particular profile from the set presented. The number immediately before the final letter of the profile name (for example, the 8 in grid_p100-8q) is the amount of GPU memory, in GB, that the profile reserves for your VM.

Figure 5: Choosing the vGPU Profile for a VM in the vSphere Client

One example vGPU profile we can choose from the list above is grid_p100-8q. This profile allows the VM to use at most 8 GB of the physical GPU’s memory (16 GB in total). Two separate virtual machines on the host server with this profile may therefore share the same physical GPU.

By choosing the vGPU profile that assigns the full memory of the GPU to a VM, we can then dedicate that GPU in full to that VM, for example using the profile named “grid_p100-16q” in the case of a GPU with 16 GB of memory.
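The arithmetic behind profile sharing is simple: the frame buffer size encoded in the profile name, divided into the GPU's total memory, typically gives the number of VMs that can share one physical GPU with that profile. A sketch, assuming profile names of the form grid_gpu-GB plus a letter, as in the examples above; profile_fb_gb and vms_per_gpu are hypothetical helper names, and sub-gigabyte profiles (a 0 before the letter) are deliberately rejected.

```sh
#!/bin/sh
# Hypothetical helpers for vGPU profile names such as grid_p100-8q.

# Print the frame buffer size in GB encoded in the profile name
# (the digits between the last "-" and the trailing letter).
profile_fb_gb() {
    printf '%s\n' "$1" | sed -n 's/^.*-\([0-9][0-9]*\)[A-Za-z]$/\1/p'
}

# vms_per_gpu PROFILE TOTAL_GB: print how many VMs with PROFILE fit on one GPU.
vms_per_gpu() {
    vpg_fb=$(profile_fb_gb "$1")
    if [ -z "$vpg_fb" ] || [ "$vpg_fb" -eq 0 ]; then
        echo "unsupported profile name: $1" >&2
        return 1
    fi
    echo $(( $2 / vpg_fb ))
}

# Usage (16 GB Tesla P100):
#   vms_per_gpu grid_p100-8q 16    # 2 VMs share the GPU
#   vms_per_gpu grid_p100-16q 16   # 1 VM: the whole GPU is dedicated
```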

3. VM Guest Operating System vGPU Driver Installation

Consult the NVIDIA vGPU Software User Guide for detailed instructions on this process.

Note: Ensure that the NVIDIA vGPU Manager version that was installed into the ESXi hypervisor is exactly the same as the driver version you are installing into the guest OS for your VM.
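A version mismatch between the two components is an easy mistake to make, so you may want to compare the version numbers embedded in the two package file names before installing anything. A minimal sketch, assuming the file-name patterns used in this article (..._Host_Driver_version-build.vib on the host and NVIDIA-Linux-x86_64-version-grid.run in the guest); the helper names are hypothetical.

```sh
#!/bin/sh
# Hypothetical helpers: extract the driver version from NVIDIA vGPU package
# file names and check that the host and guest packages match.

# NVIDIA-VMware_ESXi_6.7_Host_Driver_390.42-1OEM.670.0.0.7535516.vib -> 390.42
vib_file_version() {
    basename "$1" | sed -n 's/^.*_Host_Driver_\([0-9][0-9.]*\)-.*\.vib$/\1/p'
}

# NVIDIA-Linux-x86_64-390.42-grid.run -> 390.42
run_file_version() {
    basename "$1" | sed -n 's/^NVIDIA-Linux-x86_64-\([0-9][0-9.]*\)-grid\.run$/\1/p'
}

# Succeed only if both files carry the same, non-empty driver version.
same_driver_version() {
    host_v=$(vib_file_version "$1")
    guest_v=$(run_file_version "$2")
    [ -n "$host_v" ] && [ "$host_v" = "$guest_v" ]
}

# Usage:
#   same_driver_version NVIDIA-VMware_ESXi_6.7_Host_Driver_390.42-1OEM.670.0.0.7535516.vib \
#       NVIDIA-Linux-x86_64-390.42-grid.run && echo "versions match"
```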

The following example installation steps are detailed for an Ubuntu guest operating system in a virtual machine. The process would look very similar for another Linux flavor of guest OS, such as CentOS, though the individual commands may differ.

3.1 Developer Tools Installation

Ensure that developer tools such as “gcc” are installed, using the following commands:

# sudo apt update

# sudo apt upgrade

# sudo apt install build-essential

3.2 Prepare the Installation File

Download the “.run” file for the NVIDIA vGPU Linux guest VM driver from the NVIDIA site.

NOTE: This is a special driver that comes with the NVIDIA vGPU software; it is not a stock NVIDIA driver of the kind available outside that product.

Copy the NVIDIA vGPU Linux driver package (for example the NVIDIA-Linux-x86_64-390.42-grid.run file) into the Linux VM’s file system.

3.3 Exit from the X Window Server

Before running the driver installer, ensure that you have exited from the X Window server in the VM and terminated all OpenGL applications.

On Red Hat Enterprise Linux and CentOS systems, exit the X server by switching the guest OS to runlevel 3:

# sudo init 3

On an Ubuntu system, first switch to a console login prompt using CTRL-ALT-F1. Then log in and stop the display manager:

# sudo service lightdm stop

3.4 Install the NVIDIA Linux vGPU Driver

Install the NVIDIA Linux vGPU Driver from a console shell:

# chmod +x NVIDIA-Linux-x86_64-390.42-grid.run

# sudo sh ./NVIDIA-Linux-x86_64-390.42-grid.run

The installer may print messages about X Window issues; you can ignore these. Accept the license agreement to continue.

Confirm the setup with the command:

# nvidia-smi

This produces an output table that is similar to that seen in Figure 4 above.
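Beyond the visual check, you can confirm the exact driver version the guest is running with the --query-gpu option of nvidia-smi. In the sketch below, guest_driver_is is a hypothetical helper, and 390.42 is simply the example version used throughout this article.

```sh
#!/bin/sh
# Hypothetical helper: succeed only if every GPU visible in the guest reports
# the expected driver version.
guest_driver_is() {
    expected=$1
    versions=$(nvidia-smi --query-gpu=driver_version --format=csv,noheader) || return 1
    [ -n "$versions" ] || return 1
    # One line per vGPU; fail if any line differs from the expected version.
    ! printf '%s\n' "$versions" | grep -v -x -F "$expected" >/dev/null
}

# Usage inside the VM:
#   guest_driver_is 390.42 && echo "guest driver matches the host VIB"
```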

3.5 Applying the License

Add your license server’s address to the /etc/nvidia/gridd.conf file (which you create by copying /etc/nvidia/gridd.conf.template). An example entry is:

ServerAddress=10.1.2.3

Set the FeatureType entry to 1 (FeatureType=1), which selects NVIDIA vGPU licensing. For more details, see the section “Licensing NVIDIA vGPU on Linux” in the NVIDIA vGPU Software User Guide.

Save your changes to the /etc/nvidia/gridd.conf file.
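If you prepare many VMs, the two edits can be scripted. A minimal sketch, assuming the file locations given above; configure_gridd and set_conf_key are hypothetical helper names. It copies the template only if gridd.conf does not exist yet, replaces any active ServerAddress= and FeatureType= lines, and leaves comments untouched. Run it as root.

```sh
#!/bin/sh
# Hypothetical helpers for preparing /etc/nvidia/gridd.conf (run as root).

# set_conf_key FILE KEY VALUE: drop any active KEY= line, then append KEY=VALUE.
set_conf_key() {
    [ -f "$1" ] || { echo "missing $1" >&2; return 1; }
    sck_tmp="$1.tmp.$$"
    grep -v "^[[:space:]]*$2=" "$1" > "$sck_tmp"
    sck_rc=$?
    # grep -v exits 1 when it prints nothing; only exit status 2+ is an error.
    [ "$sck_rc" -le 1 ] || { rm -f "$sck_tmp"; return 1; }
    printf '%s=%s\n' "$2" "$3" >> "$sck_tmp" &&
        cat "$sck_tmp" > "$1" &&   # rewrite in place to keep owner and mode
        rm -f "$sck_tmp"
}

# configure_gridd CONF SERVER [TEMPLATE]
configure_gridd() {
    cg_conf=$1
    cg_server=$2
    cg_template=${3:-/etc/nvidia/gridd.conf.template}
    [ -n "$cg_conf" ] && [ -n "$cg_server" ] ||
        { echo "usage: configure_gridd CONF SERVER [TEMPLATE]" >&2; return 2; }
    # Start from NVIDIA's template the first time round.
    [ -f "$cg_conf" ] || cp "$cg_template" "$cg_conf" || return 1
    set_conf_key "$cg_conf" ServerAddress "$cg_server" &&
        set_conf_key "$cg_conf" FeatureType 1
}

# Usage inside the VM (as root):
#   configure_gridd /etc/nvidia/gridd.conf 10.1.2.3
```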

3.6 Restart the NVIDIA vGPU Service

Restart the nvidia-gridd service in the virtual machine using the command below.

# sudo service nvidia-gridd restart

Use the following command to check that the system log contains messages indicating that the license was acquired and that the system is licensed correctly. On Ubuntu the log is /var/log/syslog; on Red Hat Enterprise Linux and CentOS it is /var/log/messages.

# sudo grep grid /var/log/syslog

There should be entries in that file that are similar to the following:

The license was acquired successfully from the correct server URL
The system is licensed for GRID vGPU
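License acquisition can take a few seconds after the service restarts, so a setup script may need to wait for it. The sketch below polls the log for a "grid" line containing "licensed for"; that pattern is an assumption based on the message quoted above, so adjust it to what your vGPU release actually logs. wait_for_gridd_license is a hypothetical name, and it must run as root because the system logs are not world-readable.

```sh
#!/bin/sh
# Hypothetical helper: wait up to TIMEOUT seconds (default 60) for nvidia-gridd
# to log that the system is licensed. The "licensed for" pattern is an
# assumption based on the message quoted in this article.
wait_for_gridd_license() {
    wl_timeout=${1:-60}
    wl_log=$2
    if [ -z "$wl_log" ]; then
        # Ubuntu logs to /var/log/syslog; RHEL and CentOS use /var/log/messages.
        if [ -f /var/log/syslog ]; then wl_log=/var/log/syslog; else wl_log=/var/log/messages; fi
    fi
    wl_waited=0
    while :; do
        grep -i grid "$wl_log" 2>/dev/null | grep -qi 'licensed for' && return 0
        [ "$wl_waited" -ge "$wl_timeout" ] && return 1
        sleep 1
        wl_waited=$((wl_waited + 1))
    done
}

# Usage inside the VM (as root), right after "service nvidia-gridd restart":
#   wait_for_gridd_license 60 && echo "licensed"
```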

4. Install and Test the CUDA Libraries

This section describes two approaches to this task: the first uses containers to simplify the versioning of the various components, and the second is a more manual approach.

4.1 Installation Using Containers

The CUDA and ML frameworks can be installed using Docker containers, which spares the person doing the installation the complexity of installing each component one by one. This approach uses the “nvidia-docker” tool together with the CUDA, machine learning or high performance computing containers supplied by NVIDIA, and is detailed in the VMware “Enabling Machine Learning as a Service with GPU Acceleration” document.

Both methods of installation work with virtual machines on vSphere.

4.2 Manual Installation

This section describes the installation of the CUDA libraries without the use of containers.

4.2.1 Download the Libraries

Ensure that the version of the CUDA libraries you use is compatible with your guest operating system driver. For example, version 9.1 of the CUDA libraries is compatible with the “390” series of drivers.

Download the appropriate packages (using a “wget” command, for example). The file names we use here for CUDA version 9.1 may not apply to your installation as later versions become available.

https://developer.nvidia.com/compute/cuda/9.1/Prod/local_installers/cuda_9.1.85_387.26_linux

https://developer.nvidia.com/compute/cuda/9.1/Prod/patches/1/cuda_9.1.85.1_linux

https://developer.nvidia.com/compute/cuda/9.1/Prod/patches/2/cuda_9.1.85.2_linux

https://developer.nvidia.com/compute/cuda/9.1/Prod/patches/3/cuda_9.1.85.3_linux
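The CUDA runfile name records the driver version bundled with that toolkit (387.26 in cuda_9.1.85_387.26_linux), which for CUDA 9.1 is also the minimum Linux driver version it needs. A hedged sketch for checking your vGPU guest driver against it before installing; the helpers are hypothetical, and version_ge compares dotted numeric versions field by field.

```sh
#!/bin/sh
# Hypothetical helpers: compare the guest driver with the driver version that
# is embedded in a CUDA runfile name such as cuda_9.1.85_387.26_linux.

# version_ge A B: succeed if dotted version A >= B (numeric, field by field).
version_ge() {
    awk -v a="$1" -v b="$2" 'BEGIN {
        na = split(a, x, "."); nb = split(b, y, ".")
        n = (na > nb) ? na : nb
        for (i = 1; i <= n; i++) {
            if ((x[i] + 0) > (y[i] + 0)) exit 0
            if ((x[i] + 0) < (y[i] + 0)) exit 1
        }
        exit 0
    }'
}

# cuda_9.1.85_387.26_linux -> 387.26
cuda_bundled_driver() {
    basename "$1" | sed -n 's/^cuda_[0-9.]*_\([0-9][0-9.]*\)_linux.*$/\1/p'
}

# Usage inside the VM (390.42 is this article's example guest driver):
#   version_ge 390.42 "$(cuda_bundled_driver cuda_9.1.85_387.26_linux)" && echo "driver is new enough"
```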

4.2.2 CUDA Library Installation

To install the CUDA libraries, use the command:

# sudo sh cuda_9.1.85_387.26_linux

Accept the EULA conditions.

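When prompted to install the driver, answer “No”: the NVIDIA vGPU guest driver is already installed, and the stock driver bundled with CUDA must not replace it.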

Respond “yes” to the questions about libraries, symbolic links and samples.
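After the runfile completes, the CUDA tools and libraries are usually not yet on your search paths. The post-installation steps in NVIDIA's CUDA Installation Guide add them to PATH and LD_LIBRARY_PATH; the sketch below does the same, assuming the default install prefix /usr/local/cuda-9.1 (adjust it for your version or a custom path). cuda_env is a hypothetical helper that you could source from your shell profile, for example ~/.bashrc.

```sh
#!/bin/sh
# Hypothetical helper: put a CUDA toolkit on PATH and LD_LIBRARY_PATH.
# Assumes the default runfile install prefix /usr/local/cuda-<version>.
cuda_env() {
    cuda_home=/usr/local/cuda-${1:-9.1}
    # ${VAR:+:$VAR} avoids a stray ":" when the variable was empty.
    PATH="$cuda_home/bin${PATH:+:$PATH}"
    LD_LIBRARY_PATH="$cuda_home/lib64${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"
    export PATH LD_LIBRARY_PATH
}

# Usage (e.g. in ~/.bashrc):
#   cuda_env 9.1
#   nvcc --version
```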

4.3 CUDA Application Code Testing

Compile and run the example “deviceQuery” program; it should complete successfully.

Compile and run the “vectorAdd” program to confirm that the GPU is working.
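Both checks can be run in one go. The sketch below assumes the samples were installed to the runfile's default location for CUDA 9.1 ($HOME/NVIDIA_CUDA-9.1_Samples), with deviceQuery under 1_Utilities and vectorAdd under 0_Simple. It treats a zero exit status plus the word PASS in a sample's output as success (deviceQuery ends with "Result = PASS" and vectorAdd prints "Test PASSED"). run_cuda_sample is a hypothetical name.

```sh
#!/bin/sh
# Hypothetical helper: build one CUDA sample with make, run it, echo its
# output, and succeed only if it exits 0 and prints "PASS".
run_cuda_sample() {
    (
        cd "$1" || exit 1
        make >/dev/null || exit 1
        rcs_out=$("./$2") || { printf '%s\n' "$rcs_out"; exit 1; }
        printf '%s\n' "$rcs_out"
        case "$rcs_out" in
            *PASS*) exit 0 ;;
            *) exit 1 ;;
        esac
    )
}

# Usage inside the VM (default CUDA 9.1 samples location assumed):
#   S=$HOME/NVIDIA_CUDA-9.1_Samples
#   run_cuda_sample "$S/1_Utilities/deviceQuery" deviceQuery &&
#   run_cuda_sample "$S/0_Simple/vectorAdd" vectorAdd && echo "GPU OK"
```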

You may now proceed to installing cuDNN and your chosen platform for machine learning application development (such as TensorFlow), or you may use the container method for installation as an alternative.

References

- VMware Virtual Machine Graphics Acceleration Guide

- NVIDIA GRID SOFTWARE – User Guide, July 2018

- NVIDIA Virtual GPU Software for VMware vSphere – Release Notes, July 2018

- NVIDIA list of GRID-certified servers with GPU types supported

- NVIDIA Virtual vGPU Support Site (for Evaluations)

- NVIDIA Virtual GPU Packaging, Pricing and Licensing Guide

- NVIDIA Virtual GPU Software Quick Start Guide

- Enabling Machine Learning as a Service with GPU Acceleration Using vRealize Automation Technical White Paper