While virtualization has proven to provide enterprises with cost-effective, scalable, and reliable IT computing, the approach to modern HPC has not evolved accordingly. Rather, it remains bound to dedicated physical hardware in order to obtain predictable runtimes and maximum performance. This blog post identifies the ways in which virtualization accelerates the delivery of HPC environments and presents a system design that demonstrates how virtualization and HPC technologies work together to deliver a secure, elastic, fully managed, self-service virtual HPC environment. Before diving into the reference architecture for HPC, it is worth reviewing what HPC is, the primary workload types, and the specific ways in which virtualization improves HPC operational efficiency.

What is HPC?

HPC is the use of multiple computers and parallel-processing techniques to solve complex computational problems. HPC systems deliver sustained performance through the concurrent use of computing resources, and they are typically used to solve advanced scientific and engineering problems and to perform research through computer modeling, simulation, and analysis [1]. HPC is continuously evolving to meet increasing demands for processing capability. It brings together several technologies, including computer architecture, algorithms, programs and electronics, and system software, under a single canopy to solve advanced problems effectively and quickly. Multidisciplinary areas that can benefit from HPC include:

- Aerospace

- Biosciences

- Energy

- Electronic Design

- Environment and Weather

- Finance

- Geographic Information

- Media and Film

This reference architecture targets two major types of HPC workloads:

Message Passing Interface (MPI) Workloads

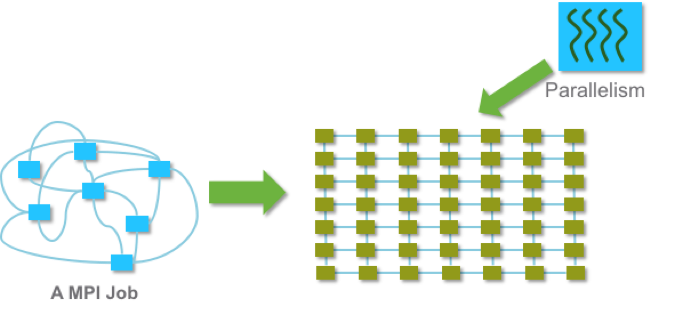

MPI workloads consist of multiple simultaneously running processes on different compute nodes that need to communicate with each other, often with extremely high frequency, making their performance sensitive to interconnect latency and bandwidth.

Figure 1 illustrates the coupling characteristic of MPI workloads: an MPI job is decomposed into a number of small tasks that communicate via MPI and are mapped to available processor cores. For example, weather forecast modeling is computationally intensive, and its demand for computing power increases at higher resolutions. To run the models in a feasible amount of time, they are decomposed into multiple pieces, with the calculation results from each piece affecting all adjacent pieces. This requires continual message passing between nodes at extremely low latency. Typically, these models are run on a distributed-memory system using the MPI standard to communicate during job execution. Other examples of MPI workloads include molecular dynamics, computational fluid dynamics, oil and gas reservoir simulation, jet engine design, and emerging distributed machine learning workloads.

Figure 1: Characteristic of MPI workloads
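To make this coupling concrete, here is a minimal single-process sketch (no actual MPI library is used) of a 1D diffusion stencil split across four simulated ranks. On every step, each piece needs one boundary value from each neighbour before it can update, a "halo exchange" that a real MPI program would typically perform with calls such as `MPI_Sendrecv`. The function names and the diffusion model are illustrative assumptions, not taken from any particular HPC application.

```python
from typing import List, Tuple


def serial_step(u: List[float], alpha: float) -> List[float]:
    """One explicit diffusion step on the whole domain; the two end cells stay fixed."""
    new = u[:]
    for i in range(1, len(u) - 1):
        new[i] = u[i] + alpha * (u[i - 1] - 2 * u[i] + u[i + 1])
    return new


def split(u: List[float], parts: int) -> List[List[float]]:
    """Decompose the global domain into contiguous pieces, one per simulated rank."""
    n = len(u)
    bounds = [n * r // parts for r in range(parts + 1)]
    return [u[bounds[r]:bounds[r + 1]] for r in range(parts)]


def decomposed_step(pieces: List[List[float]], alpha: float) -> Tuple[List[List[float]], int]:
    """Advance every piece one step; returns the new pieces and the halo messages used."""
    messages = 0
    new_pieces = []
    for r, piece in enumerate(pieces):
        has_left = r > 0
        has_right = r < len(pieces) - 1
        # Halo exchange: borrow the adjacent boundary value from each neighbour.
        padded = (
            ([pieces[r - 1][-1]] if has_left else [])
            + piece
            + ([pieces[r + 1][0]] if has_right else [])
        )
        messages += int(has_left) + int(has_right)
        offset = 1 if has_left else 0
        new = piece[:]
        for j in range(len(piece)):
            k = j + offset
            if 0 < k < len(padded) - 1:  # cells at the global boundary stay fixed
                prev, cur, nxt = padded[k - 1], padded[k], padded[k + 1]
                new[j] = cur + alpha * (prev - 2 * cur + nxt)
        new_pieces.append(new)
    return new_pieces, messages
```

Running `decomposed_step` on `split(u, 4)` produces exactly the same values as `serial_step` on `u`, but only because 2 × (4 − 1) = 6 boundary messages are exchanged on every step. That per-step dependency is why MPI jobs are so sensitive to interconnect latency.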

Throughput Workloads

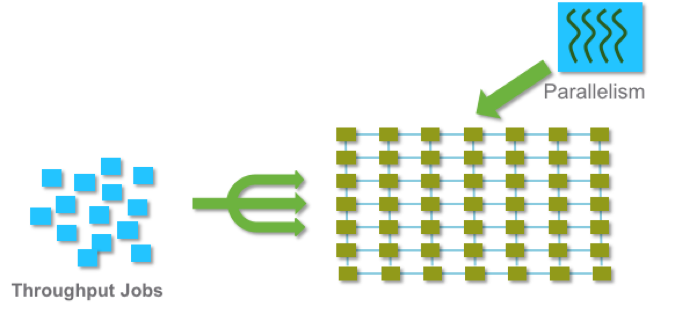

With throughput workloads, a task is divided into many small jobs that run simultaneously, but unlike MPI workloads there is no communication between the jobs, as illustrated in Figure 2. Digital movie rendering is a typical example of a throughput workload. A movie is divided into individual frames that are distributed across the cluster, so each frame can be rendered simultaneously on a different processor core; the frames are then assembled at the end to produce the complete movie. Other examples of throughput workloads include Monte Carlo simulations in financial risk analysis, electronic design automation, and genomics analysis, where each program either runs over a long time scale or is executed hundreds, thousands, or even millions of times with varying inputs.

Figure 2: Characteristic of throughput workloads
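The following sketch shows the shape of a throughput workload using a Monte Carlo estimate of π as a stand-in: every task has its own seed and shares nothing with the others, and results are combined only at the end. It uses Python's standard `concurrent.futures` thread pool purely for portability; the task function and parameters are hypothetical, and on a real cluster the job scheduler would dispatch each task to its own core or node instead.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def monte_carlo_task(seed: int, samples: int) -> int:
    """One independent job: count random points that land inside the unit quarter-circle."""
    rng = random.Random(seed)  # private RNG per task, so no shared state between jobs
    hits = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def estimate_pi(num_tasks: int, samples_per_task: int, max_workers: int = 4) -> float:
    """Fan independent tasks out to workers, then combine their results once at the end."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        hits = pool.map(monte_carlo_task, range(num_tasks), [samples_per_task] * num_tasks)
        total = sum(hits)
    return 4.0 * total / (num_tasks * samples_per_task)
```

Because the tasks never talk to each other, the result is identical whether they run on one worker or many; only the wall-clock time changes. CPython threads do not run Python code in parallel, so `ProcessPoolExecutor` would be the natural swap for CPU-bound work on a single machine.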

HPC workloads have traditionally been run only on bare-metal hardware, because virtualization has been associated with a performance penalty: the hypervisor adds a layer of software between the operating system and the hardware. While this was historically true, the performance of these computationally intensive workloads under virtualization has improved dramatically with advances in both the hypervisor and hardware virtualization support in x86 microprocessors. Combined with the numerous operational benefits that virtualization offers, this has made virtualized HPC environments increasingly common. In this section, we discuss the particular benefits of virtualization that enhance HPC environments and productivity.

Benefits of Virtual Machines

The fundamental element of virtualization is the virtual machine (VM) – a software abstraction that supports running an operating system and its applications in an environment whose resource configuration may be different from that of the underlying hardware. The benefits of VMs in an HPC environment include:

- Flexibility and faster delivery. By using VMs, different resource configurations, operating systems, and HPC software can be flexibly mixed on the same physical hardware. In addition, with a self-provisioning model, IT departments can deliver environments tailored to each researcher's, scientist's, or engineer's requirements while reducing their time-to-solution.

- Increased control and research reproducibility. Infrastructure and HPC administrators can dynamically resize, pause, snapshot, back up, replicate to other virtual environments, or simply wipe and redeploy virtual machines, according to their role-based permissions. Since configurations and files are encapsulated within each VM, the VMs can be archived and rerun later for reproducibility purposes, such as compliance.

- Improved resource-prioritization and balancing. Compute resources for VMs can be prioritized individually, or in a pool. It’s also possible to migrate running VMs and their encapsulated workloads across the cluster for load balancing. This migration increases overall cluster efficiency compared with a bare-metal approach.

- Fault isolation. By running jobs in an isolated VM environment, each job is protected from potential faults caused by jobs running in different VMs.
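One common way to prioritize VMs under contention is proportional sharing: vSphere, for example, exposes a "shares" setting for CPU and memory. The sketch below is a simplified weighted max-min fair model of that idea, not ESXi's actual scheduler (which also honours reservations and limits). VM names, demands, and share values are hypothetical.

```python
from typing import Dict


def allocate_by_shares(capacity: float, demand: Dict[str, float], shares: Dict[str, int]) -> Dict[str, float]:
    """Weighted max-min fair split of `capacity` among VMs (simplified model).

    Each VM is offered a slice proportional to its shares; a VM that needs less
    than its slice keeps only what it needs, and the surplus is re-divided among
    the VMs that still want more.
    """
    alloc = {vm: 0.0 for vm in demand}
    active = {vm for vm, d in demand.items() if d > 0}
    remaining = float(capacity)
    while active and remaining > 1e-9:
        total = sum(shares[vm] for vm in active)
        # VMs whose outstanding demand fits inside their proportional slice
        capped = {vm for vm in active if demand[vm] - alloc[vm] <= remaining * shares[vm] / total}
        if not capped:
            # Every remaining VM still wants more: hand out the rest strictly by shares.
            for vm in active:
                alloc[vm] += remaining * shares[vm] / total
            break
        for vm in sorted(capped):
            remaining -= demand[vm] - alloc[vm]
            alloc[vm] = demand[vm]
        active -= capped
    return alloc
```

For instance, with 100 units of capacity, demands of 80, 80, and 10, and shares of 2000, 1000, and 1000, the small VM receives its full 10 and the remaining 90 units are split 2:1 between the two large VMs. When total demand fits within capacity, every VM simply gets what it asks for.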

Security

Security rules and policies can be defined and applied based on environment, workflow, VM, physical server, and operator, including:

- Actions controlled via user permissions and logged for audit reporting. For example, root access privileges are only granted as needed and based on the specified VMs, preventing compromise of other HPC workflows.

- Isolated workflows where sensitive data cannot be shared with other HPC environments, workflows, or users running on the same underlying hardware.
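The per-VM permission model described above can be sketched as a small policy object: privileges such as root access are granted for specific VMs only, and every check is written to an audit log whether it succeeds or not. This is a hypothetical illustration; the class, action names, and VM names are assumptions and do not correspond to any vCenter API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Set, Tuple


@dataclass
class AccessPolicy:
    # Hypothetical model: user -> set of (action, vm) pairs that user may perform
    grants: Dict[str, Set[Tuple[str, str]]] = field(default_factory=dict)
    audit_log: List[str] = field(default_factory=list)

    def grant(self, user: str, action: str, vm: str) -> None:
        """Grant one action on one specific VM (e.g. root access only where needed)."""
        self.grants.setdefault(user, set()).add((action, vm))

    def check(self, user: str, action: str, vm: str) -> bool:
        """Decide whether the action is allowed and record the decision for auditing."""
        allowed = (action, vm) in self.grants.get(user, set())
        ts = datetime.now(timezone.utc).isoformat()
        self.audit_log.append(f"{ts} user={user} action={action} vm={vm} allowed={allowed}")
        return allowed
```

Granting `root_login` on a single genomics VM, for example, gives that user no root access to VMs belonging to other HPC workflows, and the denied attempt still appears in the audit log.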

Resilience and Redundancy

HPC VMs provide fault resilience, dynamic recovery and other capabilities not available in traditional HPC environments. Specifically, HPC VMs enable:

- Hardware maintenance without impacting operational HPC workflows or serviceability.

- Automatic restart on another physical server within the cluster following a server failure.

- Live migration to another physical host when resources of a given host are at capacity.
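To illustrate what automatic restart involves, the sketch below plans where the VMs of a failed host could be restarted, placing the largest VMs first onto whichever surviving host has the most free memory. It is a hypothetical heuristic for illustration only; the real vSphere HA feature has its own admission-control and placement logic, and the host and VM names here are made up.

```python
from typing import Dict, Optional


def plan_restarts(
    failed_host: str,
    placement: Dict[str, str],
    vm_mem_gb: Dict[str, int],
    host_free_gb: Dict[str, int],
) -> Dict[str, Optional[str]]:
    """Map each VM from the failed host to a surviving host, or None if nothing fits."""
    free = {h: gb for h, gb in host_free_gb.items() if h != failed_host}
    # Place the largest VMs first; they are the hardest to fit.
    victims = sorted(
        (vm for vm, host in placement.items() if host == failed_host),
        key=lambda vm: vm_mem_gb[vm],
        reverse=True,
    )
    plan: Dict[str, Optional[str]] = {}
    for vm in victims:
        candidates = sorted(free, key=lambda h: free[h], reverse=True)
        target = next((h for h in candidates if free[h] >= vm_mem_gb[vm]), None)
        plan[vm] = target
        if target is not None:
            free[target] -= vm_mem_gb[vm]  # reserve the memory on the chosen host
    return plan
```

A VM mapped to `None` is one the surviving capacity cannot absorb, which is why clusters are normally sized with spare headroom for failover.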

Performance

Performance is paramount in HPC and technical computing environments. Throughput workloads generally run at close to full speed in a virtualized environment, with less than 5% performance degradation compared to native and just 1 to 2% in many cases. This has been verified through tests of various applications across multiple disciplines, including life sciences, electronic design automation, and financial risk analysis. Furthermore, the study Virtualizing HPC Throughput Computing Environments demonstrates that, with CPU over-commitment and multi-tenant virtual clusters on VMware vSphere, the performance of high-throughput workloads in a virtual environment can sometimes exceed that of bare-metal environments.

The performance degradation of MPI workloads is often higher than that of throughput workloads, because these jobs combine sensitivity to microsecond-scale network latency with intensive communication among processes on different nodes. The performance study Virtualized HPC Performance with VMware vSphere 6.5 on a Dell PowerEdge C6320 Cluster has shown that degradation for a range of common MPI applications can be kept under 10%, although the highest-scale tests (32 nodes with 640 cores) showed larger slowdowns in some cases.
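The degradation percentages quoted above are relative slowdowns: (virtual runtime − native runtime) / native runtime × 100. The helper below computes that figure; a negative value means the virtualized run finished faster, as in the over-committed throughput case. The function names and the runtimes in the usage note are hypothetical and are not taken from the cited studies.

```python
def degradation_pct(native_seconds: float, virtual_seconds: float) -> float:
    """Percent slowdown of a virtualized run versus bare metal (negative means the VM was faster)."""
    if native_seconds <= 0:
        raise ValueError("native runtime must be positive")
    return (virtual_seconds - native_seconds) / native_seconds * 100.0


def within_budget(native_seconds: float, virtual_seconds: float, budget_pct: float) -> bool:
    """True if the measured slowdown stays inside an acceptable budget, e.g. 5 or 10 percent."""
    return degradation_pct(native_seconds, virtual_seconds) <= budget_pct
```

For example, a job that takes 1,000 s on bare metal and 1,020 s in a VM shows a 2% degradation; a 1,080 s virtual run (8%) would exceed a 5% throughput budget but stay within a 10% MPI budget.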

For throughput or MPI applications that use compute accelerators, such as HPC workloads running on General-Purpose Graphics Processing Units (GPGPUs), mapping the physical PCIe devices directly to VMs with VMware DirectPath I/O delivers virtualized performance that is close to bare-metal performance.

Designing virtualized HPC environments requires understanding how the architecture will compare to that of traditional bare-metal HPC environments. In this section, we first introduce the architecture of a traditional HPC environment and then present how it can be converted into virtual environment through the use of VMware software.

Traditional HPC

Figure 3: Traditional bare-metal HPC cluster

As illustrated in Figure 3, the typical configuration of a traditional bare-metal HPC cluster includes the following components:

Management node(s):

- Login node manages user logins.

- Master node for scheduling jobs.

- Other services, such as DNS and gateway.

- For small clusters, the login, job scheduler, and other services can run on the same node; however, they need to be separated as the number of nodes grows.

- All management nodes typically run a Linux OS.

Compute nodes:

- Compute nodes are dedicated to computational tasks.

- All compute nodes run the same Linux OS as the management nodes and are managed by a job scheduler running on the master node.

Networking and Interconnect:

- Management traffic between the management nodes and compute nodes typically runs over 10/25 Gb/s Ethernet.

- When fast inter-node communication is needed, it is provided by remote direct memory access (RDMA) capable interconnects, such as InfiniBand or RoCE (RDMA over Converged Ethernet) host channel adapters (HCAs) and switches.

Storage:

- Network File System (NFS) is often used for home directories and project space mounted across all nodes. It can also provide shared space for research groups to exchange data.

- Parallel file systems such as Lustre or IBM Spectrum Scale can be used for HPC workloads that require access to large files, simultaneous access from multiple compute nodes, and massive amounts of data. Parallel file systems are designed to scale easily in both capacity and performance, and they take advantage of RDMA transfers for high bandwidth and reduced CPU usage. The parallel file system is usually used as scratch space for work that requires optimized I/O, such as workload setup, pre-processing, running, and post-processing.
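Much of a parallel file system's scalability comes from striping: a file is cut into fixed-size chunks that are dealt round-robin across several storage targets, so a large read or write is served by many servers at once. The sketch below models that RAID-0-style layout in the way Lustre describes it with a stripe size and stripe count; it is a simplified model, real file systems add further layout options, and the function names are hypothetical.

```python
from typing import List, Tuple


def stripe_location(offset: int, stripe_size: int, stripe_count: int) -> Tuple[int, int]:
    """Map a byte offset in a file to (storage target index, offset within that target's object)."""
    stripe_index = offset // stripe_size  # which stripe-sized chunk of the file
    target = stripe_index % stripe_count  # chunks are dealt round-robin across targets
    obj_offset = (stripe_index // stripe_count) * stripe_size + offset % stripe_size
    return target, obj_offset


def targets_touched(start: int, length: int, stripe_size: int, stripe_count: int) -> List[int]:
    """Storage targets that serve a contiguous I/O of `length` bytes starting at `start`."""
    first = start // stripe_size
    last = (start + length - 1) // stripe_size
    return sorted({i % stripe_count for i in range(first, last + 1)})
```

With a 1 MiB stripe size and a stripe count of 4, a 4 MiB read touches all four targets, while a 1 MiB read aligned to a stripe boundary touches only one; this is why large sequential I/O from many compute nodes benefits most from striping.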

Basic Virtualized HPC Architecture

Figure 4: Virtualized HPC cluster with single VM per node (basic architecture)

As illustrated in Figure 4, based on the architecture of traditional HPC, the management nodes and compute nodes are easily virtualized with VMware vSphere, which includes two core components: ESXi and vCenter Server. VMware ESXi is a Type-1 hypervisor installed directly on the bare-metal server providing a layer of abstraction for virtual machines to run while mapping host resources such as CPU, memory, storage, and network to each VM. vCenter Server provides centralized management of the hosts and virtual machines, coordinating resources for the entire cluster.

The login node, the master node for job scheduling, and the DNS and gateway services run as VMs on one or more management nodes within the same cluster. Each VM runs Linux as its "guest OS", installed on virtual machine disks (VMDKs) that are stored securely on a shared datastore.

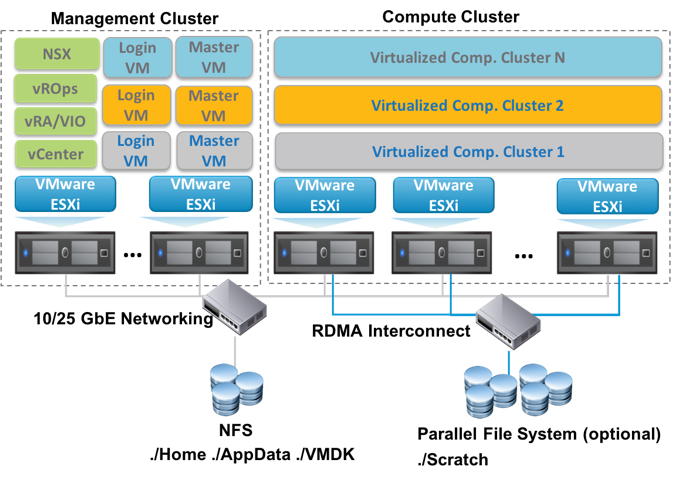

Secure HPC Cloud Architecture

Using the virtualized HPC cluster as a foundation, other VMware products and technologies can be leveraged to deploy a secure private cloud environment for HPC, as shown in Figure 5.

Figure 5: Secure Private HPC Cloud Architecture

Additional VMware products that can be leveraged for operations include:

- VMware vSAN is a high-performance hyper-converged storage solution that aggregates SSDs across servers to provide a shared datastore for VMDKs in the management cluster. This provides a simple, cost-effective, and highly available solution that is easy to manage and won’t impact the performance of storage used in the compute clusters.

- VMware NSX is a network virtualization platform that delivers networking and security entirely in software, abstracted from the underlying physical infrastructure. It provides switching, routing, load balancing, and firewalling to the management and vHPC applications through optimized networking and security policies that are distributed throughout the cluster. Network and security policies are enforced at the virtual switch and apply only to the Ethernet network, not to the RDMA interconnects, which should be configured as a closed system that connects only the compute nodes and the parallel file system.

- VMware vRealize Automation (vRA) accelerates the deployment and management of applications and compute services, empowering IT and researchers to automate the deployment of vHPC workloads that can be requested and delivered on demand through a self-service portal. The concept of a “Blueprint” is used to describe the deployment and configuration of VMs, virtual networks, storage, and security properties of an application as it’s provisioned to a cluster.

- VMware vRealize Operations (vROps) is a robust operations management platform that delivers performance optimization through efficient capacity management, proactive planning and intelligent remediation. It continuously monitors the health, risk, and efficiency of the vHPC clusters through real-time predictive analytics to identify and prevent problems before they occur.

- VMware Integrated OpenStack (VIO) is a VMware-supported OpenStack distribution that makes it easy for IT to run an enterprise-grade OpenStack cloud on top of VMware virtualization technologies, boosting productivity by providing simple, standard and vendor-neutral OpenStack API access to VMware Infrastructure.
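To give a feel for what a distributed firewall policy expresses, the sketch below evaluates traffic between groups of VMs against an ordered rule list: the first matching rule wins and anything unmatched is denied. This is a generic, hypothetical model and does not use the NSX API; the group names and ports are assumptions. As noted above, such policies would cover only the Ethernet network, not the RDMA interconnect.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    src_group: str  # hypothetical security-group name, or "any"
    dst_group: str  # hypothetical security-group name, or "any"
    port: Optional[int]  # None matches any port
    action: str  # "allow" or "deny"


def evaluate(rules: List[Rule], src: str, dst: str, port: int) -> str:
    """Return the action of the first matching rule; unmatched traffic is denied by default."""
    for rule in rules:
        if (
            rule.src_group in (src, "any")
            and rule.dst_group in (dst, "any")
            and rule.port in (port, None)
        ):
            return rule.action
    return "deny"
```

Ordering matters in this model: a broad "deny everything to management" rule placed before a narrow "allow" would shadow it, so specific rules are listed first.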

Figure 6: Secure Private HPC Cloud Architecture with vRealize Automation and NSX

Figure 6 presents a secure private cloud architecture with vRealize Automation and NSX. This architectural approach takes the basic virtualized HPC architecture shown in Figure 4 and wraps it in a private cloud infrastructure. This adds self-provisioning, which allows individual departments, researchers, and engineers to instantiate the resources they need for their projects without waiting for the IT department to create them. When an end user instantiates a virtual HPC cluster, it is done using a blueprint that specifies the required machine attributes, the number of machines, and the software to include in each VM, including the operating system and middleware, which allows full customization to their requirements. By the same token, the blueprint approach also allows the central IT group to enforce corporate IT requirements, such as security and data protection policies, by including them in the blueprint as well. More detail on the material in this blog post can be found in the comprehensive HPC Reference Architecture White Paper.
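The blueprint workflow described above, where a user customizes the cluster while central IT injects its mandatory requirements, can be sketched as follows. This is a hypothetical data model for illustration only, not vRealize Automation's blueprint format or API; the field names, agent names, and policy keys are assumptions.

```python
from dataclasses import dataclass, field, replace
from typing import Dict, List

# Requirements central IT adds to every blueprint (hypothetical examples)
IT_MANDATORY_SOFTWARE = ["security-agent", "backup-agent"]
IT_MANDATORY_POLICIES = {"disk_encryption": "required", "snapshot_schedule": "daily"}


@dataclass(frozen=True)
class Blueprint:
    # Hypothetical fields mirroring what the text says a blueprint specifies
    vcpus: int
    memory_gb: int
    count: int
    os_image: str
    software: List[str] = field(default_factory=list)
    policies: Dict[str, str] = field(default_factory=dict)


def apply_it_policy(bp: Blueprint) -> Blueprint:
    """Return a copy of the user's blueprint with IT's mandatory software and policies enforced."""
    software = bp.software + [s for s in IT_MANDATORY_SOFTWARE if s not in bp.software]
    policies = {**bp.policies, **IT_MANDATORY_POLICIES}  # IT values override user values
    return replace(bp, software=software, policies=policies)


def expand(bp: Blueprint, prefix: str) -> List[Dict[str, object]]:
    """Turn one blueprint into per-VM provisioning requests for a virtual cluster."""
    return [
        {
            "name": f"{prefix}-{i:02d}",
            "vcpus": bp.vcpus,
            "memory_gb": bp.memory_gb,
            "os": bp.os_image,
            "software": list(bp.software),
        }
        for i in range(1, bp.count + 1)
    ]
```

A researcher might request four 16-vCPU VMs with an MPI library installed; after `apply_it_policy`, each VM also carries the required agents, and any user attempt to relax disk encryption is overridden by the IT value.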

In Part 2, we will look at the makeup of the management and compute clusters and some sample designs.