“Give me ten men like Clouseau, and I can destroy the world…” said former Chief Inspector Dreyfus about Inspector Jacques Clouseau and his incompetent, clumsy and chaotic detective skills.

The previous blog Around the “Storage World” in no time – Storage vMotion and Oracle Workloads focused on how we can Storage vMotion an Oracle Standalone database and an Oracle RAC Cluster from one datastore to another, within a storage array or between 2 storage arrays accessed by the same SDDC Cluster, without ANY downtime.

The previous blog Migrating non-production Oracle RAC using VMware Storage vMotion with minimal downtime focused on Storage vMotion of a 2 Node non-production Oracle RAC Cluster from one datastore to a datastore on a different storage system, with minimal downtime.

This blog will not focus on the ‘how to perform Storage vMotion’ aspect of the process. Instead, it will focus on what we uncovered during our investigation (sans the incompetence, clumsiness and chaos that followed Inspector Jacques Clouseau) into Storage vMotioning an Oracle RAC cluster to vSAN storage.

Important Update –

Prior to VMware vSAN 6.7 P01 (ESXi 6.7 Patch Release ESXi670-201912001), Oracle RAC on vSAN requires

- shared VMDKs to be Eager Zero Thick provisioned (OSR=100) & multi-writer attribute enabled

Starting with VMware vSAN 6.7 P01 (ESXi 6.7 Patch Release ESXi670-201912001), Oracle RAC on vSAN does NOT require the shared VMDKs to be Eager Zero Thick provisioned (OSR=100) for multi-writer mode to be enabled

- shared VMDKs can be thin provisioned (OSR=0) & multi-writer attribute enabled

High Level Migration Process


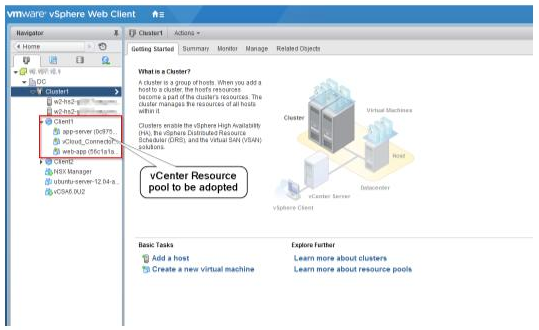

We had a vSAN cluster (ESXi version 6.5.0.13000, build 7515524) with access to 3 different storage types:

- Local VMFS6 on one of the vSAN Hosts on an unused local NVMe drive

- NFS VAAI compliant storage mounted on the vSAN Hosts

- vSAN storage

We followed the steps below, detailed in the Migrating non-production Oracle RAC using VMware Storage vMotion with minimal downtime blog, and tried to Storage vMotion the Oracle RAC cluster to vSAN using the Web Client.

- Shutdown both the RAC VM’s , ‘pvrdmarac1’ and ‘pvrdmarac2’

- On ‘pvrdmarac2’ remove ALL the shared vmdk/s using the Web Client

- DO NOT DELETE THE VMDK/s from storage

- Perform storage vMotion of VM ‘pvrdmarac2’ only to the new storage array

- Now VM ‘pvrdmarac1’ has 2 genres of vmdk

- non-shared vmdk/s ( i.e. OS , Oracle binaries etc )

- shared vmdk/s with multi-writer settings

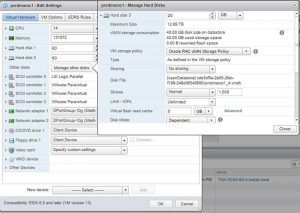

- On VM ‘pvrdmarac1’, remove the multi-writer setting for all shared disks using the web client

- Perform storage vMotion of VM ‘pvrdmarac1’to the new storage array

- At this point VM ‘pvrdmarac1’vmdk/s is on the new storage with both

- original non-shared vmdks

- common vmdk/s without multi-writer setting

- On VM ‘pvrdmarac1’, add multi-writer settings for all common vmdk/s back using the Web Client

- VM ‘pvrdmarac1’ now has

- non-shared vmdk/s

- shared vmdk/s with multi-writer setting

- For VM ‘pvrdmarac2’, add ALL shared vmdk/s with the multi-writer settings using the ‘Existing Hard Disk’ option using the web client

- At this point VM ‘pvrdmarac1’ and ‘pvrdmarac2’ vmdk/s are on the new storage

- original non-shared vmdks

- common vmdk/s with multi-writer setting

- Power on RAC VM ‘pvrdmarac1’ and ‘pvrdmarac2’

- Click OK for option ‘I copied it’ when prompted

- RAC Cluster has been successfully migrated over to the new cluster

We had no issues up to and including step 7: we were able to successfully Storage vMotion the VMs ‘pvrdmarac1’ and ‘pvrdmarac2’ to vSAN storage.

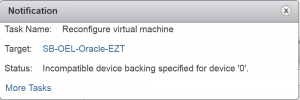

When we went to add the multi-writer setting back to the shared disks in step 8, that’s where we started getting interesting errors.

Errors

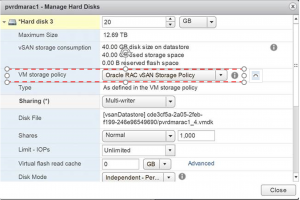

Let’s take the example of vmdk ‘pvrdmarac1_4.vmdk’ and enable the multi-writer flag via the web client.

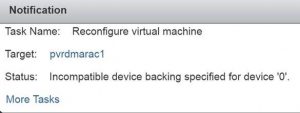

We get the error ‘Incompatible device backing specified for device ‘0’’.

Hmm, let’s take a look at the vmdk on vsanDatastore.

Troubleshooting

1. Check the disk characteristics and the actual size on vsanDatastore.

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690] cat pvrdmarac1_4.vmdk

# Disk DescriptorFile

version=4

encoding="UTF-8"

CID=9ae4a16f

parentCID=ffffffff

isNativeSnapshot="no"

createType="vmfs"

# Extent description

RW 41943040 VMFS "vsan://aee8cf5a-2007-8da2-67dd-246e96549690"

# The Disk Data Base

#DDB

ddb.adapterType = "lsilogic"

ddb.deletable = "true"

ddb.geometry.cylinders = "2610"

ddb.geometry.heads = "255"

ddb.geometry.sectors = "63"

ddb.longContentID = "c2a926b28d71320a9addb1f89ae4a16f"

ddb.thinProvisioned = "1"

ddb.uuid = "60 00 C2 9e 6b 1e 86 ff-43 3a 9e 2a c1 c3 db 27"

ddb.virtualHWVersion = "13"

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690]

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690] cmmds-tool find -u aee8cf5a-2007-8da2-67dd-246e96549690 -f json

{

“entries”:

[

{

“uuid”: “aee8cf5a-2007-8da2-67dd-246e96549690”,

“owner”: “598273c6-2b47-6652-7ba4-246e96549690”,

“health”: “Healthy”,

“revision”: “1”,

“type”: “DOM_OBJECT”,

“flag”: “2”,

“minHostVersion”: “3”,

“md5sum”: “abbbf9edd17dce85468e41aba7f0d426”,

“valueLen”: “1400”,

“content”: {“type”: “Configuration”, “attributes”: {“CSN”: 1, “addressSpace”: 21474836480, “scrubStartTime”: 1523574958321294, “objectVersion”: 5, “highestDiskVersion”: 5, “muxGroup”: 2973725105745444, “groupUuid”: “cde3cf5a-2a05-2feb-f199-246e96549690”, “compositeUuid”: “aee8cf5a-2007-8da2-67dd-246e96549690“}, “child-1”: {“type”: “RAID_1”, “attributes”: {“scope”: 3}, “child-1”: {“type”: “Component”, “attributes”: {“capacity”: 21474836480, “addressSpace”: 21474836480, “componentState”: 5, “componentStateTS”: 1523574958, “faultDomainId”: “598273c6-2b47-6652-7ba4-246e96549690”, “subFaultDomainId”: “598273c6-2b47-6652-7ba4-246e96549690”}, “componentUuid”: “aee8cf5a-4edb-e1a2-99b3-246e96549690”, “diskUuid”: “524f367b-f676-df95-13b9-976900325631”}, “child-2”: {“type”: “Component”, “attributes”: {“capacity”: 21474836480, “addressSpace”: 21474836480, “componentState”: 5, “componentStateTS”: 1523574958, “faultDomainId”: “598231ef-dc82-ab11-6414-246e965377b8”, “subFaultDomainId”: “598231ef-dc82-ab11-6414-246e965377b8”}, “componentUuid”: “aee8cf5a-4f63-e3a2-e10b-246e96549690”, “diskUuid”: “52437f9f-4407-6b17-185b-3f283ac3fa04”}}, “child-2”: {“type”: “Witness”, “attributes”: {“componentState”: 5, “componentStateTS”: 1523574958, “isWitness”: 1, “faultDomainId”: “59823277-24b2-1a08-e42c-246e96537d48”, “subFaultDomainId”: “59823277-24b2-1a08-e42c-246e96537d48”}, “componentUuid”: “aee8cf5a-c192-e4a2-3712-246e96549690”, “diskUuid”: “522d223d-58fb-e969-cc77-7f3a46a8ad98”}},

“errorStr”: “(null)”

}

,{

“uuid”: “aee8cf5a-2007-8da2-67dd-246e96549690”,

“owner”: “598273c6-2b47-6652-7ba4-246e96549690”,

“health”: “Healthy”,

“revision”: “0”,

“type”: “POLICY”,

“flag”: “2”,

“minHostVersion”: “3”,

“md5sum”: “a1f12c0a96159761011b53b4bdef36d3”,

“valueLen”: “424”,

“content”: {“stripeWidth”: 1, “cacheReservation”: 0, “proportionalCapacity”: 100, “hostFailuresToTolerate”: 1, “forceProvisioning”: 0, “spbmProfileId”: “59e12967-8de3-45aa-b623-e80dbba46b5f”, “spbmProfileGenerationNumber”: 0, “CSN”: 1, “spbmProfileName”: “Oracle RAC vSAN Storage Policy”},

“errorStr”: “(null)”

}

,{

“uuid”: “aee8cf5a-2007-8da2-67dd-246e96549690”,

“owner”: “598273c6-2b47-6652-7ba4-246e96549690”,

“health”: “Healthy”,

“revision”: “1”,

“type”: “CONFIG_STATUS”,

“flag”: “2”,

“minHostVersion”: “3”,

“md5sum”: “dc3aacf3a85e80aab37df7a1132c98c4”,

“valueLen”: “64”,

“content”: {“state”: 7, “CSN”: 1},

“errorStr”: “(null)”

}

]

}

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690]

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690] cmmds-tool find -u aee8cf5a-4f63-e3a2-e10b-246e96549690 -f json

{

"entries":

[

{

"uuid": "aee8cf5a-4f63-e3a2-e10b-246e96549690",

"owner": "598231ef-dc82-ab11-6414-246e965377b8",

"health": "Healthy",

"revision": "83",

"type": "LSOM_OBJECT",

"flag": "2",

"minHostVersion": "3",

"md5sum": "7ae4fbe82ebc41fdc1e5b5cc5e2b848f",

"valueLen": "88",

"content": {"diskUuid": "52437f9f-4407-6b17-185b-3f283ac3fa04", "compositeUuid": "aee8cf5a-2007-8da2-67dd-246e96549690", "capacityUsed": 21915238400, "physCapacityUsed": 9005170688, "dedupUniquenessMetric": 1},

"errorStr": "(null)"

}

]

}

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690]

capacityUsed = 21915238400 bytes ~ 20.41 GB

physCapacityUsed = 9005170688 bytes ~ 8.39 GB

Why is vSAN reporting a mismatch between the size allocated and the actual capacity used?
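As a quick sanity check on the unit conversions, the short Python sketch below redoes the arithmetic. It assumes the sizes quoted in this post are binary units (1 GB = 1024³ bytes), which is what the figures imply; the helper name `to_gib` is ours and not part of any VMware tool.

```python
GIB = 1024 ** 3  # bytes per GiB (assumption: the post's "GB"/"G" are binary units)


def to_gib(num_bytes):
    """Convert a byte count to GiB."""
    return num_bytes / GIB


# Values copied from the LSOM_OBJECT entry for pvrdmarac1_4.vmdk.
capacity_used = 21915238400
phys_capacity_used = 9005170688

print(f"capacityUsed     = {to_gib(capacity_used):.2f} GiB")
print(f"physCapacityUsed = {to_gib(phys_capacity_used):.2f} GiB")
print(f"physically written: {phys_capacity_used / capacity_used:.0%}")
```

In other words, only around 41% of the capacity reserved for this component has actually been written.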

2. Verify that the storage policy for the Oracle RAC vmdk/s is correct.

pvrdmarac1_4.vmdk is compliant with the storage policy set for Oracle RAC.

On further checking, the storage policy has OSR set to 100%.

Let’s check whether all vmdk’s of pvrdmarac1 are compliant.

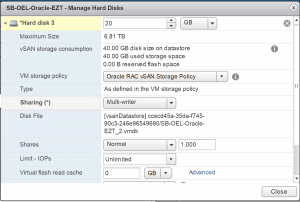

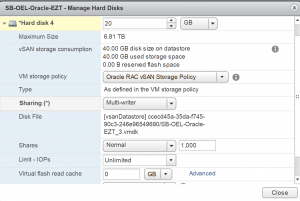

3. Let’s see if this behavior is repeatable. Let’s use a brand new VM ‘SB-OEL-Oracle-EZT’, which has 4 disks, and see if we can reproduce it.

- A thin provisioned vmdk for OS on the NFS VAAI compliant datastore

- A thin provisioned vmdk for Oracle binaries on the NFS VAAI compliant datastore

- A 20G Eager Zero thick (EZT) vmdk with multi-writer flag (MWF) on the NFS VAAI compliant datastore, created with the Oracle RAC storage policy [ SB-OEL-Oracle-EZT_2.vmdk ]

- A 20G Eager Zero thick vmdk with multi-writer flag on a local VMFS6 datastore, created with the Oracle RAC storage policy [ SB-OEL-Oracle-EZT.vmdk ]

Before starting Storage vMotion, gather the disk characteristics of both the NFS and VMFS backed vmdk’s.

Check the disk characteristics of the vmdk on the NFS VAAI compliant datastore.

[root@wdc-esx27:/vmfs/volumes/a665071c-f6850cfa/SB-OEL-Oracle-EZT] cat SB-OEL-Oracle-EZT_2.vmdk

# Disk DescriptorFile

version=1

encoding="UTF-8"

CID=fffffffe

parentCID=ffffffff

isNativeSnapshot="no"

createType="vmfs"

# Extent description

RW 41943040 VMFS "SB-OEL-Oracle-EZT_2-flat.vmdk"

# The Disk Data Base

#DDB

ddb.adapterType = "lsilogic"

ddb.geometry.cylinders = "2610"

ddb.geometry.heads = "255"

ddb.geometry.sectors = "63"

ddb.longContentID = "b8641681ea637c9ed9f904b3fffffffe"

ddb.uuid = "60 00 C2 9f e3 02 57 4d-3d 35 31 7f 37 c0 67 33"

ddb.virtualHWVersion = "13"

[root@wdc-esx27:/vmfs/volumes/a665071c-f6850cfa/SB-OEL-Oracle-EZT]

Check whether the vmdk on the NFS datastore is really EZT, using the KB Determining if a VMDK is zeroedthick or eagerzeroedthick (1011170).

[root@wdc-esx27:/vmfs/volumes/a665071c-f6850cfa/SB-OEL-Oracle-EZT] vmkfstools -D SB-OEL-Oracle-EZT_2-flat.vmdk

Could not get the dump information for 'SB-OEL-Oracle-EZT_2-flat.vmdk' (rv -1)

Could not dump metadata for 'SB-OEL-Oracle-EZT_2-flat.vmdk': Inappropriate ioctl for device

Error: Inappropriate ioctl for device

[root@wdc-esx27:/vmfs/volumes/a665071c-f6850cfa/SB-OEL-Oracle-EZT]

On researching further, we came across a good blog post from Cormac. As per the blog, on VMFS we can query TBZ (To Be Zeroed), but on NFS we have no way of retrieving this information.

That explains the error above for the NFS backed vmdk.

Now let’s check the disk characteristics of the vmdk on the local VMFS6 datastore.

[root@wdc-esx27:/vmfs/volumes/5ad424bc-b9083175-d3e4-246e96549690/SB-OEL-Oracle-EZT] cat SB-OEL-Oracle-EZT.vmdk

# Disk DescriptorFile

version=1

encoding="UTF-8"

CID=fffffffe

parentCID=ffffffff

isNativeSnapshot="no"

createType="vmfs"

# Extent description

RW 41943040 VMFS "SB-OEL-Oracle-EZT-flat.vmdk"

# The Disk Data Base

#DDB

ddb.adapterType = "lsilogic"

ddb.geometry.cylinders = "2610"

ddb.geometry.heads = "255"

ddb.geometry.sectors = "63"

ddb.longContentID = "3b9a56edc5b747487a787383fffffffe"

ddb.uuid = "60 00 C2 91 56 be a7 50-9c 87 2f 62 2c c7 72 1a"

ddb.virtualHWVersion = "13"

[root@wdc-esx27:/vmfs/volumes/5ad424bc-b9083175-d3e4-246e96549690/SB-OEL-Oracle-EZT]

[root@wdc-esx27:/vmfs/volumes/5ad424bc-b9083175-d3e4-246e96549690/SB-OEL-Oracle-EZT] vmkfstools -D SB-OEL-Oracle-EZT-flat.vmdk

Lock [type 10c00001 offset 123207680 v 9, hb offset 3178496

gen 901, mode 3, owner 00000000-00000000-0000-000000000000 mtime 107516

num 1 gblnum 0 gblgen 0 gblbrk 0]

MW Owner[0] HB Offset 3178496 5ac6a50d-d976b979-50d0-246e96549690

Addr <4, 48, 0>, gen 2, links 1, type reg, flags 0, uid 0, gid 0, mode 600

len 21474836480, nb 20480 tbz 0, cow 0, newSinceEpoch 20480, zla 3, bs 1048576

[root@wdc-esx27:/vmfs/volumes/5ad424bc-b9083175-d3e4-246e96549690/SB-OEL-Oracle-EZT]

As per the KB Determining if a VMDK is zeroedthick or eagerzeroedthick (1011170) , review the output. If the output has tbz at zero, the VMDK is eagerzeroedthick. Otherwise it is zeroedthick. TBZ represents the number of blocks in the disk remaining To Be Zeroed.
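The KB rule is easy to apply mechanically. Below is a minimal Python sketch that pulls the `tbz` counter out of `vmkfstools -D` output and applies the "tbz at zero means eagerzeroedthick" test; the function names are hypothetical, and the parsing assumes the field appears as `tbz <number>`, as it does in the output above.

```python
import re


def tbz_blocks(vmkfstools_dump):
    """Return the 'tbz' (To Be Zeroed) block count from `vmkfstools -D` output."""
    match = re.search(r"\btbz\s+(\d+)", vmkfstools_dump)
    if match is None:
        # For example an NFS backed vmdk, where the dump is not available.
        raise ValueError("no tbz field found in the output")
    return int(match.group(1))


def is_eager_zeroed(vmkfstools_dump):
    """Per the KB: tbz at zero means the disk is eagerzeroedthick."""
    return tbz_blocks(vmkfstools_dump) == 0


# Line copied from the VMFS6 output above.
sample = "len 21474836480, nb 20480 tbz 0, cow 0, newSinceEpoch 20480, zla 3, bs 1048576"
print(is_eager_zeroed(sample))
```

This only works for VMFS backed disks; for the NFS backed vmdk there is no `tbz` field to parse, so the helper raises an error instead of guessing.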

Power off VM ‘SB-OEL-Oracle-EZT’ and remove the multi-writer flag from both the NFS and VMFS6 backed vmdk’s.

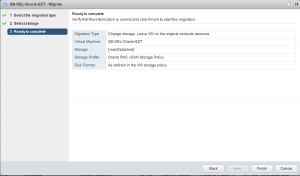

Now let’s Storage vMotion VM ‘SB-OEL-Oracle-EZT’ to the vsanDatastore.

After the Storage vMotion operation completes, let’s check whether the vmdk’s on vSAN are compliant with the Oracle RAC storage policy. Yes, they are fully compliant.

Now let’s try setting the multi-writer flag on the vmdk that was Storage vMotioned from the NFS VAAI datastore.

We get the same error.

Now let’s try setting the multi-writer flag on the vmdk that was Storage vMotioned from the local VMFS6 datastore.

We get the same error.

4. Let’s look at the disk characteristics of both vmdk’s on the vsanDatastore.

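vmdk whose source is the local VMFS6 datastore (its ddb.uuid and ddb.longContentID match the source descriptor SB-OEL-Oracle-EZT.vmdk shown earlier)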

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/ccecd45a-35da-f745-90c3-246e96549690] cat SB-OEL-Oracle-EZT_3.vmdk

# Disk DescriptorFile

version=4

encoding="UTF-8"

CID=fffffffe

parentCID=ffffffff

isNativeSnapshot="no"

createType="vmfs"

# Extent description

RW 41943040 VMFS "vsan://dbf1d45a-3a30-7809-f6be-246e96549690"

# The Disk Data Base

#DDB

ddb.adapterType = "lsilogic"

ddb.deletable = "true"

ddb.geometry.cylinders = "2610"

ddb.geometry.heads = "255"

ddb.geometry.sectors = "63"

ddb.longContentID = "3b9a56edc5b747487a787383fffffffe"

ddb.thinProvisioned = "1"

ddb.uuid = "60 00 C2 91 56 be a7 50-9c 87 2f 62 2c c7 72 1a"

ddb.virtualHWVersion = "13"

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/ccecd45a-35da-f745-90c3-246e96549690]

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/ccecd45a-35da-f745-90c3-246e96549690] cmmds-tool find -u dbf1d45a-3a30-7809-f6be-246e96549690 -f json

{

“entries”:

[

{

“uuid”: “dbf1d45a-3a30-7809-f6be-246e96549690”,

“owner”: “598273c6-2b47-6652-7ba4-246e96549690”,

“health”: “Healthy”,

“revision”: “2”,

“type”: “DOM_OBJECT”,

“flag”: “2”,

“minHostVersion”: “3”,

“md5sum”: “089be2babc59616bccdd489ac16ddc9f”,

“valueLen”: “1448”,

“content”: {“type”: “Configuration”, “attributes”: {“CSN”: 2, “addressSpace”: 21474836480, “scrubStartTime”: 1523904987849208, “objectVersion”: 5, “highestDiskVersion”: 5, “muxGroup”: 651492071447096868, “groupUuid”: “ccecd45a-35da-f745-90c3-246e96549690”, “compositeUuid”: “dbf1d45a-3a30-7809-f6be-246e96549690”}, “child-1”: {“type”: “RAID_1”, “attributes”: {“scope”: 3}, “child-1”: {“type”: “Component”, “attributes”: {“capacity”: 21474836480, “addressSpace”: 21474836480, “componentState”: 5, “componentStateTS”: 1523904987, “faultDomainId”: “598273c6-2b47-6652-7ba4-246e96549690”, “lastScrubbedOffset”: 21474836480, “subFaultDomainId”: “598273c6-2b47-6652-7ba4-246e96549690”}, “componentUuid”: “dbf1d45a-03db-2c0b-360c-246e96549690“, “diskUuid”: “524f367b-f676-df95-13b9-976900325631”}, “child-2”: {“type”: “Component”, “attributes”: {“capacity”: 21474836480, “addressSpace”: 21474836480, “componentState”: 5, “componentStateTS”: 1523904987, “faultDomainId”: “59823277-24b2-1a08-e42c-246e96537d48”, “subFaultDomainId”: “59823277-24b2-1a08-e42c-246e96537d48”}, “componentUuid”: “dbf1d45a-d666-2e0b-6eca-246e96549690”, “diskUuid”: “52061b7f-d719-75f9-a2ca-1dc7531a91ea”}}, “child-2”: {“type”: “Witness”, “attributes”: {“componentState”: 5, “componentStateTS”: 1523904987, “isWitness”: 1, “faultDomainId”: “598231ef-dc82-ab11-6414-246e965377b8”, “subFaultDomainId”: “598231ef-dc82-ab11-6414-246e965377b8”}, “componentUuid”: “dbf1d45a-dd1f-2f0b-9529-246e96549690”, “diskUuid”: “52487414-d8da-c8ae-074d-b4c5b600f2ef”}},

“errorStr”: “(null)”

}

,{

“uuid”: “dbf1d45a-3a30-7809-f6be-246e96549690”,

“owner”: “598273c6-2b47-6652-7ba4-246e96549690”,

“health”: “Healthy”,

“revision”: “1”,

“type”: “POLICY”,

“flag”: “2”,

“minHostVersion”: “3”,

“md5sum”: “6080a58bed84432dc8bda95a5f68bf34”,

“valueLen”: “424”,

“content”: {“stripeWidth”: 1, “cacheReservation”: 0, “proportionalCapacity”: 100, “hostFailuresToTolerate”: 1, “forceProvisioning”: 0, “spbmProfileId”: “59e12967-8de3-45aa-b623-e80dbba46b5f”, “spbmProfileGenerationNumber”: 0, “CSN”: 2, “spbmProfileName”: “Oracle RAC vSAN Storage Policy”},

“errorStr”: “(null)”

}

,{

“uuid”: “dbf1d45a-3a30-7809-f6be-246e96549690”,

“owner”: “598273c6-2b47-6652-7ba4-246e96549690”,

“health”: “Healthy”,

“revision”: “2”,

“type”: “CONFIG_STATUS”,

“flag”: “2”,

“minHostVersion”: “3”,

“md5sum”: “d27268a3cc8409117485c5e9f6218aff”,

“valueLen”: “64”,

“content”: {“state”: 7, “CSN”: 2},

“errorStr”: “(null)”

}

]

}

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/ccecd45a-35da-f745-90c3-246e96549690]

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/ccecd45a-35da-f745-90c3-246e96549690] cmmds-tool find -u dbf1d45a-03db-2c0b-360c-246e96549690 -f json

{

"entries":

[

{

"uuid": "dbf1d45a-03db-2c0b-360c-246e96549690",

"owner": "598273c6-2b47-6652-7ba4-246e96549690",

"health": "Healthy",

"revision": "1",

"type": "LSOM_OBJECT",

"flag": "2",

"minHostVersion": "3",

"md5sum": "d13f4dd9537ab330313ec224a775b512",

"valueLen": "88",

"content": {"diskUuid": "524f367b-f676-df95-13b9-976900325631", "compositeUuid": "dbf1d45a-3a30-7809-f6be-246e96549690", "capacityUsed": 21915238400, "physCapacityUsed": 4194304, "dedupUniquenessMetric": 0},

"errorStr": "(null)"

}

]

}

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/ccecd45a-35da-f745-90c3-246e96549690]

capacityUsed = 21915238400 ~ 20.41G

physCapacityUsed = 4194304 = 4M

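vmdk whose source is the NFS VAAI datastore (its ddb.uuid and ddb.longContentID match the source descriptor SB-OEL-Oracle-EZT_2.vmdk shown earlier)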

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/ccecd45a-35da-f745-90c3-246e96549690] cat SB-OEL-Oracle-EZT_2.vmdk

# Disk DescriptorFile

version=4

encoding="UTF-8"

CID=fffffffe

parentCID=ffffffff

isNativeSnapshot="no"

createType="vmfs"

# Extent description

RW 41943040 VMFS "vsan://23f1d45a-51b7-6f32-6294-246e96549690"

# The Disk Data Base

#DDB

ddb.adapterType = "lsilogic"

ddb.deletable = "true"

ddb.geometry.cylinders = "2610"

ddb.geometry.heads = "255"

ddb.geometry.sectors = "63"

ddb.longContentID = "b8641681ea637c9ed9f904b3fffffffe"

ddb.thinProvisioned = "1"

ddb.uuid = "60 00 C2 9f e3 02 57 4d-3d 35 31 7f 37 c0 67 33"

ddb.virtualHWVersion = "13"

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/ccecd45a-35da-f745-90c3-246e96549690]

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/ccecd45a-35da-f745-90c3-246e96549690] cmmds-tool find -u 23f1d45a-51b7-6f32-6294-246e96549690 -f json

{

“entries”:

[

{

“uuid”: “23f1d45a-51b7-6f32-6294-246e96549690”,

“owner”: “598273c6-2b47-6652-7ba4-246e96549690”,

“health”: “Healthy”,

“revision”: “10”,

“type”: “DOM_OBJECT”,

“flag”: “2”,

“minHostVersion”: “3”,

“md5sum”: “072d1921e141f3e47311af1e7f8d4cd2”,

“valueLen”: “1528”,

“content”: {“type”: “Configuration”, “attributes”: {“CSN”: 7, “SCSN”: 3, “addressSpace”: 21474836480, “scrubStartTime”: 1523904803799538, “objectVersion”: 5, “highestDiskVersion”: 5, “muxGroup”: 291204101257457188, “groupUuid”: “ccecd45a-35da-f745-90c3-246e96549690”, “compositeUuid”: “23f1d45a-51b7-6f32-6294-246e96549690”}, “child-1”: {“type”: “RAID_1”, “attributes”: {“scope”: 3}, “child-1”: {“type”: “Component”, “attributes”: {“capacity”: 21474836480, “addressSpace”: 21474836480, “componentState”: 5, “componentStateTS”: 1523904803, “faultDomainId”: “598273c6-2b47-6652-7ba4-246e96549690”, “lastScrubbedOffset”: 21474836480, “subFaultDomainId”: “598273c6-2b47-6652-7ba4-246e96549690”}, “componentUuid”: “23f1d45a-e450-ca32-66cb-246e96549690“, “diskUuid”: “524f367b-f676-df95-13b9-976900325631”}, “child-2”: {“type”: “Component”, “attributes”: {“capacity”: 21474836480, “addressSpace”: 21474836480, “componentState”: 5, “componentStateTS”: 1524025007, “faultDomainId”: “59822d81-cbb0-2ae2-0ed9-246e96537848”, “lastScrubbedOffset”: 21474836480, “subFaultDomainId”: “59822d81-cbb0-2ae2-0ed9-246e96537848”}, “componentUuid”: “aec6d65a-de65-779c-b667-246e96549690”, “diskUuid”: “5286a2a5-0bba-0677-dc22-944cf7ff2daa”}}, “child-2”: {“type”: “Witness”, “attributes”: {“componentState”: 5, “componentStateTS”: 1524025024, “isWitness”: 1, “faultDomainId”: “59823277-24b2-1a08-e42c-246e96537d48”, “subFaultDomainId”: “59823277-24b2-1a08-e42c-246e96537d48”}, “componentUuid”: “c0c6d65a-2f6f-1663-d1b8-246e96549690”, “diskUuid”: “52061b7f-d719-75f9-a2ca-1dc7531a91ea”}},

“errorStr”: “(null)”

}

,{

“uuid”: “23f1d45a-51b7-6f32-6294-246e96549690”,

“owner”: “598273c6-2b47-6652-7ba4-246e96549690”,

“health”: “Healthy”,

“revision”: “6”,

“type”: “POLICY”,

“flag”: “2”,

“minHostVersion”: “3”,

“md5sum”: “aac6f4d6bbcc2473f8e10fc072c94446”,

“valueLen”: “456”,

“content”: {“stripeWidth”: 1, “cacheReservation”: 0, “proportionalCapacity”: 100, “hostFailuresToTolerate”: 1, “forceProvisioning”: 0, “spbmProfileId”: “59e12967-8de3-45aa-b623-e80dbba46b5f”, “spbmProfileGenerationNumber”: 0, “CSN”: 7, “SCSN”: 3, “spbmProfileName”: “Oracle RAC vSAN Storage Policy”},

“errorStr”: “(null)”

}

,{

“uuid”: “23f1d45a-51b7-6f32-6294-246e96549690”,

“owner”: “598273c6-2b47-6652-7ba4-246e96549690”,

“health”: “Healthy”,

“revision”: “8”,

“type”: “CONFIG_STATUS”,

“flag”: “2”,

“minHostVersion”: “3”,

“md5sum”: “eb9c369b772e1296a7609f41fbd2a00f”,

“valueLen”: “96”,

“content”: {“state”: 7, “CSN”: 7, “SCSN”: 3},

“errorStr”: “(null)”

}

,{

“uuid”: “23f1d45a-51b7-6f32-6294-246e96549690”,

“owner”: “00000000-0000-0000-0000-000000000000”,

“health”: “Unhealthy”,

“revision”: “3”,

“type”: “DISCARDED_COMPONENTS”,

“flag”: “0”,

“minHostVersion”: “0”,

“md5sum”: “c34b5356c5f678574a77a5a945d3b180”,

“valueLen”: “64”,

“content”: “[1524025024, [ ”23f1d45a-ddb9-cb32-d7c1-246e96549690”], [ ”52487414-d8da-c8ae-074d-b4c5b600f2ef”]]”,

“errorStr”: “(null)”

}

]

}

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/ccecd45a-35da-f745-90c3-246e96549690]

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/ccecd45a-35da-f745-90c3-246e96549690] cmmds-tool find -u aec6d65a-de65-779c-b667-246e96549690 -f json

{

"entries":

[

{

"uuid": "aec6d65a-de65-779c-b667-246e96549690",

"owner": "59822d81-cbb0-2ae2-0ed9-246e96537848",

"health": "Healthy",

"revision": "1",

"type": "LSOM_OBJECT",

"flag": "2",

"minHostVersion": "3",

"md5sum": "bc0fcb8324507a47b9ce2b39fda21d6f",

"valueLen": "88",

"content": {"diskUuid": "5286a2a5-0bba-0677-dc22-944cf7ff2daa", "compositeUuid": "23f1d45a-51b7-6f32-6294-246e96549690", "capacityUsed": 21915238400, "physCapacityUsed": 4194304, "dedupUniquenessMetric": 0},

"errorStr": "(null)"

}

]

}

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/ccecd45a-35da-f745-90c3-246e96549690]

capacityUsed = 21915238400 ~ 20.41G

physCapacityUsed = 4194304 = 4M

Both vmdk’s, one from the NFS datastore and one from the VMFS6 datastore, show a difference between the space allocated and the actual capacity used after Storage vMotion to vSAN.
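One detail in the descriptor listings is worth pulling out: both descriptors on vSAN carry `ddb.thinProvisioned = "1"`, an entry that is absent from the two source descriptors. The Python sketch below is a rough illustration of how a descriptor could be checked for that entry and how the extent size in sectors maps to bytes. The function names are ours, the sample is a trimmed copy of one of the listings written with plain ASCII quotes, and how vSAN itself interprets the flag is not something this post establishes.

```python
import re

SECTOR_BYTES = 512  # VMDK extent sizes are expressed in 512-byte sectors


def parse_descriptor(text):
    """Pull the extent size, backing object and ddb.* entries out of a VMDK descriptor."""
    info = {"size_bytes": None, "backing": None, "ddb": {}}
    for raw in text.splitlines():
        line = raw.strip()
        extent = re.match(r'(RW|RDONLY|NOACCESS)\s+(\d+)\s+\S+\s+"([^"]*)"', line)
        if extent:
            info["size_bytes"] = int(extent.group(2)) * SECTOR_BYTES
            info["backing"] = extent.group(3)
            continue
        entry = re.match(r'(ddb\.[\w.]+)\s*=\s*"([^"]*)"', line)
        if entry:
            info["ddb"][entry.group(1)] = entry.group(2)
    return info


def marked_thin(info):
    """True when ddb.thinProvisioned is set to 1 in the descriptor."""
    return info["ddb"].get("ddb.thinProvisioned") == "1"


# Trimmed copy of a migrated descriptor (illustrative sample, ASCII quotes assumed).
MIGRATED = '''
RW 41943040 VMFS "vsan://dbf1d45a-3a30-7809-f6be-246e96549690"
ddb.adapterType = "lsilogic"
ddb.thinProvisioned = "1"
'''

info = parse_descriptor(MIGRATED)
print(info["size_bytes"] // 1024 ** 3, "GiB, marked thin:", marked_thin(info))
```

The extent of 41943040 sectors works out to 21474836480 bytes, which matches the `addressSpace` reported by `cmmds-tool` for the same object.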

5. Using the Web Client or the ‘vmkfstools’ command, try creating a new 12G eager zero thick (EZT) vmdk on VM ‘pvrdmarac1’. I used the ‘vmkfstools’ command.

[root@wdc-esx27:~] vmkfstools -c 12G -d eagerzeroedthick -W vsan /vmfs/volumes/vsanDatastore/pvrdmarac1/test.vmdk

Creating disk '/vmfs/volumes/vsanDatastore/pvrdmarac1/test.vmdk' and zeroing it out...

Create: 100% done.

[root@wdc-esx27:~]

Check the disk characteristics

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690] cat test.vmdk

# Disk DescriptorFile

version=4

encoding="UTF-8"

CID=fffffffe

parentCID=ffffffff

isNativeSnapshot="no"

createType="vmfs"

# Extent description

RW 25165824 VMFS "vsan://6befd05a-2e1b-b0a1-10b2-246e96549690"

# The Disk Data Base

#DDB

ddb.adapterType = "lsilogic"

ddb.geometry.cylinders = "1566"

ddb.geometry.heads = "255"

ddb.geometry.sectors = "63"

ddb.longContentID = "637bc7ed753c7e966f213549fffffffe"

ddb.uuid = "60 00 C2 91 f3 58 49 ce-30 8f 43 03 9d d7 7d cd"

ddb.virtualHWVersion = "13"

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690]

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690] cmmds-tool find -u 6befd05a-2e1b-b0a1-10b2-246e96549690 -f json

{

“entries”:

[

{

“uuid”: “6befd05a-2e1b-b0a1-10b2-246e96549690”,

“owner”: “598273c6-2b47-6652-7ba4-246e96549690”,

“health”: “Healthy”,

“revision”: “2”,

“type”: “DOM_OBJECT”,

“flag”: “2”,

“minHostVersion”: “3”,

“md5sum”: “1102aa1a4698aeaa08a7f093c25dde8d”,

“valueLen”: “1448”,

“content”: {“type”: “Configuration”, “attributes”: {“CSN”: 2, “addressSpace”: 12884901888, “scrubStartTime”: 1523642219640557, “objectVersion”: 5, “highestDiskVersion”: 5, “muxGroup”: 507376883371240996, “groupUuid”: “cde3cf5a-2a05-2feb-f199-246e96549690”, “compositeUuid”: “6befd05a-2e1b-b0a1-10b2-246e96549690”}, “child-1”: {“type”: “RAID_1”, “attributes”: {“scope”: 3}, “child-1”: {“type”: “Component”, “attributes”: {“capacity”: 12884901888, “addressSpace”: 12884901888, “componentState”: 5, “componentStateTS”: 1523642219, “faultDomainId”: “59822d81-cbb0-2ae2-0ed9-246e96537848”, “lastScrubbedOffset”: 12884901888, “subFaultDomainId”: “59822d81-cbb0-2ae2-0ed9-246e96537848”}, “componentUuid”: “6befd05a-63f7-01a2-0ba9-246e96549690“, “diskUuid”: “52aecdd7-8b13-d363-969e-5bd7f888dc6f”}, “child-2”: {“type”: “Component”, “attributes”: {“capacity”: 12884901888, “addressSpace”: 12884901888, “componentState”: 5, “componentStateTS”: 1523642219, “faultDomainId”: “59823277-24b2-1a08-e42c-246e96537d48”, “subFaultDomainId”: “59823277-24b2-1a08-e42c-246e96537d48”}, “componentUuid”: “6befd05a-b891-03a2-b152-246e96549690”, “diskUuid”: “522d223d-58fb-e969-cc77-7f3a46a8ad98”}}, “child-2”: {“type”: “Witness”, “attributes”: {“componentState”: 5, “componentStateTS”: 1523642219, “isWitness”: 1, “faultDomainId”: “598273c6-2b47-6652-7ba4-246e96549690”, “subFaultDomainId”: “598273c6-2b47-6652-7ba4-246e96549690”}, “componentUuid”: “6befd05a-b143-04a2-6522-246e96549690”, “diskUuid”: “524062e5-0cc0-cd78-bfbf-988518d18bcc”}},

“errorStr”: “(null)”

}

,{

“uuid”: “6befd05a-2e1b-b0a1-10b2-246e96549690”,

“owner”: “598273c6-2b47-6652-7ba4-246e96549690”,

“health”: “Healthy”,

“revision”: “1”,

“type”: “POLICY”,

“flag”: “2”,

“minHostVersion”: “3”,

“md5sum”: “63b483f9933980da115cf8b9d750d58b”,

“valueLen”: “120”,

“content”: {“proportionalCapacity”: 100, “hostFailuresToTolerate”: 1, “CSN”: 2},

“errorStr”: “(null)”

}

,{

“uuid”: “6befd05a-2e1b-b0a1-10b2-246e96549690”,

“owner”: “598273c6-2b47-6652-7ba4-246e96549690”,

“health”: “Healthy”,

“revision”: “2”,

“type”: “CONFIG_STATUS”,

“flag”: “2”,

“minHostVersion”: “3”,

“md5sum”: “d27268a3cc8409117485c5e9f6218aff”,

“valueLen”: “64”,

“content”: {“state”: 7, “CSN”: 2},

“errorStr”: “(null)”

}

]

}

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690]

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690] cmmds-tool find -u 6befd05a-b891-03a2-b152-246e96549690 -f json

{

"entries":

[

{

"uuid": "6befd05a-b891-03a2-b152-246e96549690",

"owner": "59823277-24b2-1a08-e42c-246e96537d48",

"health": "Healthy",

"revision": "1",

"type": "LSOM_OBJECT",

"flag": "2",

"minHostVersion": "3",

"md5sum": "f6860eeb0f09cb56a6172f504a24ed41",

"valueLen": "88",

"content": {"diskUuid": "522d223d-58fb-e969-cc77-7f3a46a8ad98", "compositeUuid": "6befd05a-2e1b-b0a1-10b2-246e96549690", "capacityUsed": 13153337344, "physCapacityUsed": 4194304, "dedupUniquenessMetric": 0},

"errorStr": "(null)"

}

]

}

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690]

capacityUsed = 13153337344 ~ 12.25 G

physCapacityUsed = 4194304 = 4M

Even though the size allocated and the size actually used differ, we were able to create the vmdk as EZT with MWF.

Now zero the blocks

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690] vmkfstools -w /vmfs/volumes/vsanDatastore/pvrdmarac1/test.vmdk

All data on '/vmfs/volumes/vsanDatastore/pvrdmarac1/test.vmdk' will be overwritten with zeros.

vmfsDisk: 1, rdmDisk: 0, blockSize: 1048576

Zeroing: 100% done.

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690]

Run the disk characteristics check again.

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690] cmmds-tool find -u 6befd05a-b891-03a2-b152-246e96549690 -f json

{

"entries":

[

{

"uuid": "6befd05a-b891-03a2-b152-246e96549690",

"owner": "59823277-24b2-1a08-e42c-246e96537d48",

"health": "Healthy",

"revision": "52",

"type": "LSOM_OBJECT",

"flag": "2",

"minHostVersion": "3",

"md5sum": "d0149fba035b7fb321d7bf09bb74ac9c",

"valueLen": "88",

"content": {"diskUuid": "522d223d-58fb-e969-cc77-7f3a46a8ad98", "compositeUuid": "6befd05a-2e1b-b0a1-10b2-246e96549690", "capacityUsed": 13153337344, "physCapacityUsed": 12889096192, "dedupUniquenessMetric": 1},

"errorStr": "(null)"

}

]

}

[root@wdc-esx27:/vmfs/volumes/vsan:5283c85154c8d44f-d969fa7d157edc33/cde3cf5a-2a05-2feb-f199-246e96549690]

capacityUsed = 13153337344 ~ 12.25G

physCapacityUsed = 12889096192 ~ 12.004G

We have to run the ‘vmkfstools -w’ command to manually zero out the blocks; only then do the capacityUsed and physCapacityUsed numbers correlate.
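The before and after comparison can also be done programmatically. This is a minimal Python sketch, assuming the `cmmds-tool find ... -f json` output for an LSOM_OBJECT parses as valid JSON once it is captured with plain ASCII quotes; the helper names and the trimmed sample documents are ours, built only from the two values quoted above.

```python
import json


def lsom_usage(cmmds_output):
    """Return (uuid, capacityUsed, physCapacityUsed) for each LSOM_OBJECT entry."""
    rows = []
    for entry in json.loads(cmmds_output)["entries"]:
        if entry.get("type") == "LSOM_OBJECT":
            content = entry["content"]
            rows.append((entry["uuid"], content["capacityUsed"], content["physCapacityUsed"]))
    return rows


def sample(phys_capacity_used):
    """Build a trimmed LSOM_OBJECT document from the values quoted in this step."""
    return json.dumps({"entries": [{
        "uuid": "6befd05a-b891-03a2-b152-246e96549690",
        "type": "LSOM_OBJECT",
        "content": {"capacityUsed": 13153337344, "physCapacityUsed": phys_capacity_used},
    }]})


for label, doc in (("before -w", sample(4194304)), ("after -w", sample(12889096192))):
    for uuid, reserved, written in lsom_usage(doc):
        print(f"{label}: {uuid} {written / reserved:.1%} of reserved capacity written")
```

Before zeroing, practically none of the reserved capacity has been written; after `vmkfstools -w` the figure is about 98%.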

Research

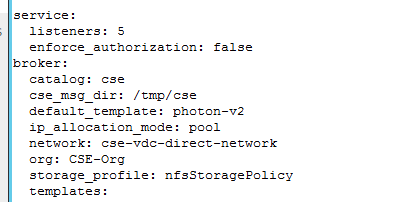

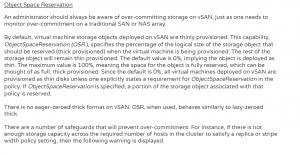

From the vSAN Design and Sizing Guide

Observations

- Based on the vSAN Design and Sizing Guide, specifying OSR=100% means that

  - logically, the space requested will be allocated, but

  - it will NOT eager zero all the blocks, i.e. the format will be more like an LZT (lazy zeroed thick) disk

- We observed that when we Storage vMotioned EZT vmdk’s from the NFS VAAI datastore or the local VMFS6 datastore to the vsanDatastore, the Storage vMotion process was successful but vSAN ignored the EZT setting.

- This explains why enabling the multi-writer setting fails: the vmdk is not an EZT vmdk.

- When we create an EZT vmdk on vSAN, either through the Web Client or using the ‘vmkfstools’ command, the vmdk is created with the logical size specified but is not eager zeroed. The EZT setting is enabled on the vmdk, though.

- We have to run the ‘vmkfstools -w’ command to manually zero out the blocks if needed. The capacityUsed and physCapacityUsed numbers will then correlate.

Solution

Back to the problem at hand.

We are at step 7, where the vmdk/s of VM ‘pvrdmarac1’ are on the new storage, with both

- original non-shared vmdks

- common vmdk/s without multi-writer setting

The next step, step 8, is to add the multi-writer settings back for all common vmdk/s on VM ‘pvrdmarac1’ using the Web Client.

As we found, we were stuck with a bunch of RAC vmdk’s on which we could not enable the MWF setting, because they were not EZT when they landed on vSAN storage.

Option 1

- Clone new vmdk/s from the vSAN vmdk/s using the vmkfstools command with the ‘-d eagerzeroedthick’ option

- vmkfstools -i source.vmdk -d eagerzeroedthick destination.vmdk

- Add the new cloned vmdk’s to the VM ‘pvrdmarac1’

- Remove the old vmdk’s from VM ‘pvrdmarac1’

- Enable MWF on the new added vmdk’s

- Continue from Step 10 as detailed in the high-level migration process section
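When several shared disks need cloning, the Option 1 command can be generated per disk. The Python sketch below only builds and prints the command lines and does not execute anything. It assumes the disks sit in the VM folder used elsewhere in this post; the `_ezt` suffix for the clone names and the helper name `clone_commands` are our own choices, not a VMware convention.

```python
import shlex


def clone_commands(vm_dir, disks, suffix="_ezt"):
    """Build one `vmkfstools -i ... -d eagerzeroedthick ...` command per disk."""
    commands = []
    for disk in disks:
        stem = disk[:-len(".vmdk")] if disk.endswith(".vmdk") else disk
        source = f"{vm_dir}/{disk}"
        destination = f"{vm_dir}/{stem}{suffix}.vmdk"  # hypothetical naming scheme
        parts = ["vmkfstools", "-i", source, "-d", "eagerzeroedthick", destination]
        commands.append(" ".join(shlex.quote(part) for part in parts))
    return commands


vm_dir = "/vmfs/volumes/vsanDatastore/pvrdmarac1"
for command in clone_commands(vm_dir, ["pvrdmarac1_4.vmdk"]):
    print(command)
```

Review the printed commands before running them on the ESXi host, and keep the original vmdk’s until the cloned disks have been added to the VM and verified.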

Option 2

- Create new vmdk/s of required size on vSAN and zero the blocks out manually

- vmkfstools -c xxG -d eagerzeroedthick -W vsan XYZ.vmdk

- vmkfstools -w XYZ.vmdk (this does not seem to be necessary from an MWF perspective, but it is good for performance)

vmkfstools -c 2T -d eagerzeroedthick -W vsan /vmfs/volumes/vsanDatastore/pvrdmarac1/data.vmdk

vmkfstools -w /vmfs/volumes/vsanDatastore/pvrdmarac1/data.vmdk

- Add these new vmdk’s to VM ‘pvrdmarac1’

- Startup VM ‘pvrdmarac1’

- Partition the new added vmdk’s with appropriate offset

- Create ASM disk on the new vmdk’s

oracleasm createdisk DATA_DISK01 /dev/sdp1

oracleasm scandisks

oracleasm listdisks

- Add new vmdk’s to the existing ASM disk groups

alter diskgroup DATA_DG add disk '/dev/oracleasm/disks/DATA_DISK01';

alter diskgroup DATA_DG drop disk DATA_DG_0000;

For CRS and VOTE Disk groups, refer to the Oracle documentation for migrating CRS and VOTE to new ASM disk groups

- Drop old vmdk’s from the ASM disk groups and remove old vmdk’s from VM ‘pvrdmarac1’

- Power down VM ‘pvrdmarac1’

- Enable MWF on the new added vmdk’s for VM ‘pvrdmarac1’

- Continue from Step 10 as detailed in the high-level migration process section

Summary

- Based on the vSAN Design and Sizing Guide, specifying OSR=100% means that

  - logically, the space requested will be allocated, but

  - it will NOT eager zero all the blocks, i.e. the format will be more like an LZT (lazy zeroed thick) disk

- We observed that when we Storage vMotioned EZT vmdk’s from the NFS VAAI datastore, the local VMFS6 datastore or the vsanDatastore to the vsanDatastore, the Storage vMotion process was successful but vSAN ignored the EZT setting.

- This explains why enabling the multi-writer setting fails: the vmdk is not an EZT vmdk.

- When we create an EZT vmdk on vSAN, either through the Web Client or using the ‘vmkfstools’ command, the vmdk is created with the logical size specified but is not eager zeroed. The EZT setting is enabled on the vmdk, though.

- We have to run the ‘vmkfstools -w’ command to manually zero out the blocks if needed. The capacityUsed and physCapacityUsed numbers will then correlate.

With VMware Cloud on AWS as the target for Storage vMotion, the same effect is observed, as the underlying storage is vSAN.

Important Update –

Prior to VMware vSAN 6.7 P01 (ESXi 6.7 Patch Release ESXi670-201912001), Oracle RAC on vSAN requires

- shared VMDKs to be Eager Zero Thick provisioned (OSR=100) & multi-writer attribute enabled

Starting with VMware vSAN 6.7 P01 (ESXi 6.7 Patch Release ESXi670-201912001), Oracle RAC on vSAN does NOT require the shared VMDKs to be Eager Zero Thick provisioned (OSR=100) for multi-writer mode to be enabled

- shared VMDKs can be thin provisioned (OSR=0) & multi-writer attribute enabled

Acknowledgements

A big shout out to Chen Wei, Storage Product Marketing for all his help and advice with this investigation.

All Oracle on VMware SDDC / VMware Cloud on AWS white papers, including licensing guides, best practices, RAC deployment guides and the workload characterization guide, can be found at Oracle on VMware Collateral – One Stop Shop